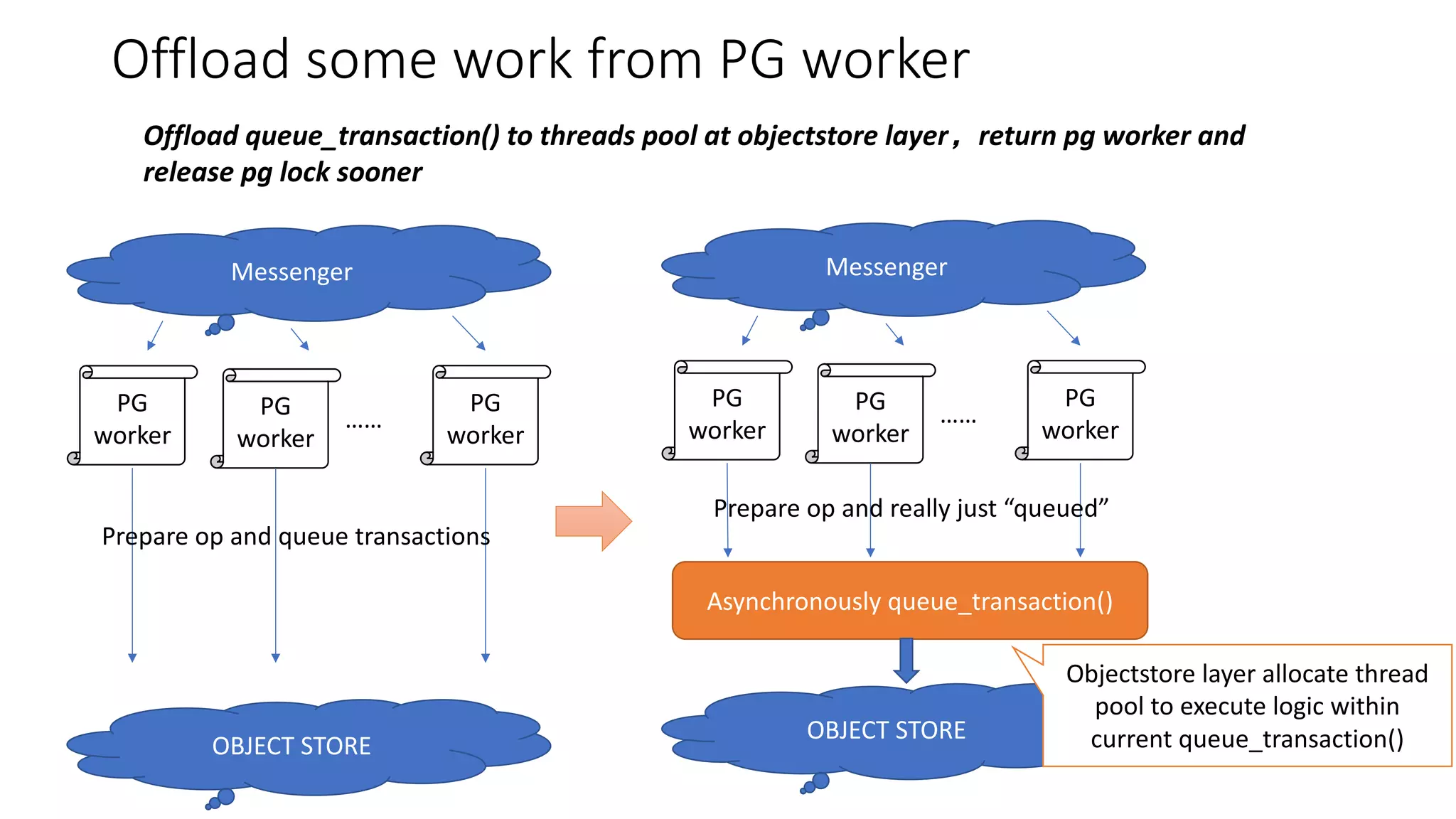

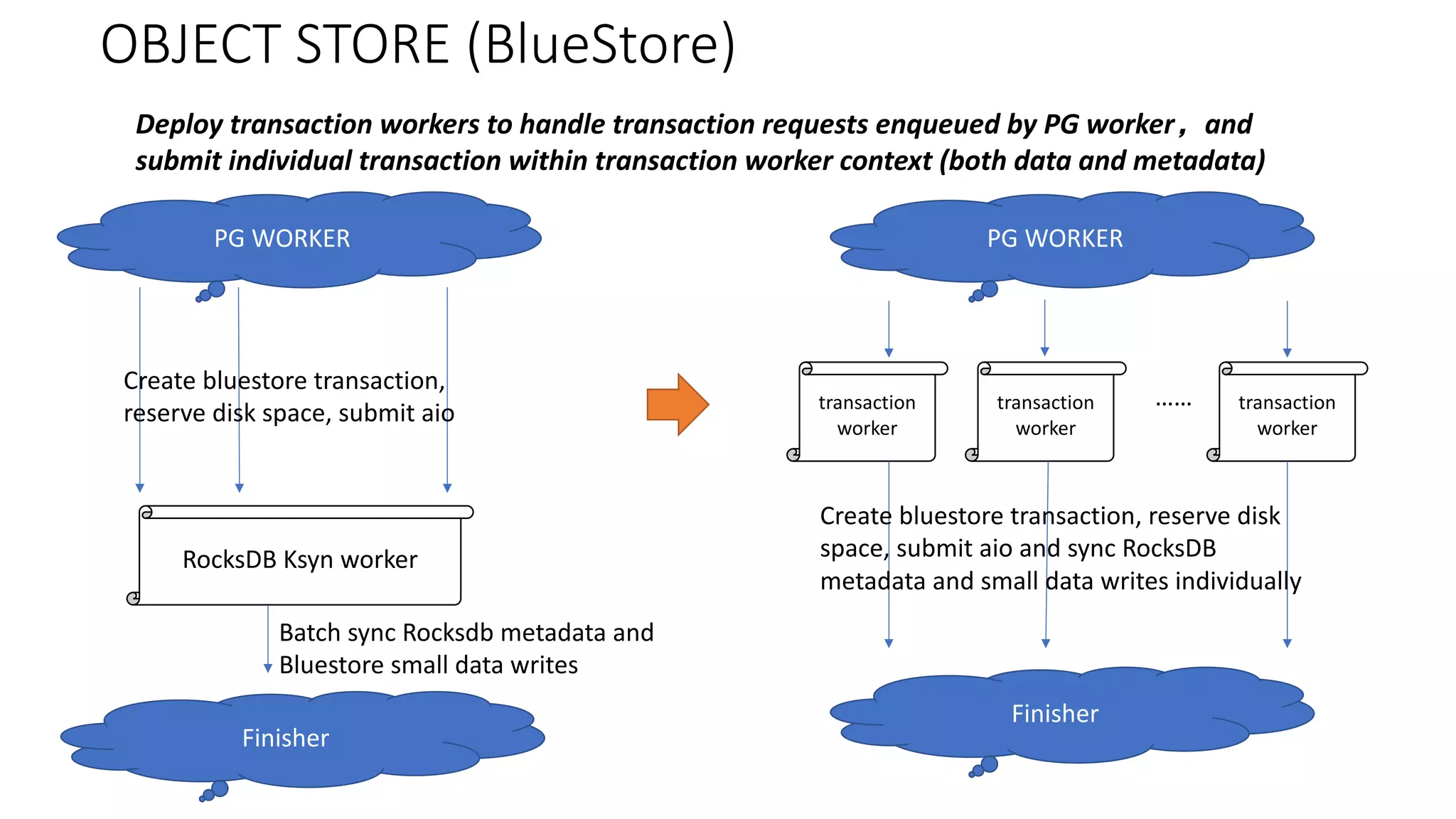

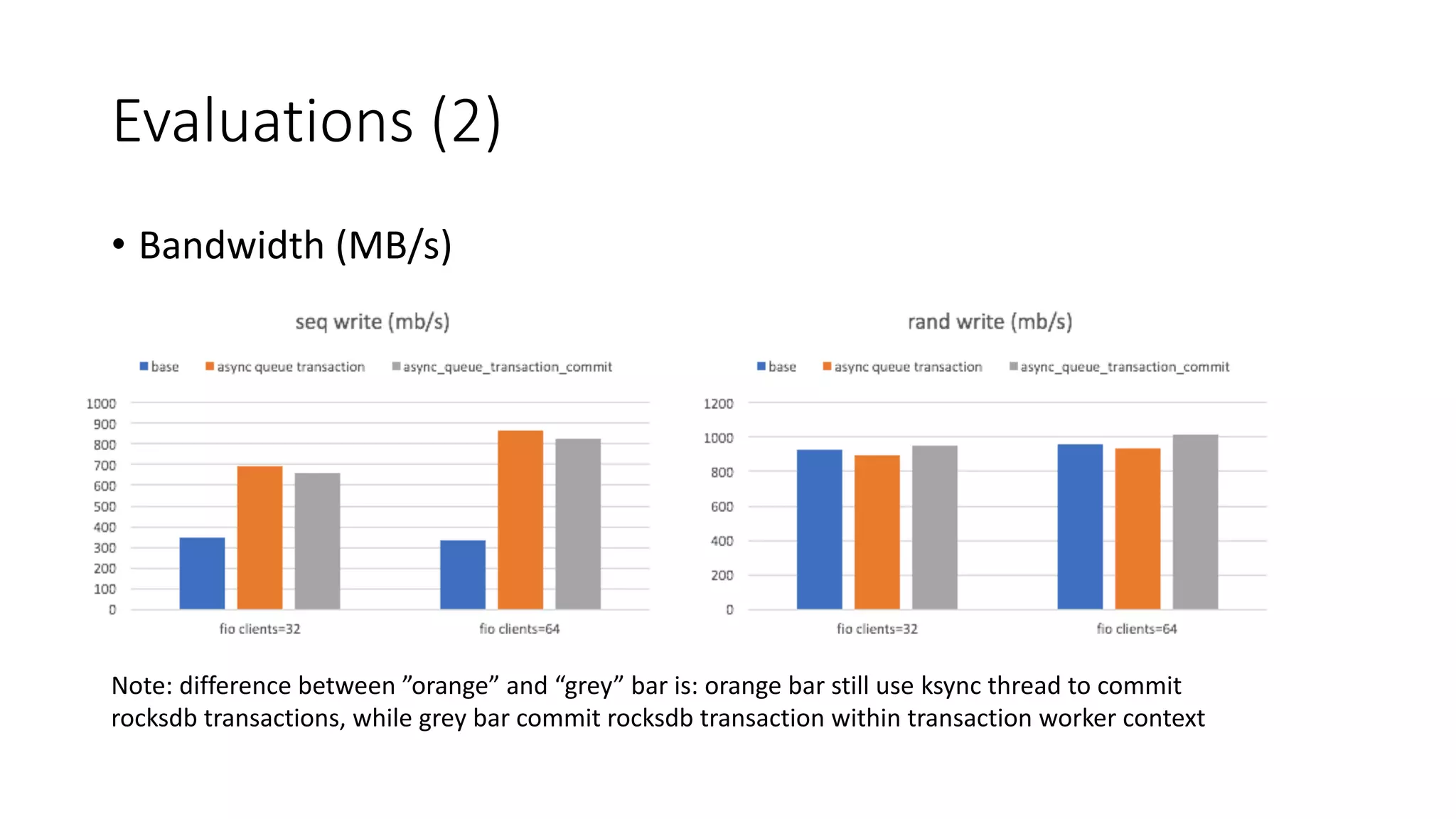

The document proposes offloading heavy tasks from the PG worker threads in Alibaba Group's Ceph-based system to separate thread pools in order to improve performance. The proposal delegates certain operations inside do_op() to other threads. This shortens the time pg_lock is held, which aims to reduce contention on pg_lock and to lower the latency of individual I/Os. The document also asks the Ceph community for feedback on these proposed optimizations.