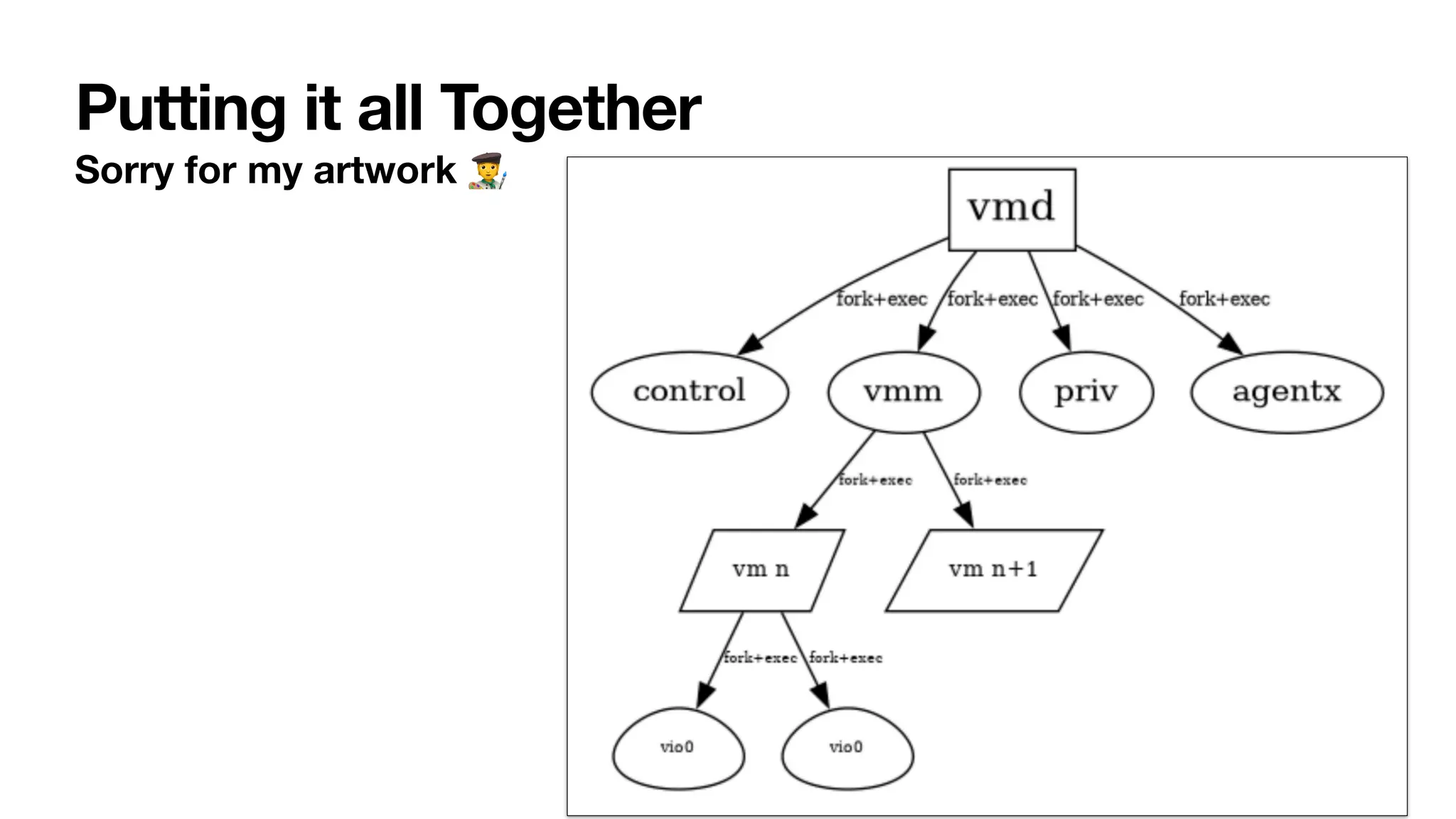

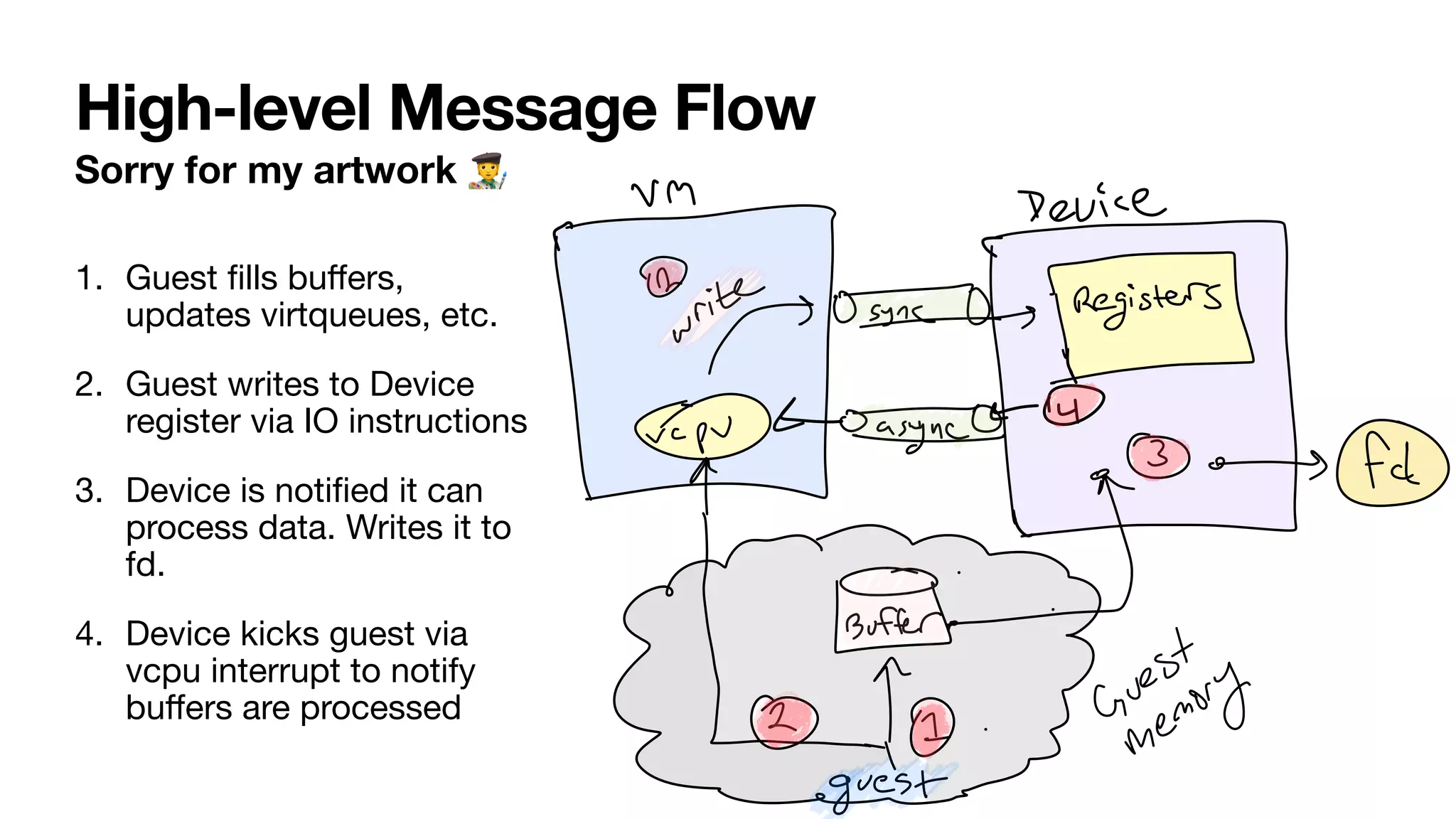

This document summarizes a talk given by Dave Voutila at AsiaBSDCon 2023 about hardening emulated devices in OpenBSD's vmd hypervisor. The talk explains that vmd currently uses a single-process model in which memory is shared between the hypervisor and its VMs, which presents security risks. It proposes moving to a multi-process model in which each VM is launched via fork and exec, isolating it and discarding leftover state from the parent process. Isolating device emulation this way would make guest-to-host escapes harder and limit information leaks between VMs. Initial benchmarks show little difference in disk I/O throughput and latency, while network results vary by guest. Planned future work includes further isolating guest memory and expanding device support.
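The fork-then-exec idea can be illustrated with a minimal C sketch. This is not vmd's actual code: the helper name `spawn_isolated` is hypothetical, and a real hypervisor would also need to hand the child a communication channel and close unrelated file descriptors. The sketch only shows the core property: after `fork(2)` the child still holds a copy of the parent's memory, and `execv(2)` replaces that image so nothing left over in the parent's address space survives into the new program.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/*
 * Hypothetical helper, for illustration only (not taken from vmd).
 * Runs `path` in a child that is created with fork(2) and then replaced
 * with execv(2). Returns the child's exit status, or -1 on error.
 */
int
spawn_isolated(const char *path, char *const argv[])
{
	pid_t pid;
	int status;

	assert(path != NULL && argv != NULL);

	pid = fork();
	if (pid == -1)
		return -1;

	if (pid == 0) {
		/*
		 * Child: at this point it still has a copy of the parent's
		 * memory. execv(2) discards that copy and starts the new
		 * program with a fresh address space.
		 */
		execv(path, argv);
		/* Only reached if execv(2) failed. */
		_exit(127);
	}

	/* Parent: wait for the child, retrying if interrupted by a signal. */
	while (waitpid(pid, &status, 0) == -1) {
		if (errno != EINTR)
			return -1;
	}

	return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

For example, calling `spawn_isolated("/bin/sh", argv)` with `argv` set to `{"sh", "-c", "exit 7", NULL}` returns 7. File descriptors are inherited across `execv(2)` unless they are marked close-on-exec, so a real design would have to treat them as leftover state too.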

![vioblk(4) benchmark](https://image.slidesharecdn.com/asiabsdcon2023-hardeningvmddevices-230405121753-1ed857b5/75/AsiaBSDCon2023-Hardening-Emulated-Devices-in-OpenBSD-s-vmd-8-Hypervisor-19-2048.jpg)

vioblk(4) benchmark: fio(1) [ports] performing 8 KiB random writes to 2GiB file for 5 minutes

- Very little difference in throughput
- Very little difference in latency
- Long-tail slightly worse on Alpine??

| Host Version | Guest Version | Throughput (MiB/s) | clat avg (usec) | clat stdev (usec) | 99.90th % (usec) | 99.95th % (usec) |
|---|---|---|---|---|---|---|
| OpenBSD-current | OpenBSD-current | 90.3 | 89.9 | 8730 | 338 | 379 |
| Prototype | OpenBSD-current | 100 | 76.4 | 7220 | 388 | 429 |
| OpenBSD-current | Alpine Linux 3.17 | 131 | 11.2 | 534 | 32 | 38 |
| Prototype | Alpine Linux 3.17 | 132 | 11.7 | 682 | 594 | 685 |

![vio(4) benchmark](https://image.slidesharecdn.com/asiabsdcon2023-hardeningvmddevices-230405121753-1ed857b5/75/AsiaBSDCon2023-Hardening-Emulated-Devices-in-OpenBSD-s-vmd-8-Hypervisor-20-2048.jpg)

vio(4) benchmark: iperf3(1) [ports] with 1 thread run for 30 seconds, alternating TX/RX

- iperf3(1) used with 1 thread in alternating client / server modes
- Observations
  - More consistent throughput in OpenBSD guests
  - Negligible performance decrease for Linux guests (not sure why)

| Host Version | Guest Version | Receiving Bitrate (Mbit/s) | % | Sending Bitrate (Mbit/s) | % |
|---|---|---|---|---|---|
| -current | OpenBSD 7.2 | 0.86 | - | 1.26 | - |
| prototype | OpenBSD 7.2 | 1.22 | 63% | 1.35 | 7% |
| -current | Alpine Linux 3.17 | 1.26 | - | 4.26 | - |
| prototype | Alpine Linux 3.17 | 1.30 | 5% | 3.19 | -25% |