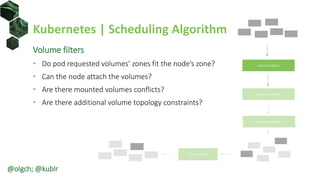

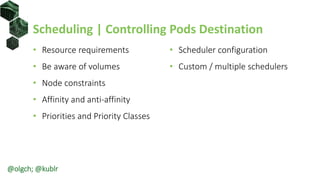

This deck gives an overview of advanced scheduling in Kubernetes: the scheduling algorithm and the controls that determine how pods are placed on nodes. Topics include resource filters, volume constraints, inter-pod affinity, and the configuration of custom schedulers. The use cases cover distributed and co-located pods, as well as strategies for running reliable services on spot nodes.

![Scheduling Controlled | Pod Affinity Terms](https://image.slidesharecdn.com/202004advancedschedulinginkubernetes-201016200901/85/Advanced-Scheduling-in-Kubernetes-39-320.jpg)

• topologyKey – nodes’ label key defining co-location

• labelSelector and namespaces – select group of pods

    <pod affinity term>:
      topologyKey: <topology label key>
      namespaces: [ <namespace>, ... ]
      labelSelector:
        matchLabels:
          <label key>: <label value>
          ...
        matchExpressions:
        - key: <label key>
          operator: In | NotIn | Exists | DoesNotExist
          values: [ <value 1>, ... ]
        ...

@olgch; @kublr
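The `labelSelector` part of a pod affinity term decides which existing pods the term refers to. A minimal Python sketch of that matching logic follows, assuming pod labels are a plain dictionary and the selector is the parsed YAML structure from the slide. The function name `matches_selector` is hypothetical, and this is an illustration of the selector semantics rather than the scheduler's own code.

```python
from typing import Dict


def matches_selector(labels: Dict[str, str], selector: dict) -> bool:
    """Return True if `labels` satisfy every requirement in `selector`."""
    # matchLabels: each key must be present with exactly the given value.
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False

    # matchExpressions: all expressions must hold (logical AND).
    for expr in selector.get("matchExpressions", []):
        key = expr["key"]
        operator = expr["operator"]
        values = expr.get("values", [])
        if operator == "In":
            ok = key in labels and labels[key] in values
        elif operator == "NotIn":
            # A pod that lacks the key also satisfies NotIn.
            ok = labels.get(key) not in values
        elif operator == "Exists":
            ok = key in labels
        elif operator == "DoesNotExist":
            ok = key not in labels
        else:
            raise ValueError(f"unknown operator: {operator}")
        if not ok:
            return False
    return True
```

For example, `matches_selector({"component": "db"}, {"matchLabels": {"component": "db"}})` evaluates to `True`, while the same selector rejects a pod labeled `component: web`.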

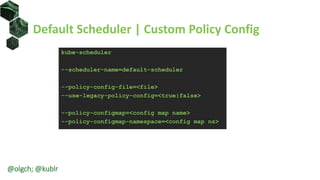

![Default Scheduler | Custom Policy Config](https://image.slidesharecdn.com/202004advancedschedulinginkubernetes-201016200901/85/Advanced-Scheduling-in-Kubernetes-44-320.jpg)

    {
      "kind" : "Policy",
      "apiVersion" : "v1",
      "predicates" : [
        {"name" : "PodFitsHostPorts"},
        ...
        {"name" : "HostName"}
      ],
      "priorities" : [
        {"name" : "LeastRequestedPriority", "weight" : 1},
        ...
        {"name" : "EqualPriority", "weight" : 1}
      ],
      "hardPodAffinitySymmetricWeight" : 10,
      "alwaysCheckAllPredicates" : false
    }
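A policy of this shape lists predicates, which filter out nodes that cannot run the pod, and priorities, which score the remaining nodes using the configured weights. The Python sketch below illustrates that filter-then-score flow. The four functions are simplified stand-ins named after the policy entries, not the scheduler's real implementations, and the dictionary fields (`usedPorts`, `cpuCapacity`, `cpuRequested`, `hostPorts`, `cpuRequest`, `nodeName`) are assumptions made for the example.

```python
from typing import List, Optional


def pod_fits_host_ports(pod: dict, node: dict) -> bool:
    # Stand-in predicate: none of the pod's host ports may already be in use.
    return not (set(pod.get("hostPorts", [])) & set(node.get("usedPorts", [])))


def host_name(pod: dict, node: dict) -> bool:
    # Stand-in predicate: if the pod names a node, only that node passes.
    wanted = pod.get("nodeName")
    return wanted is None or wanted == node["name"]


def least_requested(pod: dict, node: dict) -> float:
    # Stand-in priority: more free CPU after placement gives a higher score (0..10).
    capacity = node["cpuCapacity"]
    free = capacity - node["cpuRequested"] - pod.get("cpuRequest", 0)
    return max(free, 0) * 10 / capacity


def equal_priority(pod: dict, node: dict) -> float:
    # Stand-in priority: every node gets the same score.
    return 1.0


PREDICATES = [pod_fits_host_ports, host_name]
PRIORITIES = [(least_requested, 1), (equal_priority, 1)]  # (function, weight)


def schedule(pod: dict, nodes: List[dict]) -> Optional[str]:
    """Return the name of the best-scoring feasible node, or None."""
    feasible = [n for n in nodes if all(pred(pod, n) for pred in PREDICATES)]
    if not feasible:
        return None
    best = max(
        feasible,
        key=lambda n: sum(weight * prio(pod, n) for prio, weight in PRIORITIES),
    )
    return best["name"]
```

Raising the weight of one priority in `PRIORITIES` makes its score count proportionally more in the sum, which is the role the `weight` field plays in the policy file.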

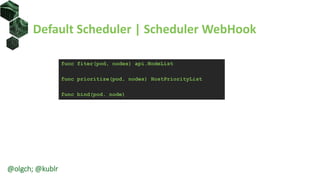

![Default Scheduler | Scheduler WebHook](https://image.slidesharecdn.com/202004advancedschedulinginkubernetes-201016200901/85/Advanced-Scheduling-in-Kubernetes-45-320.jpg)

    {
      "kind" : "Policy",
      "apiVersion" : "v1",
      "predicates" : [...],
      "priorities" : [...],
      "extenders" : [{
        "urlPrefix": "http://127.0.0.1:12346/scheduler",
        "filterVerb": "filter",
        "bindVerb": "bind",
        "prioritizeVerb": "prioritize",
        "weight": 5,
        "enableHttps": false,
        "nodeCacheCapable": false
      }],
      "hardPodAffinitySymmetricWeight" : 10,
      "alwaysCheckAllPredicates" : false
    }
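With an extender configured this way, the scheduler sends HTTP requests to the URL formed from `urlPrefix` and the relevant verb. The Python sketch below shows how those endpoints are derived and what a filter handler might do with the request body. The payload field names (`Pod`, `Nodes`, `FailedNodes`, `Error`) are assumptions about the extender request and response shape and should be checked against the scheduler version in use. The label `example.com/allowed` is a hypothetical filtering rule invented for the example.

```python
import json

EXTENDER = {
    "urlPrefix": "http://127.0.0.1:12346/scheduler",
    "filterVerb": "filter",
    "bindVerb": "bind",
    "prioritizeVerb": "prioritize",
}


def endpoint(extender: dict, verb_field: str) -> str:
    """Join urlPrefix and the configured verb into the URL that gets called."""
    return extender["urlPrefix"].rstrip("/") + "/" + extender[verb_field]


def handle_filter(body: bytes) -> bytes:
    """Keep only nodes that pass a (hypothetical) label check.

    Assumes the request carries full node objects under Nodes.items,
    as expected when nodeCacheCapable is false.
    """
    args = json.loads(body)
    items = (args.get("Nodes") or {}).get("items") or []
    kept, failed = [], {}
    for node in items:
        meta = node["metadata"]
        labels = meta.get("labels") or {}
        if labels.get("example.com/allowed") == "true":  # hypothetical rule
            kept.append(node)
        else:
            failed[meta["name"]] = "node is not labeled example.com/allowed=true"
    result = {"Nodes": {"items": kept}, "FailedNodes": failed, "Error": ""}
    return json.dumps(result).encode()
```

Here `endpoint(EXTENDER, "filterVerb")` yields `http://127.0.0.1:12346/scheduler/filter`. A real extender would wrap `handle_filter` in an HTTP server listening on that address.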

![Use Case | Distributed Pods](https://image.slidesharecdn.com/202004advancedschedulinginkubernetes-201016200901/85/Advanced-Scheduling-in-Kubernetes-51-320.jpg)

    apiVersion: v1
    kind: Pod
    metadata:
      name: db-replica-3
      labels:
        component: db
    spec:
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchExpressions:
              - key: component
                operator: In
                values: [ "db" ]

Diagram: three nodes (Node 1, Node 2, Node 3), each running one of the pods db-replica-1, db-replica-2, and db-replica-3.
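The effect of this required anti-affinity rule can be sketched in Python: a node is ruled out when its topology domain already hosts a pod matching the selector. The function name `anti_affinity_candidates` and the data layout (node name mapped to node labels, and a list of `(node name, pod labels)` pairs) are assumptions made for illustration. The selector is reduced to a single `In` expression, as on the slide.

```python
from typing import Dict, List, Tuple


def anti_affinity_candidates(
    nodes: Dict[str, Dict[str, str]],
    pods: List[Tuple[str, Dict[str, str]]],
    topology_key: str,
    label_key: str,
    label_values: List[str],
) -> List[str]:
    """Return nodes whose topology domain holds no pod matching the selector."""
    blocked = set()
    for node_name, pod_labels in pods:
        if pod_labels.get(label_key) in label_values:
            domain = nodes[node_name].get(topology_key)
            if domain is not None:
                blocked.add(domain)
    return [
        name
        for name, node_labels in nodes.items()
        if node_labels.get(topology_key) not in blocked
    ]


nodes = {
    "node-1": {"kubernetes.io/hostname": "node-1"},
    "node-2": {"kubernetes.io/hostname": "node-2"},
    "node-3": {"kubernetes.io/hostname": "node-3"},
}
running = [
    ("node-1", {"component": "db"}),  # db-replica-1
    ("node-2", {"component": "db"}),  # db-replica-2
]
candidates = anti_affinity_candidates(
    nodes, running, "kubernetes.io/hostname", "component", ["db"]
)
```

With two replicas already placed, `candidates` contains only `node-3`, so the third replica cannot share a host with the other two.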

![Use Case | Co-located Pods](https://image.slidesharecdn.com/202004advancedschedulinginkubernetes-201016200901/85/Advanced-Scheduling-in-Kubernetes-52-320.jpg)

    apiVersion: v1
    kind: Pod
    metadata:
      name: app-replica-1
      labels:
        component: web
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: kubernetes.io/hostname
            labelSelector:
              matchExpressions:
              - key: component
                operator: In
                values: [ "db" ]

Diagram: three nodes (Node 1, Node 2, Node 3) with the pods db-replica-1, db-replica-2, and app-replica-1; app-replica-1 is placed on a node that already runs a db pod.
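Required pod affinity works the other way around: only nodes in a topology domain that already hosts a matching pod remain eligible. The Python sketch below also shows how the choice of `topologyKey` widens or narrows that set. The function name `affinity_candidates`, the data layout, and the zone values are assumptions for illustration. `topology.kubernetes.io/zone` is used as a second topology key for comparison and does not appear on the slide.

```python
from typing import Dict, List, Tuple


def affinity_candidates(
    nodes: Dict[str, Dict[str, str]],
    pods: List[Tuple[str, Dict[str, str]]],
    topology_key: str,
    label_key: str,
    label_values: List[str],
) -> List[str]:
    """Return nodes that share a topology domain with a matching pod."""
    wanted = {
        nodes[node_name].get(topology_key)
        for node_name, pod_labels in pods
        if pod_labels.get(label_key) in label_values
    }
    wanted.discard(None)  # nodes without the topology label define no domain
    return [
        name
        for name, node_labels in nodes.items()
        if node_labels.get(topology_key) in wanted
    ]


cluster = {
    "node-1": {"kubernetes.io/hostname": "node-1", "topology.kubernetes.io/zone": "a"},
    "node-2": {"kubernetes.io/hostname": "node-2", "topology.kubernetes.io/zone": "b"},
    "node-3": {"kubernetes.io/hostname": "node-3", "topology.kubernetes.io/zone": "a"},
}
db_pods = [("node-1", {"component": "db"})]

same_host = affinity_candidates(
    cluster, db_pods, "kubernetes.io/hostname", "component", ["db"]
)
same_zone = affinity_candidates(
    cluster, db_pods, "topology.kubernetes.io/zone", "component", ["db"]
)
```

With `kubernetes.io/hostname` as the topology key, only `node-1` qualifies. With the zone label, `node-1` and `node-3` both qualify because they share zone `a` with the db pod.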