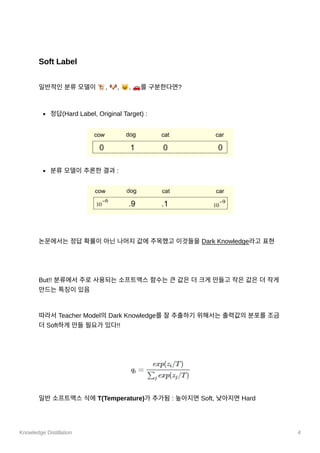

Knowledge distillation transfers knowledge from a teacher network to a student network. It was initially proposed to help deploy complex models on resource-constrained devices by distilling their knowledge into a smaller student model, and it is now commonly used to compress models for more efficient inference or training. The paper by Hinton et al. at the NIPS 2014 Deep Learning Workshop first defined knowledge distillation: the student is trained on the teacher's softened softmax outputs, which carry "dark knowledge" about how the teacher ranks the incorrect classes, in addition to the hard targets. Since then, many methods have been proposed that apply different distillation losses and processes to transfer knowledge from large pretrained models to more compact student models while maintaining high performance.
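The soft-target objective described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names, the `temperature` and `alpha` parameters, and their default values are assumptions chosen for the example, and the `temperature ** 2` scaling follows the common convention for keeping the soft-target gradients on a comparable scale to the hard-target term.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over a list of logits, softened by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, hard_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target loss and a hard-target loss.

    temperature and alpha are illustrative hyperparameters (assumed values).
    """
    # Soft targets: cross-entropy between the softened teacher and student distributions.
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    soft_ce = -sum(t * math.log(s) for t, s in zip(p_teacher, p_student_soft))

    # Hard targets: ordinary cross-entropy against the ground-truth class index.
    p_student = softmax(student_logits)
    hard_ce = -math.log(p_student[hard_label])

    return alpha * temperature ** 2 * soft_ce + (1 - alpha) * hard_ce


teacher = [5.0, 1.0, 0.0]
print(distillation_loss([4.0, 1.5, 0.2], teacher, hard_label=0))
```

A higher temperature flattens both distributions, so the student sees more of the teacher's relative preferences among the non-target classes; `alpha` trades that signal off against the ground-truth label.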

![Knowledge Distillation, slide 8](https://image.slidesharecdn.com/230223knowledgedistillation-230314083211-7ec20c36/85/230223_Knowledge_Distillation-8-320.jpg)

: Learns the Teacher Model's embedding outputs

3. Prediction-layer Distillation

: Soft CE loss on the final layer's outputs

Two-stage distillation

1. General Distillation

: Performs [Transformer Distillation, Embedding layer Distillation] from the Teacher Model

2. Task-Specific Distillation (addresses over-parameterization)

a. Data Augmentation

b. Task-Specific Distillation (Fine Tuning)

→ 4-layer version: 7.5x smaller and 9.4x faster than BERT_base, retaining 96.8% of the performance

→ 6-layer version: 40% fewer parameters, 2x faster, performance maintained

SEED: Self-Supervised Distillation for Visual Representation (ICLR 2021)

→ Introduces KD into contrastive learning

Freezes the pretrained Teacher Model and distills it into a smaller model
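One way to picture distillation from a frozen teacher in a contrastive setting is to have the student match the teacher's similarity distribution over a set of reference embeddings. The sketch below assumes that formulation. The function names, the `queue` of reference embeddings, the temperature values, and the requirement that student and teacher embeddings share a dimension are all assumptions made for illustration, not details taken from the slide.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def similarity_distribution(embedding, anchors, temperature):
    """Softmax over cosine similarities between one embedding and each anchor."""
    z = l2_normalize(embedding)
    sims = [
        sum(a * b for a, b in zip(z, l2_normalize(anchor))) / temperature
        for anchor in anchors
    ]
    peak = max(sims)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in sims]
    total = sum(exps)
    return [e / total for e in exps]


def similarity_distillation_loss(student_emb, teacher_emb, queue,
                                 t_student=0.2, t_teacher=0.1):
    """Cross-entropy between teacher and student similarity distributions.

    The teacher embedding is treated as a fixed input (frozen teacher);
    only the student embedding would receive gradients in real training.
    """
    anchors = queue + [teacher_emb]  # include the teacher's own view of the sample
    p_teacher = similarity_distribution(teacher_emb, anchors, t_teacher)
    p_student = similarity_distribution(student_emb, anchors, t_student)
    return -sum(pt * math.log(ps) for pt, ps in zip(p_teacher, p_student))


queue = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]  # hypothetical reference embeddings
print(similarity_distillation_loss([0.9, 0.2], [1.0, 0.1], queue))
```

In practice the embeddings would come from the two networks and the loss would be minimized with an autodiff framework; the plain-Python version only shows what quantity is being matched.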