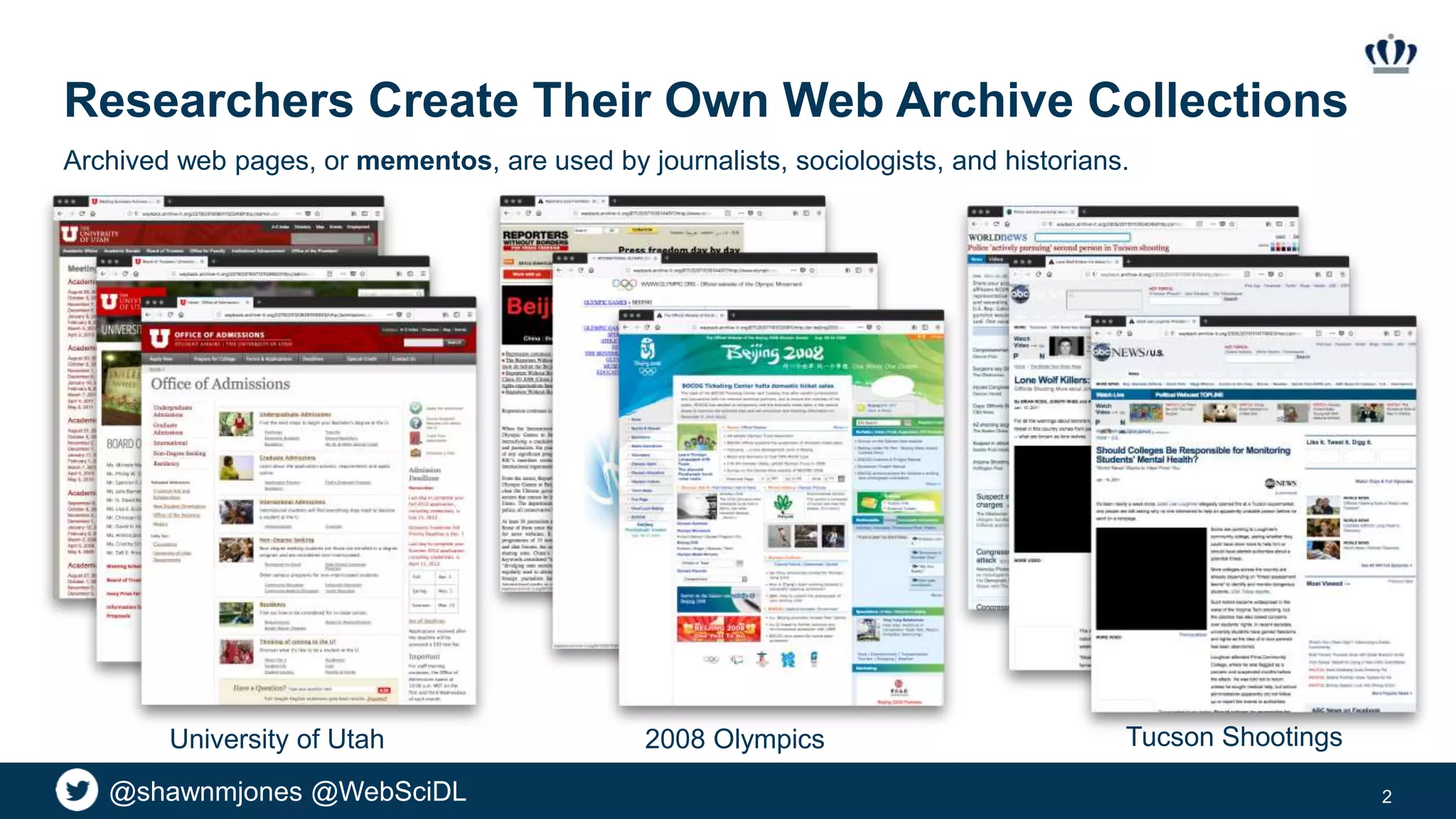

This document discusses how to help users understand collections in web archives. Web archive collections hold multiple archived versions of each page (mementos), which makes it possible to observe how pages change over time. However, collection metadata is often missing or inconsistent, so users have difficulty telling what a collection contains. The document proposes summarizing a collection by visualizing representative mementos drawn from it, which helps users understand the collection at a lower cost than manually reviewing all of its content. It also discusses prior work on visualizing collections and generating summaries.