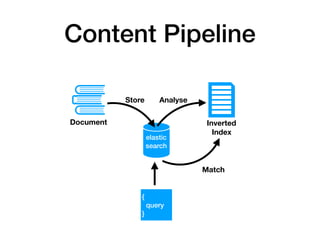

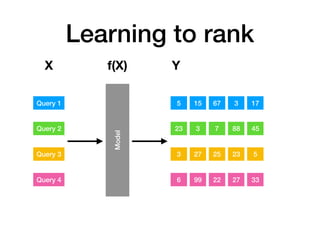

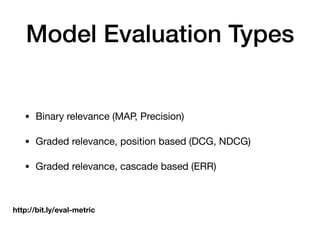

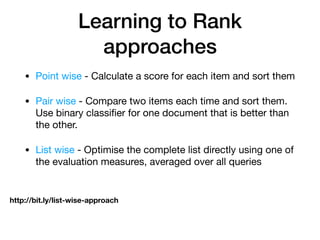

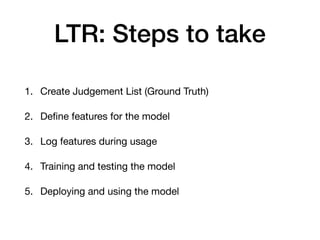

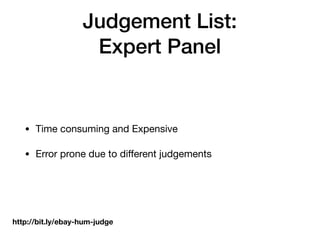

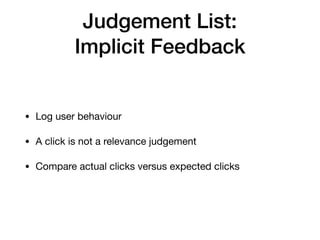

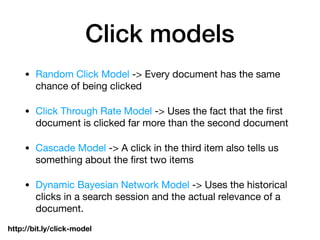

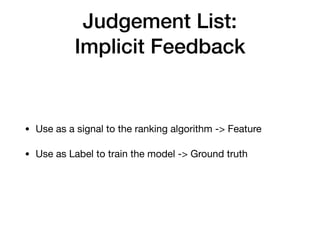

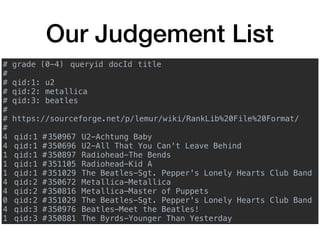

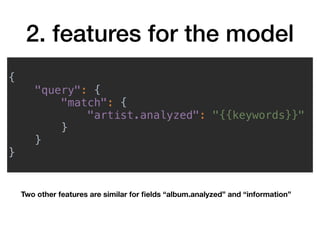

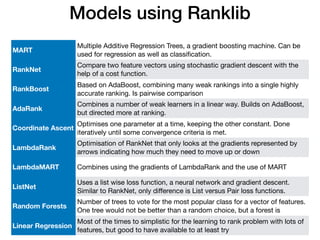

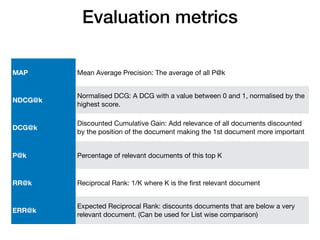

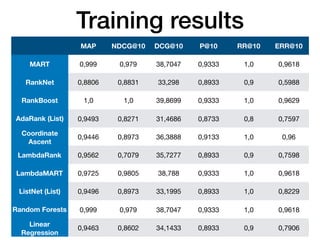

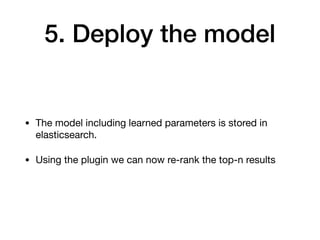

The document discusses combining machine learning with search to improve ranking in e-commerce, specifically through learning to rank (LTR). It outlines several ranking algorithms and evaluation metrics, and the process for training models and deploying them in Elasticsearch. It also explains how to build judgement lists and features from user interactions to improve search results.
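The summary mentions judgement lists, features, and evaluation metrics without spelling out their formats. The following is a minimal sketch that assumes graded relevance labels from 0 to 4 and the plain-text LETOR/SVMrank line format that RankLib reads (`grade qid:N 1:v1 2:v2 # docid`). It writes judged rows as training lines and computes NDCG@k, one common ranking metric, here with the exponential-gain variant. The `Judgment` type, the helper names, and the sample queries, doc ids, grades, and feature values are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Judgment:
    """One row of a judgement list: how relevant doc_id is for query qid."""
    qid: int
    doc_id: str
    grade: int             # graded relevance, assumed 0 (bad) to 4 (perfect)
    features: list[float]  # feature values logged for this (query, doc) pair


def to_ranklib_line(j: Judgment) -> str:
    """Format a judgement in the LETOR/SVMrank text format read by RankLib.

    Feature ids are 1-based; the doc id goes into the trailing comment.
    """
    feats = " ".join(f"{i}:{v:g}" for i, v in enumerate(j.features, start=1))
    return f"{j.grade} qid:{j.qid} {feats} # {j.doc_id}"


def dcg(grades: list[int], k: int) -> float:
    """Discounted cumulative gain at k with exponential gain 2^grade - 1."""
    return sum((2 ** g - 1) / math.log2(rank + 2)
               for rank, g in enumerate(grades[:k]))


def ndcg(ranked_grades: list[int], k: int) -> float:
    """NDCG@k: DCG of the returned order divided by DCG of the ideal order."""
    ideal = dcg(sorted(ranked_grades, reverse=True), k)
    return dcg(ranked_grades, k) / ideal if ideal > 0 else 0.0


if __name__ == "__main__":
    # Hypothetical judgements for one query (qid 1).
    rows = [
        Judgment(1, "doc_a", 4, [12.5, 3.0]),
        Judgment(1, "doc_b", 0, [7.1, 0.0]),
        Judgment(1, "doc_c", 2, [9.8, 1.0]),
    ]
    for r in rows:
        print(to_ranklib_line(r))
    # Grades of the results in the order the search engine returned them.
    print(round(ndcg([2, 4, 0], k=3), 4))
```

Putting the most relevant document first raises NDCG towards 1.0, which is what a trained ranker is tuned to do on held-out judgements.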

```shell
curl -XGET "http://localhost:9200/rolling500/_search" \
  -H 'Content-Type: application/json' -d'
{
  "query": {
    "multi_match": {
      "query": "u2",
      "fields": [
        "album.analyzed",
        "artist.analyzed",
        "information"
      ]
    }
  }
}'
```

![Baseline multi_match query for "u2" on the rolling500 index](https://image.slidesharecdn.com/learningtorank-codemotionmeetup-180314124005/85/Combining-machine-learning-and-search-through-learning-to-rank-19-320.jpg)
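For scripted experiments, the same baseline search can be sent from Python. This is a minimal standard-library sketch that assumes a node at `http://localhost:9200` holding the `rolling500` index from the slides; `ES_URL`, `multi_match_body`, and `search` are hypothetical names. It uses POST, which the `_search` endpoint accepts with a request body just as it accepts GET.

```python
import json
import urllib.request

# Assumption: a local Elasticsearch node; adjust for your cluster.
ES_URL = "http://localhost:9200"


def multi_match_body(text: str, fields: list[str]) -> dict:
    """Build the same multi_match search body as the curl example."""
    return {"query": {"multi_match": {"query": text, "fields": fields}}}


def search(index: str, body: dict) -> dict:
    """POST a search body to <index>/_search and return the parsed JSON."""
    req = urllib.request.Request(
        f"{ES_URL}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

Against a running cluster, `search("rolling500", multi_match_body("u2", ["album.analyzed", "artist.analyzed", "information"]))` returns the parsed response, whose `hits.hits` list carries each document's `_id` and `_score`.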

```json
{
  "query": {
    "nested": {
      "path": "clicks",
      "query": {
        "function_score": {
          "query": {
            "term": {
              "clicks.term": {
                "value": "{{keywords}}"
              }
            }
          },
          "functions": [
            {
              "field_value_factor": {
                "field": "clicks.clicks",
                "modifier": "log1p"
              }
            }
          ]
        }
      }
    }
  }
}
```

![Click-based feature template: nested function_score over clicks](https://image.slidesharecdn.com/learningtorank-codemotionmeetup-180314124005/85/Combining-machine-learning-and-search-through-learning-to-rank-39-320.jpg)
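In this template the `function_score` multiplies the score of the `term` query on `clicks.term` by a `field_value_factor` taken from `clicks.clicks`. With the `log1p` modifier Elasticsearch computes `log10(1 + factor * value)`, a base-10 logarithm (`ln1p` is the natural-log variant), so the feature grows slowly with click counts. The sketch below reproduces that arithmetic under stated assumptions: the default `boost_mode` of `multiply`, a `factor` of 1, and a single matching nested click entry. The term-query score is passed in as a placeholder, because its real value depends on Lucene's scoring for that field; the function names are hypothetical.

```python
import math


def field_value_factor_log1p(value: float, factor: float = 1.0) -> float:
    """field_value_factor with modifier "log1p": log10(1 + factor * value)."""
    return math.log10(1 + factor * value)


def click_feature(term_score: float, clicks: float) -> float:
    """Feature value under the assumptions above:
    term query score multiplied by log10(1 + clicks)."""
    return term_score * field_value_factor_log1p(clicks)


if __name__ == "__main__":
    # Hypothetical click counts for one keyword, with a placeholder score of 1.0.
    for clicks in (0, 9, 99, 999):
        print(clicks, round(click_feature(1.0, clicks), 3))
```

Each tenfold increase in clicks adds 1 to the multiplier, so a handful of very popular documents cannot dominate the feature, and a document with zero clicks gets a multiplier of 0.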

```
GET rolling500/_search
{
  "query": {
    "multi_match": {
      "query": "rolling",
      "fields": ["album.analyzed", "artist.analyzed", "information"]
    }
  },
  "rescore": {
    "window_size": 1000,
    "query": {
      "rescore_query": {
        "sltr": {
          "params": {"keywords": "rolling"},
          "model": "test_6"
        }
      }
    }
  }
}
```

![Rescoring the top results with the sltr query and model test_6](https://image.slidesharecdn.com/learningtorank-codemotionmeetup-180314124005/85/Combining-machine-learning-and-search-through-learning-to-rank-46-320.jpg)
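The `rescore` block runs the `sltr` query, which scores documents with the stored model `test_6` using `keywords` as the template parameter, on the top `window_size` hits only (1,000 here, counted per shard). With the query rescorer's defaults (`query_weight` and `rescore_query_weight` of 1, `score_mode` of `total`), each rescored hit's score is its original query score plus the model score. The sketch below simulates that on one shard's hit list. The `model` callable is a hypothetical stand-in for the trained model. Keeping the hits outside the window in their first-pass order, behind the rescored ones, is a simplification rather than a description of Elasticsearch internals.

```python
from typing import Callable


def rescore(
    hits: list[tuple[str, float]],
    model: Callable[[str], float],
    window_size: int = 1000,
    query_weight: float = 1.0,
    rescore_query_weight: float = 1.0,
) -> list[tuple[str, float]]:
    """Re-rank the top window_size hits, combining scores as in score_mode "total".

    hits: (doc_id, first-pass score) pairs, assumed sorted by score, descending.
    model: hypothetical stand-in for the sltr model; returns a score per doc.
    """
    window, rest = hits[:window_size], hits[window_size:]
    rescored = [
        (doc, query_weight * score + rescore_query_weight * model(doc))
        for doc, score in window
    ]
    rescored.sort(key=lambda h: h[1], reverse=True)
    # Simplification: hits beyond the window keep their first-pass scores
    # and order, and stay behind the rescored window.
    return rescored + rest


if __name__ == "__main__":
    # Hypothetical first-pass scores and model outputs.
    first_pass = [("a", 3.0), ("b", 2.5), ("c", 1.0)]
    model_scores = {"a": 0.1, "b": 1.5, "c": 5.0}
    print(rescore(first_pass, model_scores.__getitem__, window_size=2))
```

In the usage example, `b` overtakes `a` because the model favours it, while `c` stays last despite the highest model score: it fell outside `window_size=2` and was never rescored. This is why the window must be large enough to contain the documents the model should be able to promote.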