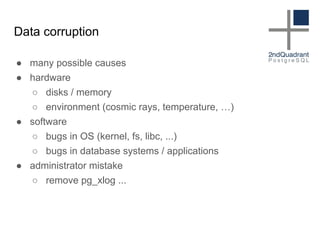

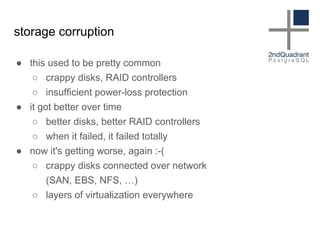

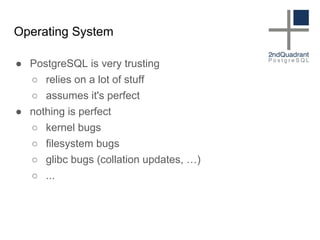

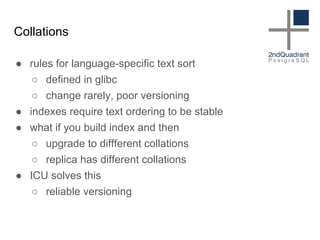

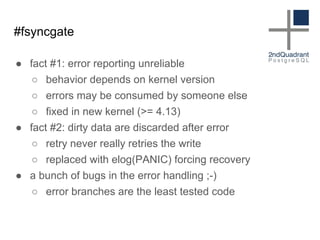

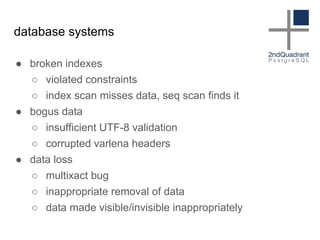

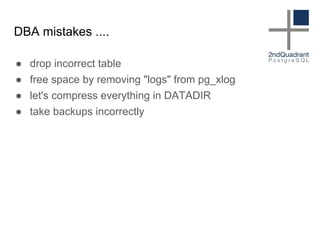

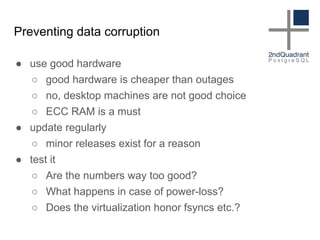

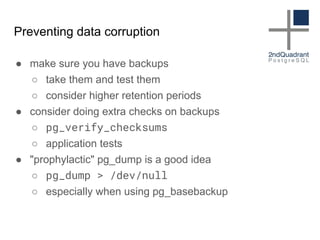

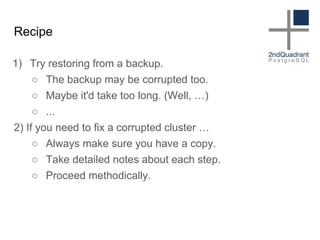

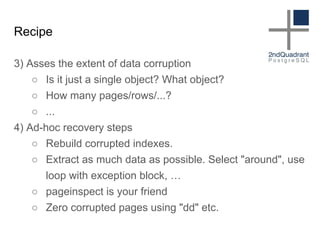

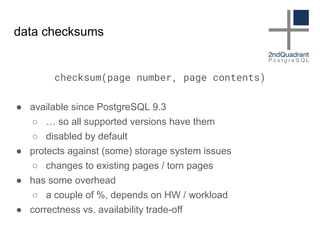

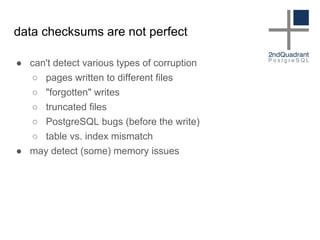

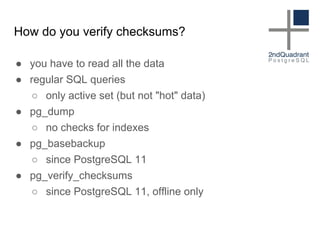

The document discusses data corruption in databases, its causes, and preventive measures. It identifies hardware faults, software bugs, and administrative mistakes as potential sources of corruption, and it emphasizes the importance of using reliable hardware, applying updates regularly, and testing thoroughly. It also highlights the need for backups and explains strategies for fixing corrupted data, while noting that data checksums cannot detect certain types of corruption.
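
To illustrate the checksum limitation mentioned above, here is a minimal sketch. It assumes a simplified page layout of a 4-byte CRC32 followed by the payload, which is not any real database's on-disk format. The helpers `write_page` and `verify_page` and the sample payloads are hypothetical and exist only for this example. The sketch suggests that a checksum can catch damage that happens after a page is written, but not wrong data that was checksummed in the first place.

```python
import zlib


def write_page(payload: bytes) -> bytes:
    """Hypothetical helper: prefix the payload with its CRC32 checksum."""
    return zlib.crc32(payload).to_bytes(4, "big") + payload


def verify_page(page: bytes) -> bool:
    """Hypothetical helper: recompute the CRC32 and compare it with the stored one."""
    stored = int.from_bytes(page[:4], "big")
    return stored == zlib.crc32(page[4:])


good = write_page(b"balance=100")

# Case 1: a bit flips in storage after the checksum was computed.
damaged = bytearray(good)
damaged[-1] ^= 0x01
flip_detected = not verify_page(bytes(damaged))

# Case 2: the data was already wrong before the checksum was computed
# (for example because of a software bug or faulty memory).
# The checksum matches the wrong data, so verification passes.
wrong = write_page(b"balance=900")
wrong_data_passes = verify_page(wrong)

print("bit flip detected:", flip_detected)
print("wrong data passes verification:", wrong_data_passes)
```

In this sketch the first case fails verification and the second passes, which is why checksums complement backups and testing rather than replace them.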