For the full video of this presentation, please visit:

http://www.embedded-vision.com/platinum-members/imagination-technologies/embedded-vision-training/videos/pages/may-2016-embedded-vision-summit

For more information about embedded vision, please visit:

http://www.embedded-vision.com

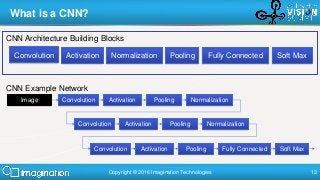

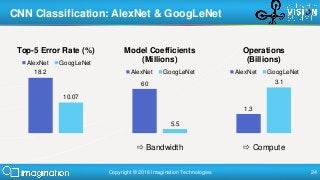

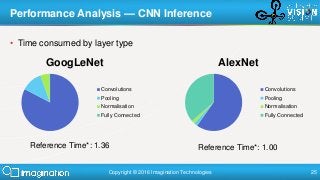

Paul Brasnett, Principal Research Engineer at Imagination Technologies, presents the "Efficient Convolutional Neural Network Inference on Mobile GPUs" tutorial at the May 2016 Embedded Vision Summit.

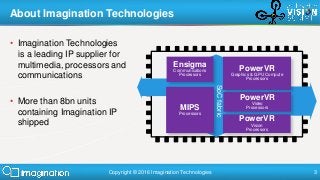

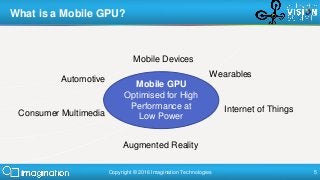

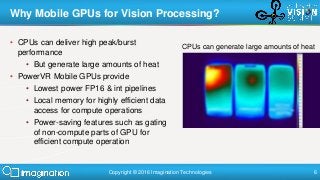

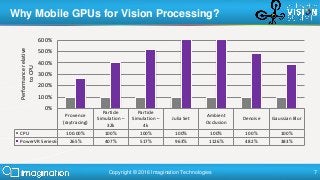

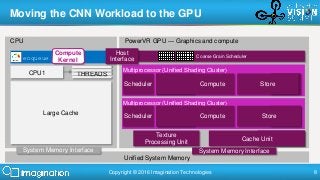

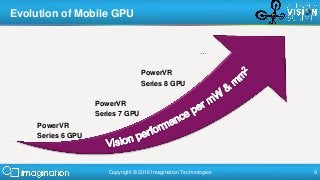

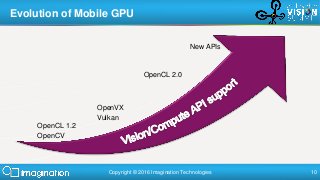

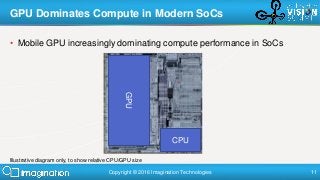

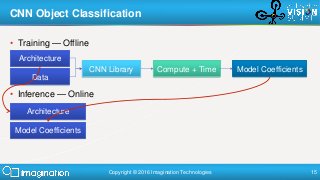

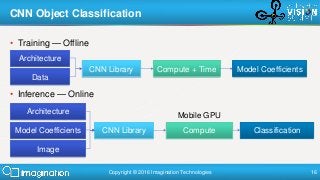

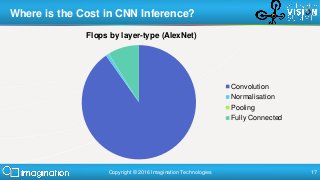

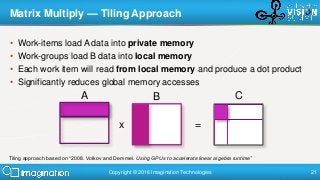

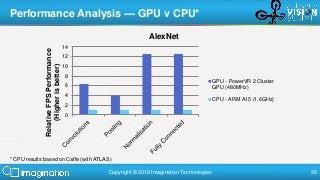

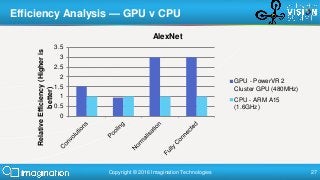

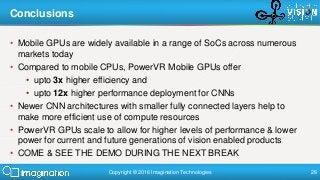

GPUs have become established as a key tool for training deep learning algorithms. Deploying those algorithms on end devices is key to their commercial success, and mobile GPUs are proving to be an efficient target processor that is readily available in end devices today. This talk looks at how to approach the task of deploying convolutional neural networks (CNNs) on mobile GPUs. Brasnett explores the key primitives for CNN inference and the strategies available for implementing them. He works through alternative options and trade-offs, and provides reference performance analysis on mobile GPUs, using the PowerVR architecture as a case study.
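The central primitive in CNN inference is the convolution layer, and it can be implemented in more than one way. As one illustration, and not necessarily one of the options covered in the talk, the sketch below contrasts a direct convolution loop with the widely used im2col + GEMM lowering. The lowering lets an implementation reuse a single tuned matrix-multiply routine, at the cost of extra memory for the unrolled input. It is plain Python on nested lists and assumes a single image, stride 1, no padding and a [channel][row][col] layout; all function names are hypothetical.

```python
# Sketch only: two ways to implement the CNN convolution primitive.
# Assumptions: single image, stride 1, no padding, nested lists in
# [channel][row][col] layout; function names are hypothetical.

def conv2d_direct(x, w):
    """Direct convolution. x: [C][H][W], w: [K][C][R][S] -> [K][H-R+1][W-S+1]."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    K, R, S = len(w), len(w[0][0]), len(w[0][0][0])
    out_h, out_w = H - R + 1, W - S + 1
    y = [[[0.0] * out_w for _ in range(out_h)] for _ in range(K)]
    for k in range(K):
        for i in range(out_h):
            for j in range(out_w):
                acc = 0.0
                # Accumulate over every input channel and filter tap.
                for c in range(C):
                    for r in range(R):
                        for s in range(S):
                            acc += x[c][i + r][j + s] * w[k][c][r][s]
                y[k][i][j] = acc
    return y


def im2col(x, R, S):
    """Unroll every RxS patch (across all channels) into one column.
    Returns a (C*R*S) x (out_h*out_w) matrix as a list of rows."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    out_h, out_w = H - R + 1, W - S + 1
    rows = []
    for c in range(C):
        for r in range(R):
            for s in range(S):
                rows.append([x[c][i + r][j + s]
                             for i in range(out_h) for j in range(out_w)])
    return rows


def matmul(a, b):
    """Plain GEMM: (M x N) times (N x P) -> (M x P)."""
    n, p = len(b), len(b[0])
    return [[sum(row[t] * b[t][q] for t in range(n)) for q in range(p)]
            for row in a]


def conv2d_gemm(x, w):
    """Convolution lowered to im2col followed by one matrix multiply."""
    K, R, S = len(w), len(w[0][0]), len(w[0][0][0])
    out_h, out_w = len(x[0]) - R + 1, len(x[0][0]) - S + 1
    # Flatten each filter into a row using the same (c, r, s) order as im2col.
    w_mat = [[w[k][c][r][s] for c in range(len(w[k]))
              for r in range(R) for s in range(S)] for k in range(K)]
    y_mat = matmul(w_mat, im2col(x, R, S))  # K x (out_h*out_w)
    # Reshape each output row back into an out_h x out_w feature map.
    return [[y_mat[k][i * out_w:(i + 1) * out_w] for i in range(out_h)]
            for k in range(K)]


if __name__ == "__main__":
    x = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]  # C=1, 3x3 input
    w = [[[[1, 0], [0, -1]]]]                # K=1, C=1, 2x2 filter
    print(conv2d_direct(x, w) == conv2d_gemm(x, w))  # True
```

Both functions compute the same result; the difference is in memory traffic and in how the work is organized, which is exactly the kind of trade-off that matters when choosing an implementation for a mobile GPU.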