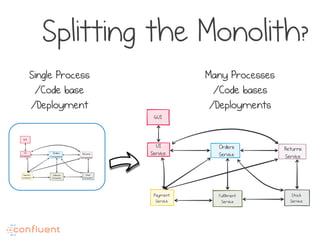

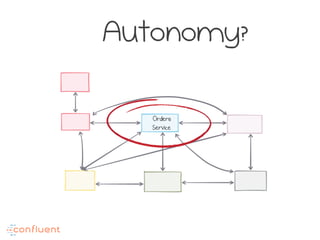

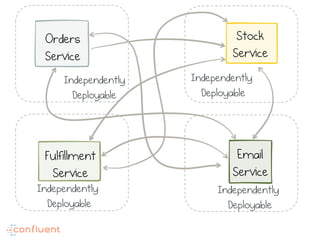

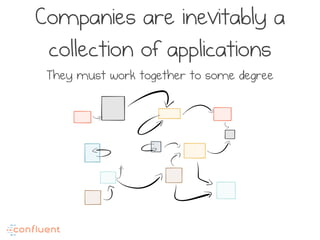

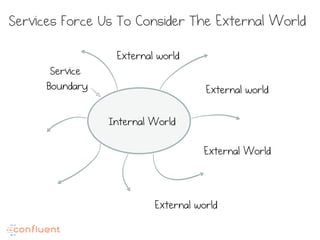

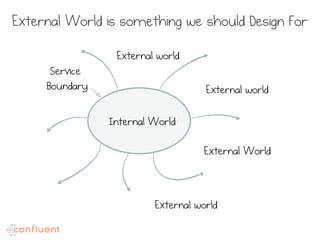

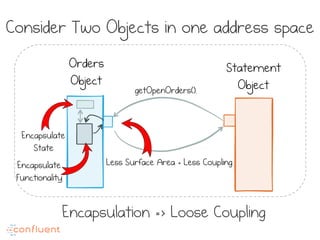

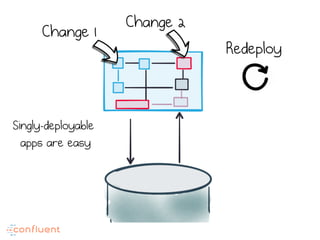

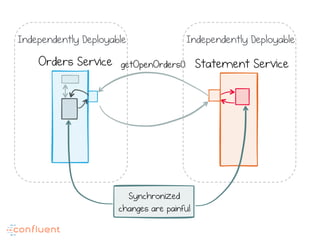

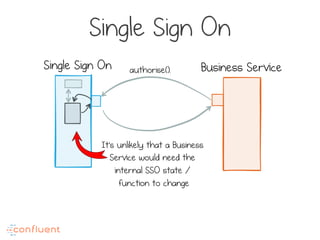

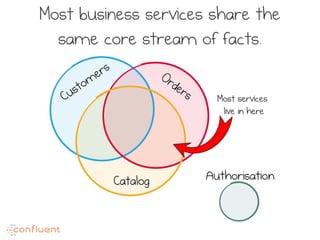

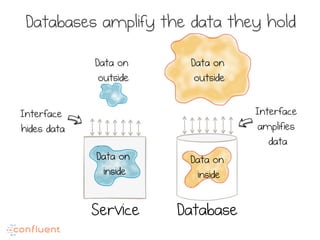

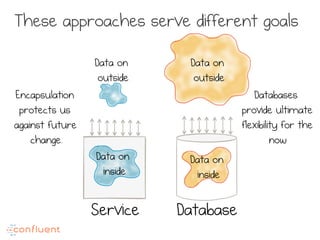

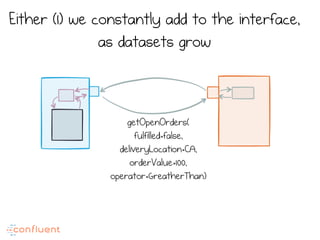

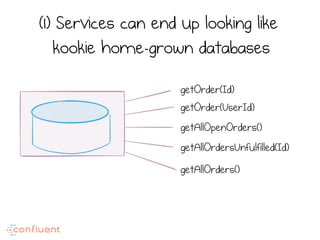

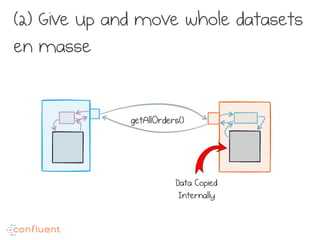

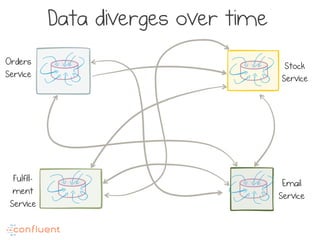

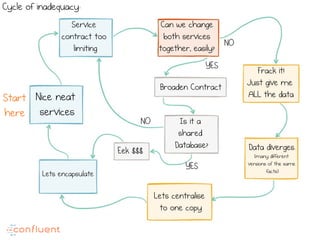

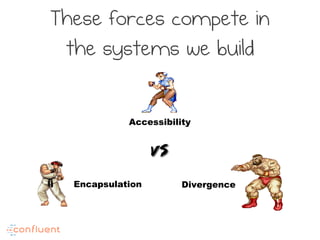

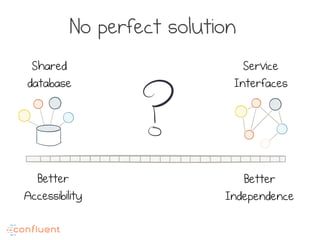

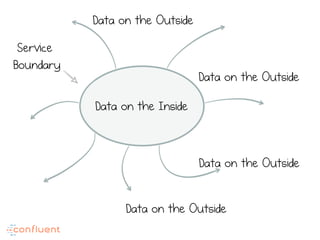

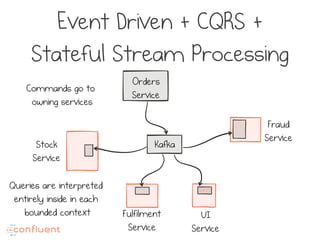

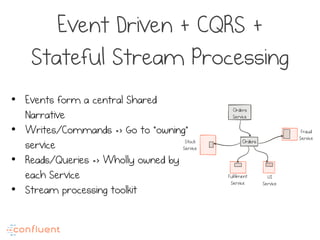

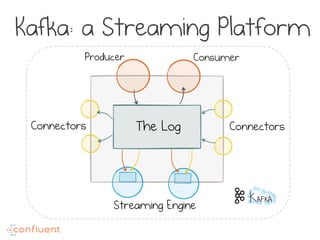

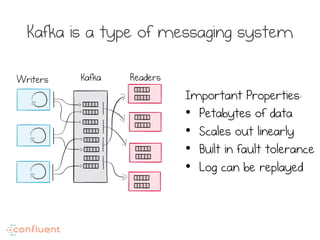

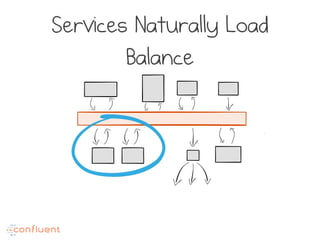

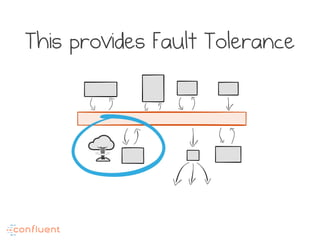

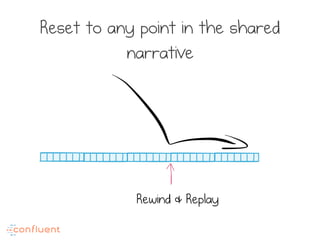

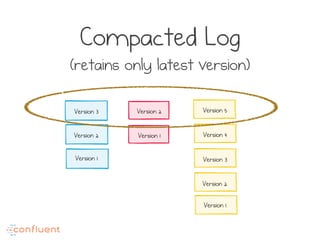

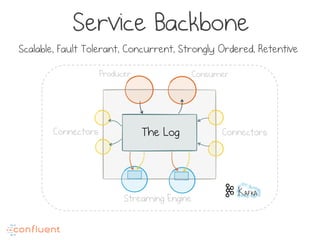

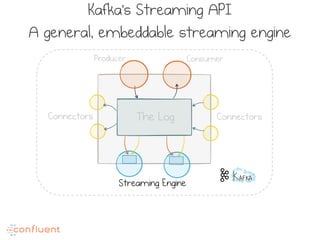

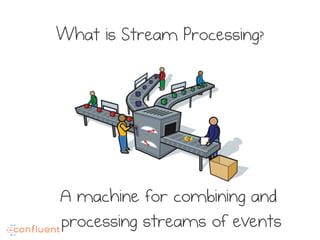

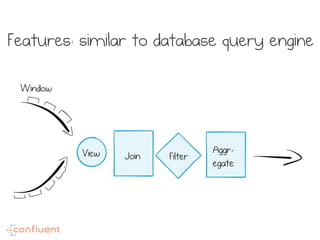

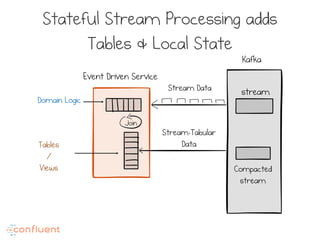

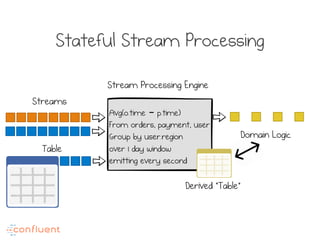

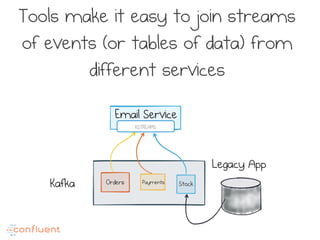

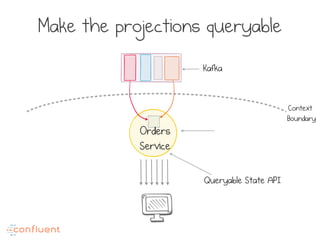

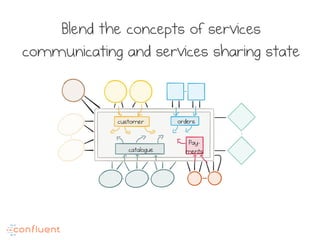

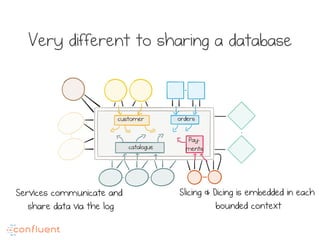

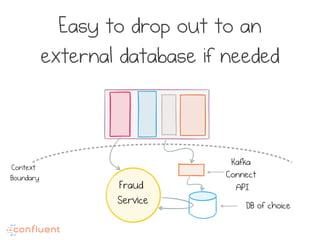

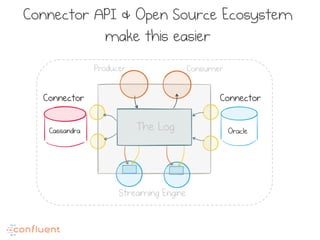

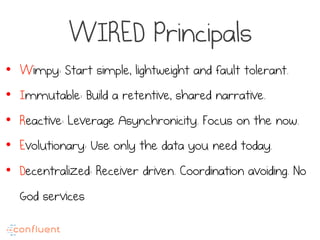

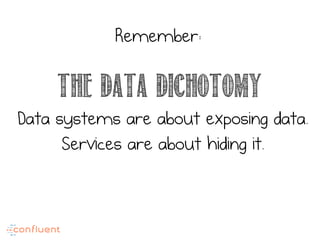

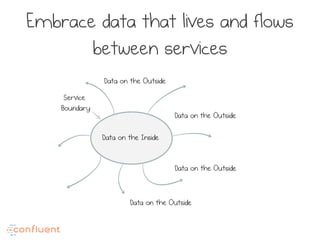

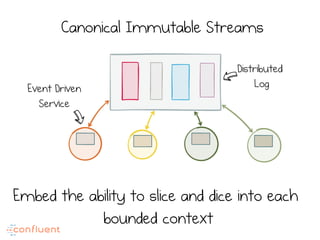

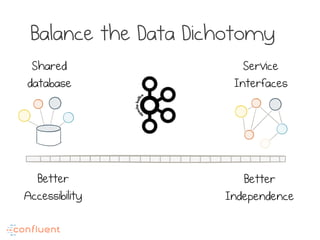

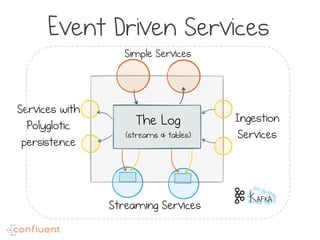

The document discusses the data dichotomy in microservices: the tension between exposing data so that it can be shared and encapsulating it inside services so that they can change independently. It highlights the difficulty of managing data that many services need, and proposes event-driven architectures and stream processing, with tools like Kafka, as a way to build scalable, fault-tolerant systems. Ultimately, it advocates an architecture that is designed for change and handles data flexibly, while keeping a clear, shared narrative of events across services.
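
The event-driven approach summarized above can be illustrated with a minimal sketch. It is not taken from the slides and does not use Kafka itself: `EventLog` is a hypothetical in-memory stand-in for a shared, append-only log, and `OrdersService` and `CustomerSpendView` are invented names for a service that publishes facts and a service that builds its own local view from them. The point it tries to show is that the consuming service never queries the producer's internal state; it only reads the shared events, including ones published before it started.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    """An immutable fact published to the shared log (hypothetical shape)."""
    topic: str
    key: str
    value: dict


@dataclass
class EventLog:
    """Append-only, in-memory stand-in for a shared log such as a Kafka topic."""
    events: list = field(default_factory=list)
    subscribers: dict = field(default_factory=lambda: defaultdict(list))

    def subscribe(self, topic, handler, replay=True):
        # Replay history first so a late-joining service can rebuild its view.
        if replay:
            for event in self.events:
                if event.topic == topic:
                    handler(event)
        self.subscribers[topic].append(handler)

    def publish(self, event):
        # Events are only ever appended, never updated in place.
        self.events.append(event)
        for handler in self.subscribers[event.topic]:
            handler(event)


class OrdersService:
    """Owns order placement; exposes what happened as events, not as a database."""

    def __init__(self, log):
        self.log = log

    def place_order(self, order_id, customer_id, amount):
        self.log.publish(
            Event("orders", order_id, {"customer_id": customer_id, "amount": amount})
        )


class CustomerSpendView:
    """A separate service that derives its own local view from the shared events."""

    def __init__(self, log):
        self.total_by_customer = defaultdict(float)
        log.subscribe("orders", self._on_order)

    def _on_order(self, event):
        self.total_by_customer[event.value["customer_id"]] += event.value["amount"]


if __name__ == "__main__":
    log = EventLog()
    orders = OrdersService(log)
    orders.place_order("o1", "alice", 20.0)  # published before the view exists
    view = CustomerSpendView(log)            # replays history on subscribe
    orders.place_order("o2", "alice", 5.0)   # delivered live
    print(dict(view.total_by_customer))
```

In a real deployment the log would be durable and partitioned, and consumers would track their own offsets; this sketch leaves all of that out and keeps only the shape of the idea.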