Sourav Banerjee Resume

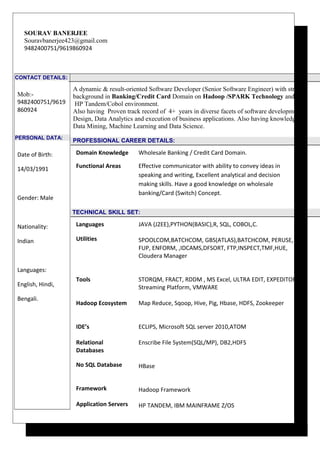

SOURAV BANERJEE

CONTACT DETAILS:
- Email: Souravbanerjee423@gmail.com
- Mobile: 9482400751 / 9619860924

PERSONAL DATA:
- Date of Birth: 14/03/1991
- Gender: Male
- Nationality: Indian
- Languages: English, Hindi, Bengali

A dynamic, results-oriented Software Developer (Senior Software Engineer) with a strong background in the banking and credit card domain, working with Hadoop/Spark technology and HP Tandem/COBOL environments. Proven track record of 4+ years across software development, design, data analytics, and execution of business applications. Also has knowledge of data mining, machine learning, and data science.

PROFESSIONAL CAREER DETAILS:
- Domain Knowledge: Wholesale Banking / Credit Card Domain
- Functional Areas: Effective communicator with the ability to convey ideas in speech and writing; excellent analytical and decision-making skills. Good knowledge of wholesale banking and card (switch) concepts.

TECHNICAL SKILL SET:

| Category | Skills |
|---|---|
| Languages | Java (J2EE), Python (Basic), R, SQL, COBOL, C |
| Utilities | SPOOLCOM, BATCHCOM, GBS (ATLAS), PERUSE, FUP, ENFORM, IDCAMS, DFSORT, FTP, INSPECT, TMF, HUE, Cloudera Manager |
| Tools | STORQM, FRACT, RDDM, MS Excel, UltraEdit, EXPEDITOR, Streaming Platform, VMware |
| Hadoop Ecosystem | MapReduce, Sqoop, Hive, Pig, HBase, HDFS, ZooKeeper |
| IDEs | Eclipse, Microsoft SQL Server 2010, Atom |
| Relational Databases | Enscribe File System (SQL/MP), DB2, HDFS |
| NoSQL Database | HBase |
| Framework | Hadoop Framework |
| Application Servers | HP Tandem, IBM Mainframe z/OS |
| Operating Systems | Windows XP/7, UNIX/Linux, Guardian (NonStop Kernel), z/OS |
| Scripting Languages | Shell Script, Python (Basic) |
| Project Management Tools | HPSM, JIRA, SNOW |
| IT Operation Standards | Scrum and Agile Methodology |

EDUCATIONAL BACKGROUND:
- Bachelor of Technology (Year of Passing: June 2012)

CAREER SUMMARY:
- Proactive, flexible, customer-focused, and innovative, with good analytical skills; able to work under pressure and to tight deadlines.
- 3 years of extensive experience in COBOL, TACL, TAL, UNIX, IBM Mainframe systems, and Tandem development.
- 1+ years of experience as a Hadoop developer, with sound knowledge of the big data technology stack: Hadoop, Hive, HDFS, MapReduce, Sqoop, Pig, Flume, Impala.
- Strong knowledge of Hadoop, Hive, and Hive's analytical functions.
- Hands-on experience with ecosystem tools such as Hive, Pig, Sqoop, MapReduce, Flume, and Oozie.
- Implemented proofs of concept on the Hadoop stack and various big data analytics tools, including migration from other databases (e.g., Teradata, Oracle, MySQL) to Hadoop.
- Successfully loaded files to Hive and HDFS from HBase.
- Efficient in building Hive, Pig, and MapReduce scripts.
- Loaded datasets into Hive for ETL operations.
- Experience using DbVisualizer, ZooKeeper, and Cloudera Manager.
- Experience in database design using stored procedures, functions, and triggers, and strong experience writing complex queries for DB2 and SQL Server.
- Banking knowledge, particularly in payments, interest accruals, FX, and MM.
- Credit card domain knowledge, especially in issuing and acquiring.
- Experience in testing (unit testing, functional analysis testing, and user acceptance testing).
- Attention to detail and the ability to see the bigger picture of a problem.
- Good experience working with Agile (including Scrum) and Waterfall methodologies.

CERTIFICATION:
- Certification in Basics of Financial Market – NCFM
- Big Data Analytics with HDInsight: Hadoop on Azure – Microsoft
- IBM Certified Big Data and Hadoop Developer – IBM
- Certified Big Data and Hadoop Developer – Simplilearn
- Certified Data Science: R Programming – Simplilearn
- Winner of the TCS GEMS On-The-Spot Award

Refer to the LinkedIn profile mentioned below for more details.

PROFESSIONAL EXPERIENCE:

Company: Mashreq Global Services (Nov 2016 to present)
Technical Role: Hadoop Developer
Working as a development and production support executive in Card Domain Technology, covering the issuing and acquiring modules. Installs raw Hadoop and NoSQL applications and develops programs for analyzing data.
Responsibilities:
- Replaced the default Derby metastore database for Hive with MySQL.
- Executed queries using Hive and developed MapReduce jobs to analyze data.
- Developed Pig Latin scripts to extract data from web server output files and load it into HDFS.
- Developed Pig UDFs to preprocess data for analysis.
- Developed Hive queries for analysts.
- Used Impala in place of Hive for some short-running applications.
- Used Flume for streaming data.
- Involved in loading data from Linux and UNIX file systems into HDFS.
- Analyzed web log data using HiveQL.
Technology Used: Core Java, Apache Hadoop, HDFS, Pig, Hive, Cassandra, Shell Scripting, MySQL, Linux, UNIX

Company: Tata Consultancy Services Ltd (Oct 2012 to Aug 2016)
Worked as a System Engineer in the banking and financial services practice. During my engagement with TCS, I was involved in analyzing business requirements, designing technical solutions, developing COBOL code, and performing quality assurance and internal reviews.

Client: ING Wholesale Banking
Duration: 3 years 4 months (May 2013 to Aug 2016)
Role: System Engineer

Key Projects Undertaken:

ATLAS Data Analysis
Technical Role: Hadoop Developer
Duration: 6 months
Project Details: ING Bank, based in the Netherlands, is one of the leading banks in the world. ING migrated from Atlas to GBS in 2008; however, regulatory requirements make it mandatory to keep data for 10 years. ING keeps data for 20 years, and with the Atlas servers decommissioned, maintaining those servers just to retain the data is very costly. It was therefore decided to migrate all data from the Atlas servers to Hadoop. Because the data is highly structured, it was stored in Hive tables so that further analysis can be performed and regulatory and customer requirements can be met.
Responsibilities:
- Developed Hive scripts to perform ad hoc analysis for end-user and analyst requirements.
- Solved performance issues in Hive through an understanding of joins, grouping, and aggregation, and how they translate into MapReduce jobs.
- Created external and managed Hive tables with optimized partitioning and bucketing.
- Partitioned the data by year, sub-branch, currency, and account type.
- Generated account statements for a particular year.
Technology Used: COBOL, UNIX, Java, Sqoop, Hive

ING Security Monitoring
Technical Role: Hadoop Developer
Duration: 2 months
Project Details: Daily activities in the GBS environments are recorded in log files, which are stored as flat files. At the end of the day, after the Pre-EOD run, the log files are transferred via FTP to the local system and then stored in HDFS. The log files are analyzed using MapReduce to check for any suspicious activity.
Responsibilities:
- Worked as lead developer for the payment module and the Foreign Exchange module.
- Developed the problem definition and design for enabling project requirements.
- Developed a MapReduce program to analyze the log files and check for any suspicious activity.
- Performed team activities such as internal code reviews and quality assurance.

Technology Used: MQ, MapReduce, Hadoop Framework, Tandem, UNIX

Statement Generation
Technical Role: Hadoop Developer
Duration: 2 months
Project Details: As an extension of the daily log analysis for security monitoring, Hadoop can be used to analyze accounting entry records and generate customer statements on a daily basis. Statement generation makes up a large portion of the daily EOD processing.
Responsibilities:
- Developed a MapReduce program to read the accounting entries file, generate the output, and send it to the PSE (Paper Statement Engine) for further processing.
- Worked on the whole design and development of the project.
- Developed new COBOL code for new tags in SWIFT messaging.
- Designed application flow and user interaction.
- Provided development solutions to meet end-user requirements.
- Delivered code to defined standards within the given timeframe.
Technology Used: UNIX, MapReduce, COBOL, Hive, Sqoop, HDFS

ING Wholesale Bank: GBS LCM Release 2014
Technical Role: Tandem Developer
Duration: 8 months
Project Details: A combination of small change requests (CRs) based on various customer requirements, such as report creation, payment flow changes, and database handling.
Technology Used: IBM Mainframe, Tandem, CICS, UNIX, Java
Responsibilities:
- Worked as lead developer.
- Developed code from scratch and made small changes to existing programs.
- Developed technical solution designs for enabling customer requirements.
- Monitored day-to-day activities.
- Performed team activities such as internal code reviews, time estimates, and quality assurance.

Technology Used: COBOL, MVS/JCL, TAL, UNIX, Tandem

ING Wholesale Bank: SWIFT 2013
Technical Role: Tandem Developer
Duration: 4 months
Project Details: Updated SWIFT messaging in line with the new SWIFT 2013 manual. New tags had to be added to provide a new facility to customers.
Technology Used: COBOL, MVS/JCL, TAL, UNIX, Tandem
Responsibilities:
- Worked on the whole design and development of the project.
- Designed application flow and user interaction.
- Provided development solutions to meet end-user requirements.
- Delivered code to defined standards within the given timeframe.

ING Wholesale Bank: GMAINT 2013
Technical Role: Tandem Developer
Duration: 4 months
Project Details: In this project, WSS (Wall Street System) delivered new components to be accommodated on the Tandem server. We gathered the ING-specific requirements and modified and updated the WSS deliveries accordingly.
Technology Used: Tandem, COBOL, JCL, DB2
Responsibilities:
- Worked as developer.
- Involved in technical analysis.
- Worked on section preparation and delivery.
- Gathered requirements from the onsite functional team.
- Designed application flow and user interaction.
- Worked on documentation.

ING Wholesale Bank: GBS LCM Release 2013
Technical Role: Tandem Developer
Duration: 8 months
Project Details: A combination of small change requests (CRs) based on various customer requirements, such as report creation, payment flow changes, and database handling.
Technology Used: IBM Mainframe, Tandem, CICS, UNIX, Java
Responsibilities:
- Worked as developer.
- Developed code from scratch and made small changes to existing programs.
- Developed technical solution designs for enabling customer requirements.
- Monitored day-to-day activities.

Client: TCS Internal
Duration: 4 months (Jan 2013 to April 2013)
Role: Software Developer

Non-Life Insurance Fast Quote Generation (Jan 2013 to Apr 2013)
Technical Role: Tandem Developer
Duration: 4 months
Project Details: The Non-Life Insurance Quote Generation system (also called the Non-Life Fast Quote Generation system) estimates the premium amount for an applicant based on factors including type of insurance, coverage amount, length of coverage, age, gender, driving history, health and medical history, family history, vehicle history, and approximate rating class. The calculated premium is displayed on screen, and the quote is saved in the system database once the transaction is complete.
Technology Used: COBOL, JCL, DB2, IBM Mainframe, UNIX
Responsibilities:
- Developed functional solution designs per business requirements.
- Developed COBOL code and JCL jobs from scratch.
- Created maps using CICS for the UI.
- Developed technical solution designs for enabling customer requirements.
- Performed team activities such as time estimates and quality assurance of documents.

REFERENCES
References available upon request.
LinkedIn Profile: https://www.linkedin.com/in/sourav-banerjee-50b443106/

DECLARATION
I hereby declare that the above information is correct to the best of my knowledge, and I bear responsibility for the correctness of the above particulars.

Place: Bangalore
(Sourav Banerjee)