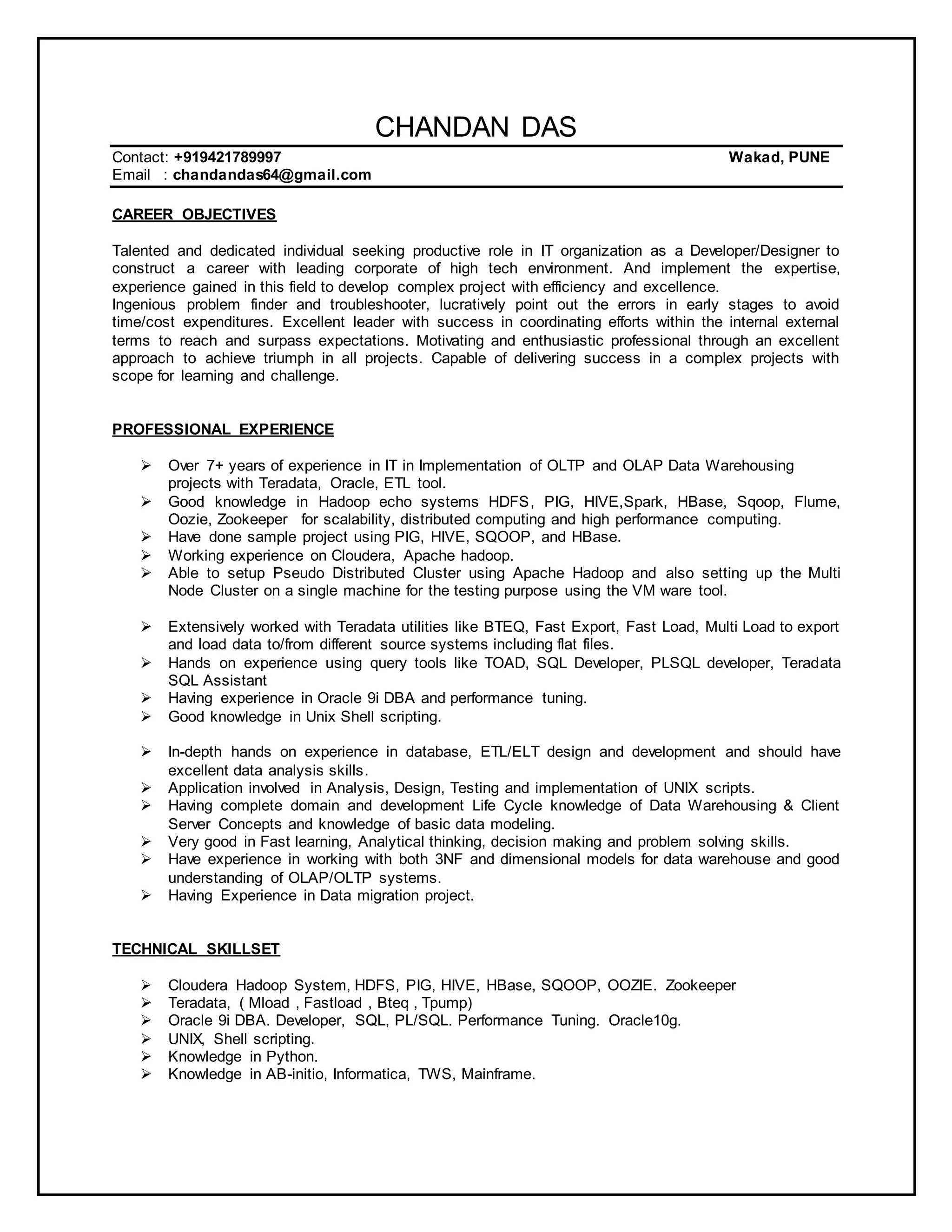

Chandan Das is a developer/designer with over 7 years of experience in IT implementation projects using technologies such as Teradata, Oracle, Hadoop, Pig, Hive, and Sqoop. He has extensive experience in data warehousing, ETL, and database administration. His career objective is a productive role in an IT organization where he can apply his expertise to delivering complex projects efficiently and meeting expectations. The résumé details his professional experience, technical skills, key achievements, and completed projects.