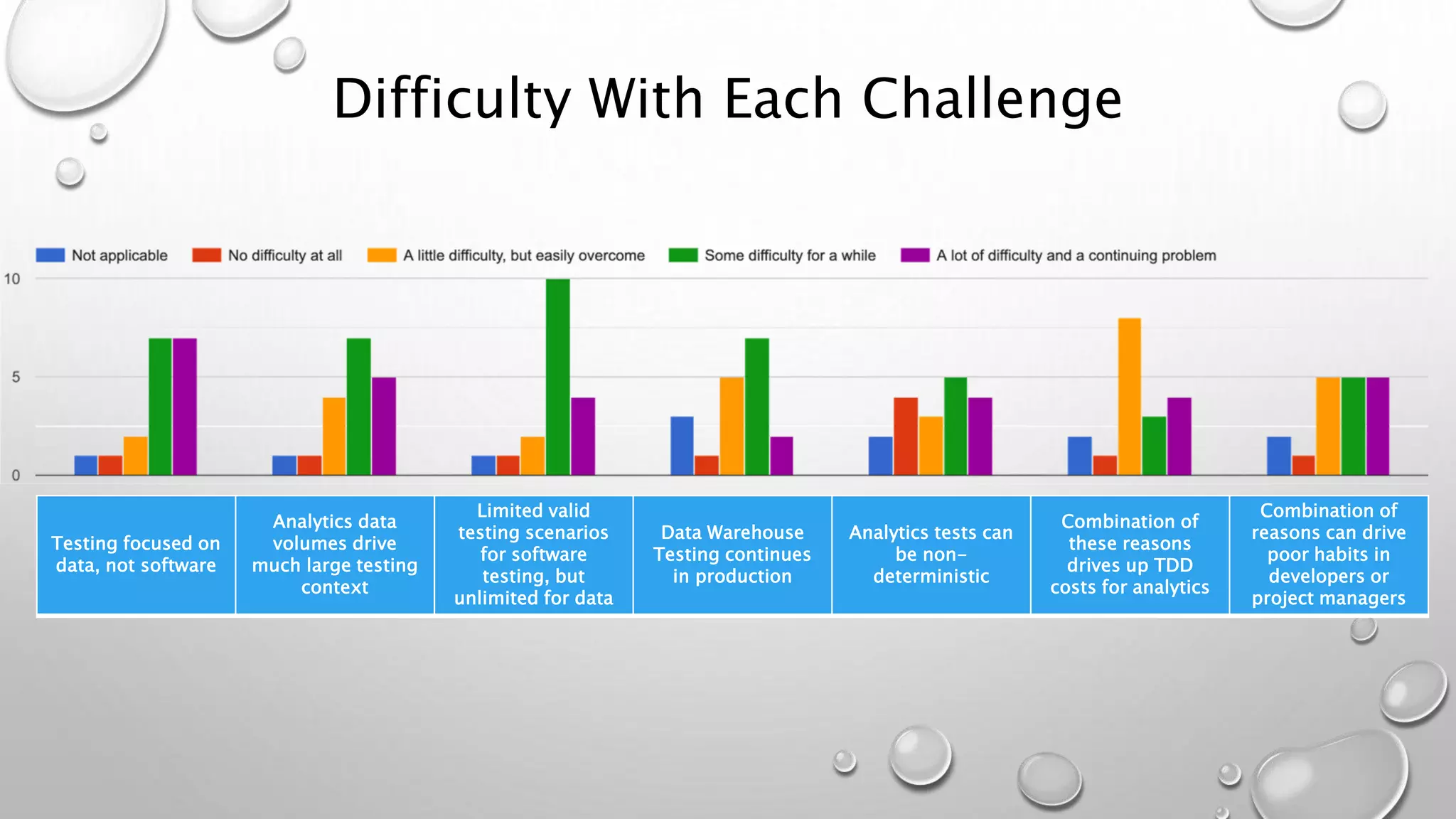

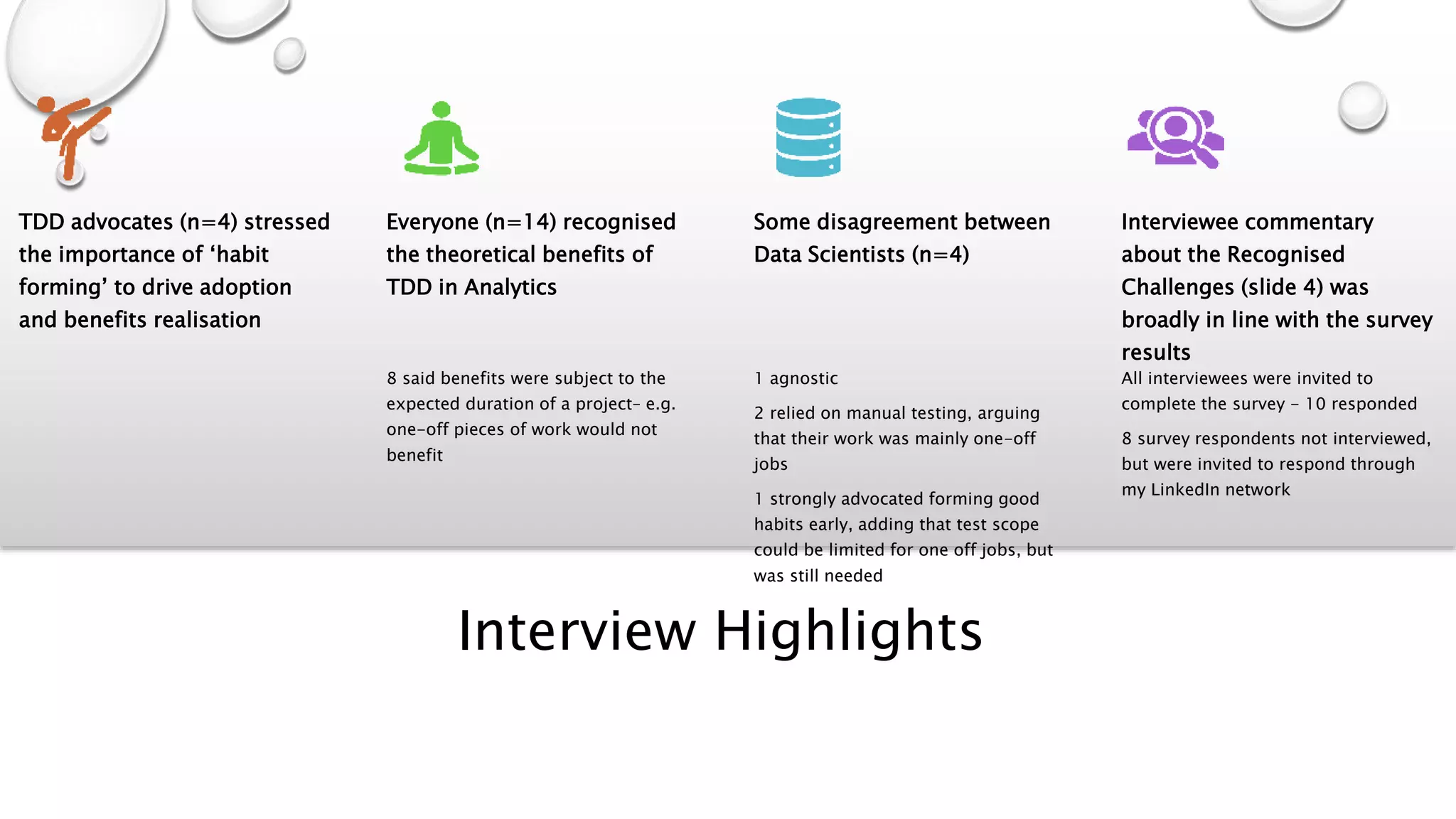

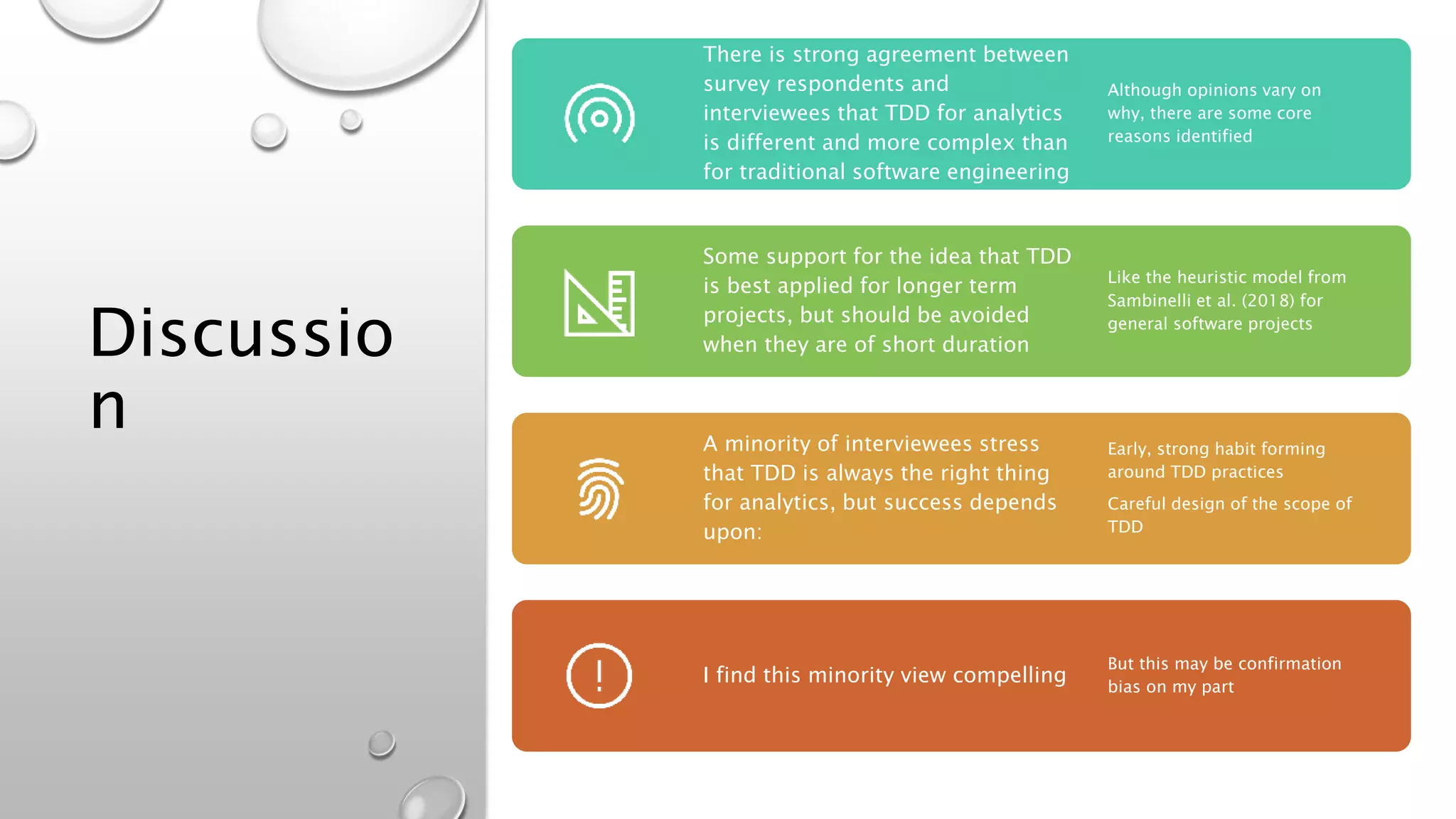

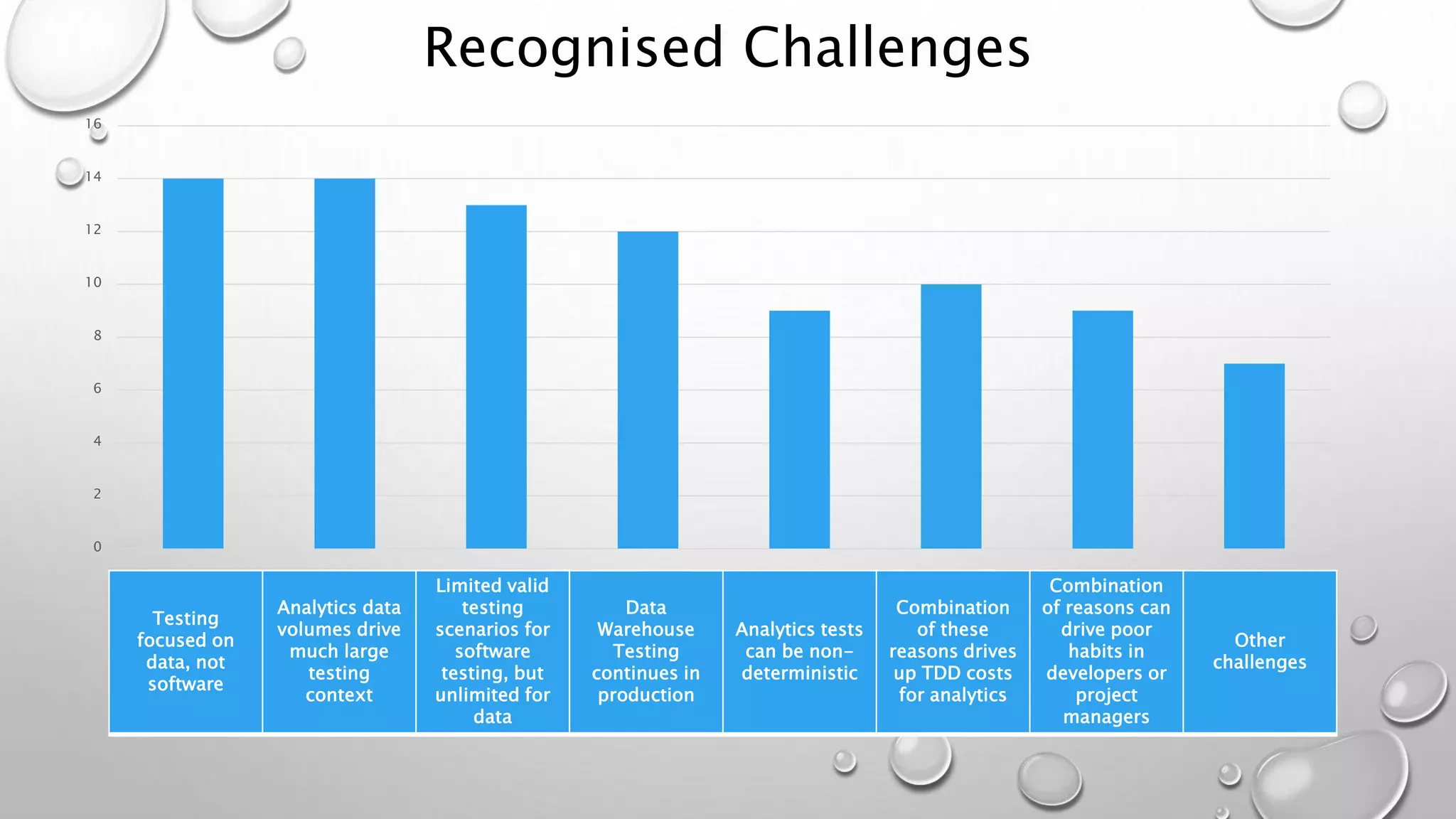

This document outlines Phil Watt's oral thesis presentation on the challenges of adopting test-driven development (TDD) for analytics and data projects. It opens with the problem: although TDD is an established best practice, it is rarely used on analytics projects. It then reviews literature that identifies challenges such as testing large data volumes and non-deterministic outputs. Next, it describes the author's mixed-methods approach, which combined interviews with an online survey of analytics professionals to find out which challenges they recognize and how difficult they perceive them to be. The results showed agreement that TDD is more complex for analytics than for software, but opinions varied on solutions. Further work could examine automation case studies and the impact of other productivity factors.

![Other challenges](https://image.slidesharecdn.com/philwattoralpresentationisys901112019tm4-191209032258/75/Why-is-Test-Driven-Development-for-Analytics-or-Data-Projects-so-Hard-9-2048.jpg)

- DWH can have complex logic related to delta processing, historical delta, etc., which makes it even more difficult to automate [testing]. Multiple source systems, which can inject a different type of data due to their own changes, make it even more complex.
- Capability to handle the end-to-end complexity of a development task is rare.
- (1) People with a software background may not understand analytics. (2) DW bugs not fixed post deployment. (3) DW not tested for other purposes, e.g. marketing analytics.
- Dev teams / leaders don't think of testing in this way.
- Analysts and data scientists rarely have the personality or training to do TDD effectively.
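To make the first response above concrete, here is a minimal sketch of what a test-first check of delta-processing logic could look like. It is not taken from the presentation: the function `apply_delta`, the row layout (`id`, `op`, `value`) and the `"delete"` operation name are hypothetical, chosen only for illustration. A real warehouse load would involve far more cases, such as historical deltas and source-system changes.

```python
def apply_delta(current, delta):
    """Merge a delta batch into the current snapshot, keyed by record id.

    Hypothetical rule for this sketch: a row whose op is "delete" removes
    the key; any other op inserts or overwrites the value for that key.
    """
    merged = dict(current)  # copy so the input snapshot is left untouched
    for row in delta:
        if row["op"] == "delete":
            merged.pop(row["id"], None)
        else:
            merged[row["id"]] = row["value"]
    return merged


def test_apply_delta_upserts_and_deletes():
    # In TDD this test would be written first, then apply_delta made to pass.
    current = {1: "a", 2: "b"}
    delta = [
        {"id": 2, "op": "update", "value": "B"},
        {"id": 3, "op": "insert", "value": "c"},
        {"id": 1, "op": "delete"},
    ]
    assert apply_delta(current, delta) == {2: "B", 3: "c"}
    assert current == {1: "a", 2: "b"}  # original snapshot is not mutated
```

The test uses a small, hand-built fixture rather than production data. This is one common way to sidestep the large-data-volume problem, but it only covers the cases someone thought to write down.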