PhD Defense Slides - Automatic Non-functional Testing and Tuning of Configurable Generators

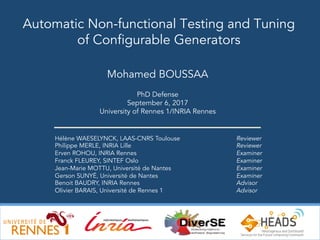

- 1. Automatic Non-functional Testing and Tuning of Configurable Generators. Mohamed BOUSSAA. PhD Defense, September 6, 2017, University of Rennes 1/INRIA Rennes. Jury: Hélène WAESELYNCK, LAAS-CNRS Toulouse; Philippe MERLE, INRIA Lille; Erven ROHOU, INRIA Rennes; Franck FLEUREY, SINTEF Oslo; Jean-Marie MOTTU, Université de Nantes; Gerson SUNYÉ, Université de Nantes; Benoit BAUDRY, INRIA Rennes; Olivier BARAIS, Université de Rennes 1. Roles as listed on the slide: Reviewer, Reviewer, Examiner, Examiner, Examiner, Examiner, Advisor, Advisor.

- 2. Context

- 3. Context. Hardware innovation, hardware heterogeneity: new CPU architectures and ISAs; 2x faster, smaller; cheaper, more capable, etc. Examples: Arduino UNO (ATmega328, 8-bit, 16 MHz); Intel Edison (Atom 2 500 MHz, 1 GB); Espressif ESP8266 (32-bit, 80 MHz, WiFi); Raspberry Pi (B+/2) (ARM v7, 700 MHz). Software innovation, software diversity: new programming languages; software platforms; execution environments. -> Building software in a heterogeneous environment is complex.

- 4. Generative software development: code generation from DSLs, GPLs, GUIs, models, and SPECs (design, runtime). Develop the code easily and rapidly; handle the heterogeneity of target software/hardware platforms by automatically generating code.

- 5. Automatic code generation, a highly configurable process: high-level program specification, generators (templates, configurations, files, flags), GPLs, machine code.

- 6. Automatic code generation, a highly configurable process: HAXE programs, GPL variants per target platform, machine code.

- 7. Automatic code generation, a highly configurable process: HAXE programs, GPL variants, C code, machine code variants obtained with optimisation flags (e.g., CFLAGS): -Os, -ftree-vectorize, -Og, -O3, -Ofast, -O2.

- 8. Automatic code generation, a highly configurable process: high-level program specification, generators (templates, configurations, files, flags), GPLs. Generated code must be effectively tested.

- 9. Automatic code generation, a highly configurable process: high-level program specification, generators (templates, configurations, files, flags), GPLs. Tests pass: no bugs in generators. "I will never write code again!"

- 10. Automatic code generation, a highly configurable process: high-level program specification, generators (templates, configurations, files, flags), GPLs. Tests fail: bugs must be fixed! "I will never use generators again!"

- 11. Automatic code generation, a highly configurable process: high-level program specification, generators (templates, configurations, files, flags), GPLs. Tests pass, but what about the non-functional properties (quality) of generated code? "It is too slow!" "I am running out of memory."

- 12. Non-functional testing and tuning of generators. Generator experts (build and maintain): "I need to evaluate the quality of generated code. How can I automatically detect the non-functional issues?" Generator users (use and configure): "I need to produce more efficient code. Turn on optimisations? Which configuration should I select?"

- 13. Challenges. Generator experts (build and maintain): "I need to automatically evaluate the quality of generated code. How can I detect non-functional issues?" Generator users (use and configure): "I need to produce more efficient code. Turn on optimisations? Which configuration should I select?" Non-functional testing of generators: the oracle problem. Auto-tuning configurable generators: a huge configuration space. Collecting the non-functional metrics: handling the diversity of software and hardware platforms.

- 14. Related work. Testing generators: functional testing with executable models and differential testing [Conrad et al. ’10, Stuermer et al. ’07]. These do not address the NF properties. Auto-tuning generators: auto-tuning as a mono-objective optimization [Bashkansky et al. ’07, Stephenson et al. ’03]; the phase ordering problem [Kulkarni et al. ’06, Cooper et al. ’99]; predicting optimizations as a machine learning optimization [Fursin et al. ’11]; conflicting objectives as a multi-objective optimization [Hoste et al. ’08, Martinez et al. ’14]. These do not exploit recent advances in SBSE (e.g., diversity-based exploration).

- 16. Contributions. Contribution I: an automatic non-functional testing approach, for generator experts (build/maintain) to identify code generator issues. Contribution II: an auto-tuning approach, for generator users (use/configure) to select the best configuration. Contribution III: a lightweight environment for monitoring and testing the generated code.

- 17. Contribution I: Automatic non-functional testing of code generators https://testingcodegenerators.wordpress.com/

- 18. Non-functional testing of source code generators: high-level program specification, generators (templates, configurations, files, flags), GPLs, machine code.

- 20. Code generator families. Definition (code generator family): a set of code generators that take as input the same language/model and generate code for different target software platforms. Idea: starting from a high-level program specification and test cases, compare the non-functional behavior of programs generated from the same code generator family.

- 21. Leveraging metamorphic testing to automatically detect inconsistencies. Metamorphic testing [1] (MT): oracles can be derived from properties of the system under test; MT exploits the relation between the inputs and outputs of special test cases of the system under test to derive metamorphic relations (MRs), defined as test oracles for new test cases. Diagram: original test cases and their outputs, derive the metamorphic relation, verify it on new test cases and their outputs. [1] Chen et al., Metamorphic testing: a new approach for generating next test cases, University of Science and Technology, Hong Kong, 1998.

- 22. Metamorphic Relation (MR). The MR is equivalent to saying: if a set of functionally equivalent programs (P1(ti), P2(ti), ..., Pn(ti)) is generated using the same code generator family and run with the same input test suite ti, then the variation among their non-functional outputs (e.g., execution time, memory usage) should not exceed a specific threshold value T.
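
A minimal Python sketch of how such a relation could be checked for one test suite. The slide does not give the variation formula, so the range (max minus min) is used here as an assumption; the function names, the measurements, and the threshold value are all hypothetical.

```python
from typing import Sequence


def variation(outputs: Sequence[float]) -> float:
    """Assumed variation measure: range (max - min) of the non-functional outputs."""
    return max(outputs) - min(outputs)


def satisfies_mr(outputs: Sequence[float], threshold: float) -> bool:
    """The MR holds when the variation does not exceed the threshold T."""
    return variation(outputs) <= threshold


# Hypothetical execution times (seconds) of functionally equivalent programs
# generated for five targets from the same input program and test suite.
times = {"java": 1.2, "js": 1.5, "cpp": 1.0, "cs": 1.4, "php": 9.8}

mr_holds = satisfies_mr(list(times.values()), threshold=2.0)
print(mr_holds)  # False: the PHP variant deviates far more than T allows
```

A violated relation only flags an inconsistency for this test suite; deciding whether it is a real code generator issue still requires manual analysis.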

- 23. Statistical methods. We propose two variation analysis approaches to define the threshold value. R-Chart (Range Chart): evaluate the variation as a range R (Max - Min); control limits (LCL and UCL) represent the limits of variation that should be expected from a process: LCL < R < UCL. PCA (Principal Component Analysis): a multivariate statistical approach; reduce the dimensionality of the original data to two dimensions (PC1 & PC2); a score distance SD measures how far an observation lies from the rest of the data within the PCA subspace; observations whose SD is higher than the cutoff value, the 97.5% quantile Q of the chi-square distribution, are detected as outliers.
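
The R-chart idea can be sketched in a few lines of Python. This is an illustration under assumptions, not the thesis implementation: it uses the textbook control-chart constants D3 = 0 and D4 = 2.114 for subgroups of size 5 (one value per target language), and the measurements are invented.

```python
from statistics import mean

# Standard R-chart constants for subgroups of size 5.
D3, D4 = 0.0, 2.114


def r_chart_limits(subgroups):
    """Return (LCL, UCL, ranges), where each range is max - min of one subgroup."""
    ranges = [max(group) - min(group) for group in subgroups]
    r_bar = mean(ranges)
    return D3 * r_bar, D4 * r_bar, ranges


def out_of_control(subgroups):
    """Indices of subgroups whose range falls outside [LCL, UCL]."""
    lcl, ucl, ranges = r_chart_limits(subgroups)
    return [i for i, r in enumerate(ranges) if r < lcl or r > ucl]


# Hypothetical normalized execution times: one row per test suite,
# one column per target language.
suites = [
    [1.0, 1.2, 1.5, 1.8, 2.0],
    [2.0, 2.5, 2.2, 2.9, 3.0],
    [1.0, 1.1, 1.3, 1.6, 2.0],
    [3.0, 3.5, 3.2, 3.9, 4.0],
    [1.5, 1.7, 2.0, 2.2, 2.5],
    [1.0, 1.3, 1.2, 1.4, 11.0],
]

print(out_of_control(suites))  # [5]: only the last suite exceeds the UCL
```

Here the mean range is 2.5, so UCL = 2.114 × 2.5 = 5.285, and the last suite's range of 10 is flagged.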

- 24. Metamorphic testing process: input program + test cases I(ti) -> code generator family -> generated programs + test cases P1(ti), P2(ti), ..., Pn(ti) -> execution -> non-functional outputs (execution time or memory usage) f(P1(ti)), f(P2(ti)), ..., f(Pn(ti)) -> MR verification -> detect inconsistencies; repeat.

- 25. Evaluation. RQ: "How effective is our metamorphic testing approach to automatically detect inconsistencies in code generator families?" Experimental setup: 1 code generator family (Haxe code generators, five target programming languages: JAVA, JS, C++, C#, and PHP); 2 non-functional metrics (performance and memory usage); 7 benchmark libraries.

- 26. R-chart results, performance variations: 11 performance inconsistencies are identified

- 27. R-chart results, memory variations: 15 memory usage inconsistencies are identified

- 28. PCA results, performance variations: 4 performance inconsistencies are identified. Detected outliers (values per target as shown on the plot): (JAVA: 4.1, JS: 1, CPP: 5.4, C#: 5.7, PHP: 481.6); (JAVA: 1.28, JS: 2.9, CPP: 1, C#: 3.3, PHP: 261.3); (JAVA: 1.1, JS: 2.7, CPP: 1, C#: 3.6, PHP: 258.9); (JAVA: 1, JS: 12, CPP: 3.1, C#: 4, PHP: 80). Plot: test suites projected on PC1 and PC2.

- 29. PCA results, memory variations: 4 memory usage inconsistencies are identified. Detected outliers (values per target as shown on the plot): (JAVA: 250.7, JS: 71.7, CPP: 1, C#: 69.9, PHP: 454.1); (JAVA: 11.9, JS: 1, CPP: 14.6, C#: 36.1, PHP: 620.2); (JAVA: 214.5, JS: 92.4, CPP: 1, C#: 57.6, PHP: 224.4); (JAVA: 1.2, JS: 1, CPP: 1.7, C#: 3.6, PHP: 675). Plot: test suites projected on PC1 and PC2.

- 30. Analysis. For Core_TS4 in PHP: we observe the intensive use of « arrays »; arrays in PHP are allocated dynamically, leading to a slower writing speed; we replace « arrays » by « SplFixedArray » ⇒ speedup x5 ⇒ memory usage reduction x2 ⇒ issue fixed by the Haxe community. Key findings: the lack of use of specific types that exist in the standard library shows a real impact on the non-functional behavior of generated code.

- 31. Conclusion § A non-functional metamorphic relation is used to detect code generator inconsistencies − Two statistical methods are applied to find the right MR definition § The evaluation results show that: − 11 performance and 15 memory usage inconsistencies violate the metamorphic relation for Haxe code generators − The analysis of test suites triggering the inconsistencies shows that there exist potential issues in some code generators, affecting the quality of delivered software

- 32. Contribution II: NOTICE: An approach for auto-tuning generators https://noticegcc.wordpress.com

- 33. Auto-tuning compilers: high-level program specification, generators, GPLs, optimisation flags (e.g., CFLAGS), machine code.

- 34. Motivating example. GCC 4.8.4: 78 optimizations, 2^78 combinations. Objectives: speedup, memory, etc., under resource constraints. BOSS: "Clients complain about the high memory consumption." BOSS: "Is it possible to consume less CPU? We don't have enough resources/money." BOSS: "Please, can we optimize even more? Good luck, son!" "WHY ALWAYS ME!!" Testing each optimization configuration is impossible; heuristics are needed.

- 35. Compiler auto-tuning is complex. Constructing a good set of optimization levels (-Ox) is hard: conflicting objectives; complex interactions; unknown effect of some optimizations.

- 36. Contribution. We propose a novel formulation of the compiler optimization problem using Novelty Search [1]: diverse optimization sequences; explore the large search space by considering novelty as the main objective. [1] Lehman et al., Exploiting Open-Endedness to Solve Problems Through the Search for Novelty. In ALIFE 2008.

- 37. Diversity-based exploration. Solution representation: a bit vector (e.g., 0 1 1 1 0 …) encoding a command such as gcc -c test.c -fno-dce -fno-dse -fno-align-loops …. Step 1: Random generation. Step 2: Evaluation with the novelty metric: calculate the distance of one solution from its K nearest neighbors in the current population and in the archive. The archive saves solutions that get a novelty metric value higher than a specific novelty threshold value. Step 3: Selection (tournament selection): select solutions to evolve based on novelty scores. Step 4: Evolutionary operators (mutation and crossover), then go to Step 2. Best solution: the solution with the best non-functional improvement.
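
The loop on this slide can be sketched as a toy Python program. Everything specific here is an assumption made for illustration: the Hamming distance, the constants, the operator details, and above all `toy_improvement`, a stand-in for compiling with the encoded flags and measuring a real non-functional metric.

```python
import random

NUM_FLAGS = 8            # toy size; the deck cites 78 optimizations for GCC 4.8.4
K = 3                    # assumed number of nearest neighbors
NOVELTY_THRESHOLD = 2.0  # assumed archive admission threshold


def hamming(a, b):
    """Number of positions where two bit vectors differ."""
    return sum(x != y for x, y in zip(a, b))


def novelty(candidate, others, k=K):
    """Average distance from the candidate to its k nearest neighbors."""
    nearest = sorted(hamming(candidate, o) for o in others)[:k]
    return sum(nearest) / len(nearest) if nearest else 0.0


def mutate(bits, rng, rate=0.1):
    """Flip each bit independently with the given probability."""
    return [1 - b if rng.random() < rate else b for b in bits]


def crossover(a, b, rng):
    """Single-point crossover of two parents."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]


def tournament(population, scores, rng, size=2):
    """Pick the most novel individual among `size` random contenders."""
    contenders = rng.sample(range(len(population)), size)
    return population[max(contenders, key=lambda i: scores[i])]


def toy_improvement(bits):
    """Hypothetical stand-in for a measured non-functional improvement."""
    return sum(bits)


def novelty_search(generations=20, pop_size=10, seed=0):
    rng = random.Random(seed)
    # Step 1: random generation
    population = [[rng.randint(0, 1) for _ in range(NUM_FLAGS)] for _ in range(pop_size)]
    archive = []
    best = max(population, key=toy_improvement)
    for _ in range(generations):
        # Step 2: evaluation against the rest of the population and the archive
        scores = [
            novelty(ind, population[:i] + population[i + 1:] + archive)
            for i, ind in enumerate(population)
        ]
        for ind, score in zip(population, scores):
            if score > NOVELTY_THRESHOLD and ind not in archive:
                archive.append(ind)
        best = max(population + [best], key=toy_improvement)
        # Steps 3 and 4: novelty-based selection, then crossover and mutation
        population = [
            mutate(crossover(tournament(population, scores, rng),
                             tournament(population, scores, rng), rng), rng)
            for _ in range(pop_size)
        ]
    best = max(population + [best], key=toy_improvement)
    return best, archive


best, archive = novelty_search()
print(best, len(archive))
```

Selection is driven only by novelty scores; the non-functional measure is used solely to remember the best solution seen, which mirrors the separation between exploration and reporting described on the slide.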

- 38. Evaluation. Programs under test: Csmith code generator; 111 Csmith generated programs; 6 Cbench benchmark programs. Evolutionary algorithms: mono-objective: Novelty Search (NS), Genetic Algorithm (GA), Random Search (RS); multi-objective: Novelty Search (NS-II), NSGA-II. Compiler under test: GCC 4.8.4. Evaluation metrics (over -O0): Speedup (S); Memory/CPU Consumption Reduction (MR and CR); tradeoff <Speedup – Memory usage>.

- 39. Research Questions. RQ1: Mono-objective SBSE validation (mono-objective search over optimizations, a non-functional metric, and training set programs -> best sequence). RQ2: Sensitivity of input programs to optimization sequences (best sequence in RQ1 applied to unseen programs -> non-functional improvement). RQ3: Impact of speedup on resource consumption (best speedup sequence in RQ1 applied to training set programs -> impact on resource consumption). RQ4: Trade-offs between non-functional properties (multi-objective search over optimizations, a non-functional trade-off <time-memory>, and an input program -> Pareto front solutions).

- 40. RQ1 results. RQ1: Mono-objective SBSE validation. Training set: 10 Csmith programs; average S, MR, and CR; comparison: Ox, RS, GA, and NS. Setup: search for the best optimization sequence given the optimizations, a non-functional metric, and the training set programs. Key findings for RQ1: best discovered optimization sequences using mono-objective search techniques always provide better results than standard GCC optimization levels; Novelty Search is a good candidate to improve code in terms of non-functional properties since it is able to discover optimization combinations that outperform RS and GA.

- 41. RQ2 results. RQ2: Sensitivity. 100 unseen Csmith programs; O2 vs O3 vs NS. Setup: apply the best sequence in RQ1 to unseen programs and measure the non-functional improvement. Key findings for RQ2: it is possible to build general optimization sequences that perform better than standard optimization levels; best discovered sequences in RQ1 can be mostly used to improve the memory and CPU consumption of Csmith programs. To answer RQ2, Csmith programs are sensitive to compiler optimizations.

- 42. RQ3 results. RQ3: Impact of optimizations on resource consumption. Ox vs RS vs GA vs NS. Setup: apply the best speedup sequence in RQ1 to training set programs and measure the impact on resources (CPU and memory). Figure labels: memory reduction, CPU reduction, increase of resource usage. Key findings for RQ3: optimizing software performance can induce undesirable effects on system resources; a trade-off is needed to find a correlation between software performance and resource usage.

- 43. RQ4 results. RQ4: Trade-offs between non-functional properties. 1 Csmith program; trade-off <execution time-memory usage>. Setup: multi-objective search over optimizations for one input program, yielding Pareto front solutions. Figure legend: Pareto front NS-II (multi-objective); Pareto front NSGA-II (multi-objective); Ofast, O3, O2, O1; best CPU reduction (mono-objective); best memory reduction (mono-objective). Key findings for RQ4: NOTICE is able to construct optimization levels that represent optimal trade-offs between non-functional properties; NSGA-II performs better than our NS adaptation for multi-objective optimization. However, NS-II performs clearly better than standard GCC optimizations and previously discovered sequences in RQ1.

- 44. Conclusion § Novel formulation of the compiler optimization problem based on Novelty Search § Novelty Search is able to generate effective optimizations − Generated sequences perform better than standard levels − Our approach outperforms classical approaches (GA and RS) § Trade-offs between non-functional properties are constructed − NSGA-II performs better than NS and mono-objective approaches

- 45. Contribution III: A lightweight environment for monitoring and testing the generated code

- 46. Challenges (high-level program specification, generators with templates, configurations, files, flags, GPLs, machine code). Heterogeneity: monitoring the resource usage of generated code; control and limit resources. Reproducibility: reproduce tests across different environment settings. Efficiency: automatically deploy the generated code without affecting the system performance.

- 47. Infrastructure overview. We propose a micro-service infrastructure, based on system containers (Docker) as execution platforms, that allows generator experts/users to evaluate the non-functional properties of generated code. Diagram: code generation (code generators A, B, C each generate a SUT in containers A, B, C); code execution (containers A’, B’, C’); runtime monitoring engine (HTTP requests, resource usage extraction of footprints A’, B’, C’, resource usage DB).

- 48. Microservice-based infrastructure. Execute and monitor the generated code using system containers: different configurations, instances, images, machines, etc.; resource isolation and management; less performance overhead.

- 49. Monitoring environment. Diagram: the software tester triggers code generation + compilation; the component under test runs (running…); the monitoring component reads the cgroup file systems (CPU, memory, …) and produces monitoring records; the back-end database component is a time-series database reached through HTTP requests; the front-end is a visualization component. The port number 8086 appears on the slide.
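
As a rough Python illustration of the "cgroup file systems" step, the sketch below reads the memory usage of one container. It assumes the cgroup v1 layout commonly used by Docker with the cgroupfs driver (`/sys/fs/cgroup/memory/docker/<container id>/memory.usage_in_bytes`); the function names, the record shape, and the container id are hypothetical, and the deck does not say which monitoring tool performs this step.

```python
import tempfile
import time
from pathlib import Path

# Assumed default location of Docker memory cgroups (cgroup v1, cgroupfs driver).
DEFAULT_CGROUP_ROOT = Path("/sys/fs/cgroup/memory/docker")


def read_memory_usage(container_id: str, cgroup_root: Path = DEFAULT_CGROUP_ROOT) -> int:
    """Return the current memory usage of a container, in bytes."""
    usage_file = cgroup_root / container_id / "memory.usage_in_bytes"
    return int(usage_file.read_text().strip())


def to_record(container_id: str, usage_bytes: int, timestamp: float) -> dict:
    """Shape one monitoring record (hypothetical schema) for a time-series store."""
    return {"container": container_id, "memory_bytes": usage_bytes, "time": timestamp}


# Demo against a fake cgroup tree, so the sketch runs without Docker.
with tempfile.TemporaryDirectory() as tmp:
    fake_container = Path(tmp) / "abc123"
    fake_container.mkdir()
    (fake_container / "memory.usage_in_bytes").write_text("1048576\n")
    demo_usage = read_memory_usage("abc123", cgroup_root=Path(tmp))

demo_record = to_record("abc123", demo_usage, time.time())
print(demo_record["memory_bytes"])  # 1048576
```

Reading the counters from the host side keeps the measurement outside the container, which is consistent with the low-overhead goal stated for the infrastructure.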

- 50. Conclusion § Effective support for automatically deploying, executing, and testing the generated code in different environment settings § The conducted experiments showed the usefulness of this infrastructure for tuning and testing generators

- 52. Conclusion. Generator experts (build and maintain): "I am now able to automatically test my code generator family in terms of NFP." Generator users (use and configure): "I can now easily determine the best configuration settings for my generator." Effective support for testing and resource usage monitoring.

- 53. Perspectives. Combine the proposed black-box approach with traceability tools: tracking the source of code generator inconsistencies. Reduce the time required to tune and test generators: deploy tests on many nodes in the cloud using multiple containers in parallel. Automatic test case generation: test amplification; evaluate the quality of executed tests (e.g., code coverage). Improve the auto-tuning approach: evaluate other compilers (e.g., LLVM, Clang); explore more tradeoffs among resource usage metrics; evaluate different hardware settings.

- 54. Publications • Mohamed Boussaa, Olivier Barais, Benoit Baudry, Gerson Sunyé: Automatic Non-functional Testing of Code Generators Families. In The 15th International Conference on Generative Programming: Concepts & Experiences (GPCE 2016), Amsterdam, Netherlands, October 2016. • Mohamed Boussaa, Olivier Barais, Benoit Baudry, Gerson Sunyé: NOTICE: A Framework for Non-functional Testing of Compilers. In 2016 IEEE International Conference on Software Quality, Reliability & Security (QRS 2016), Vienna, Austria, August 2016. • Mohamed Boussaa, Olivier Barais, Benoit Baudry, Gerson Sunyé: A Novelty Search-based Test Data Generator for Object-oriented Programs. In Genetic and Evolutionary Computation Conference Companion (GECCO 2015), Madrid, Spain, July 2015. • Mohamed Boussaa, Olivier Barais, Benoit Baudry, Gerson Sunyé: A Novelty Search Approach for Automatic Test Data Generation. In 8th International Workshop on Search-Based Software Testing (SBST@ICSE 2015), Florence, Italy, May 2015. Under review: • Mohamed Boussaa, Olivier Barais, Benoit Baudry, Gerson Sunyé: Leveraging Metamorphic Testing to Automatically Detect Inconsistencies in Code Generator Families. IEEE Transactions on Reliability, August 2017.

- 55. Thank you for your attention

- 56. ThingML case study: 3 targets (C, JAVA, JS); 120 test cases; memory usage; 2 identified inconsistencies

- 58. NSGA-II overview (I). NSGA-II: Non-dominated Sorting Genetic Algorithm (K. Deb et al., ’02). Parent population + offspring population -> non-dominated sorting (F1, F2, F3, F4) -> crowding distance sorting -> population in next generation. MOEA Framework: http://moeaframework.org/
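
The two sorting steps named on this slide can be sketched in plain Python. This is a simplified illustration, not the MOEA Framework code and not Deb's bookkeeping-optimized "fast" sort: fronts are peeled off by repeated pairwise dominance checks, both objectives are assumed to be minimized, and the (time, memory) points are invented.

```python
def dominates(a, b):
    """a dominates b if it is no worse on every objective and better on at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_sort(points):
    """Split point indices into fronts F1, F2, ... by repeatedly peeling off
    the points that no remaining point dominates."""
    remaining = list(range(len(points)))
    fronts = []
    while remaining:
        front = [
            i for i in remaining
            if not any(dominates(points[j], points[i]) for j in remaining if j != i)
        ]
        fronts.append(front)
        remaining = [i for i in remaining if i not in front]
    return fronts


def crowding_distance(points, front):
    """Crowding distance of each index in one front; boundary points get infinity."""
    distance = {i: 0.0 for i in front}
    for m in range(len(points[0])):
        ordered = sorted(front, key=lambda i: points[i][m])
        low, high = points[ordered[0]][m], points[ordered[-1]][m]
        distance[ordered[0]] = distance[ordered[-1]] = float("inf")
        if high == low:
            continue
        for prev, cur, nxt in zip(ordered, ordered[1:], ordered[2:]):
            distance[cur] += (points[nxt][m] - points[prev][m]) / (high - low)
    return distance


# Hypothetical (execution time, memory usage) pairs for five optimization sequences.
points = [(1.0, 5.0), (2.0, 3.0), (4.0, 1.0), (3.0, 4.0), (5.0, 5.0)]

fronts = non_dominated_sort(points)
first_front_distances = crowding_distance(points, fronts[0])
print(fronts)  # [[0, 1, 2], [3], [4]]
```

The next generation is then filled front by front; when a front does not fit entirely, its members with the largest crowding distance are preferred, which preserves spread along the Pareto front.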