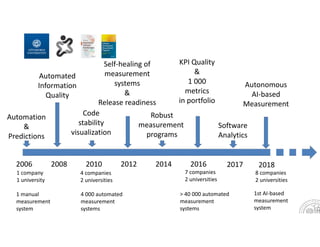

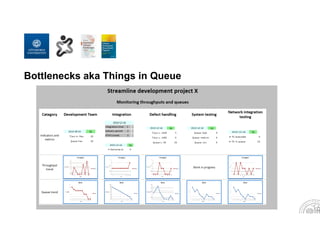

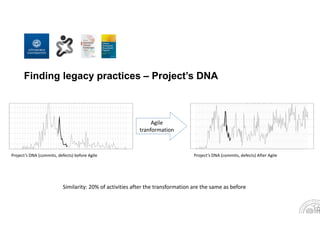

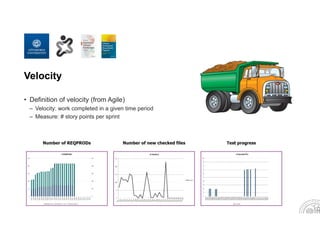

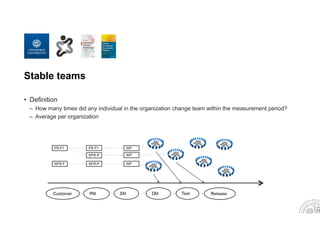

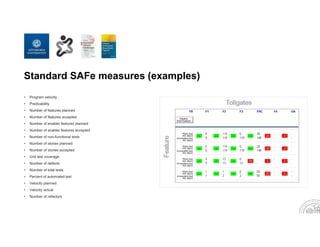

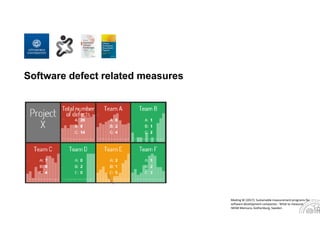

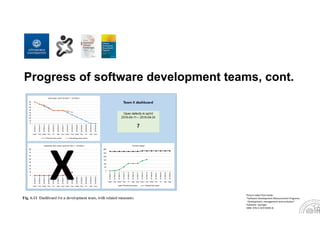

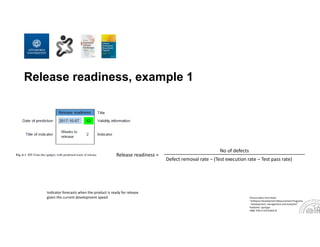

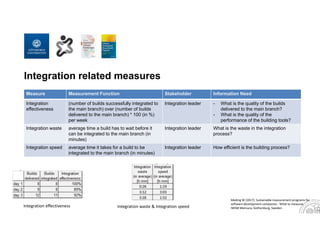

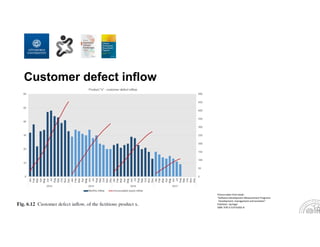

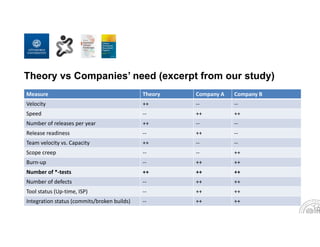

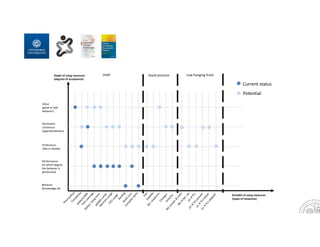

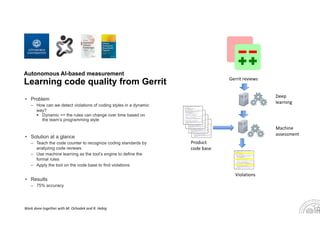

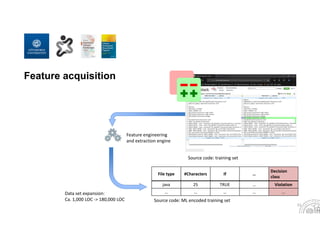

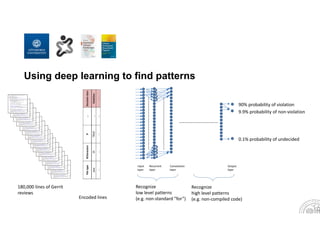

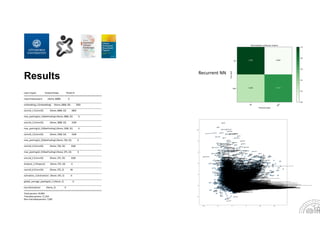

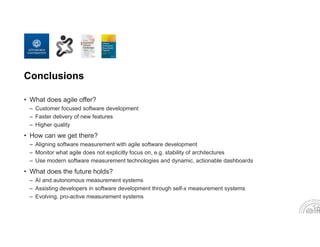

This document summarizes a presentation on measuring agile software development given by Miroslaw Staron, a professor of software engineering, and Wilhelm Meding, a senior measurement leader at Ericsson. It traces how their research evolved from manual measurement systems in 2006 to automated measurement based on artificial intelligence and machine learning. The presentation contrasts traditional measures such as velocity with newer measures such as integration effectiveness, which are more relevant to agile teams. It also covers the gap between applying agile measures in theory and in practice, as well as emerging areas such as using deep learning to detect code violations.
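Velocity is commonly defined as the number of story points a team completes per sprint, often averaged over recent sprints to smooth out noise. The presentation summary does not give a formula, so the following is only a minimal sketch under that common definition. The function name `sprint_velocity` and the default three-sprint averaging window are hypothetical choices for illustration, not the authors' method.

```python
def sprint_velocity(completed_points_per_sprint: list[int], window: int = 3) -> float:
    """Hypothetical helper: average story points completed over the last `window` sprints.

    Assumes velocity = completed story points per sprint (a common definition,
    not one taken from the presentation).
    """
    if window <= 0:
        raise ValueError("window must be a positive number of sprints")
    if not completed_points_per_sprint:
        raise ValueError("at least one sprint of data is required")
    # Keep only the most recent sprints; fewer than `window` is allowed early on.
    recent = completed_points_per_sprint[-window:]
    return sum(recent) / len(recent)


if __name__ == "__main__":
    # Four sprints of completed points; the average uses the last three (18, 25, 23).
    print(sprint_velocity([21, 18, 25, 23]))
```

A rolling average is used here because single-sprint velocity tends to fluctuate; the window size is an assumption that a team would tune to its own cadence.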