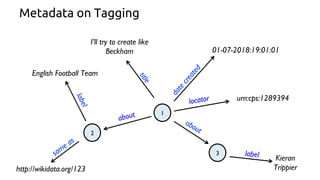

This document discusses building materialized views for linked data systems using microservices. It outlines challenges with current architectures and proposes a new one in which microservices build materialized views, each mapped closely to a particular query profile. The views would be updated via publish APIs and distributed to read APIs. Join operations could be handled at write time, at read time, or within the views themselves. Other considerations include tracking ontology changes and maintaining a single source of truth.
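As a rough illustration of views that follow query profiles, the sketch below keeps two in-memory views and updates both on publish, so that reads become plain lookups. It is only a sketch under stated assumptions: `ViewStore`, `publish`, and `articles_by_tag` are hypothetical names that do not come from the talk, and a real system would sit behind separate publish and read services.

```python
from collections import defaultdict


class ViewStore:
    """Hypothetical in-memory stand-in for a set of materialized views."""

    def __init__(self):
        # View 1: look up a document by its @id.
        self.by_id = {}
        # View 2: look up article ids by the tag they are about.
        self.article_ids_by_tag = defaultdict(set)

    def publish(self, doc):
        """Write path: update every view affected by the document."""
        previous = self.by_id.get(doc["@id"])
        if previous is not None:
            # Remove stale tag entries left by the earlier version.
            for tag_id in previous.get("about", []):
                self.article_ids_by_tag[tag_id].discard(doc["@id"])
        self.by_id[doc["@id"]] = doc
        for tag_id in doc.get("about", []):
            self.article_ids_by_tag[tag_id].add(doc["@id"])

    def articles_by_tag(self, tag_id):
        """Read path: a lookup, with no join at query time."""
        return sorted(self.article_ids_by_tag.get(tag_id, set()))
```

The trade-off in this sketch is that writes do more work (every affected view is touched) so that each read profile gets a structure shaped for it.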

![{ "@id": "urn:article:01", "about": ["urn:tag:01", "urn:tag:02", …] }
{ "@id": "urn:tag:01", "label": "Nigeria", "@type": "Place" }](https://image.slidesharecdn.com/augustine-kwamashie-connected-data-talk-181123070809/85/Building-materialised-views-for-linked-data-systems-using-microservices-27-320.jpg)

![{ "@id": "urn:article:01", "about": [{ "@id": "urn:tag:01", "label": "Nigeria", "@type": "Place" }, …] }](https://image.slidesharecdn.com/augustine-kwamashie-connected-data-talk-181123070809/85/Building-materialised-views-for-linked-data-systems-using-microservices-28-320.jpg)
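The two slide transcripts above show an article that refers to its tags by id, followed by the same article with a tag document embedded in place of the reference. A minimal sketch of that join is given below, assuming the tag documents are available in a dictionary keyed by `@id`. The function name `embed_tags` and the `tags_by_id` lookup are hypothetical and not taken from the talk. Only the identifiers visible on the slides are used, and a reference with no known tag document is left as a plain id.

```python
def embed_tags(article, tags_by_id):
    """Return a copy of the article with tag references replaced by tag documents.

    References with no matching tag document are kept as plain id strings.
    """
    joined = dict(article)
    joined["about"] = [tags_by_id.get(ref, ref) for ref in article.get("about", [])]
    return joined


# Documents in the reference form shown on the first slide.
article = {"@id": "urn:article:01", "about": ["urn:tag:01", "urn:tag:02"]}
tags_by_id = {
    "urn:tag:01": {"@id": "urn:tag:01", "label": "Nigeria", "@type": "Place"},
}

# Embedded form, as on the second slide; the source article is left unchanged.
view = embed_tags(article, tags_by_id)
```

The same function could run when a document is published (join on write) or when it is requested (join on read). Which is preferable depends on how often tags change relative to how often articles are read.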