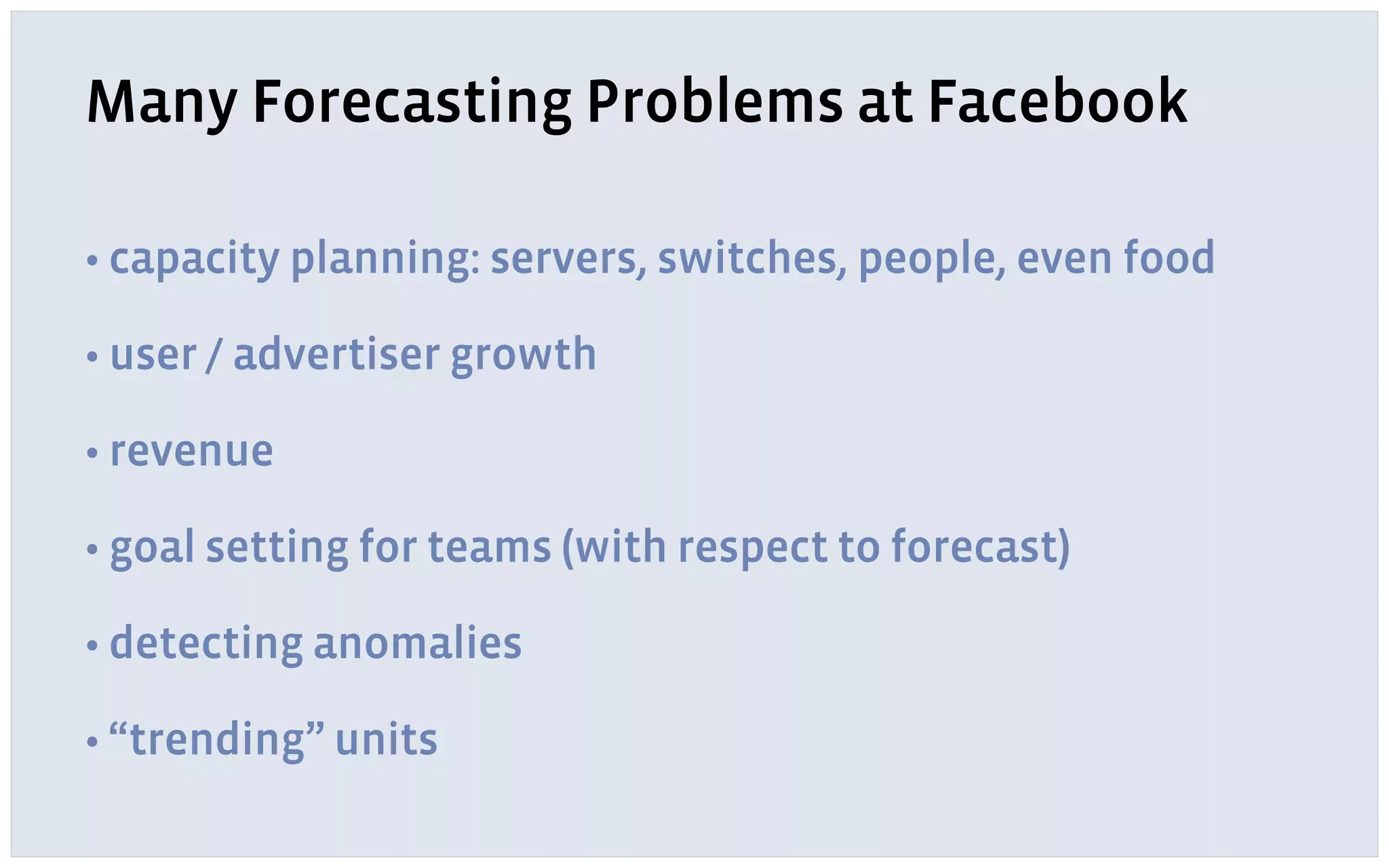

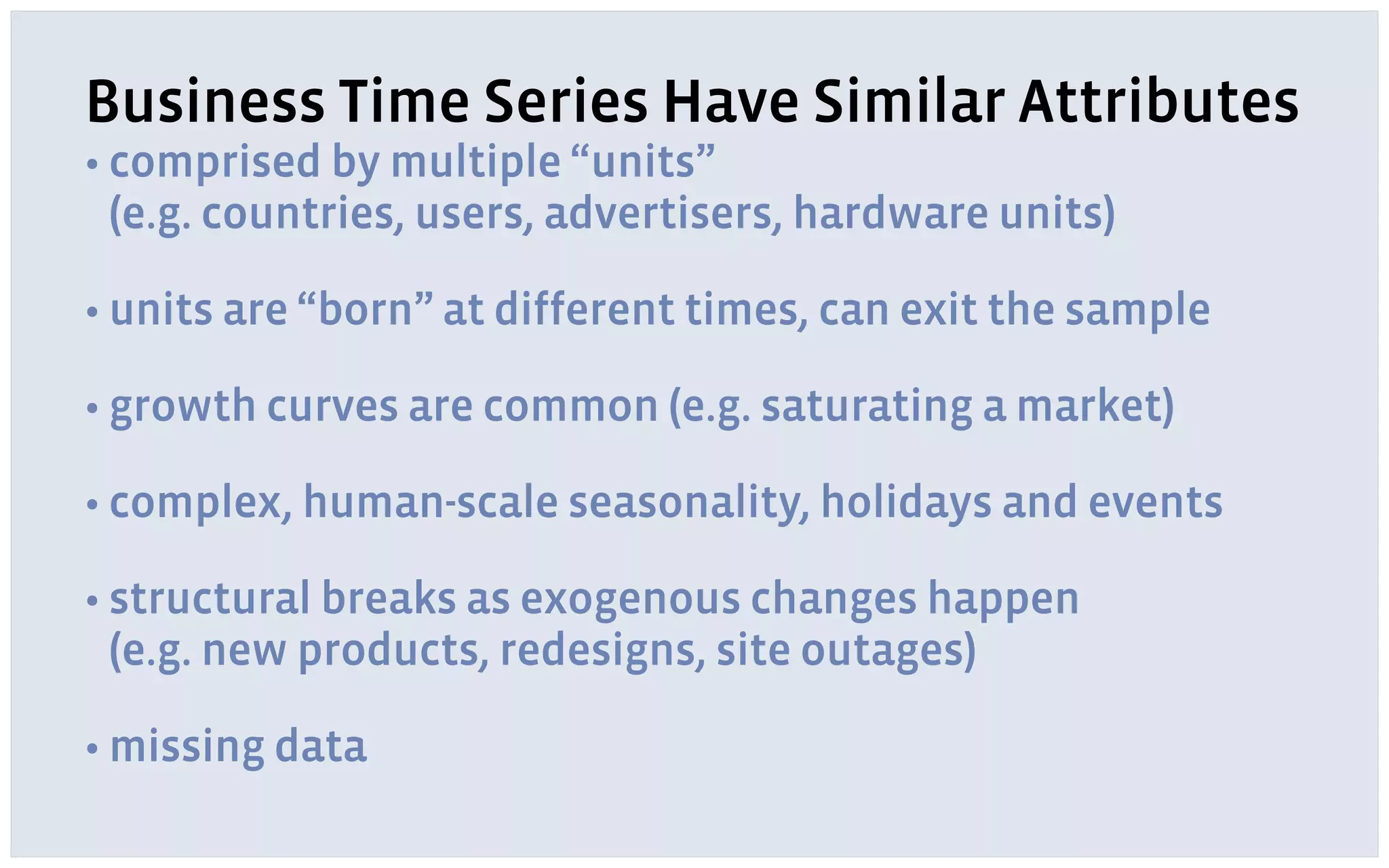

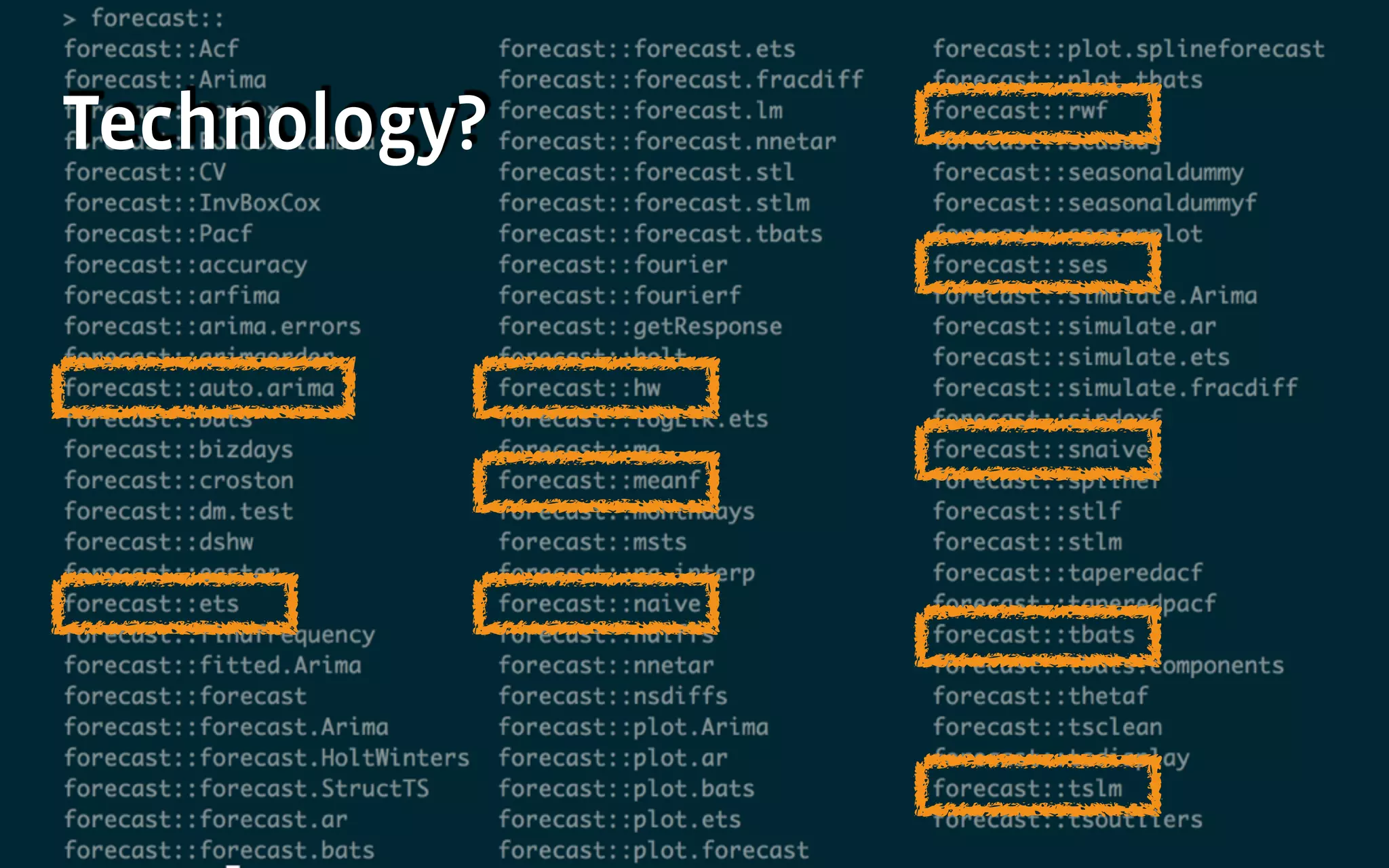

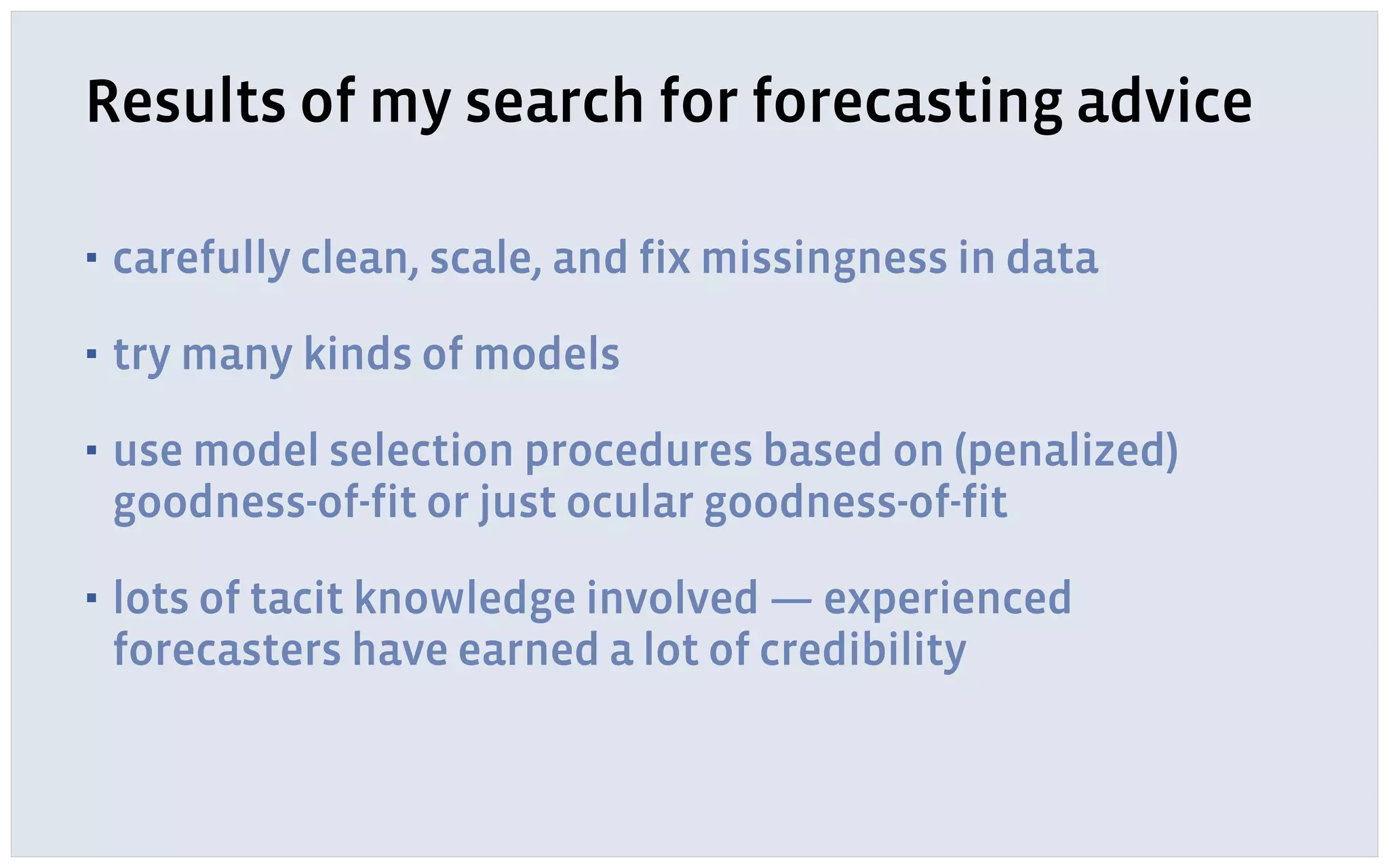

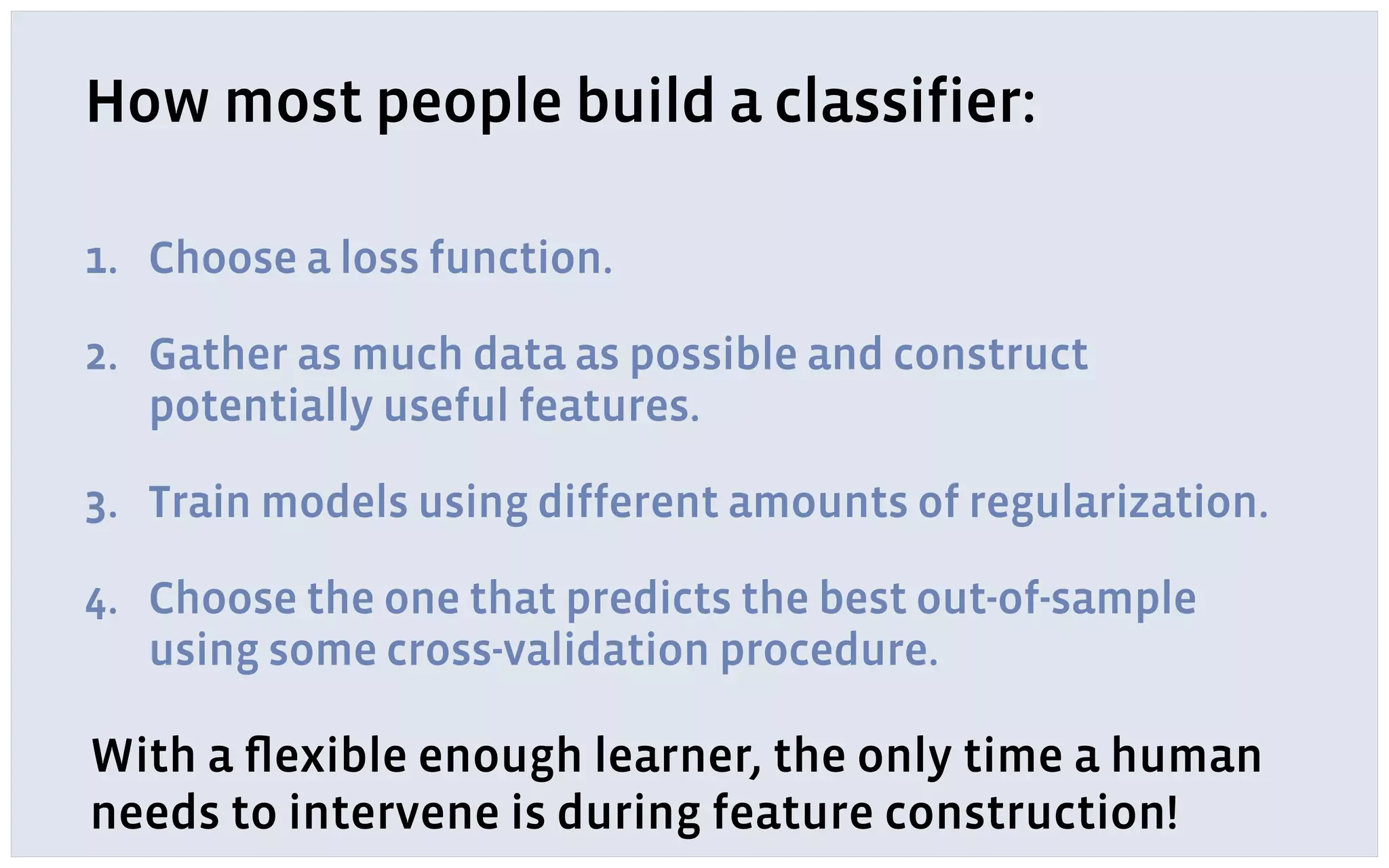

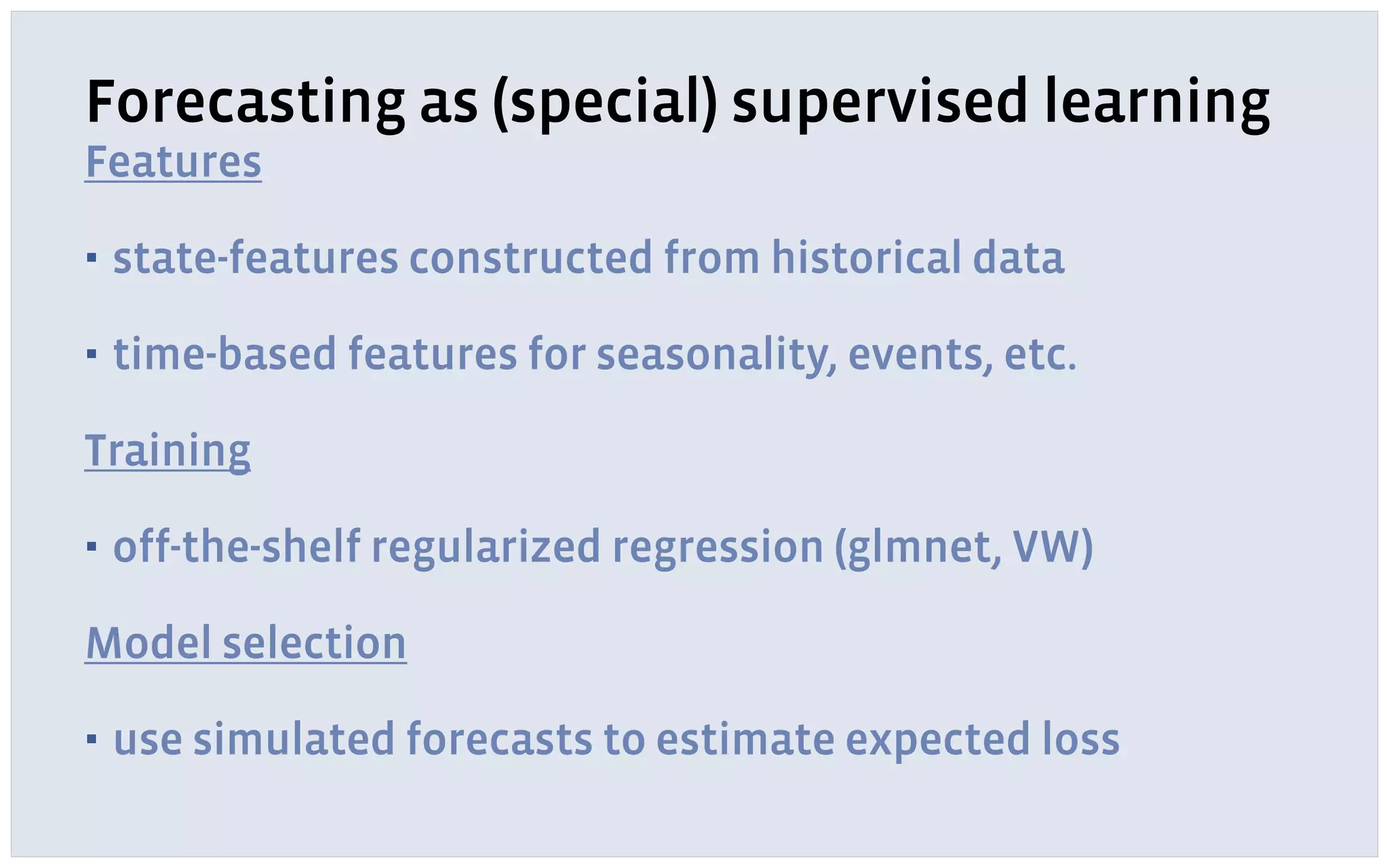

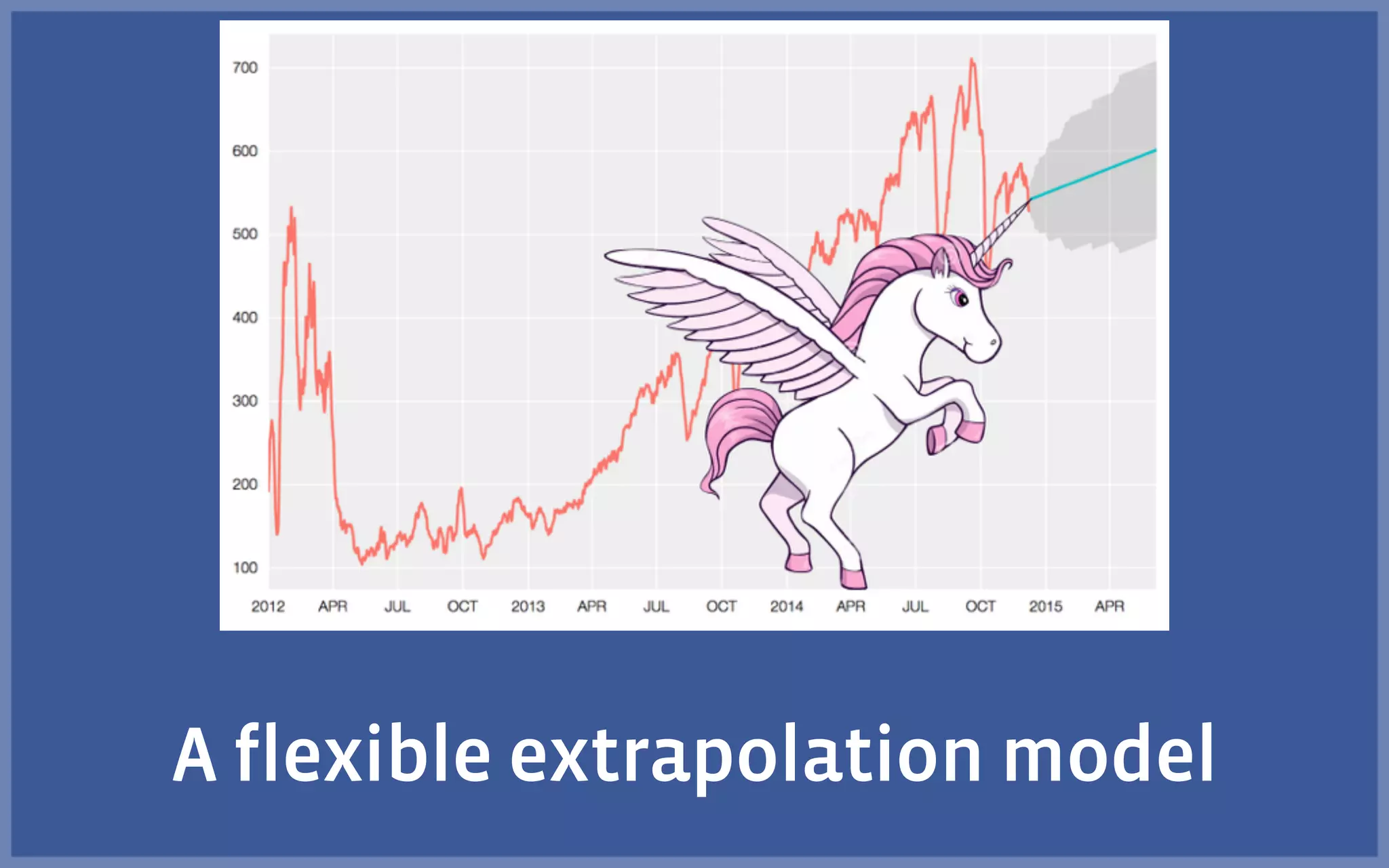

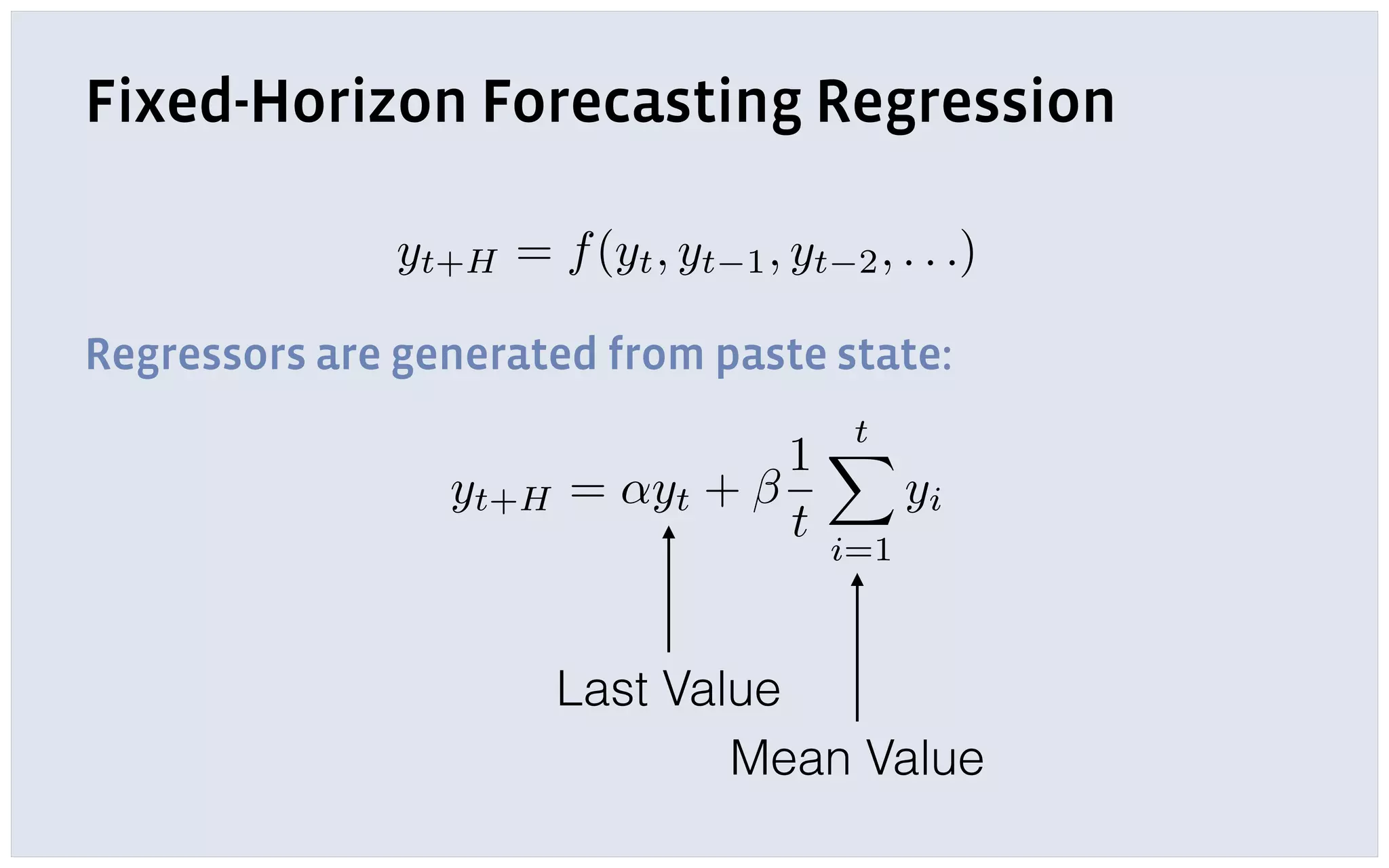

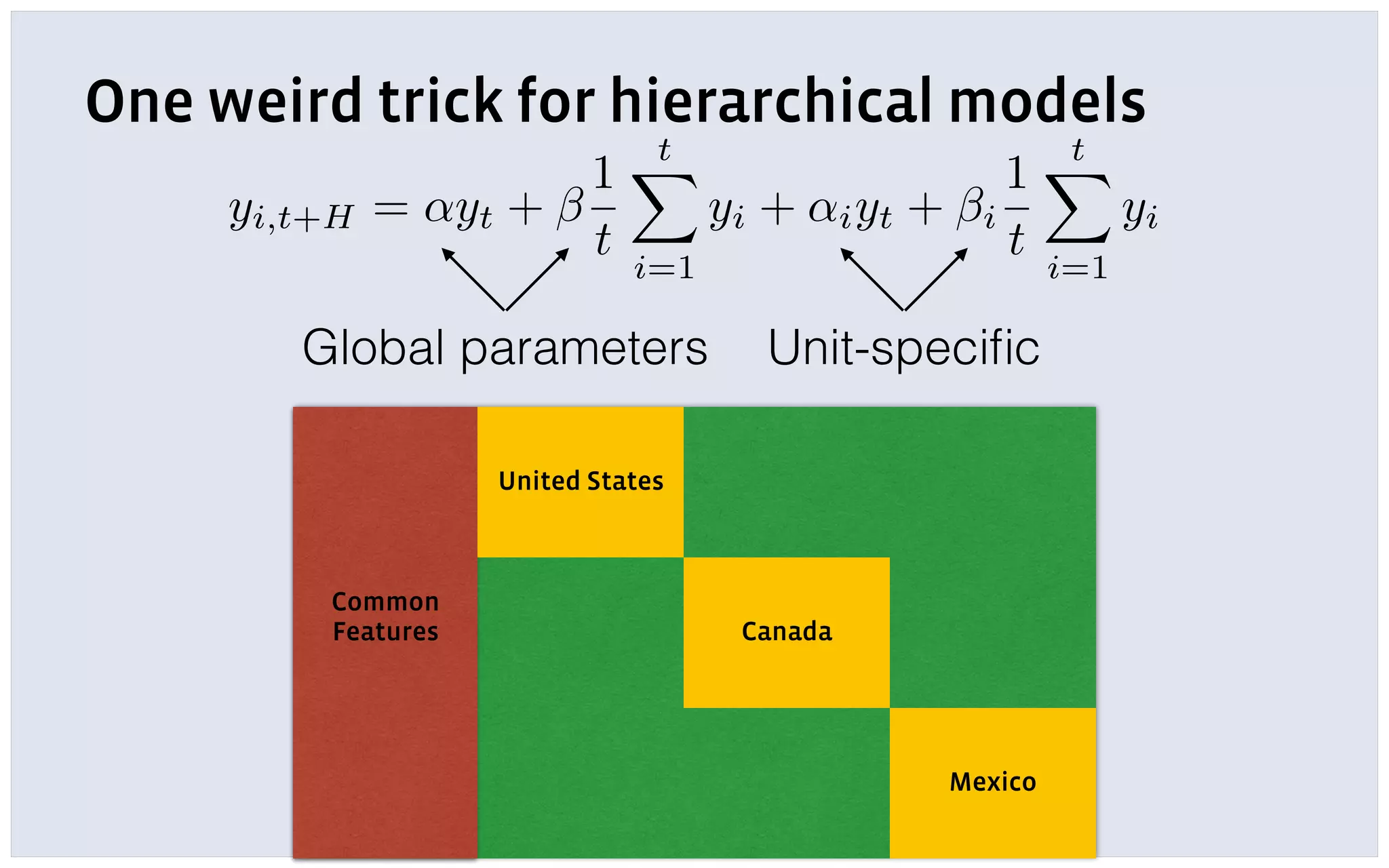

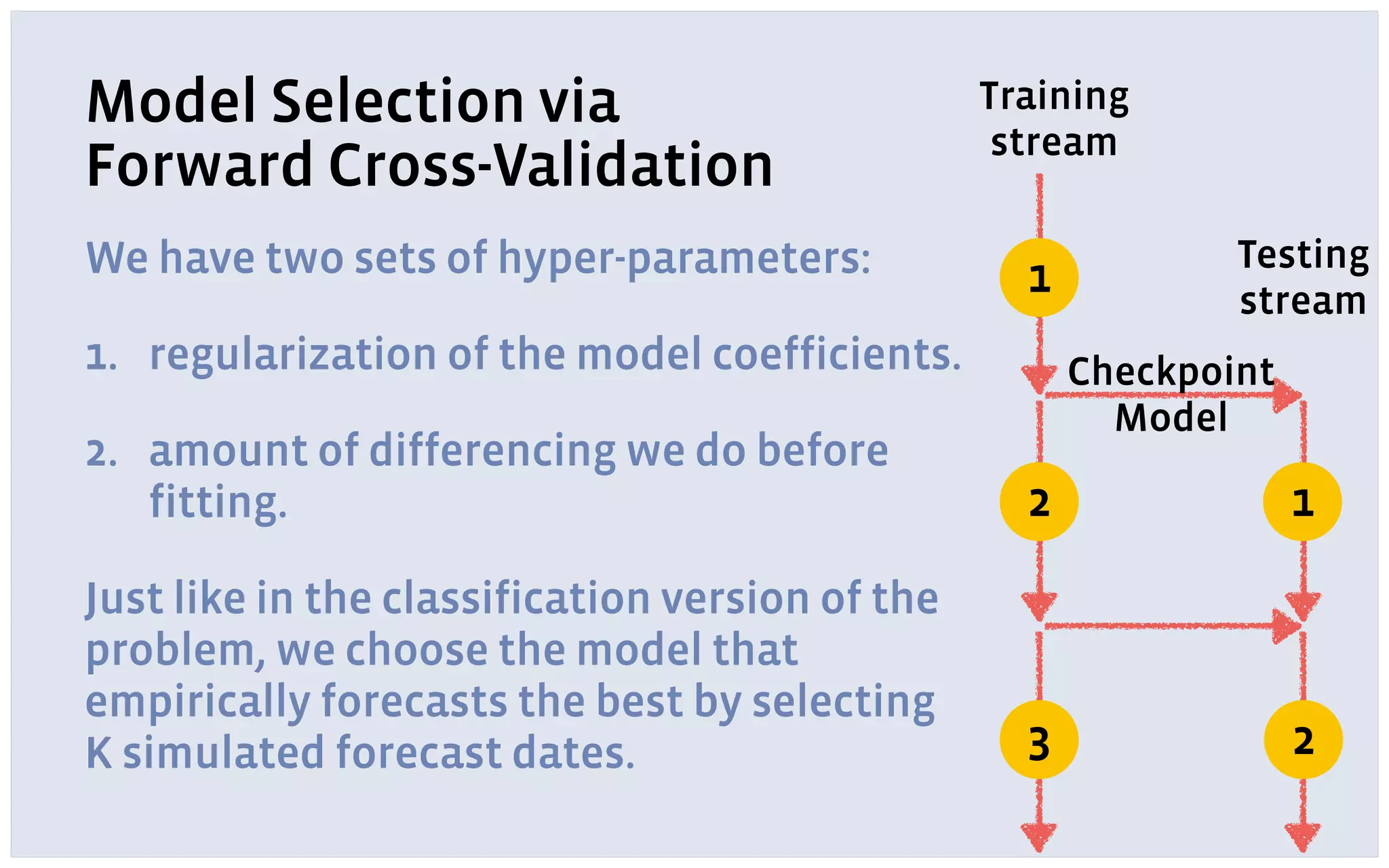

The document discusses the challenges and techniques of automatic forecasting at scale, particularly at Facebook, across forecasting problems such as capacity planning and user growth. It emphasizes building technology that non-experts can use, through model selection, feature construction, and hierarchical modeling, while handling issues such as missing data and computational cost. Key recommendations are to start with a single use case, apply online learning, and quantify forecast uncertainty with prediction intervals.
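To make the last recommendation concrete, the following is a minimal sketch of a prediction interval around a forecast. It is not the method used in the slides: it assumes a naive forecast (the last observed value is carried forward) and roughly normal, independent one-step errors, so the interval half-width grows with the square root of the horizon. The function name `naive_forecast_with_interval`, its parameters, and the sample data are hypothetical and chosen only for illustration.

```python
import math
from statistics import NormalDist


def naive_forecast_with_interval(history, horizon, coverage=0.8):
    """Return (lower, point, upper) for each step 1..horizon.

    Assumption: a naive (random-walk) forecast with normally
    distributed one-step errors. Illustrative only.
    """
    if len(history) < 2:
        raise ValueError("need at least two observations")
    if not 0 < coverage < 1:
        raise ValueError("coverage must be between 0 and 1")

    # One-step-ahead errors of the naive forecast are the first differences.
    residuals = [b - a for a, b in zip(history, history[1:])]
    # Root mean squared one-step error, used as the error scale.
    sigma = math.sqrt(sum(r * r for r in residuals) / len(residuals))
    # Two-sided normal quantile for the requested coverage.
    z = NormalDist().inv_cdf(0.5 + coverage / 2)

    point = history[-1]
    intervals = []
    for h in range(1, horizon + 1):
        # For a random walk, the h-step error variance is h * sigma^2.
        half_width = z * sigma * math.sqrt(h)
        intervals.append((point - half_width, point, point + half_width))
    return intervals


# Hypothetical daily metric values, for illustration only.
history = [10, 12, 11, 13, 12]
intervals = naive_forecast_with_interval(history, horizon=3, coverage=0.8)
for step, (lower, point, upper) in enumerate(intervals, start=1):
    print(f"h={step}: {lower:.2f} <= {point} <= {upper:.2f}")
```

The interval widens as the horizon grows, which is the behavior a forecast consumer needs to see: the further ahead the forecast, the less certain it is. A production system would replace both the naive point forecast and the normality assumption with whatever model and error distribution fit the data.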