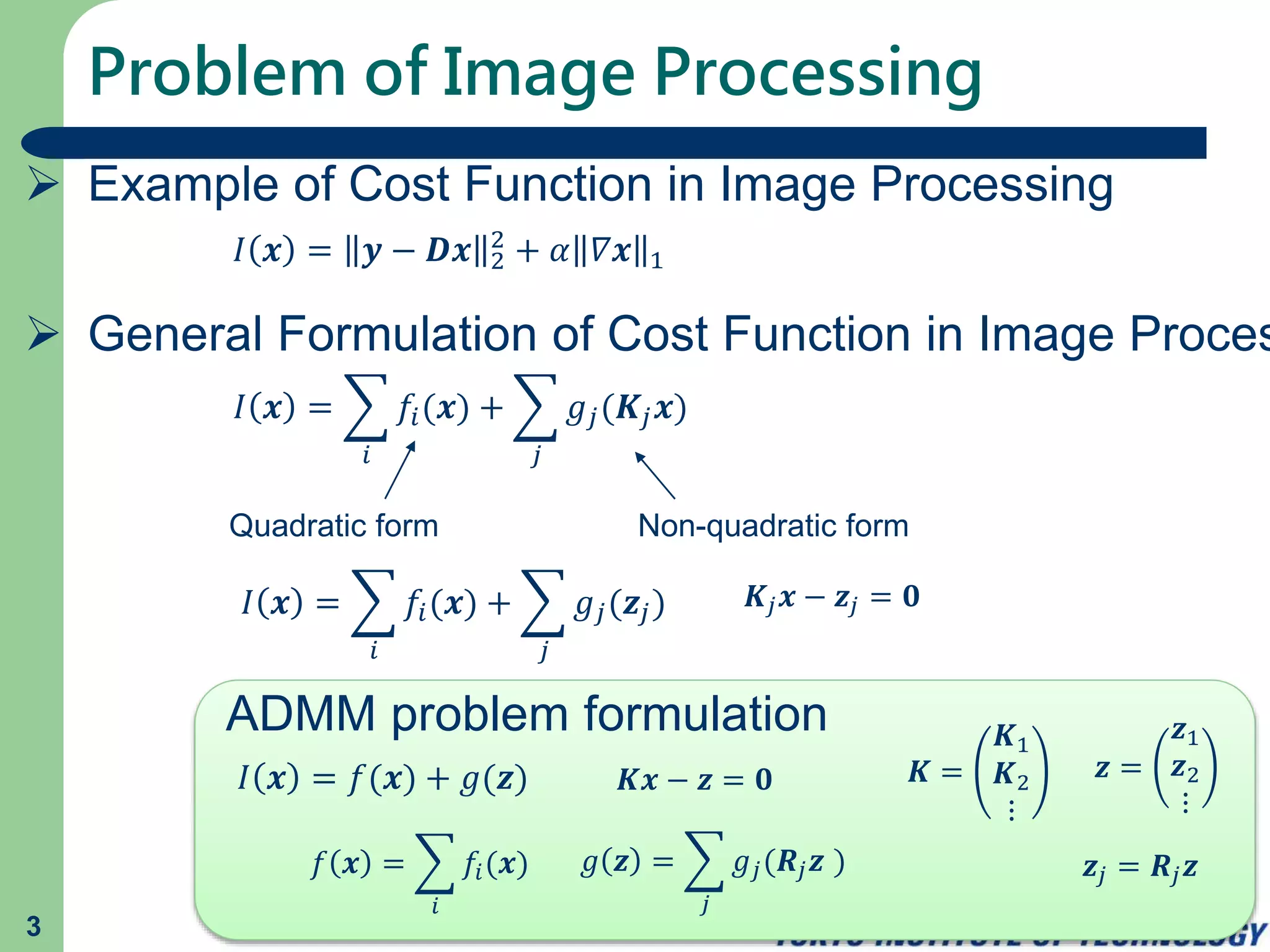

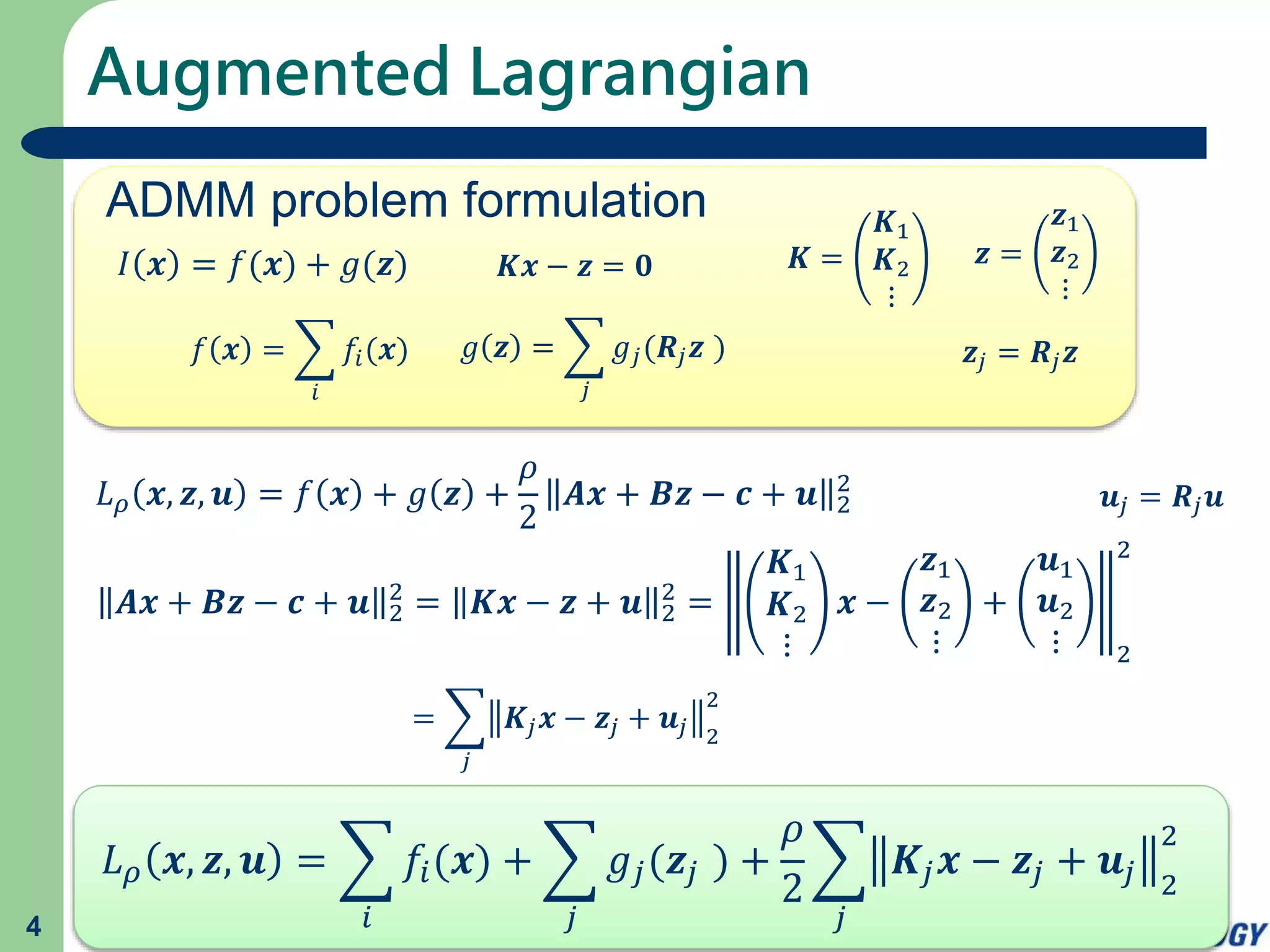

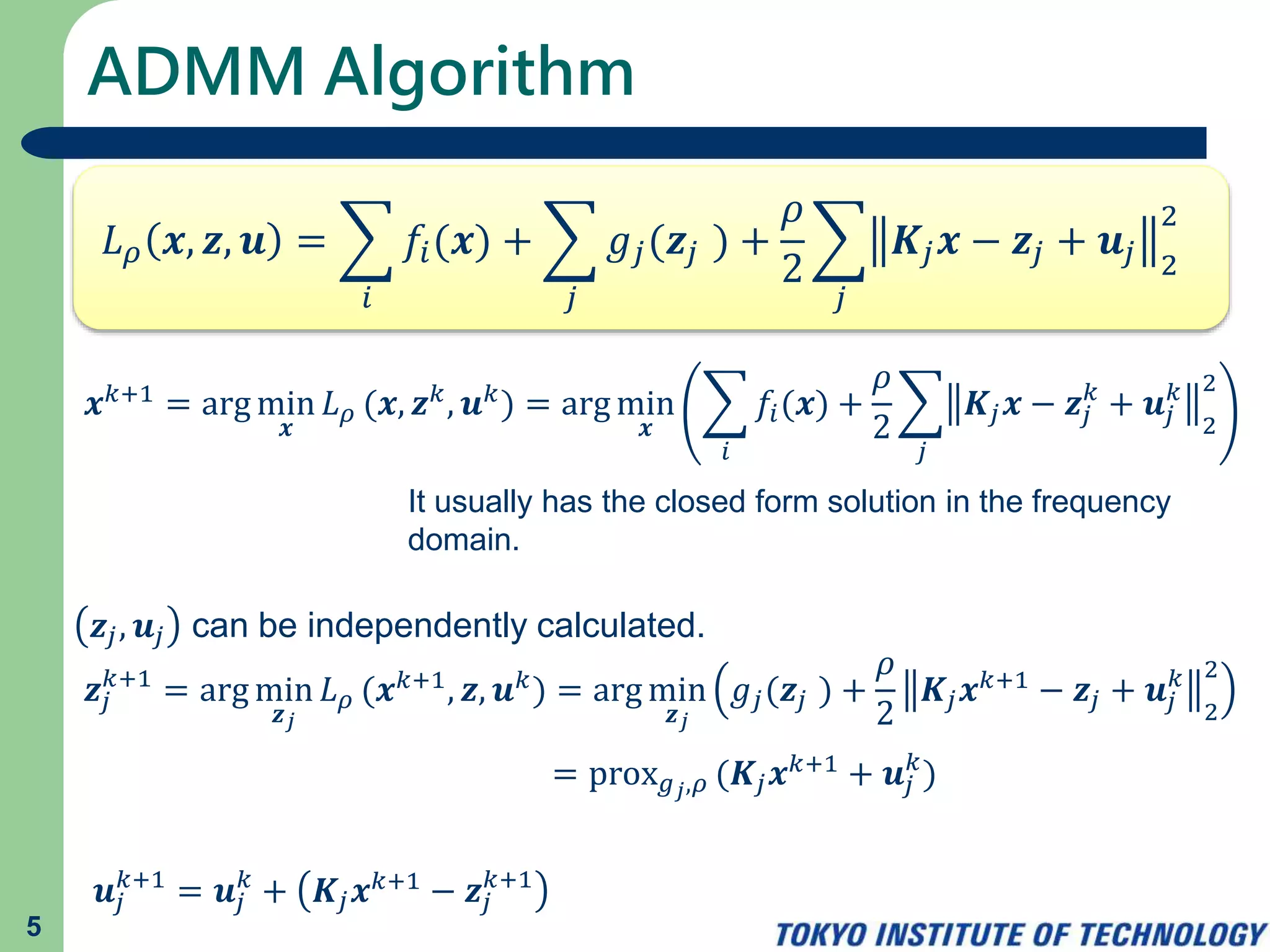

This document summarizes the Alternating Direction Method of Multipliers (ADMM) algorithm as used in ProxImaL. ADMM solves optimization problems of the form minimize f(x) + g(z) subject to Kx - z = 0. The constraint ties the auxiliary variable z to Kx, so the problem splits into separate subproblems for x and z. Each iteration minimizes the augmented Lagrangian Lρ(x, z, u) with respect to x, then with respect to z, and then updates the scaled dual variable u. The subproblems can often be solved efficiently with proximal operators, and some of them, such as quadratic terms involving convolutions, have closed-form solutions in the frequency domain.
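To make the frequency-domain remark concrete, here is a minimal sketch of ADMM for min f(x) + g(z) subject to Kx - z = 0. It assumes, purely for illustration, f(x) = ½‖x − b‖₂², g(z) = λ‖z‖₁, and K the 1-D circular forward-difference operator (so this is 1-D total-variation denoising). Because K is a circular convolution, the x-update's linear system is diagonalized by the DFT and solved in closed form with one FFT/IFFT pair. All function names here are hypothetical and are not ProxImaL's API.

```python
import numpy as np


def soft_threshold(v, t):
    """Elementwise shrinkage: the proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def K(x):
    """Circular forward difference (Kx)[i] = x[i+1] - x[i], a circular convolution."""
    return np.roll(x, -1) - x


def K_T(y):
    """Adjoint of K: (K^T y)[i] = y[i-1] - y[i]."""
    return np.roll(y, 1) - y


def x_update_fft(rhs, rho):
    """Solve (I + rho * K^T K) x = rhs in closed form in the frequency domain.

    K is circulant, so the DFT diagonalizes it; the eigenvalues of K^T K
    are |exp(2j*pi*m/n) - 1|^2 = 2 - 2*cos(2*pi*m/n).
    """
    n = rhs.size
    k_hat_sq = 2.0 - 2.0 * np.cos(2.0 * np.pi * np.arange(n) / n)
    return np.real(np.fft.ifft(np.fft.fft(rhs) / (1.0 + rho * k_hat_sq)))


def tv_denoise_admm(b, lam, rho=1.0, iters=500):
    """Scaled ADMM for min 0.5*||x - b||^2 + lam*||z||_1 subject to Kx - z = 0."""
    b = np.asarray(b, dtype=float)
    x = b.copy()
    z = K(x)
    u = np.zeros_like(b)
    for _ in range(iters):
        # x-update: gradient (x - b) + rho*K^T(Kx - z + u) = 0, solved via FFT
        x = x_update_fft(b + rho * K_T(z - u), rho)
        # z-update: prox of lam*||.||_1 with parameter rho, evaluated at Kx + u
        z = soft_threshold(K(x) + u, lam / rho)
        # scaled dual update
        u = u + K(x) - z
    return x
```

For the step signal `[0, 0, 0, 0, 1, 1, 1, 1]` with `lam=0.1`, the exact minimizer is 0.05 on the first plateau and 0.95 on the second, and the iterates approach it. A real image pipeline would use 2-D FFTs and the operators of the actual model.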

![Slide 1: ADMM algorithm in ProxImaL](https://image.slidesharecdn.com/proximal-160824100021/75/ADMM-algorithm-in-ProxImaL-1-2048.jpg)

ADMM algorithm in ProxImaL [1]

Masayuki Tanaka, Aug. 24, 2016

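![Slide 2: Proximal operator](https://image.slidesharecdn.com/proximal-160824100021/75/ADMM-algorithm-in-ProxImaL-2-2048.jpg)

Proximal operator [2]

$$
\operatorname{prox}_{f,\rho}(\boldsymbol{v}) = \arg\min_{\boldsymbol{x}} \left( f(\boldsymbol{x}) + \frac{\rho}{2}\,\|\boldsymbol{x} - \boldsymbol{v}\|_2^2 \right)
$$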
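Two proximal operators with well-known closed forms illustrate the definition. The sketch below assumes f(x) = λ‖x‖₁ (prox is soft thresholding at λ/ρ) and f(x) = ½‖x − b‖₂² (prox is a weighted average of b and v). It also includes a hypothetical brute-force helper that evaluates the argmin definition on a 1-D grid, as a way to check the closed forms.

```python
import numpy as np


def prox_l1(v, lam, rho):
    """prox_{f,rho}(v) for f(x) = lam * ||x||_1.

    The problem separates per coordinate; each solution is the soft threshold
    of v_i at lam / rho.
    """
    return np.sign(v) * np.maximum(np.abs(v) - lam / rho, 0.0)


def prox_sq_dist(v, b, rho):
    """prox_{f,rho}(v) for f(x) = 0.5 * ||x - b||_2^2.

    Setting the gradient (x - b) + rho * (x - v) to zero gives
    x = (b + rho * v) / (1 + rho).
    """
    return (b + rho * v) / (1.0 + rho)


def prox_brute_force_1d(f, v, rho, grid):
    """Minimize f(x) + rho/2 * (x - v)^2 over a 1-D grid (a check, not a solver)."""
    return grid[np.argmin(f(grid) + 0.5 * rho * (grid - v) ** 2)]


# Usage: compare the closed form with the definition for lam = 1, rho = 2, v = 1.7.
grid = np.linspace(-5.0, 5.0, 200001)
closed_form = prox_l1(np.array([1.7]), lam=1.0, rho=2.0)
by_definition = prox_brute_force_1d(np.abs, 1.7, 2.0, grid)
```

Larger ρ keeps the result closer to v, which is why ρ acts as a step-size-like parameter in ADMM.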

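![Slide 3: Alternating Direction Method of Multipliers](https://image.slidesharecdn.com/proximal-160824100021/75/ADMM-algorithm-in-ProxImaL-3-2048.jpg)

Alternating Direction Method of Multipliers [2]

ADMM problem

$$
\text{minimize} \quad I(\boldsymbol{x}, \boldsymbol{z}) = f(\boldsymbol{x}) + g(\boldsymbol{z}) \quad \text{subject to} \quad \boldsymbol{A}\boldsymbol{x} + \boldsymbol{B}\boldsymbol{z} = \boldsymbol{c}
$$

Augmented Lagrangian

$$
\begin{aligned}
L_\rho(\boldsymbol{x}, \boldsymbol{z}, \boldsymbol{y}) &= f(\boldsymbol{x}) + g(\boldsymbol{z}) + \boldsymbol{y}^T(\boldsymbol{A}\boldsymbol{x} + \boldsymbol{B}\boldsymbol{z} - \boldsymbol{c}) + \frac{\rho}{2}\|\boldsymbol{A}\boldsymbol{x} + \boldsymbol{B}\boldsymbol{z} - \boldsymbol{c}\|_2^2 \\
&= f(\boldsymbol{x}) + g(\boldsymbol{z}) + \frac{\rho}{2}\|\boldsymbol{A}\boldsymbol{x} + \boldsymbol{B}\boldsymbol{z} - \boldsymbol{c} + \boldsymbol{u}\|_2^2 + \text{const.}
\end{aligned}
$$

with the scaled dual variable $\boldsymbol{u} = \frac{1}{\rho}\boldsymbol{y}$. The constant, $-\frac{\rho}{2}\|\boldsymbol{u}\|_2^2$, depends on neither $\boldsymbol{x}$ nor $\boldsymbol{z}$; dropping it gives the scaled form

$$
L_\rho(\boldsymbol{x}, \boldsymbol{z}, \boldsymbol{u}) = f(\boldsymbol{x}) + g(\boldsymbol{z}) + \frac{\rho}{2}\|\boldsymbol{A}\boldsymbol{x} + \boldsymbol{B}\boldsymbol{z} - \boldsymbol{c} + \boldsymbol{u}\|_2^2
$$

ADMM algorithm

$$
\begin{aligned}
\boldsymbol{x}^{k+1} &= \arg\min_{\boldsymbol{x}} L_\rho(\boldsymbol{x}, \boldsymbol{z}^k, \boldsymbol{u}^k) = \arg\min_{\boldsymbol{x}} \left( f(\boldsymbol{x}) + \frac{\rho}{2}\|\boldsymbol{A}\boldsymbol{x} + \boldsymbol{B}\boldsymbol{z}^k - \boldsymbol{c} + \boldsymbol{u}^k\|_2^2 \right) \\
\boldsymbol{z}^{k+1} &= \arg\min_{\boldsymbol{z}} L_\rho(\boldsymbol{x}^{k+1}, \boldsymbol{z}, \boldsymbol{u}^k) = \arg\min_{\boldsymbol{z}} \left( g(\boldsymbol{z}) + \frac{\rho}{2}\|\boldsymbol{A}\boldsymbol{x}^{k+1} + \boldsymbol{B}\boldsymbol{z} - \boldsymbol{c} + \boldsymbol{u}^k\|_2^2 \right) \\
\boldsymbol{u}^{k+1} &= \boldsymbol{u}^k + \boldsymbol{A}\boldsymbol{x}^{k+1} + \boldsymbol{B}\boldsymbol{z}^{k+1} - \boldsymbol{c}
\end{aligned}
$$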
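In the special case A = I, B = −I, c = 0 (the constraint x − z = 0), the x- and z-updates above reduce to proximal operators: x^{k+1} = prox_{f,ρ}(z^k − u^k) and z^{k+1} = prox_{g,ρ}(x^{k+1} + u^k). The sketch below applies this to the lasso, a standard ADMM example, assuming f(x) = ½‖Dx − b‖₂² and g(z) = λ‖z‖₁. The function names and the choice of problem are illustrative assumptions, not ProxImaL code.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1 (elementwise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def lasso_admm(D, b, lam, rho=1.0, iters=500):
    """Scaled-form ADMM for min 0.5*||Dx - b||^2 + lam*||z||_1 s.t. x - z = 0,
    i.e. A = I, B = -I, c = 0 in Ax + Bz = c."""
    n = D.shape[1]
    x = np.zeros(n)
    z = np.zeros(n)
    u = np.zeros(n)
    # The x-update matrix D^T D + rho*I does not change, so form it once.
    M = D.T @ D + rho * np.eye(n)
    Dtb = D.T @ b
    for _ in range(iters):
        # x^{k+1} = argmin_x f(x) + rho/2 ||x - z^k + u^k||^2 = prox_{f,rho}(z^k - u^k)
        x = np.linalg.solve(M, Dtb + rho * (z - u))
        # z^{k+1} = argmin_z g(z) + rho/2 ||x^{k+1} - z + u^k||^2 = prox_{g,rho}(x^{k+1} + u^k)
        z = soft_threshold(x + u, lam / rho)
        # u^{k+1} = u^k + x^{k+1} - z^{k+1}
        u = u + x - z
    return x, z
```

With D = I the lasso solution is simply `soft_threshold(b, lam)`, which makes a quick sanity check. For large D one would factor M once (for example with a Cholesky factorization) rather than calling a dense solve every iteration.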

![Slide 7: References](https://image.slidesharecdn.com/proximal-160824100021/75/ADMM-algorithm-in-ProxImaL-7-2048.jpg)

References

[1] F. Heide et al., "ProxImaL: Efficient Image Optimization using Proximal Algorithms," SIGGRAPH 2016. https://graphics.stanford.edu/~niessner/heide2016proximal.html

[2] S. Boyd et al., "Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers," Foundations and Trends in Machine Learning, 2011. http://web.stanford.edu/~boyd/papers/admm_distr_stats.html