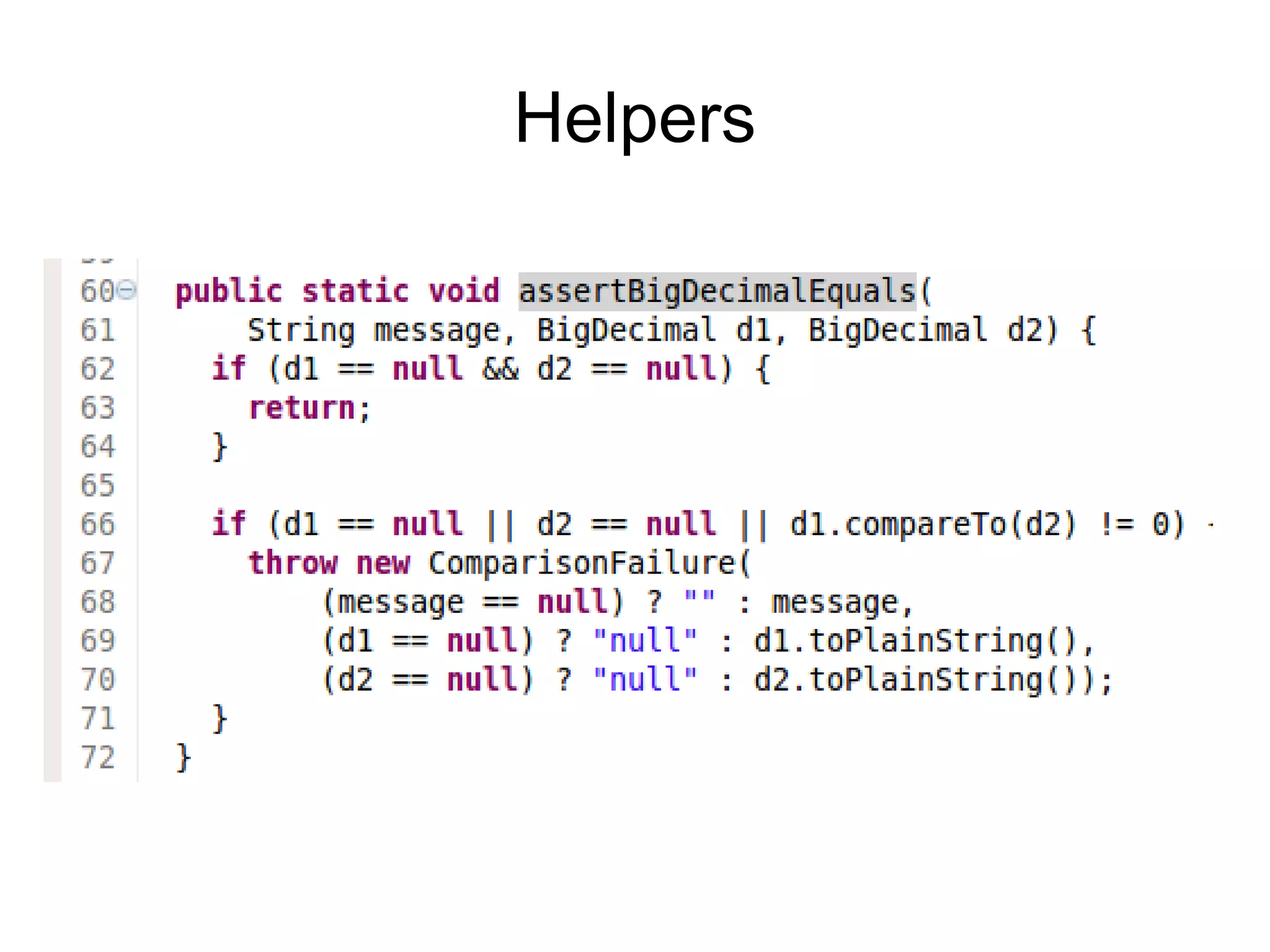

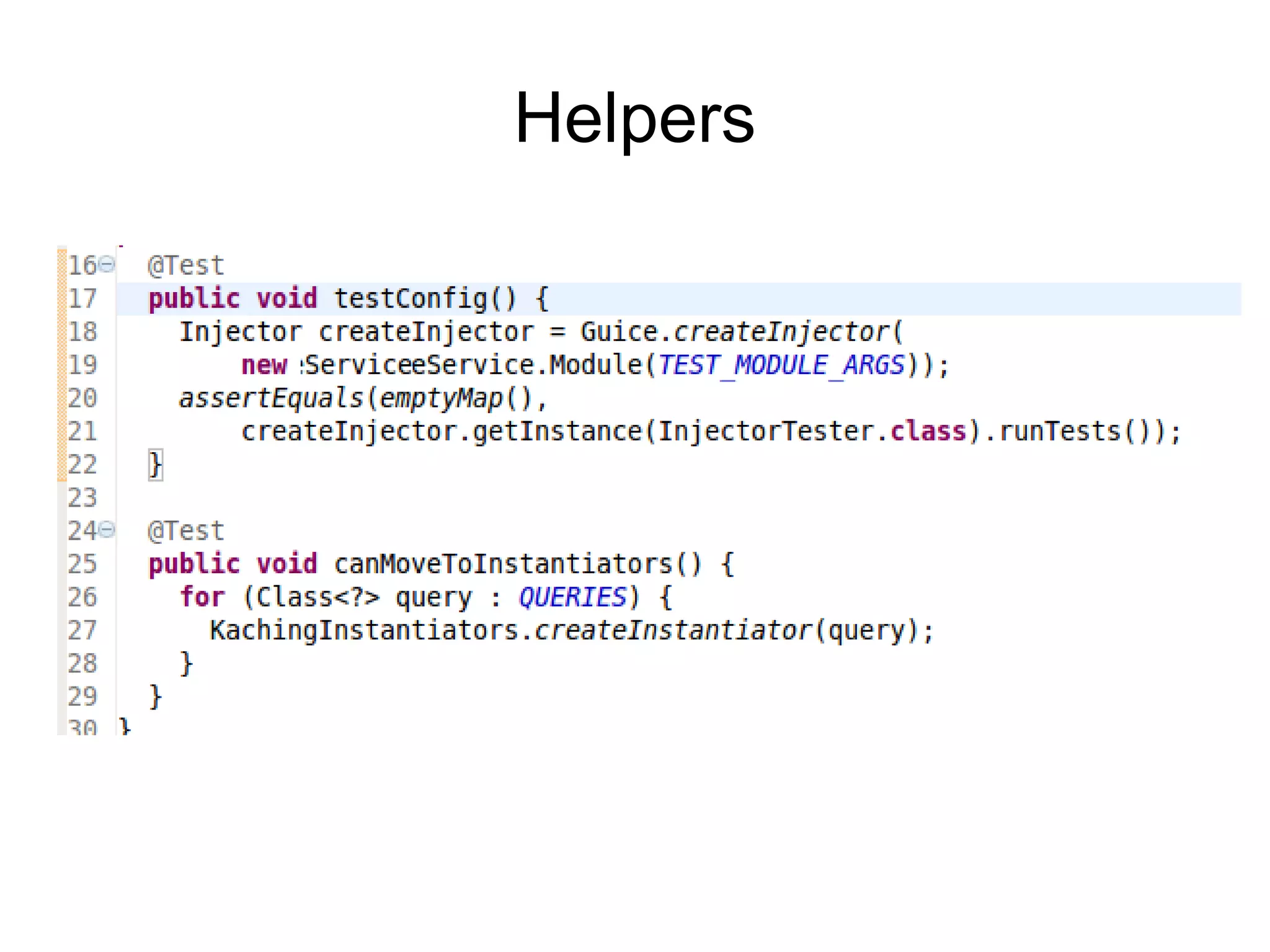

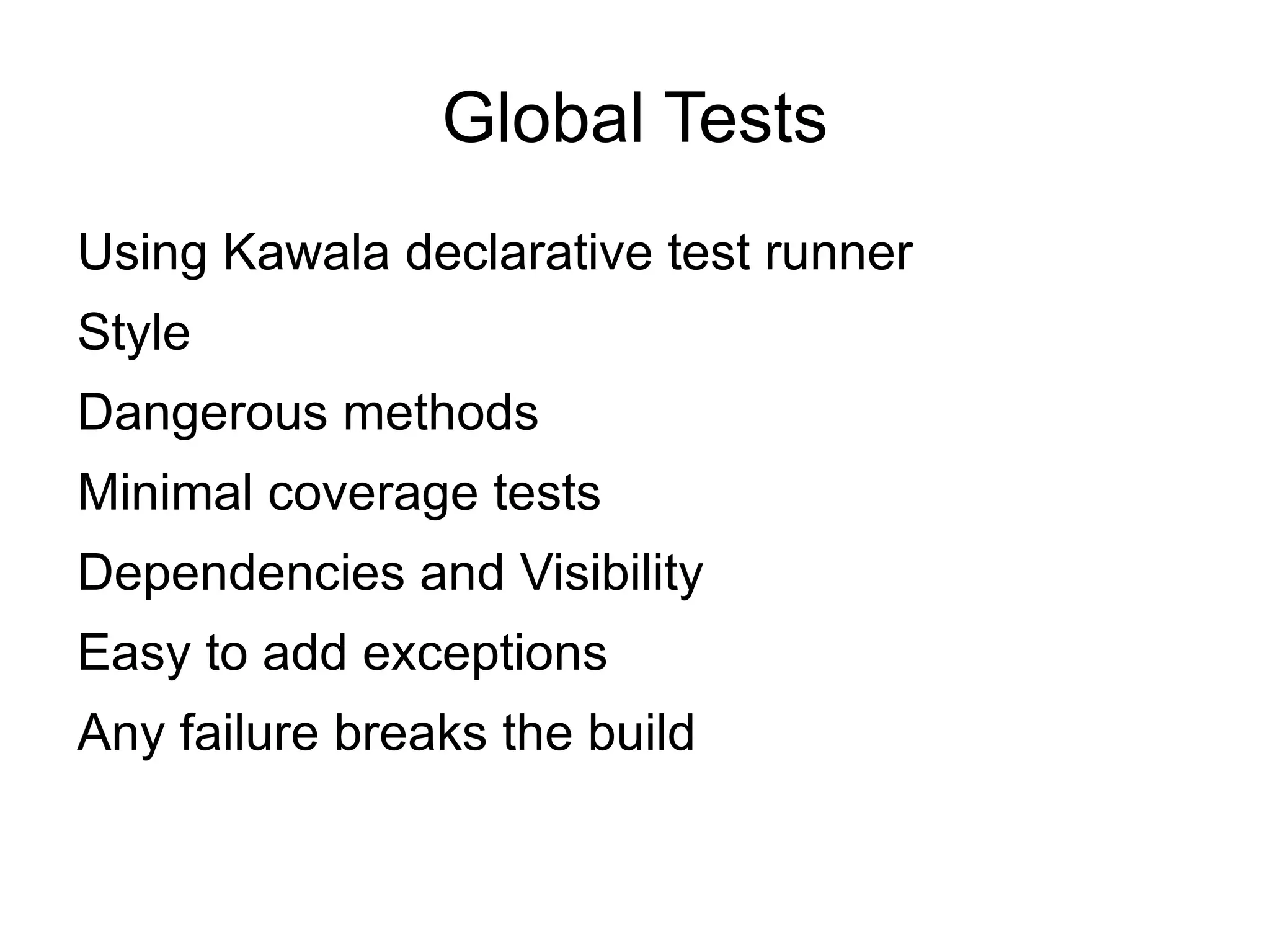

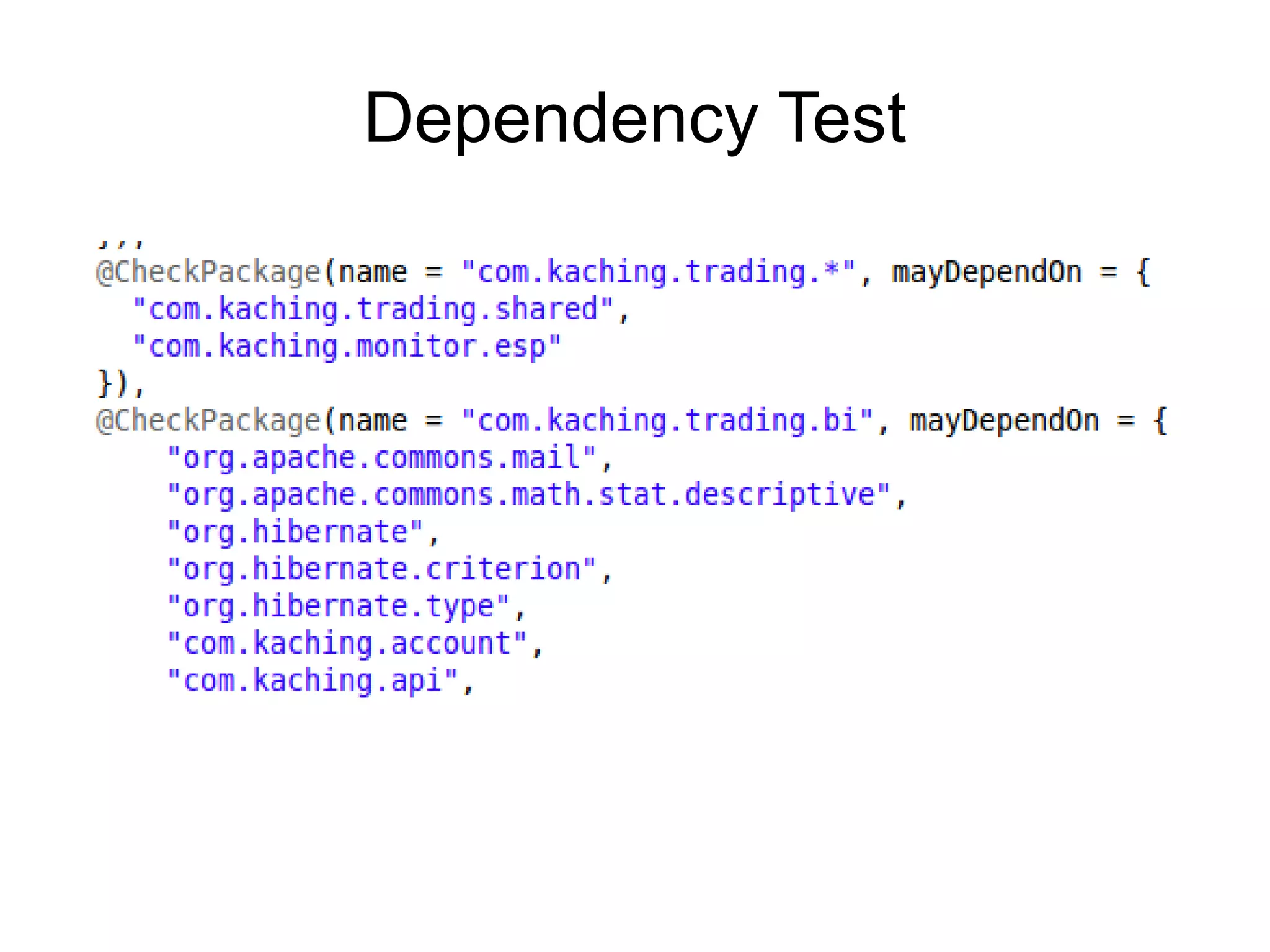

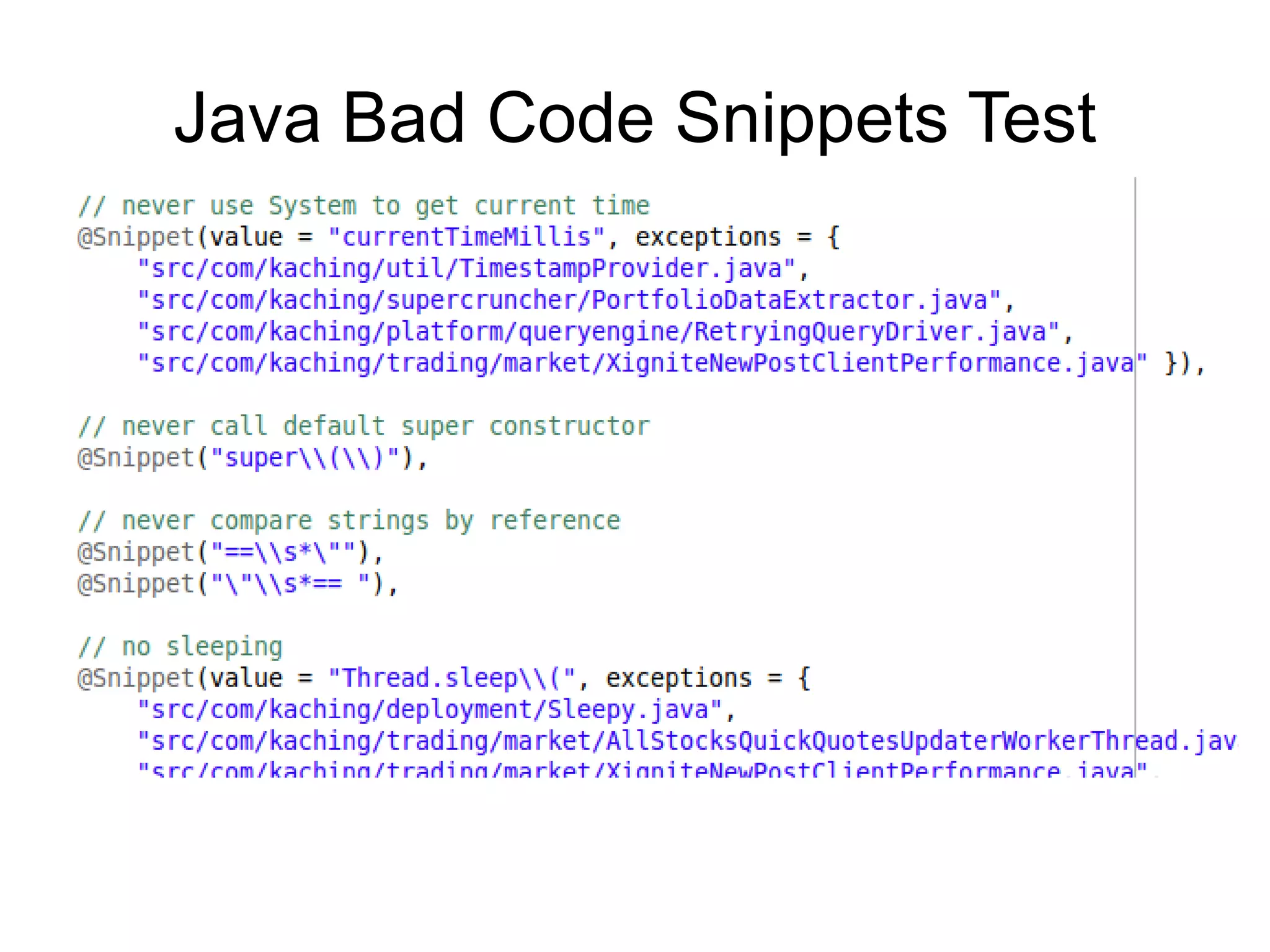

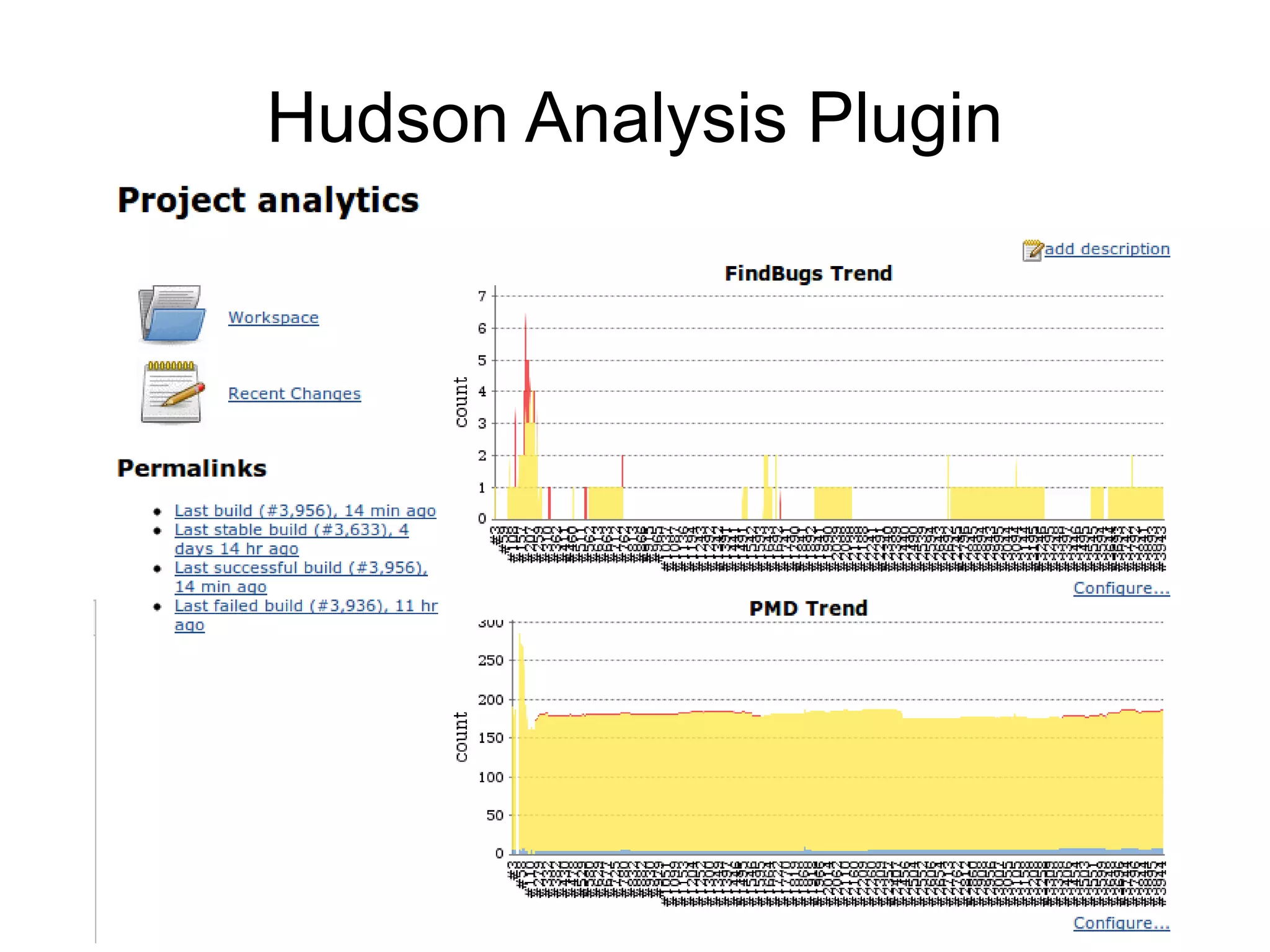

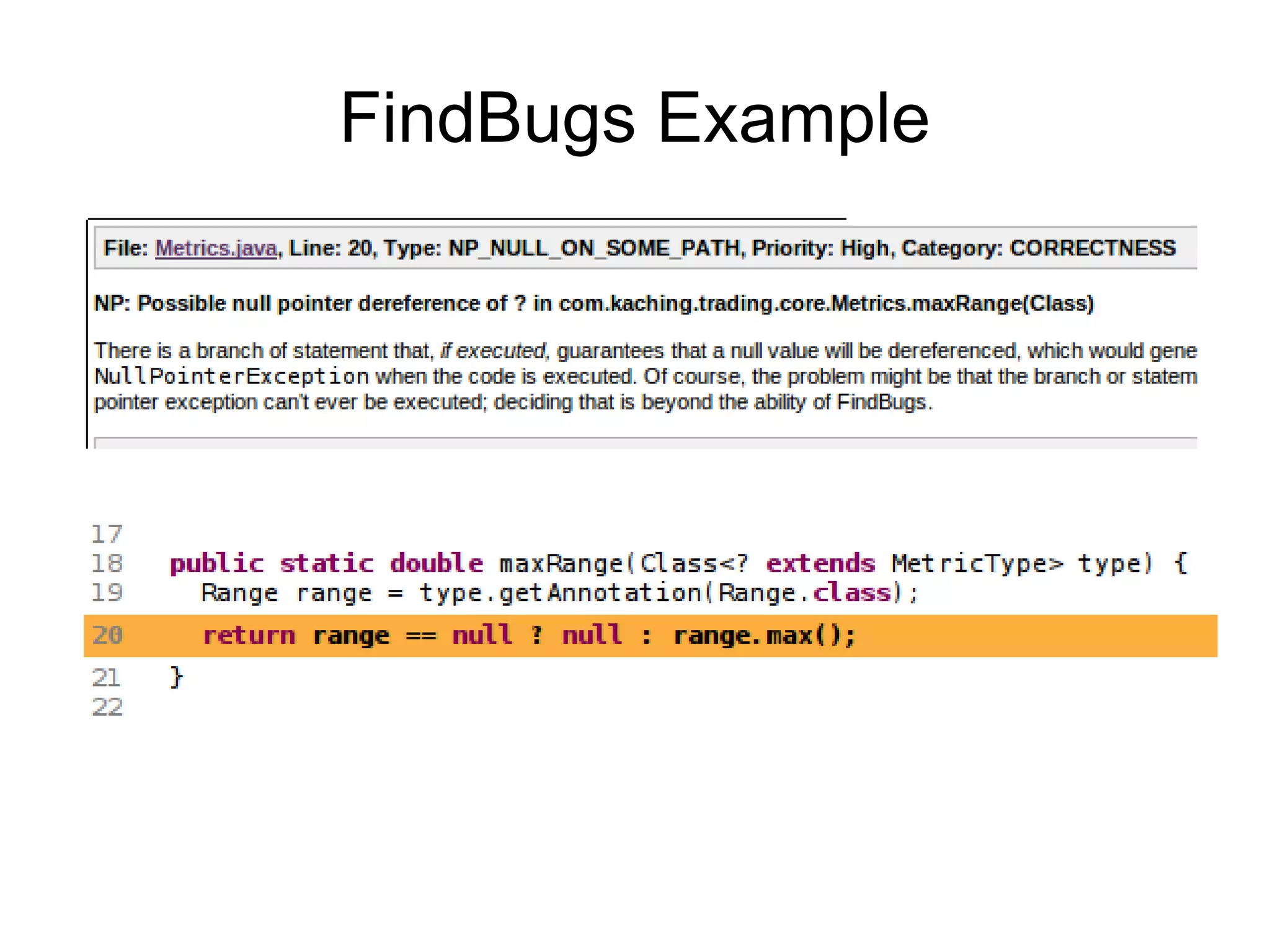

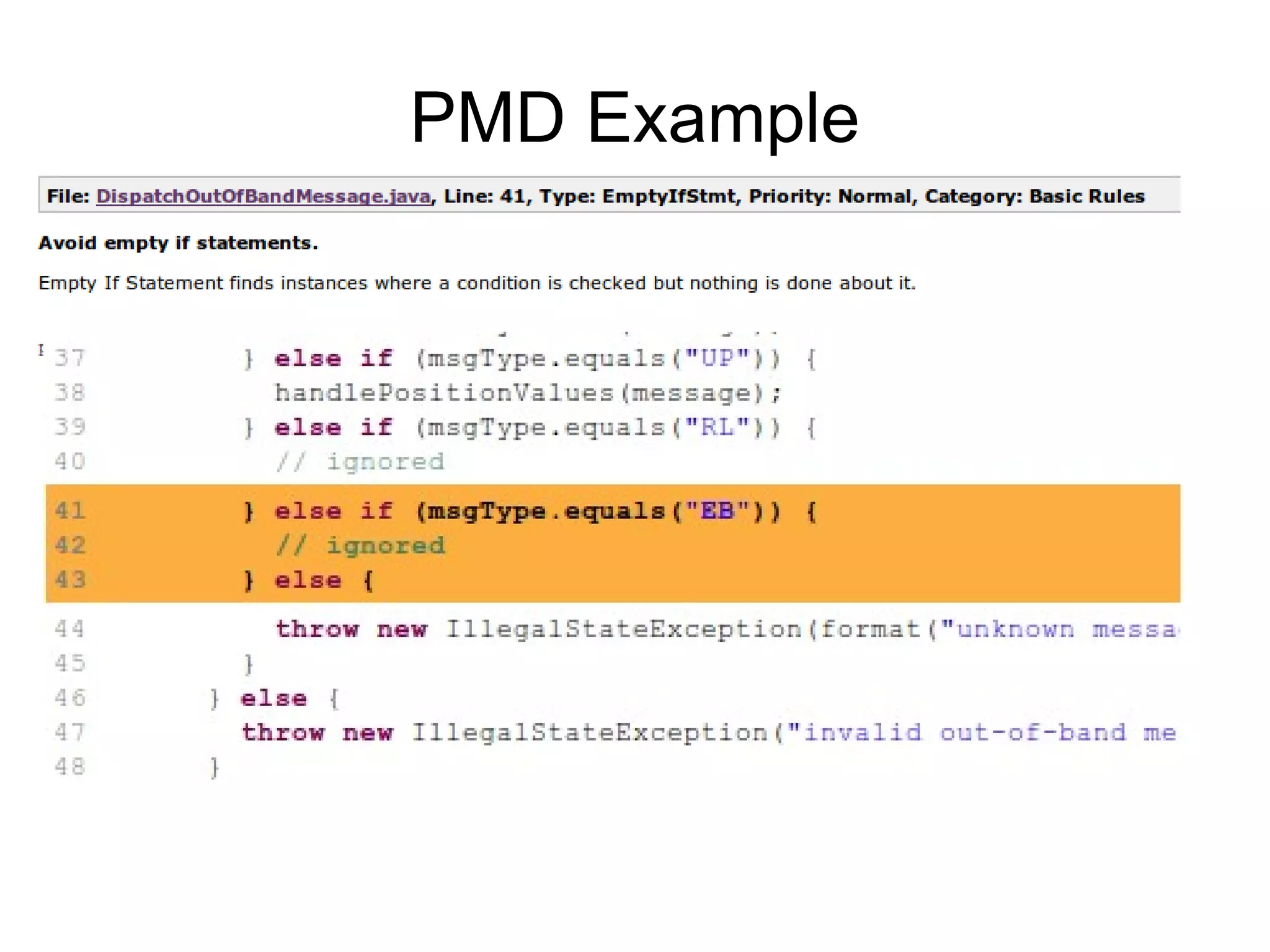

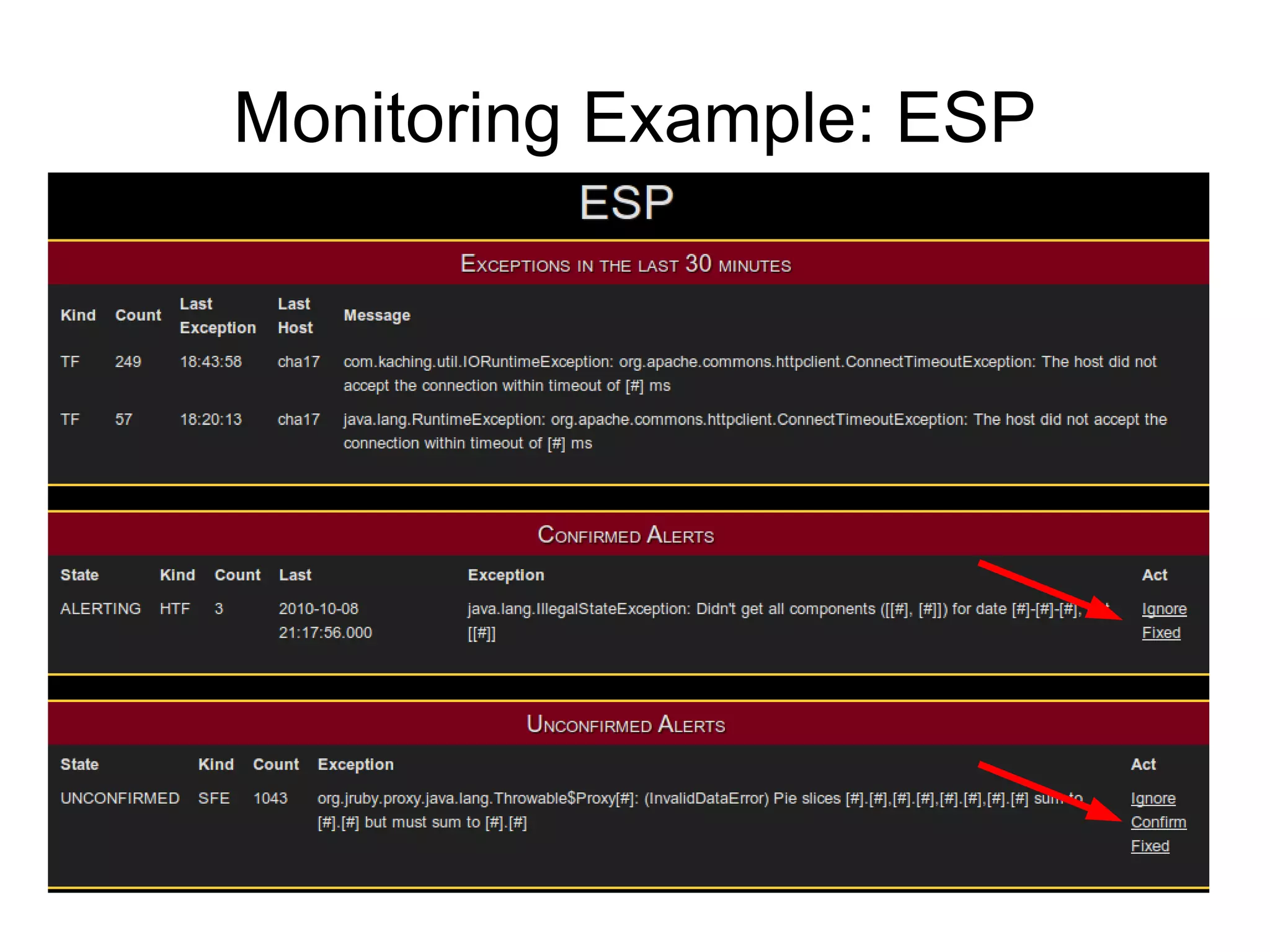

The document discusses several techniques for automating good coding practices: unit tests, integration tests, static analysis, and automated monitoring. It emphasizes that unit tests confirm that individual pieces of code behave as intended, global tests check for errors across the entire codebase, and monitoring verifies that code keeps working over time. While coverage metrics and formal code reviews have value, the document argues that automation is more effective and that comments should be replaced with well-written tests.
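The idea of replacing comments with tests can be illustrated with a minimal Python sketch using the standard `unittest` module. The `average` function and the test names are hypothetical and chosen only for illustration; they are not taken from the document. Instead of a comment such as "returns 0.0 for an empty list", the behaviour is pinned down by a test that fails as soon as the behaviour changes.

```python
import unittest


def average(values: list[float]) -> float:
    """Return the arithmetic mean of values, or 0.0 for an empty list."""
    if not values:
        return 0.0
    return sum(values) / len(values)


class AverageTest(unittest.TestCase):
    # Rather than a comment like "# returns 0.0 when the list is empty",
    # this test documents the edge case and enforces it automatically.
    def test_empty_list_returns_zero(self) -> None:
        self.assertEqual(average([]), 0.0)

    # The normal case is also captured as an executable specification.
    def test_mean_of_values(self) -> None:
        self.assertAlmostEqual(average([1.0, 2.0, 3.0]), 2.0)


if __name__ == "__main__":
    # exit=False lets the script continue after the test run finishes.
    unittest.main(exit=False)
```

Unlike a comment, which can silently drift out of date, these tests run on every change (for example in a continuous-integration job), so the documented behaviour stays accurate.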