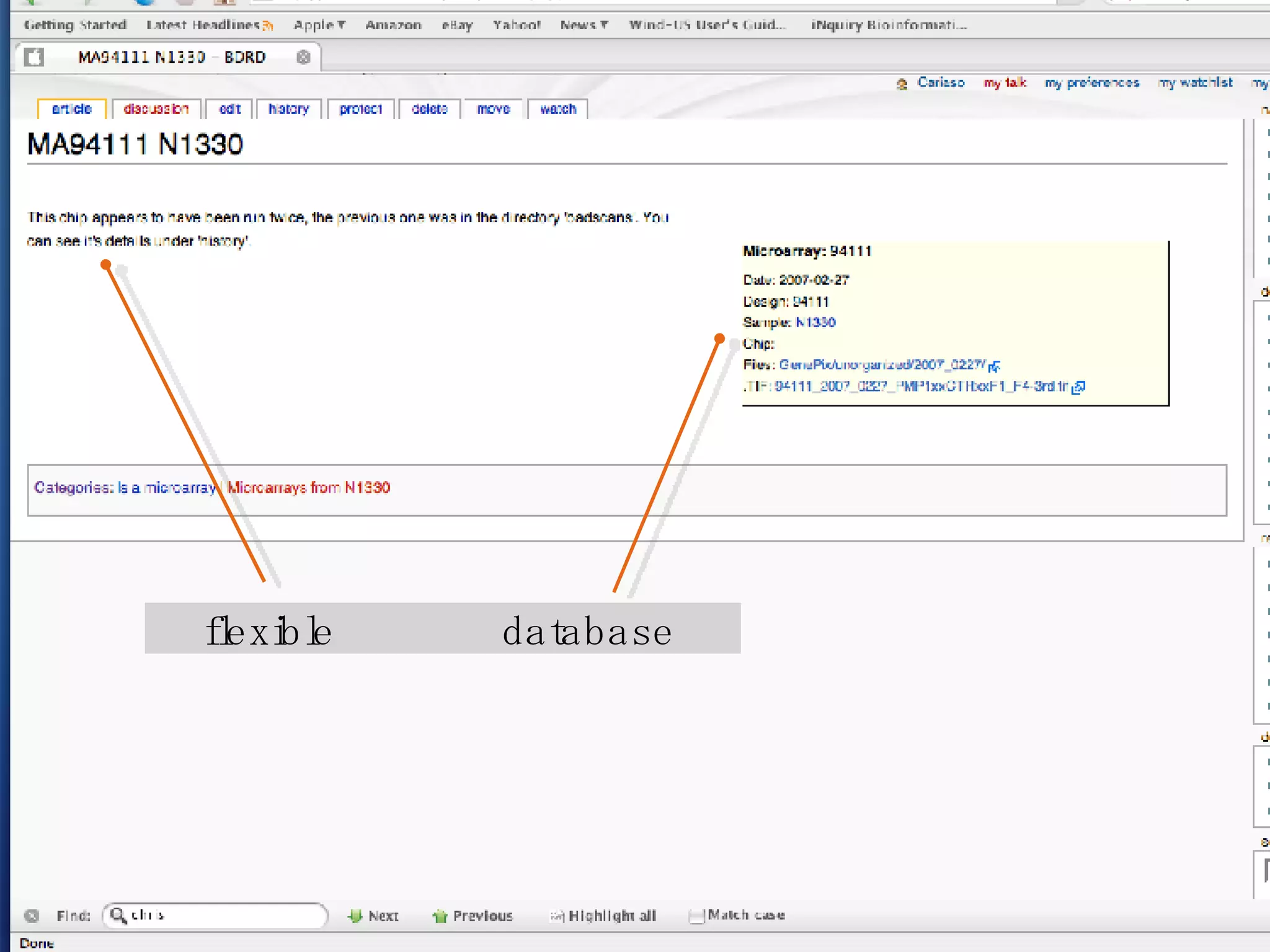

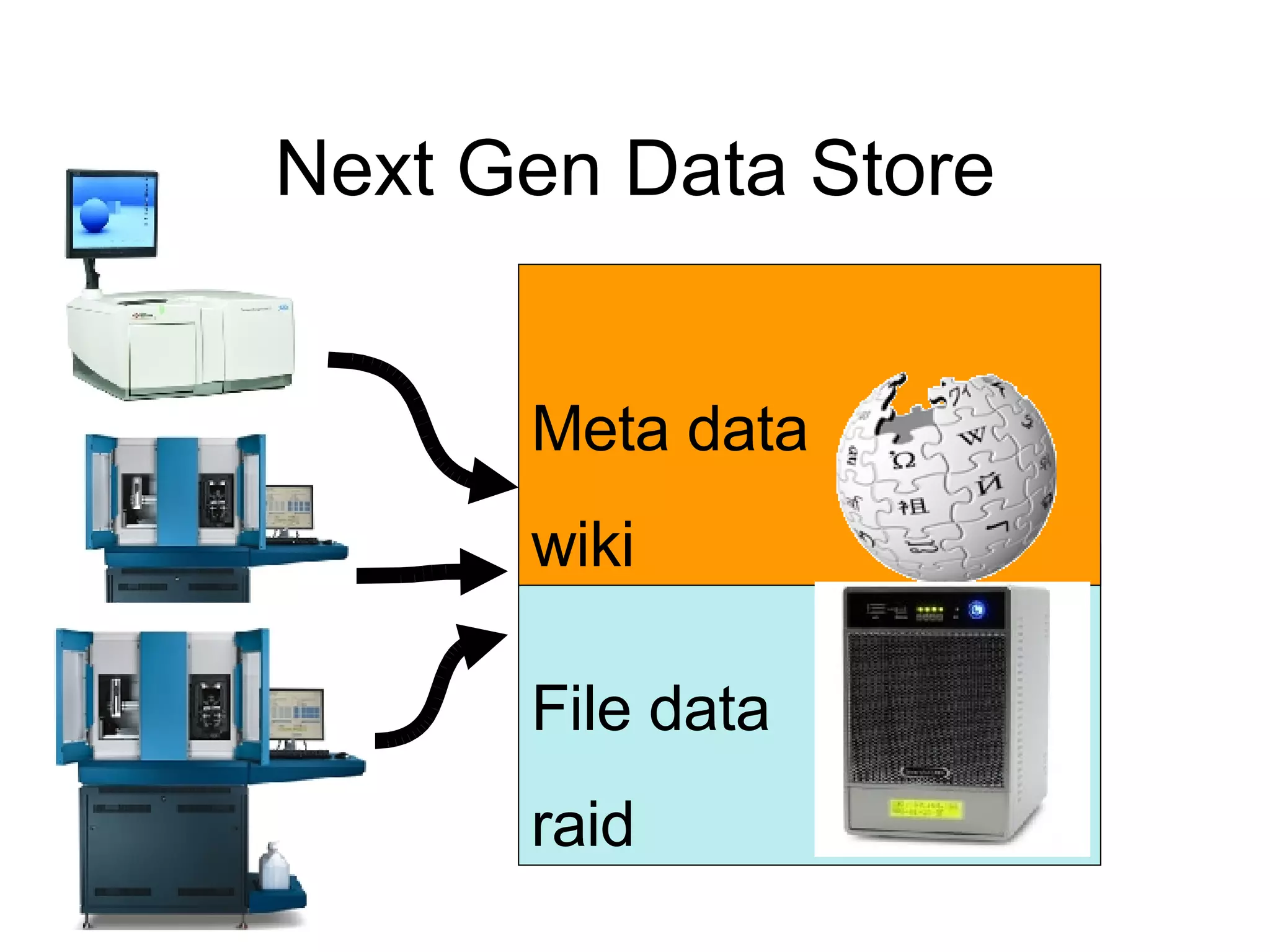

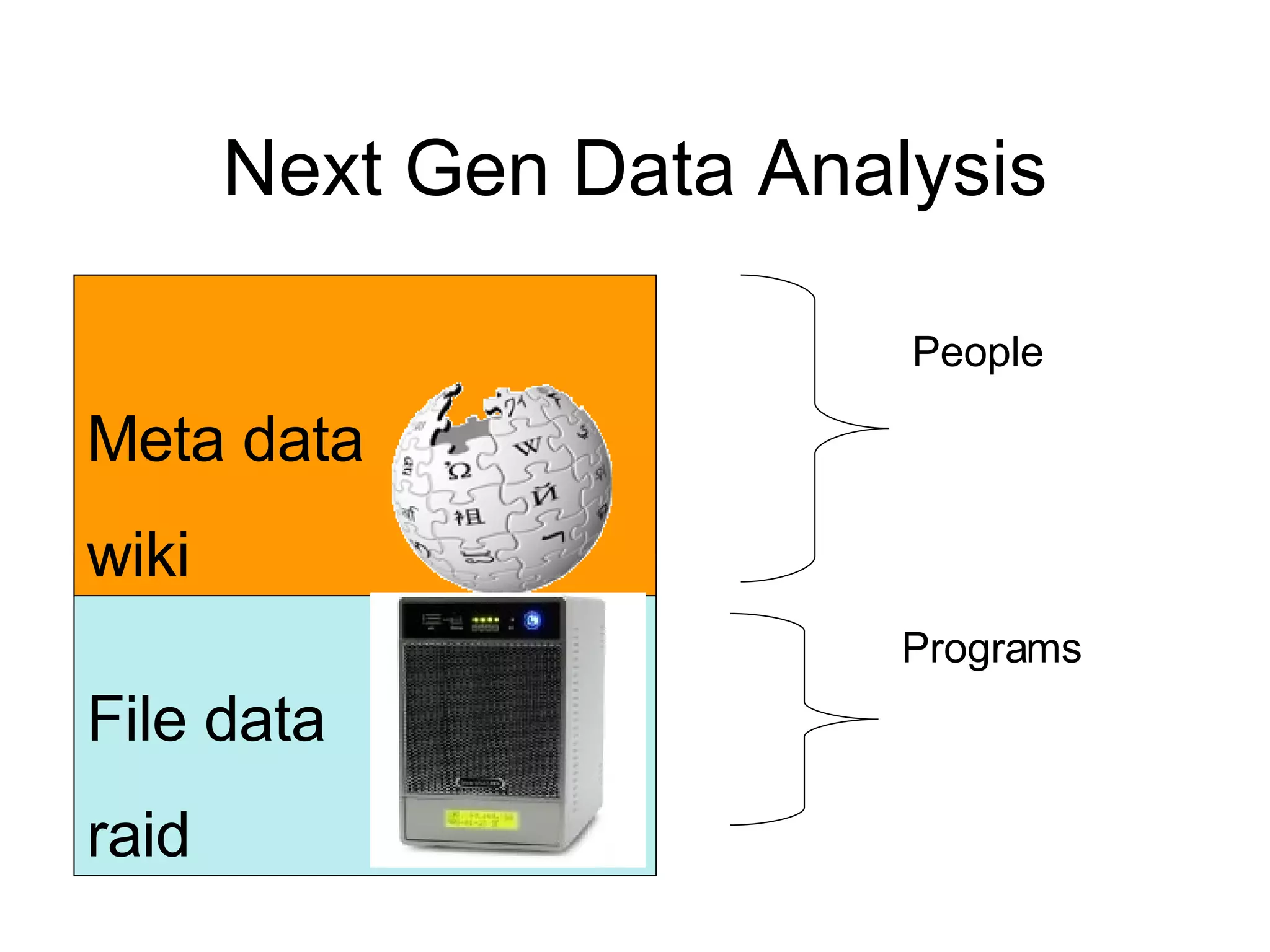

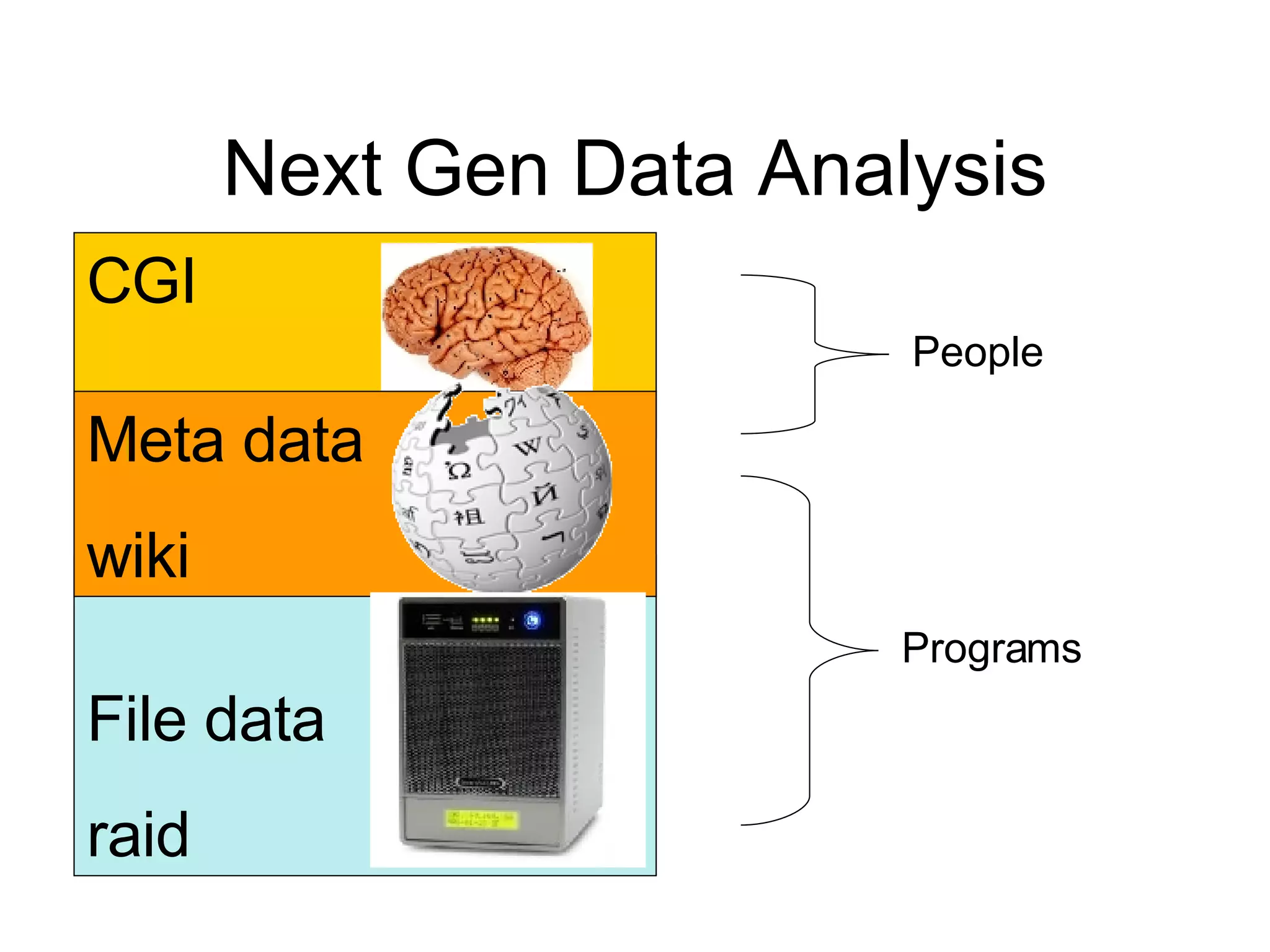

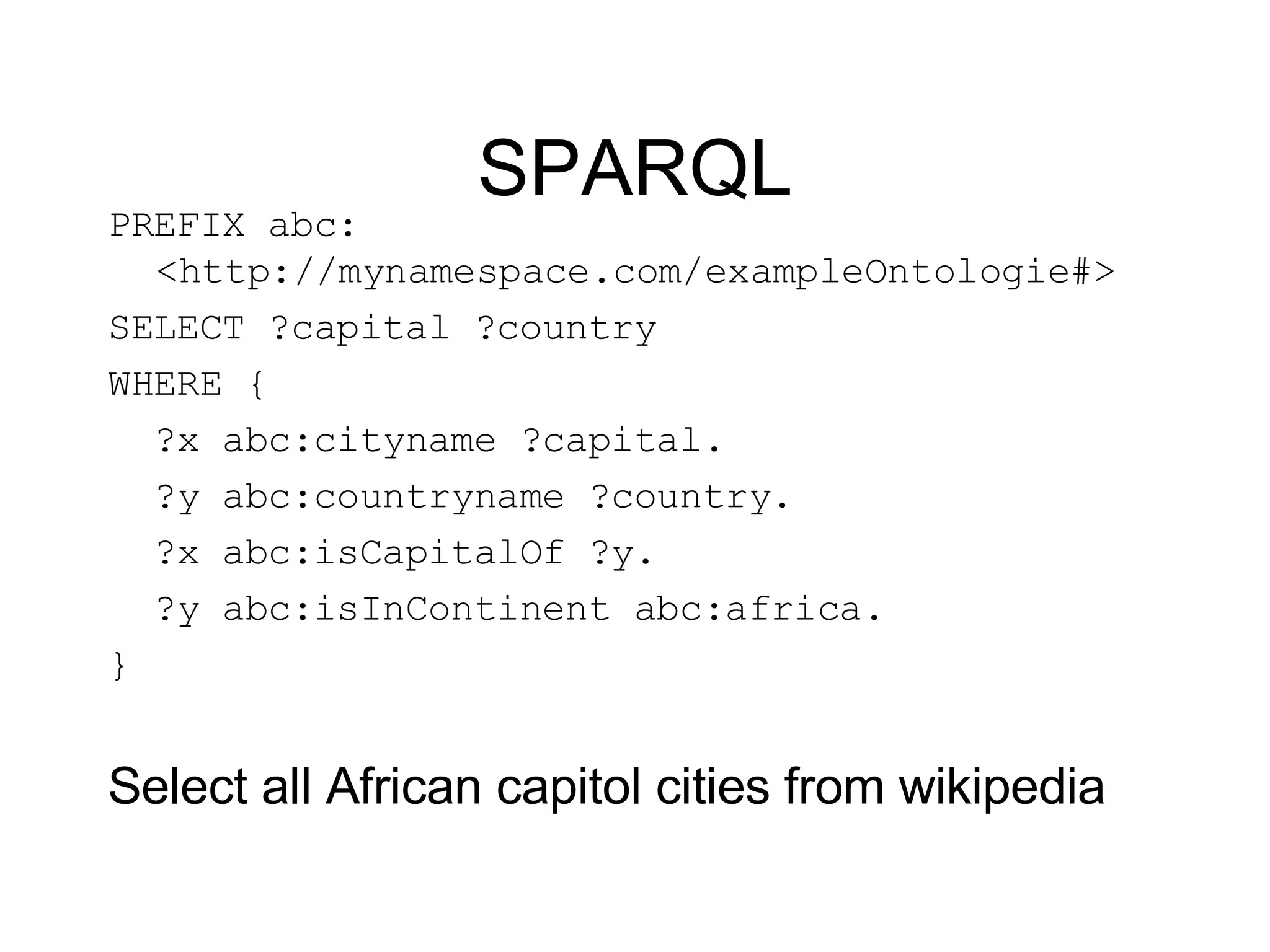

1) The document discusses using a wiki as a laboratory information management system (LIMS) to store metadata and track samples across multiple next-generation DNA sequencers.

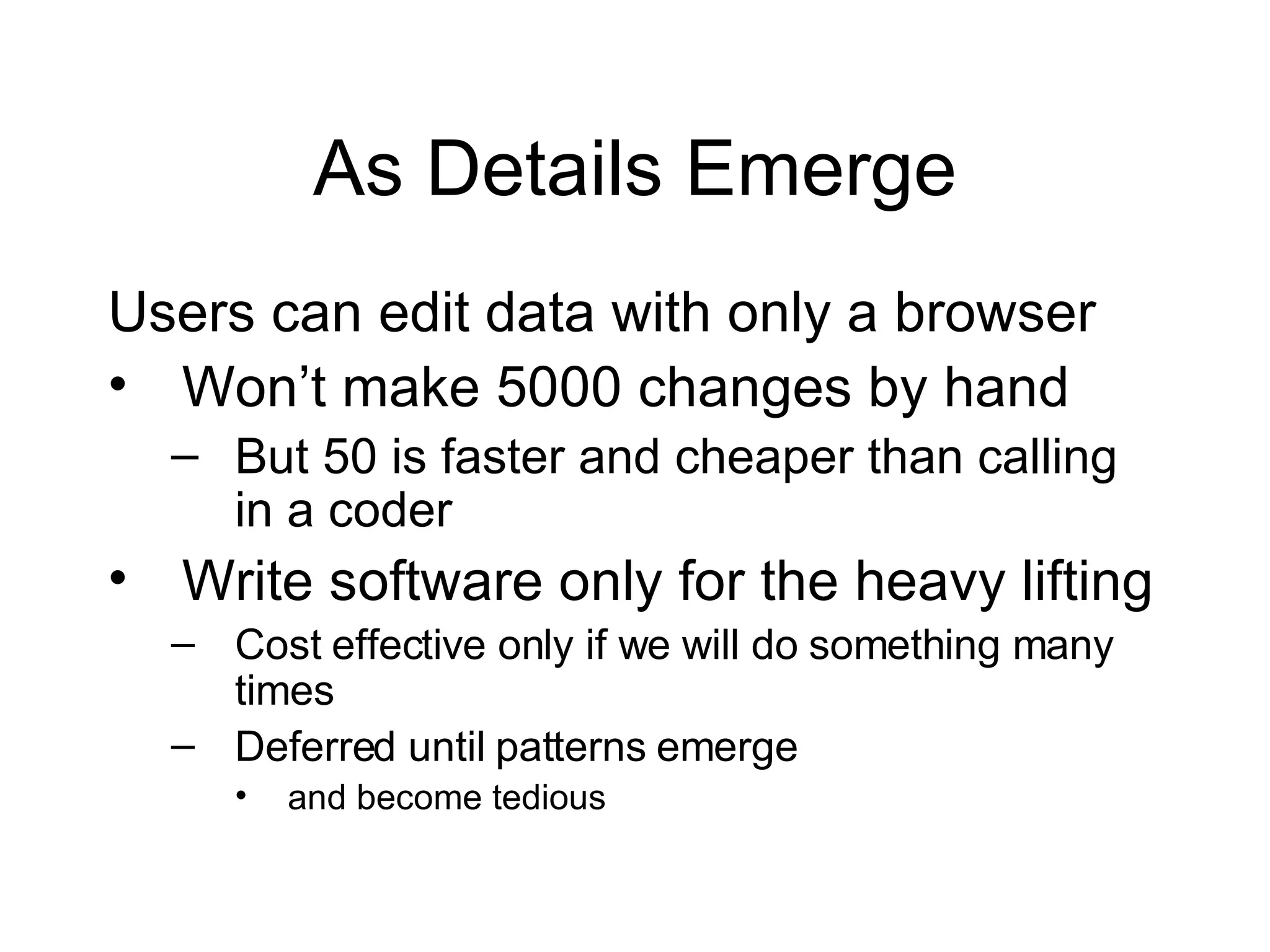

2) Key benefits of the wiki LIMS include the flexibility to handle a wide variety of sample types and details, a full version history and audit trail, and the ability to initiate and track tasks.

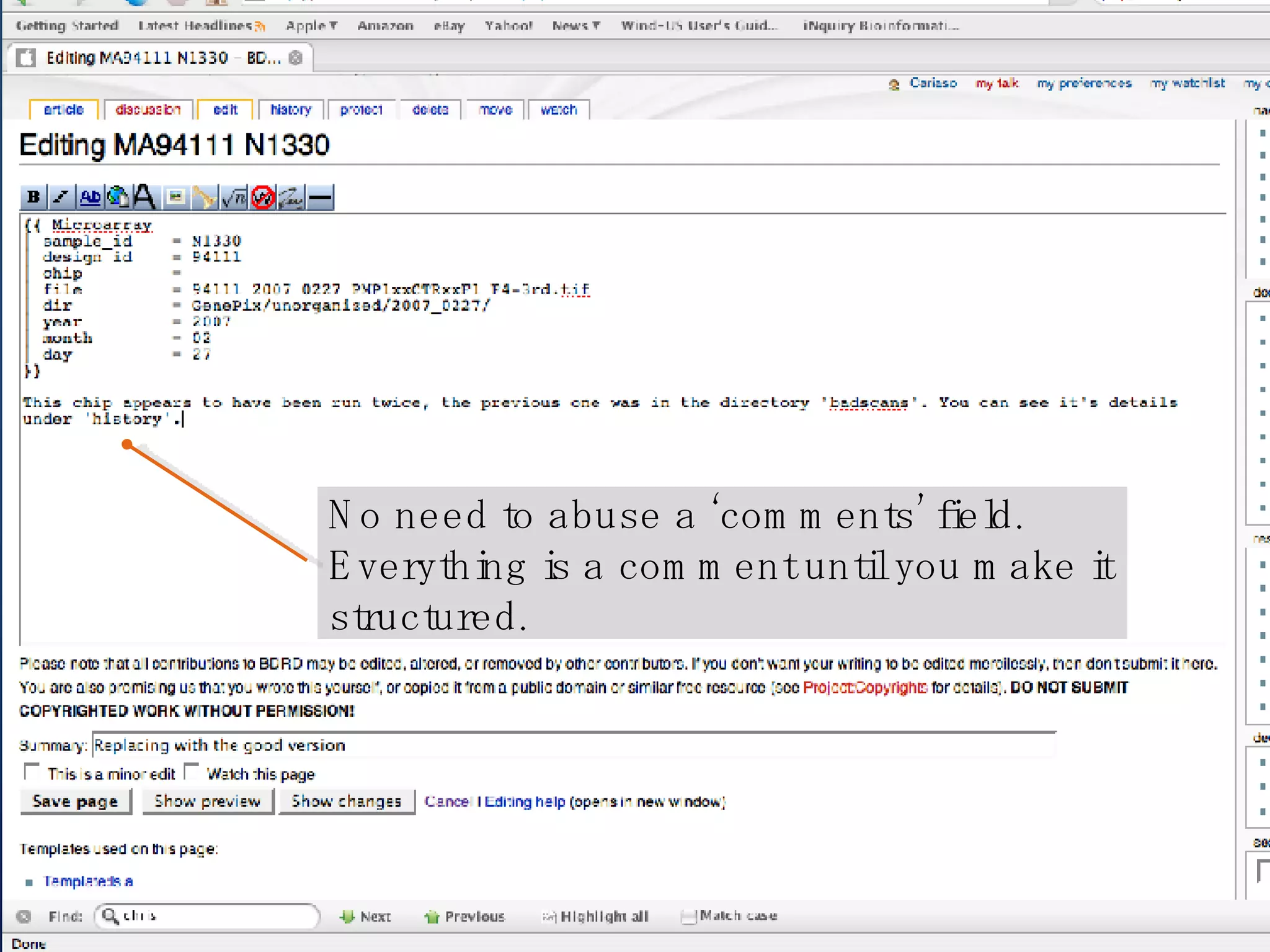

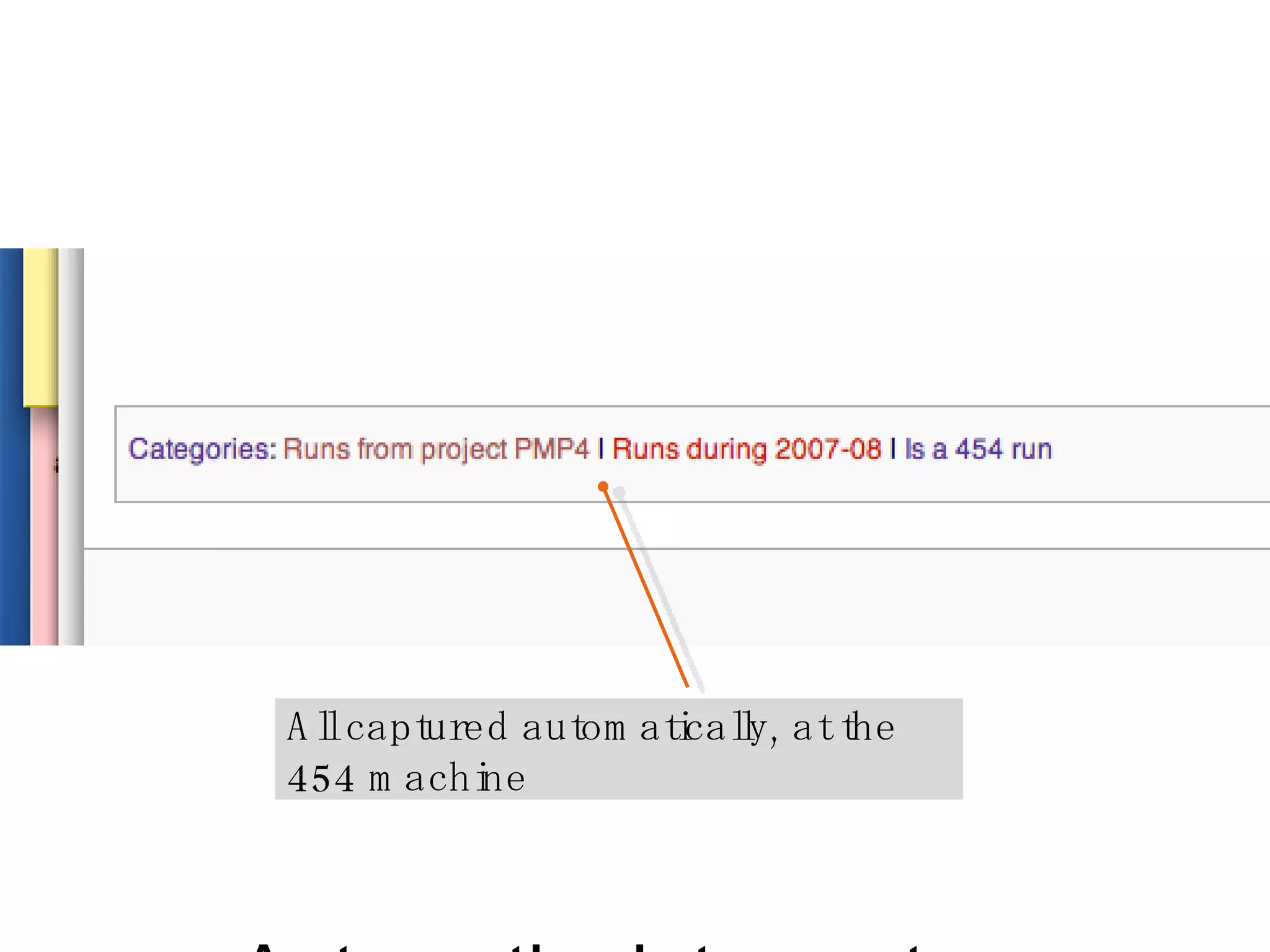

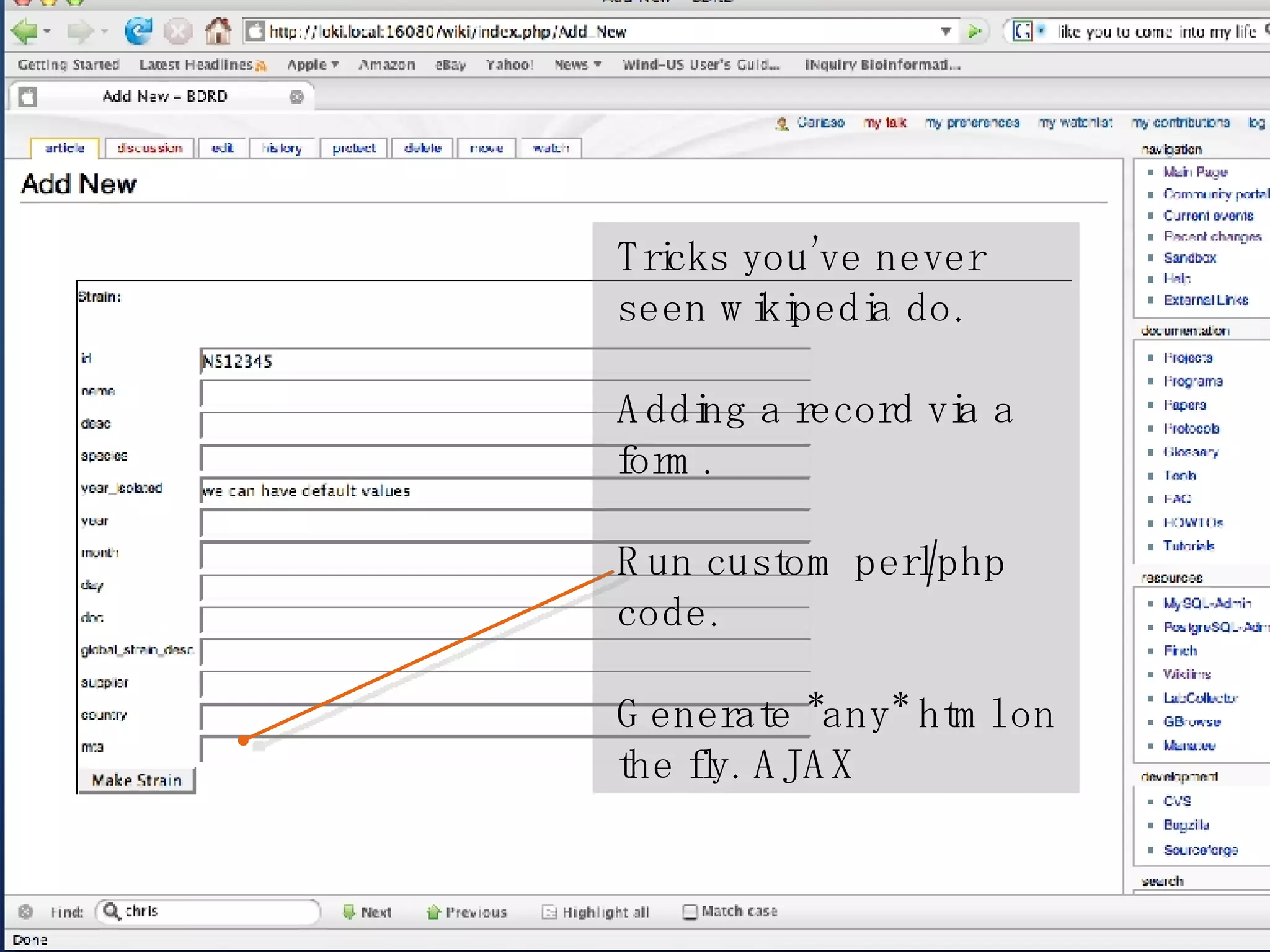

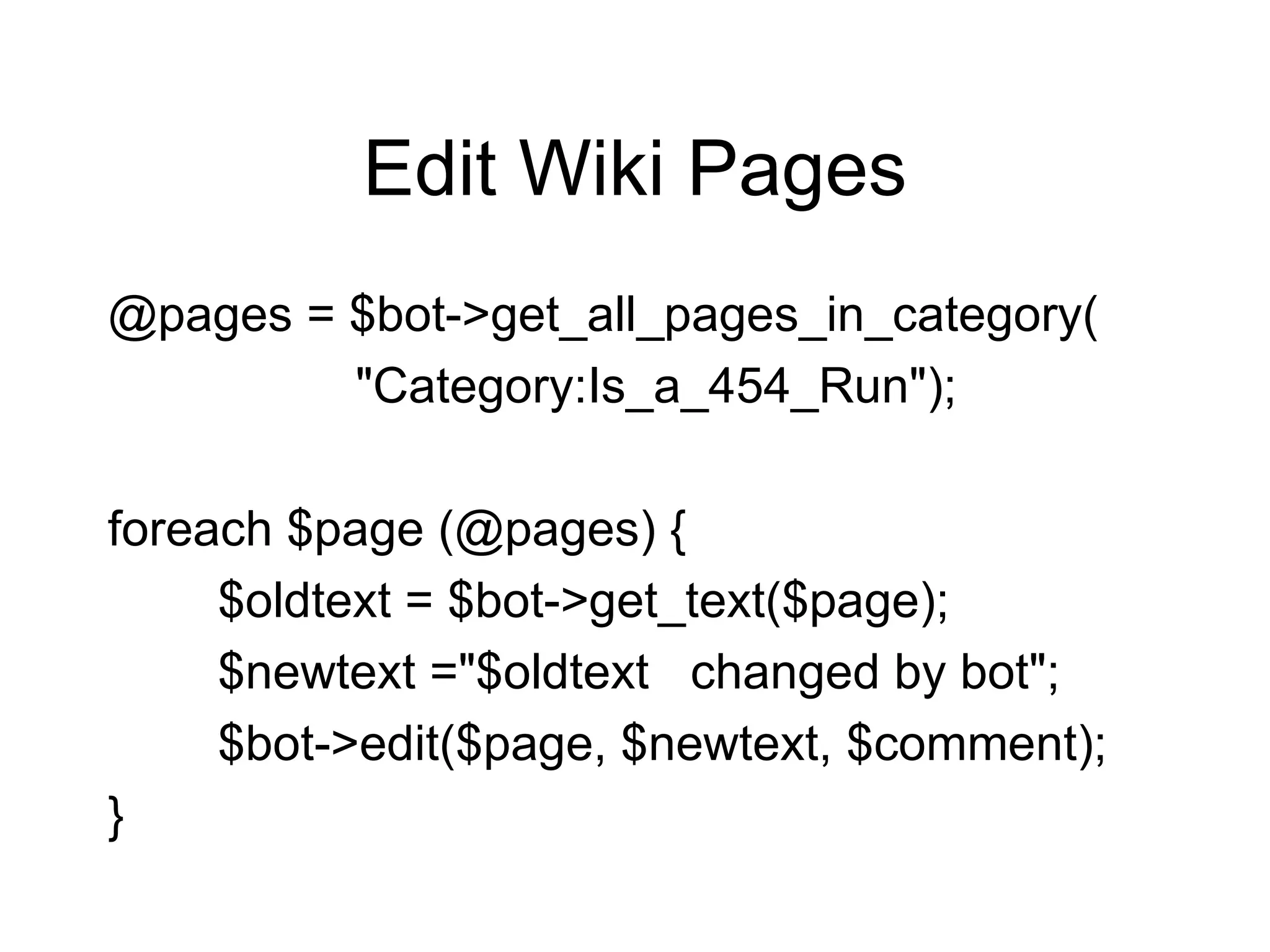

3) The wiki can be accessed and edited through a web browser, and it can be customized with Perl scripts that read and write wiki pages programmatically.
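The slides do not show the scripts themselves, so the following is only a minimal sketch of the text-handling half of such a script, written in Python rather than Perl. It assumes a hypothetical page layout in which each sample's metadata is stored as bullet lines of the form `* key: value`; the function names `render_sample_page` and `parse_sample_page` are illustrative, and fetching or saving the page through the wiki is deliberately left out because the wiki's interface is not described.

```python
"""Round-trip sample metadata through a hypothetical wiki page layout.

Assumption (not from the slides): metadata lines look like "* key: value".
Only the text transformation is shown; reading/writing the page via the
wiki itself is out of scope here.
"""

from typing import Dict


def render_sample_page(sample_id: str, metadata: Dict[str, str]) -> str:
    """Build wiki page text for one sample (hypothetical layout)."""
    lines = [f"= Sample {sample_id} ="]
    for key in sorted(metadata):  # sorted so repeated saves give stable diffs
        lines.append(f"* {key}: {metadata[key]}")
    return "\n".join(lines) + "\n"


def parse_sample_page(text: str) -> Dict[str, str]:
    """Recover key/value metadata from page text in the layout above.

    Keys must not contain ": " and values must not contain newlines.
    """
    metadata: Dict[str, str] = {}
    for line in text.splitlines():
        if line.startswith("* ") and ": " in line:
            key, value = line[2:].split(": ", 1)  # split at the first ": " only
            metadata[key.strip()] = value.strip()
    return metadata


if __name__ == "__main__":
    page = render_sample_page("S001", {"organism": "E. coli", "platform": "454"})
    print(page)
    assert parse_sample_page(page) == {"organism": "E. coli", "platform": "454"}
```

Keeping the metadata in plain, line-oriented wiki text means a human can still edit the same page in the browser, and the wiki's own version history records every change a script makes.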

![The 454 Solution: a single strict [A-Z0-9]+ field, intended as an external primary key; makes sample tracking an upstream problem; part of the results directory name R_TIMESTAMP_MACHINEID_USER_YOURFIELD; a clean technical solution](https://image.slidesharecdn.com/wikilims-road41141/75/Wikilims-Road4-6-2048.jpg)
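Because the 454 field is restricted to `[A-Z0-9]+`, it can never contain an underscore, so it can be recovered from a results directory name as the last underscore-separated component, whatever the exact layout of the timestamp, machine ID, and user parts. The sketch below relies only on that property. The function names and the example directory name are hypothetical, and the `R_` prefix check is the only other assumption taken from the slide's naming pattern.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List

# The slide's only rule for the user-supplied field: one or more A-Z or 0-9.
FIELD_RE = re.compile(r"[A-Z0-9]+")


def is_valid_field(value: str) -> bool:
    """True if value satisfies the strict [A-Z0-9]+ rule."""
    return FIELD_RE.fullmatch(value) is not None


def field_from_run_dir(dir_name: str) -> str:
    """Extract YOURFIELD from a name like R_TIMESTAMP_MACHINEID_USER_YOURFIELD.

    The field cannot contain "_", so it is everything after the last "_".
    """
    if not dir_name.startswith("R_"):
        raise ValueError(f"not a run directory name: {dir_name!r}")
    _, field = dir_name.rsplit("_", 1)
    if not is_valid_field(field):
        raise ValueError(f"invalid sample field: {field!r}")
    return field


def runs_by_field(dir_names: Iterable[str]) -> Dict[str, List[str]]:
    """Group run directory names by their external primary key."""
    index: Dict[str, List[str]] = defaultdict(list)
    for name in dir_names:
        index[field_from_run_dir(name)].append(name)
    return dict(index)


if __name__ == "__main__":
    # Hypothetical directory name, used only to illustrate the pattern.
    print(field_from_run_dir("R_2009_01_15_10_30_00_FLX01_jsmith_SAMPLE42"))
```

With the key embedded in every results directory name, a wiki page per field value can act as the join point between upstream sample tracking and the sequencer's output.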