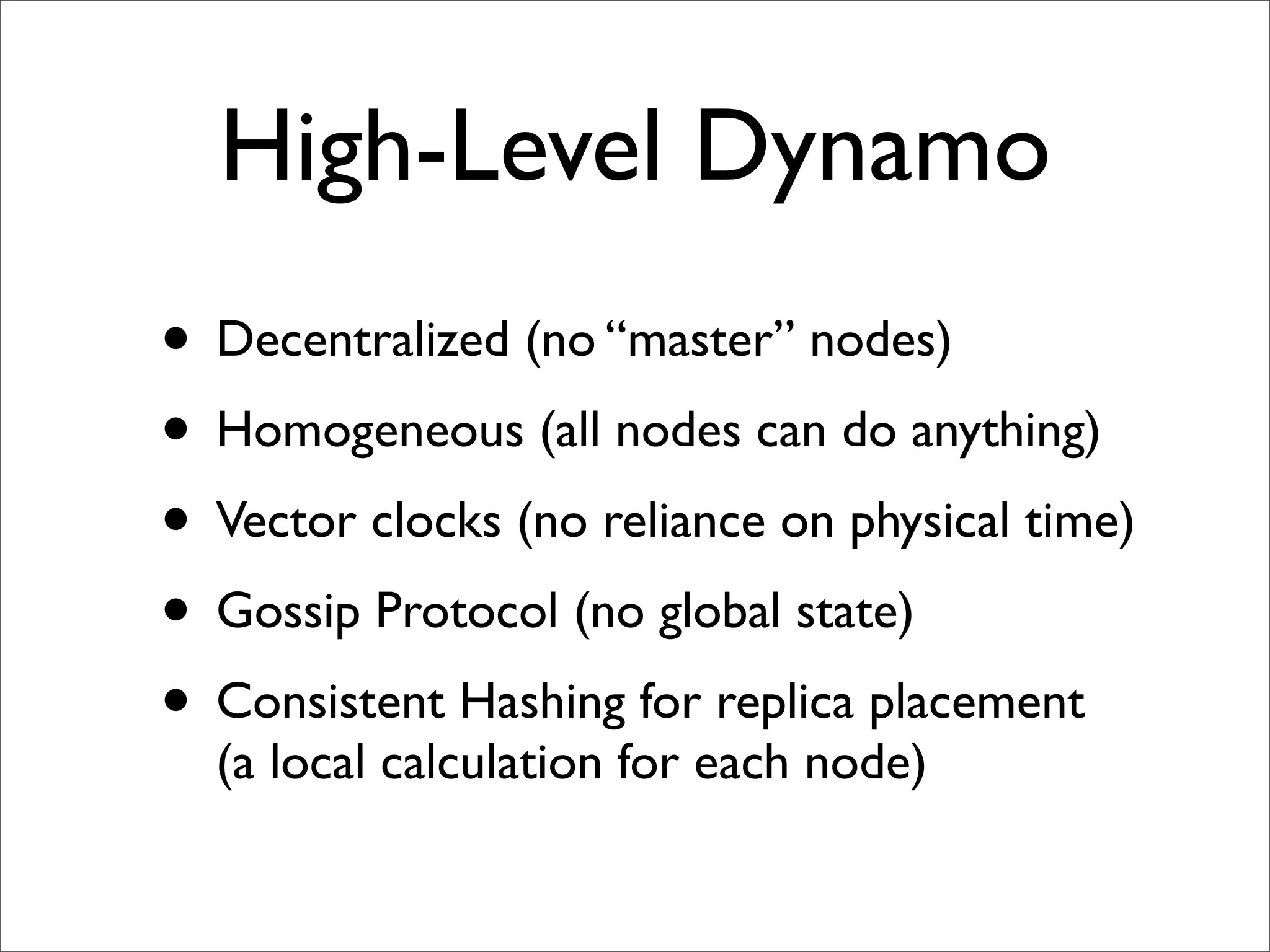

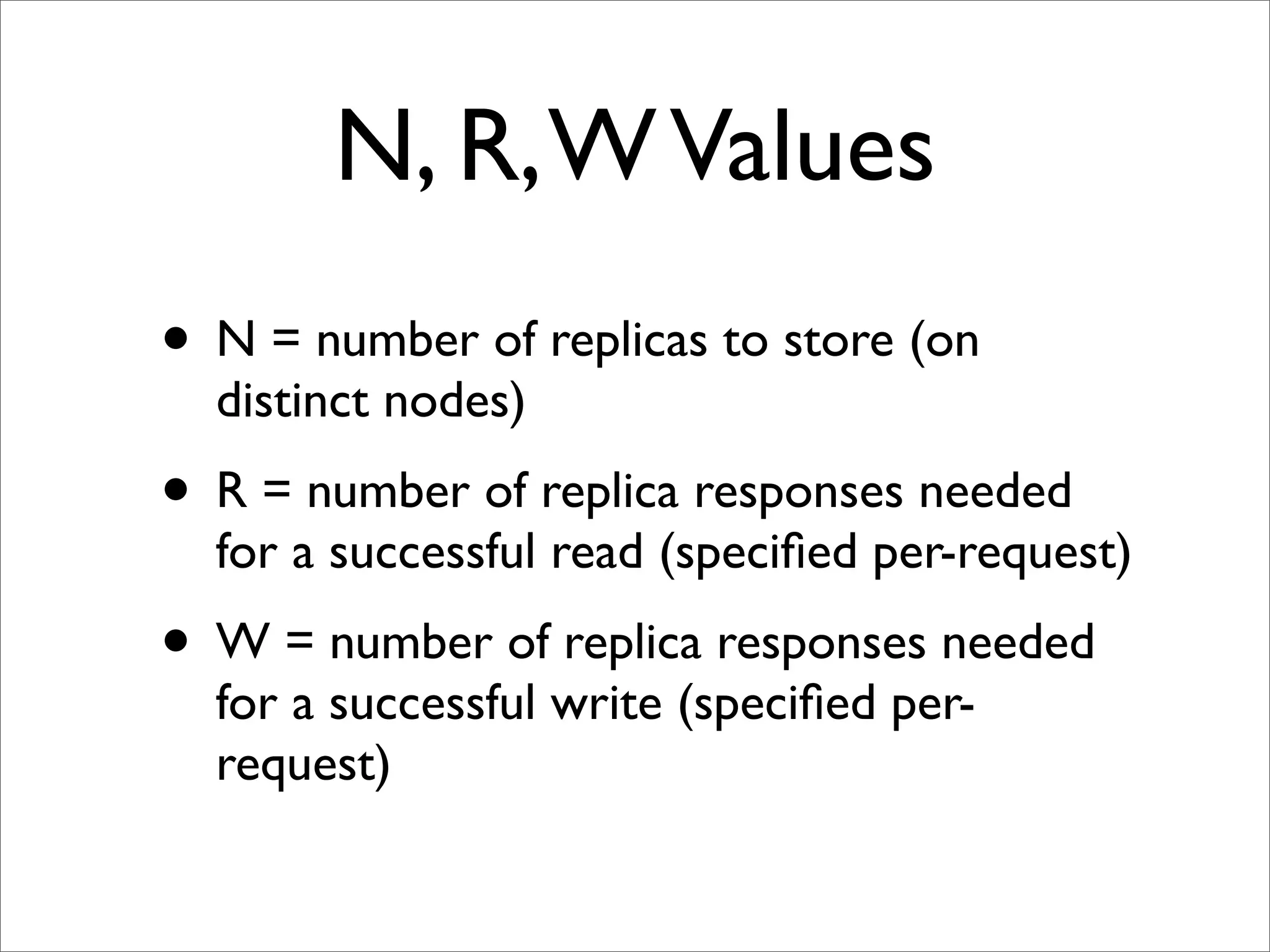

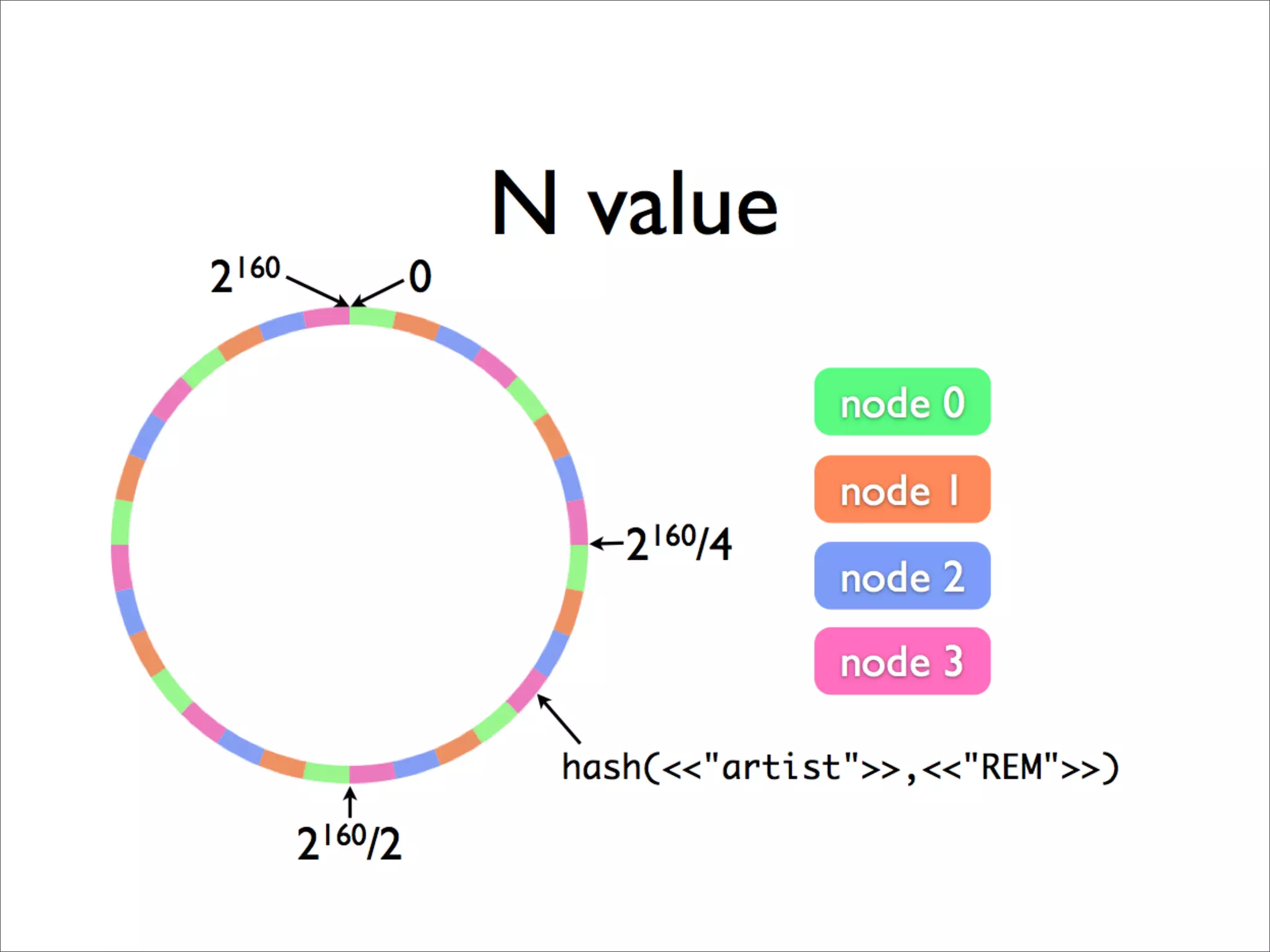

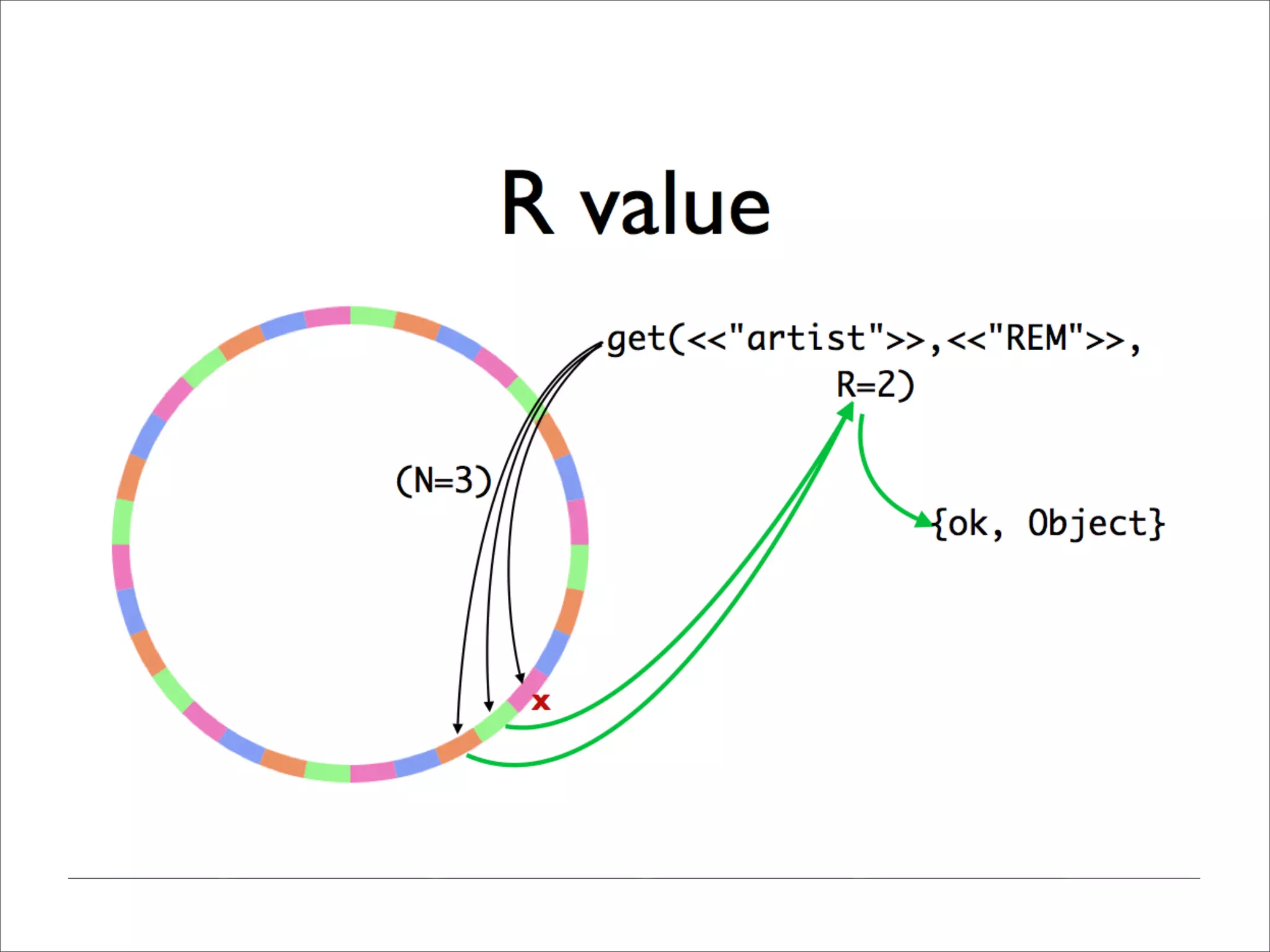

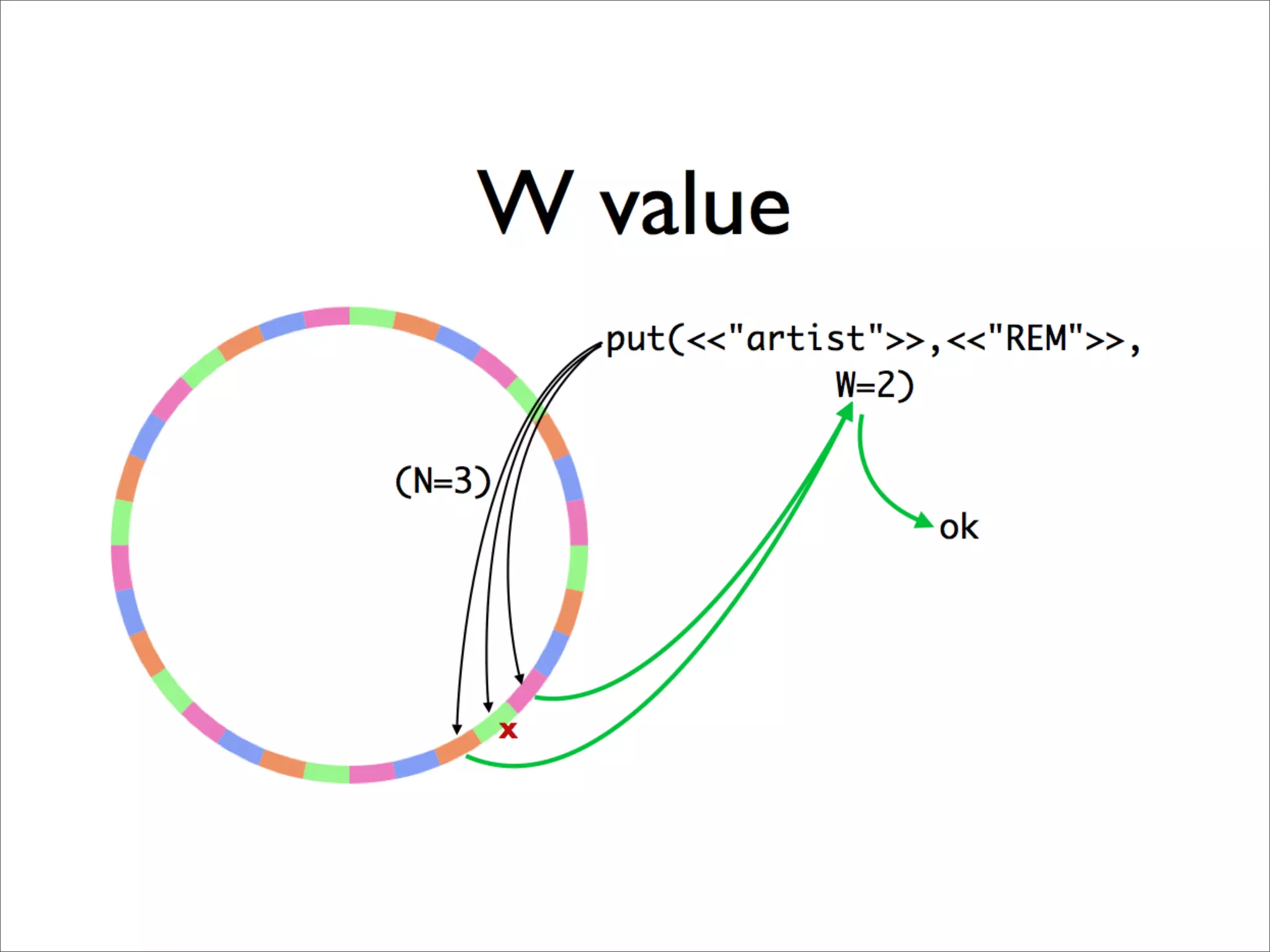

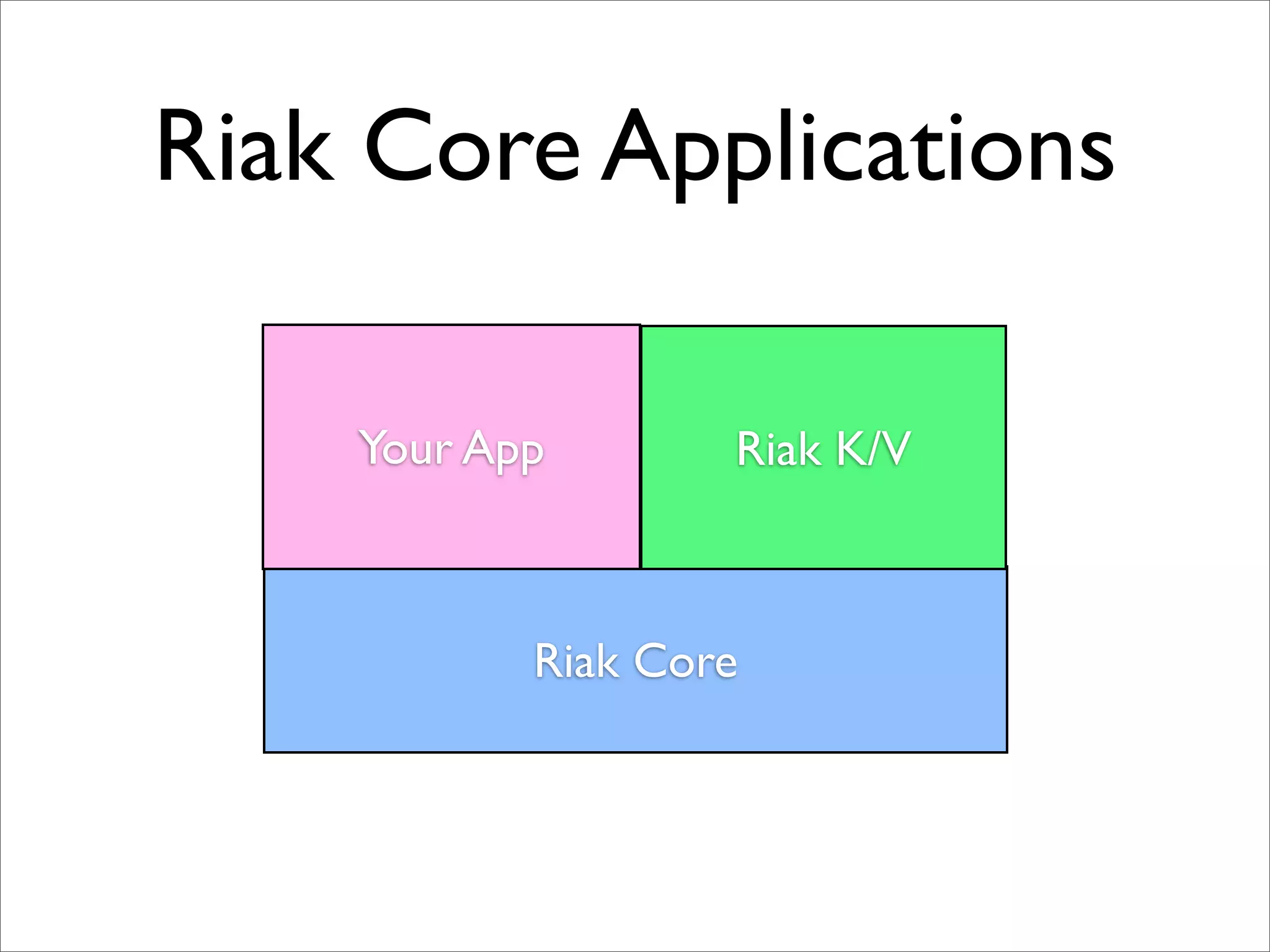

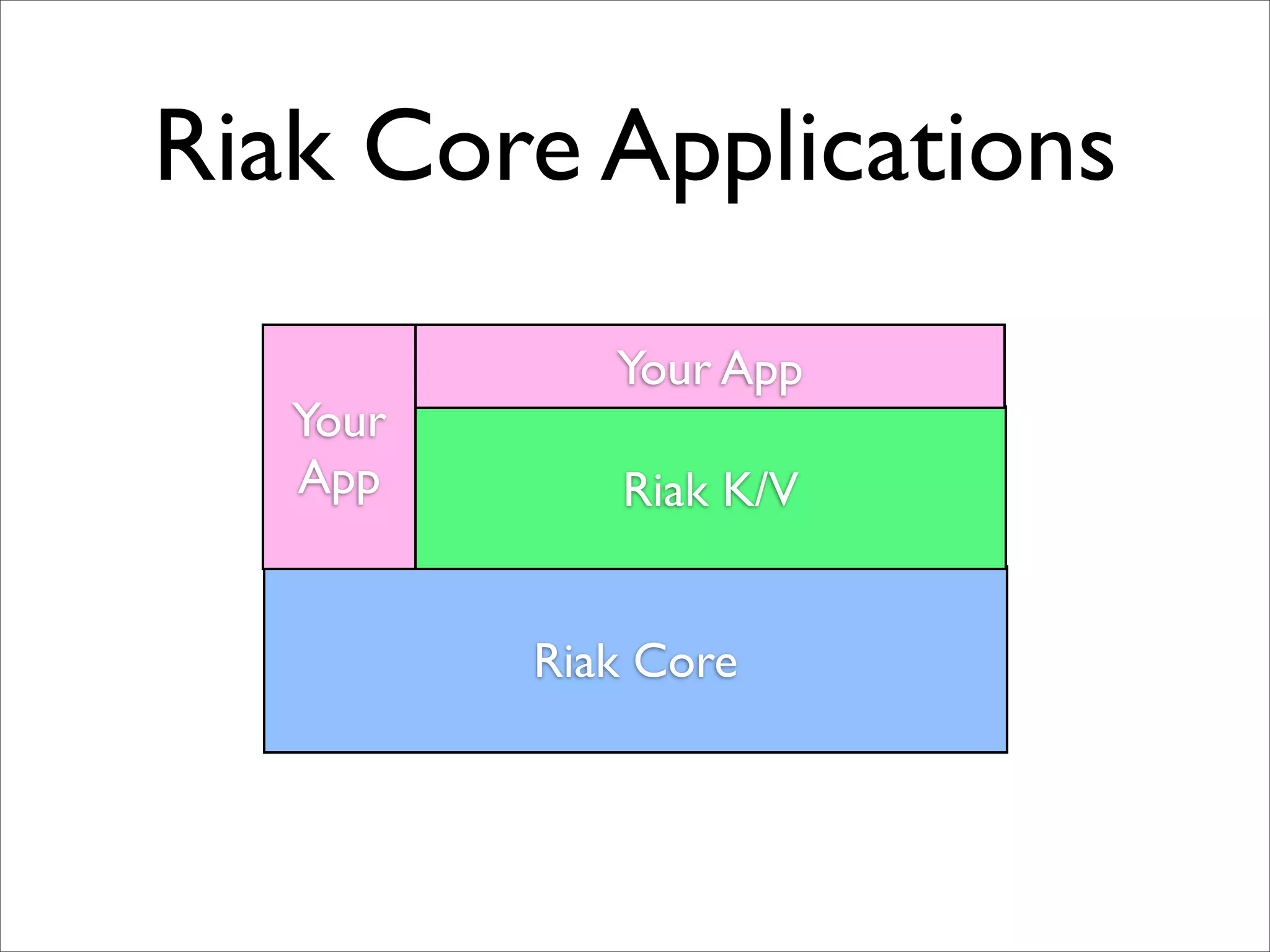

Andy Gross of Basho discusses Riak Core, an open source distributed systems framework extracted from Riak. Riak Core provides abstractions such as virtual nodes, preference lists, and event watchers that help developers build distributed applications. It is currently Erlang-only, with support for other languages planned. The goal is to let developers hand the complex parts of distributed systems work to the framework so they can build their own distributed systems more easily.
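
To make the virtual node and preference list abstractions more concrete, here is a minimal sketch in Python. It is not Riak Core's API, which is Erlang. The names `build_ring`, `key_hash`, and `preference_list` are hypothetical, and the round-robin partition assignment and SHA-1 hashing are illustrative assumptions, not a description of how the framework claims partitions.

```python
import hashlib

RING_SIZE = 2 ** 160  # size of the SHA-1 output space (assumed hash function)


def build_ring(nodes, num_partitions):
    """Split the hash space into equal partitions (virtual nodes) and
    assign them to physical nodes round-robin (an illustrative policy)."""
    step = RING_SIZE // num_partitions
    return [(i * step, nodes[i % len(nodes)]) for i in range(num_partitions)]


def key_hash(key):
    """Map a string key to an integer position on the ring."""
    digest = hashlib.sha1(key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def preference_list(ring, key, n):
    """Return the n consecutive (partition_start, node) pairs that would
    hold replicas of `key`, beginning with the partition containing its hash."""
    step = RING_SIZE // len(ring)
    first = (key_hash(key) // step) % len(ring)
    count = min(n, len(ring))  # never return the same partition twice
    return [ring[(first + i) % len(ring)] for i in range(count)]


if __name__ == "__main__":
    ring = build_ring(["node-a", "node-b", "node-c"], num_partitions=8)
    for partition_start, node in preference_list(ring, "user:42", n=3):
        print(hex(partition_start), node)
```

Because the number of partitions is fixed and larger than the number of physical nodes, each machine hosts several virtual nodes. In this sketch a preference list can contain the same physical node more than once; a real system would need a placement policy that avoids that.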