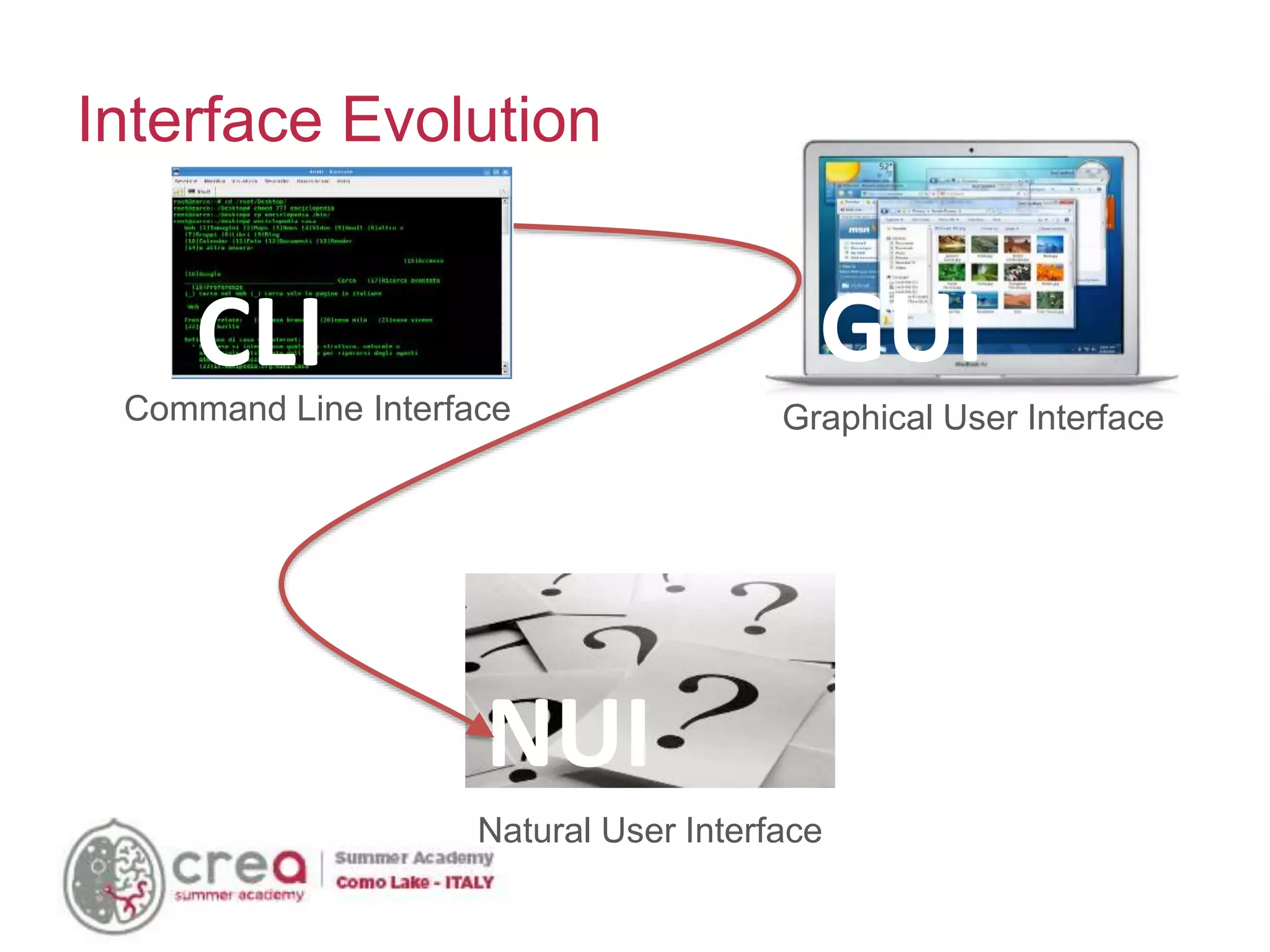

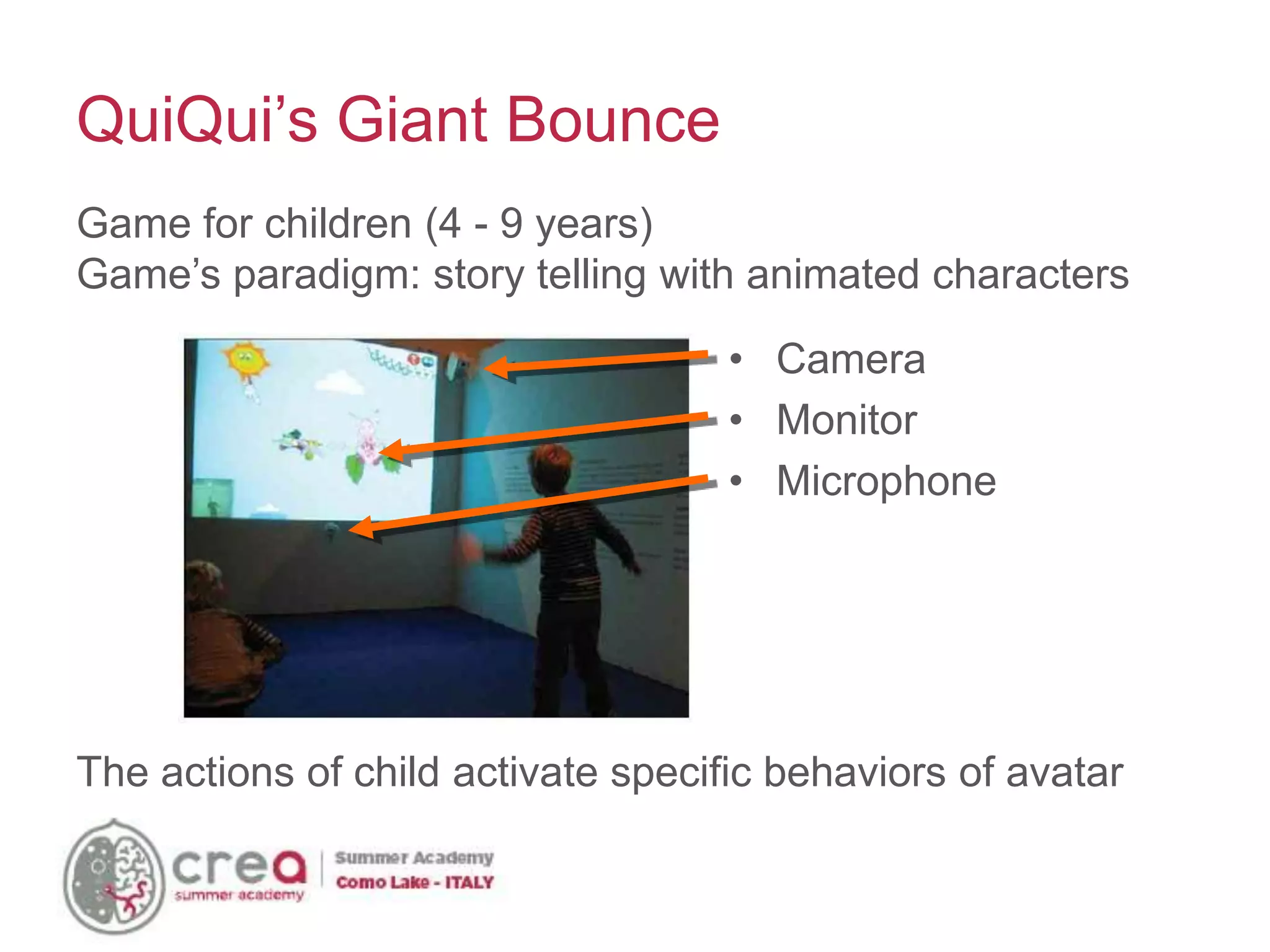

The document discusses advancements in natural user interfaces (NUI) and the evolution from traditional user interfaces, emphasizing the shift towards smart computing and ambient intelligence. It explores various input methods such as gesture recognition, facial recognition, and multi-touch technologies, while addressing design considerations and usability challenges. Additionally, it highlights the significance of creating collaborative, user-centered experiences through the integration of diverse technologies.

![Costs](https://image.slidesharecdn.com/nui-151111150638-lva1-app6892/75/Natural-User-Interfaces-54-2048.jpg)

| Device | Cost | Buy link |
|---|---|---|
| Kinect 1 | 100€ | [???] |
| Kinect 2 | 150€ | http://goo.gl/rskPuD |
| Real Sense | 99$ | http://goo.gl/G67TVy |
| Leap Motion | 90€ | http://goo.gl/zyVXZZ |
| Myo | 199$ | https://goo.gl/ubv6wV |
| EyeX | 99€ | http://goo.gl/oGD3Ds |