Hadoop Interview Questions

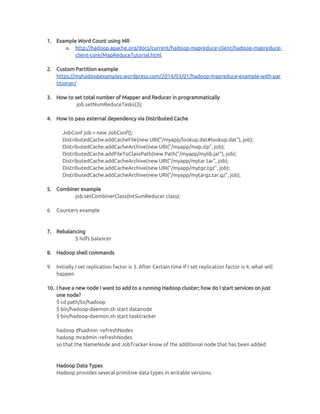

1. Example: Word Count using MapReduce
   http://hadoop.apache.org/docs/current/hadoop-mapreduce-client/hadoop-mapreduce-client-core/MapReduceTutorial.html
2. Custom partitioner example
   https://myhadoopexamples.wordpress.com/2014/03/01/hadoop-mapreduce-example-with-partitioner/
3. How to set the number of mappers and reducers programmatically

       job.setNumReduceTasks(3);

   Only the reducer count can be set directly this way; the number of map tasks follows from the number of input splits.
4. How to pass an external dependency via the Distributed Cache

       JobConf job = new JobConf();
       DistributedCache.addCacheFile(new URI("/myapp/lookup.dat#lookup.dat"), job);
       DistributedCache.addCacheArchive(new URI("/myapp/map.zip"), job);
       DistributedCache.addFileToClassPath(new Path("/myapp/mylib.jar"), job);
       DistributedCache.addCacheArchive(new URI("/myapp/mytar.tar"), job);
       DistributedCache.addCacheArchive(new URI("/myapp/mytgz.tgz"), job);
       DistributedCache.addCacheArchive(new URI("/myapp/mytargz.tar.gz"), job);

5. Combiner example

       job.setCombinerClass(IntSumReducer.class);

6. Counters example
7. Rebalancing

       $ hdfs balancer

8. Hadoop shell commands
9. The replication factor was initially set to 3. If it is later changed to 4, what happens?
10. I have a new node I want to add to a running Hadoop cluster; how do I start services on just one node?

        $ cd path/to/hadoop
        $ bin/hadoop-daemon.sh start datanode
        $ bin/hadoop-daemon.sh start tasktracker

    Then refresh the node lists so that the NameNode and JobTracker know of the additional node:

        hadoop dfsadmin -refreshNodes
        hadoop mradmin -refreshNodes

Hadoop Data Types

Hadoop provides several primitive data types in Writable versions.
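To make the idea of a Writable data type more concrete, here is a minimal sketch in plain Java. It assumes nothing beyond the JDK: `SketchWritable`, `IntBox`, and `WritableSketch` are hypothetical names introduced for illustration. The local interface only mirrors the two methods of Hadoop's `Writable` interface (`write(DataOutput)` and `readFields(DataInput)`) so the example runs without Hadoop on the classpath; a real job would use `IntWritable` and friends instead.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-in for org.apache.hadoop.io.Writable (same two methods),
// declared locally so the sketch compiles without Hadoop.
interface SketchWritable {
    void write(DataOutput out) throws IOException;
    void readFields(DataInput in) throws IOException;
}

// Hypothetical int wrapper in the spirit of IntWritable.
class IntBox implements SketchWritable {
    private int value;

    IntBox() {}                       // no-arg constructor, filled in by readFields
    IntBox(int value) { this.value = value; }

    int get() { return value; }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeInt(value);          // fixed 4-byte big-endian encoding
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        value = in.readInt();
    }
}

class WritableSketch {
    // Serialize any SketchWritable to a byte array.
    static byte[] serialize(SketchWritable w) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            w.write(out);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Write an int, then read it back into a fresh instance.
    static int roundTrip(int value) {
        byte[] data = serialize(new IntBox(value));
        IntBox copy = new IntBox();
        try {
            copy.readFields(new DataInputStream(new ByteArrayInputStream(data)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return copy.get();
    }

    public static void main(String[] args) {
        System.out.println(serialize(new IntBox(42)).length); // 4
        System.out.println(roundTrip(42));                     // 42
    }
}
```

The point of the pattern is that the object controls its own compact binary encoding and can be refilled from a stream, which is what lets the framework move keys and values between tasks.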

- What are the main configuration parameters that a user needs to specify to run a MapReduce job?

  The user of the MapReduce framework needs to specify:

  - Job’s input locations in the distributed file system
  - Job’s output location in the distributed file system
  - Input format
  - Output format
  - Class containing the map function
  - Class containing the reduce function
  - JAR file containing the mapper, reducer and driver classes

- Explain what SequenceFileInputFormat is.

  SequenceFileInputFormat is used for reading sequence files. A sequence file is a binary key-value file format, optionally compressed, that is optimized for passing data from the output of one MapReduce job to the input of another MapReduce job.

- Common input formats defined in Hadoop

  a. TextInputFormat
  b. KeyValueTextInputFormat
  c. SequenceFileInputFormat

- What is an InputSplit in Hadoop?

  When a Hadoop job runs, it splits the input files into chunks and assigns each chunk to a mapper for processing. Each such chunk is called an InputSplit.

- What is the purpose of the RecordReader in Hadoop?

  The InputSplit defines a slice of work but does not describe how to access it. The RecordReader class actually loads the data from its source and converts it into (key, value) pairs suitable for reading by the Mapper. The RecordReader instance is defined by the input format.

- After the map phase finishes, the Hadoop framework performs “partitioning, shuffle and sort”. Explain what happens in this phase.
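As a rough illustration of the partitioning, shuffle and sort phase asked about above, the following plain-Java sketch simulates it in memory. It is an assumption-laden toy, not Hadoop code: `ShuffleSketch`, `partitionFor`, and `shuffle` are hypothetical names, and `String` keys stand in for Hadoop key types (whose `hashCode` differs). The partition formula is the one used by Hadoop's default `HashPartitioner`; a `TreeMap` per reducer stands in for the sort and group-by-key step.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class ShuffleSketch {
    // Partitioning: same formula as Hadoop's default HashPartitioner.
    // Masking with Integer.MAX_VALUE keeps the result non-negative.
    static int partitionFor(String key, int numReducers) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReducers;
    }

    // Shuffle and sort: route each (key, value) pair to its reducer's partition,
    // where a TreeMap keeps keys sorted and groups the values per key.
    static List<TreeMap<String, List<Integer>>> shuffle(
            List<Map.Entry<String, Integer>> mapOutput, int numReducers) {
        List<TreeMap<String, List<Integer>>> partitions = new ArrayList<>();
        for (int i = 0; i < numReducers; i++) {
            partitions.add(new TreeMap<>());
        }
        for (Map.Entry<String, Integer> pair : mapOutput) {
            int p = partitionFor(pair.getKey(), numReducers);
            partitions.get(p)
                      .computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                      .add(pair.getValue());
        }
        return partitions;
    }

    public static void main(String[] args) {
        // Pretend these pairs were emitted by word-count mappers.
        List<Map.Entry<String, Integer>> mapOutput = List.of(
                Map.entry("hadoop", 1), Map.entry("hive", 1),
                Map.entry("hadoop", 1), Map.entry("pig", 1));
        List<TreeMap<String, List<Integer>>> partitions = shuffle(mapOutput, 2);
        for (int i = 0; i < partitions.size(); i++) {
            // Each reducer sees its keys in sorted order with all values grouped.
            System.out.println("reducer " + i + " -> " + partitions.get(i));
        }
    }
}
```

The property worth noticing is that every occurrence of a given key lands in the same partition, so a single reducer receives all values for that key, already sorted by key.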