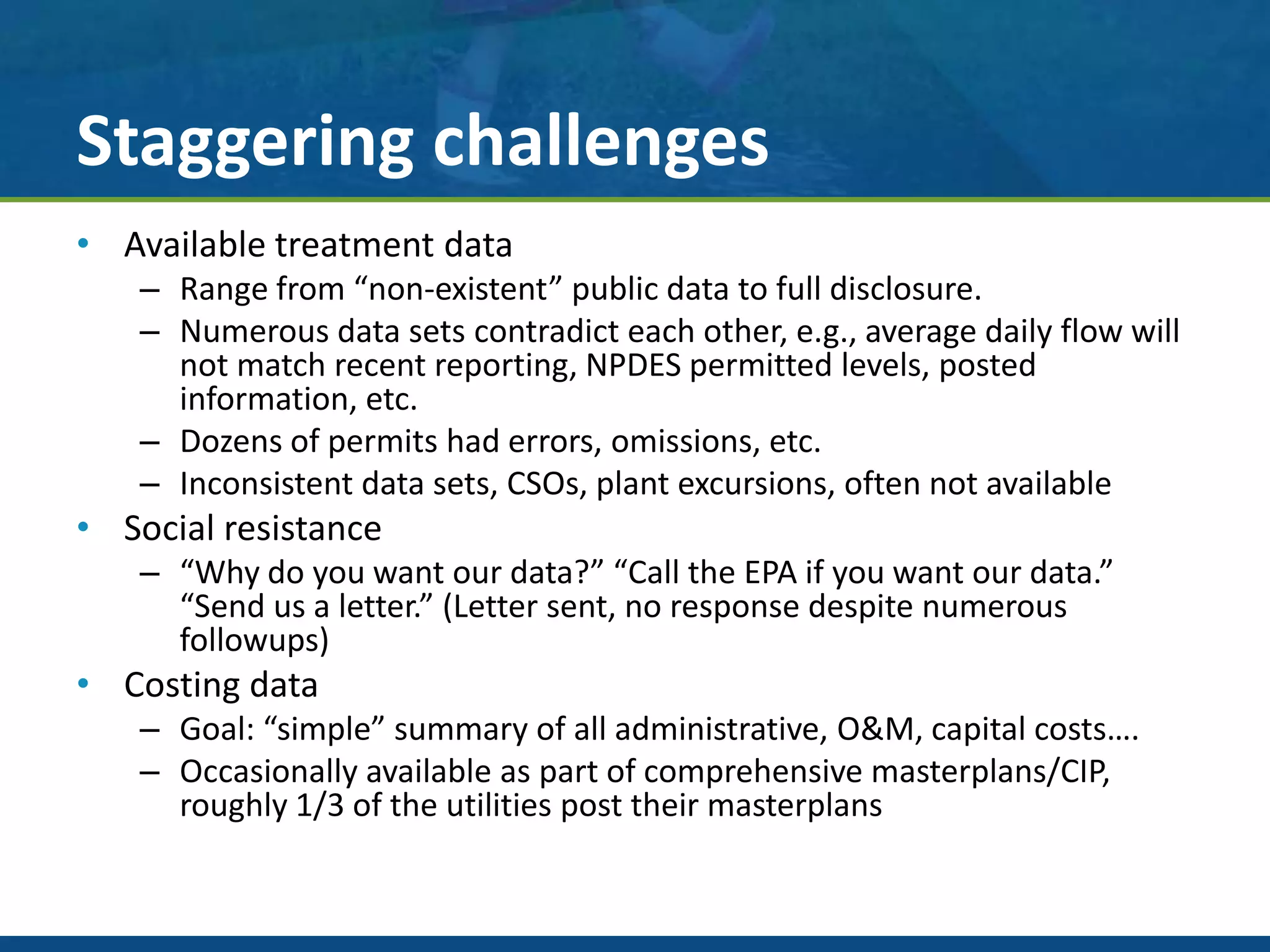

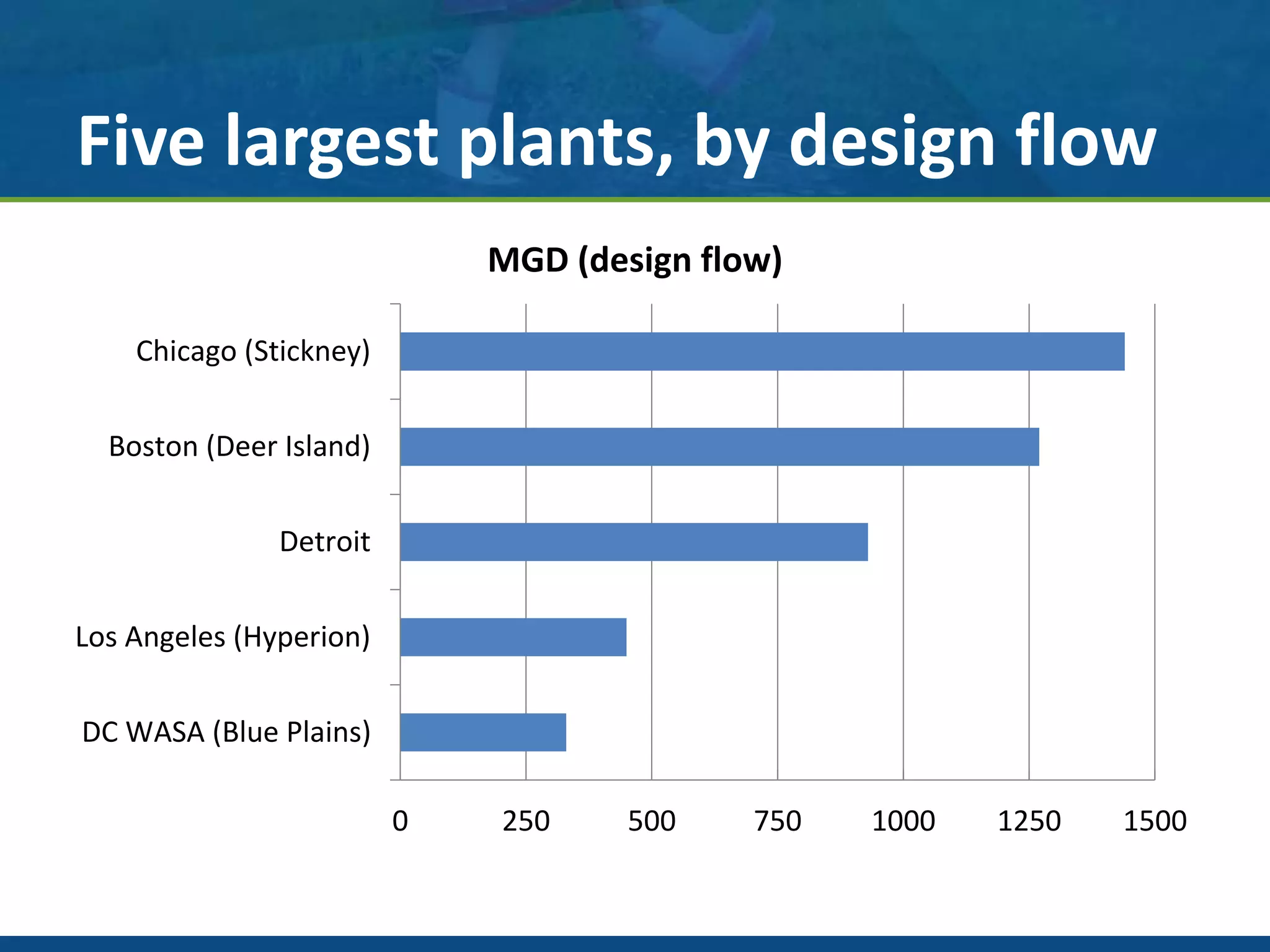

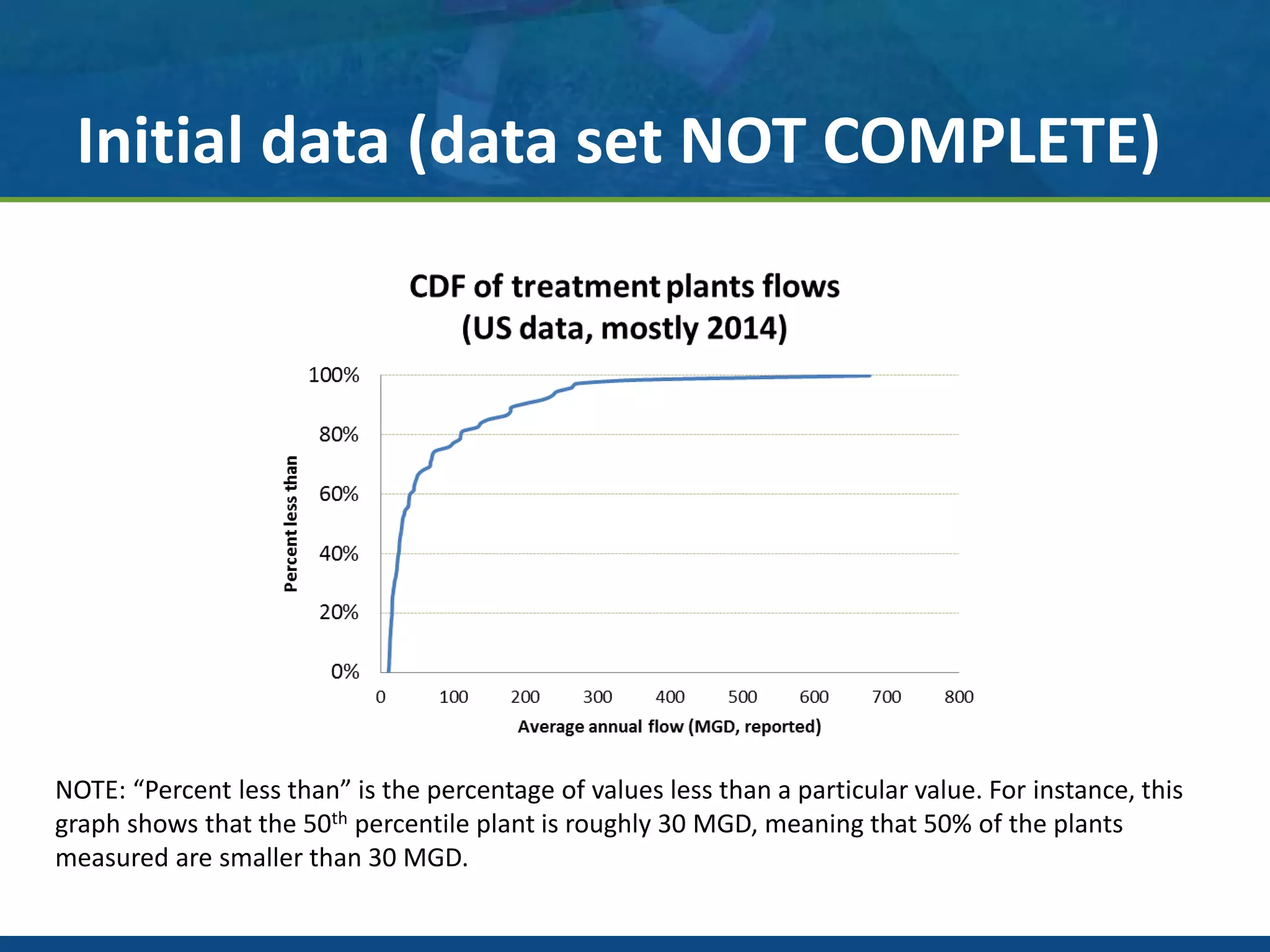

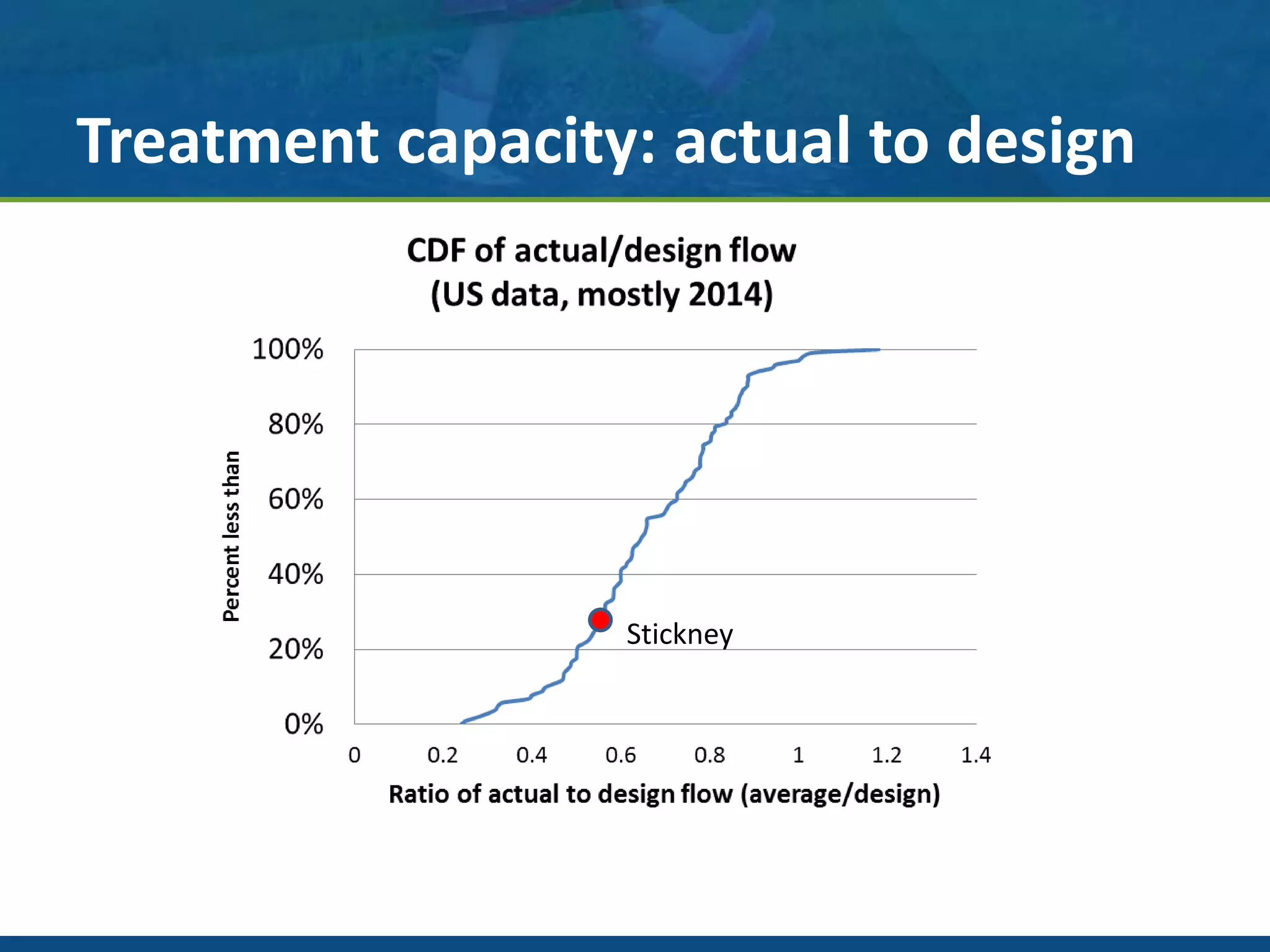

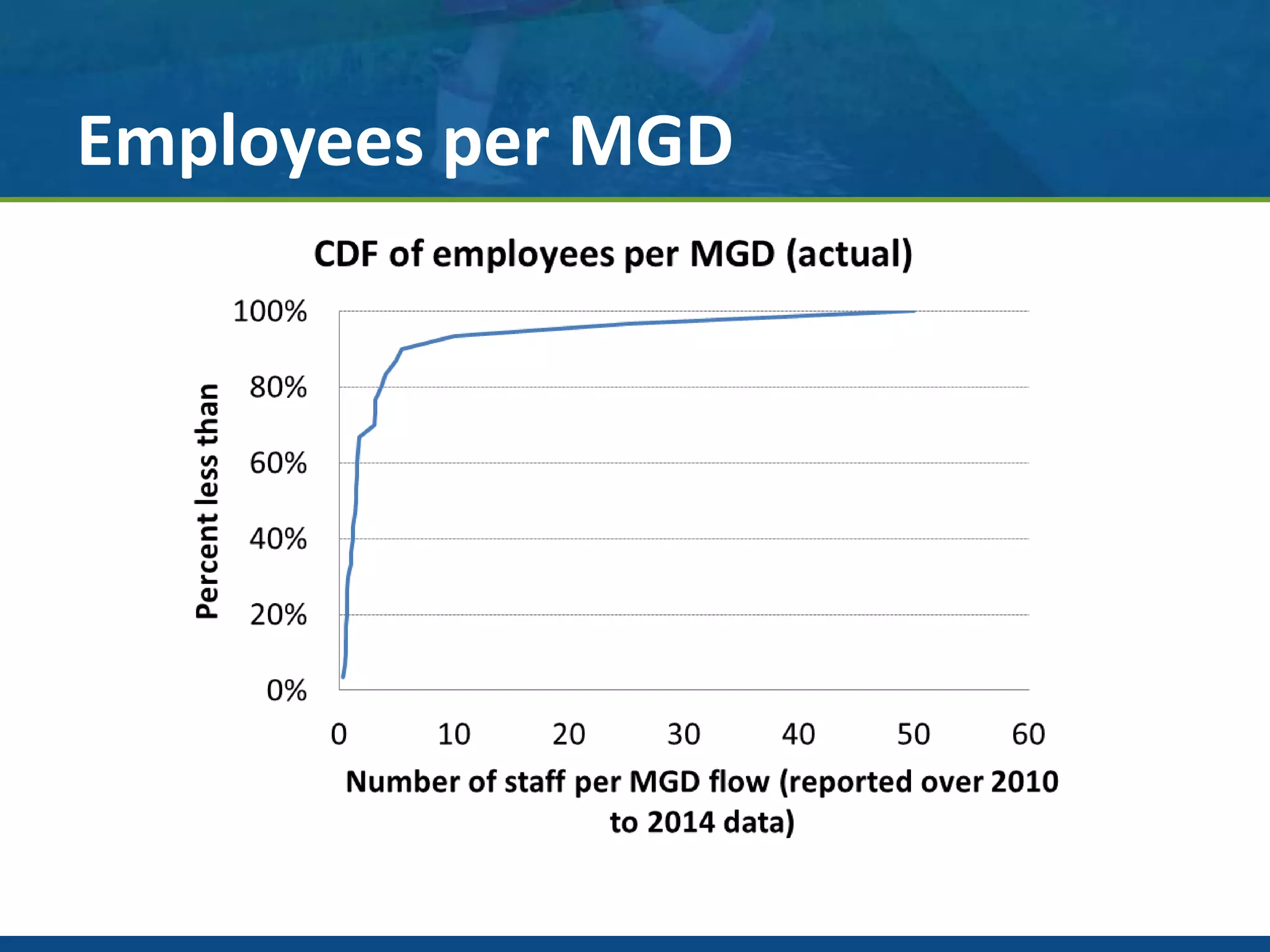

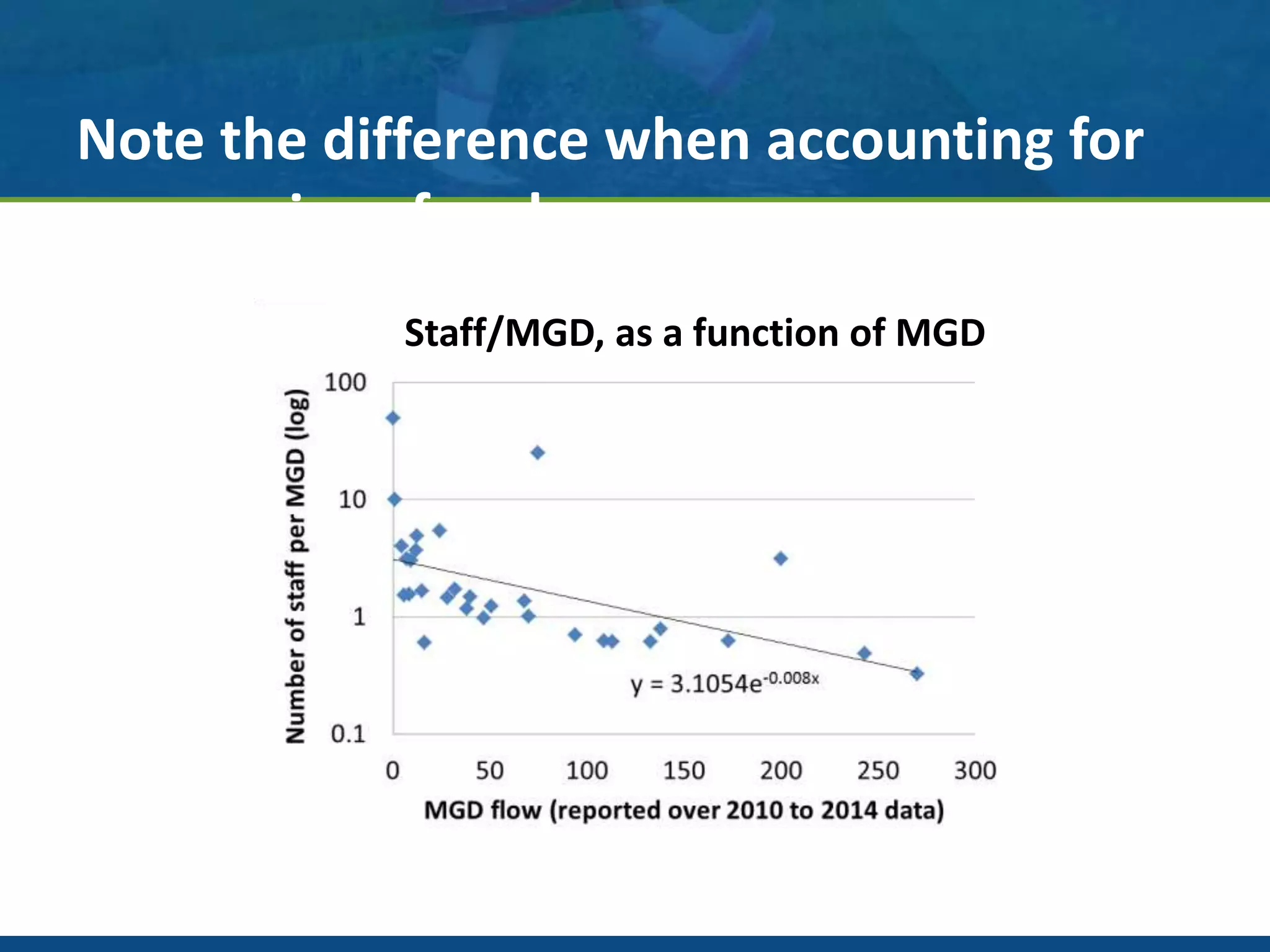

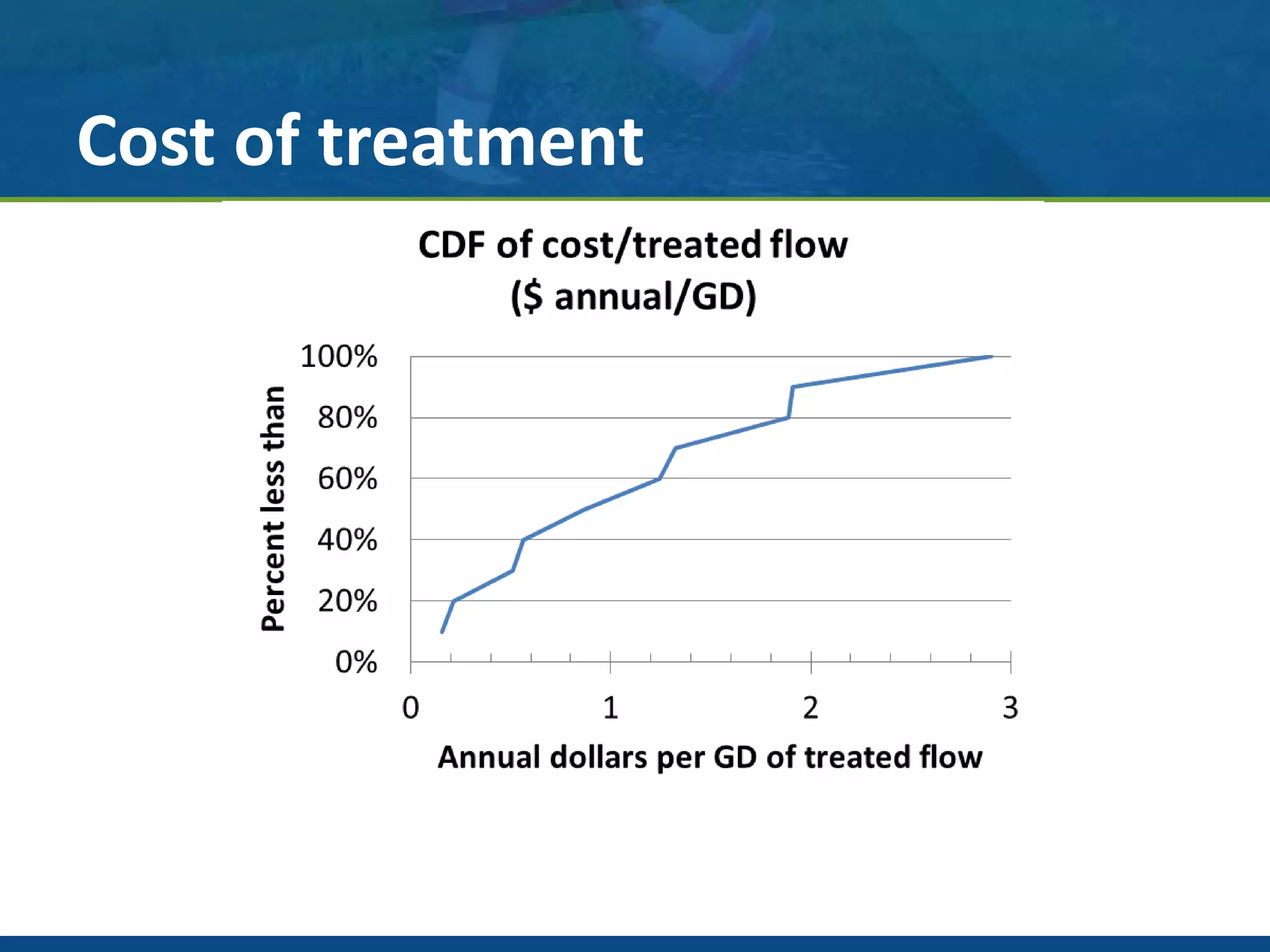

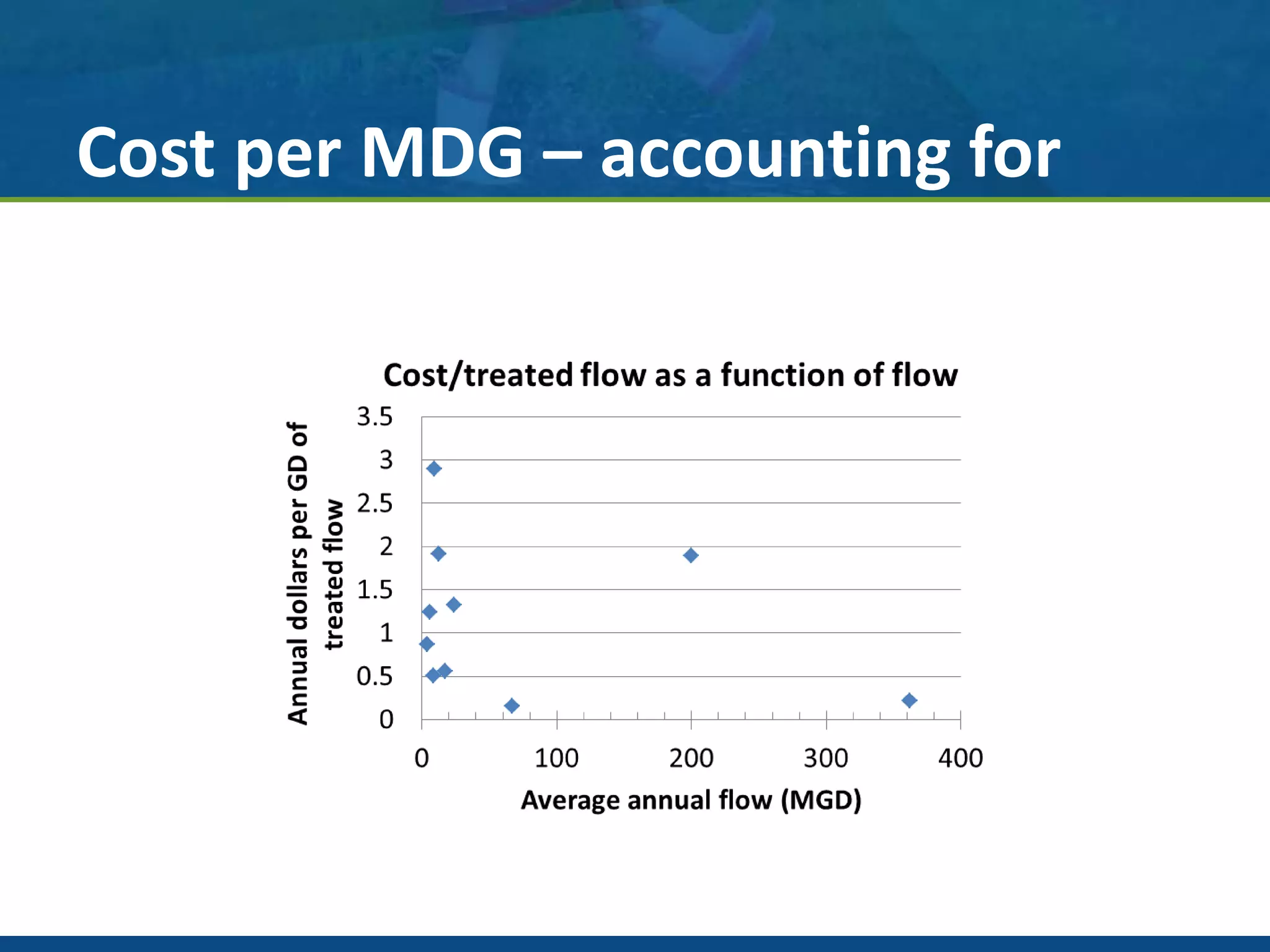

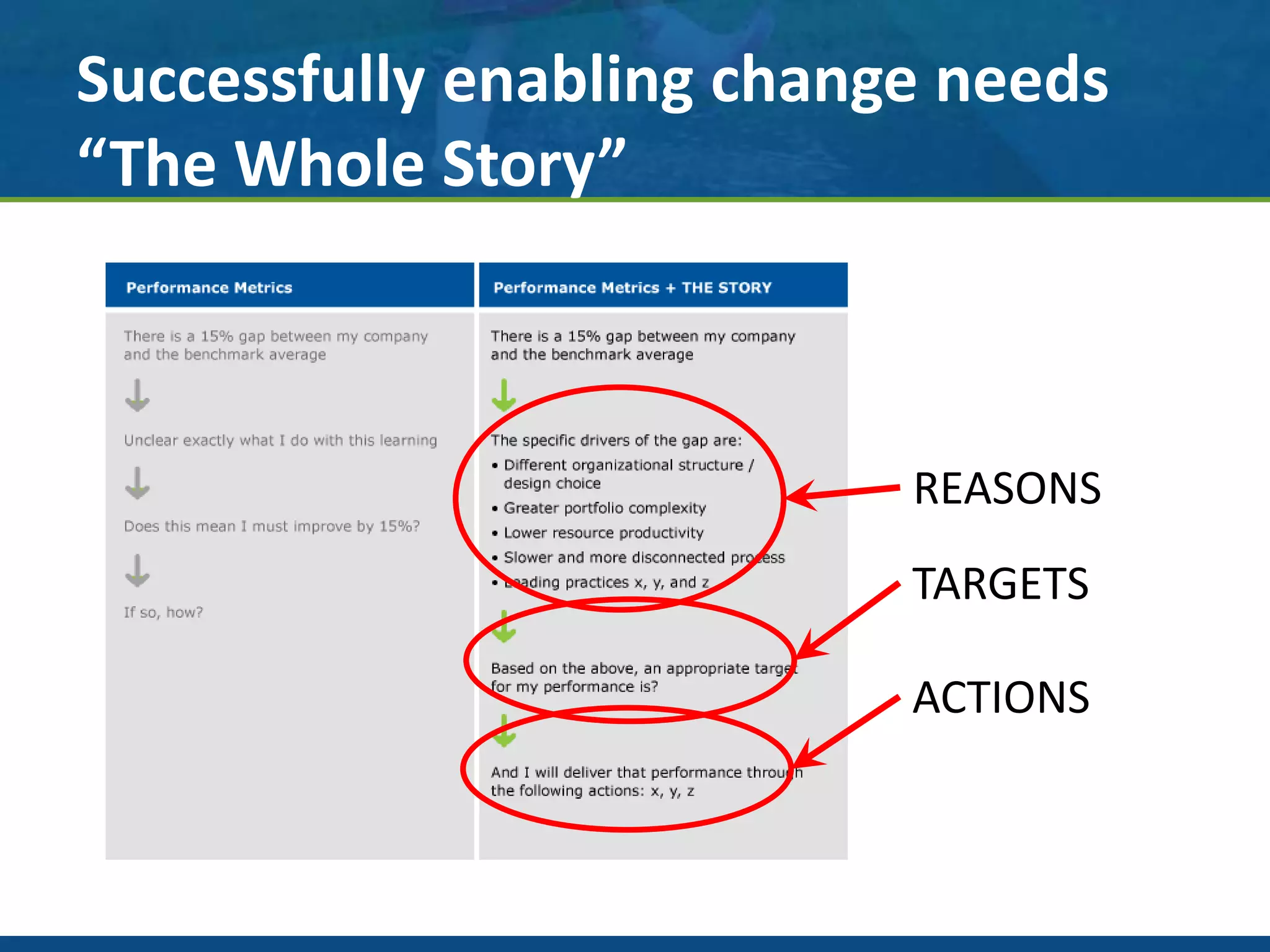

The document summarizes Dr. John Norton's effort to collect operational and financial benchmarking data from the 250 largest wastewater treatment plants in the US. His preliminary analysis found that complete data were available for only about 20% of these plants, because utilities were difficult to obtain data from. The data collected so far show wide variation between plants in treatment capacity, cost per million gallons treated, and staffing levels. Benchmarking these metrics across utilities could help identify best practices and innovations that improve performance, but the data collection process needs further refinement before a more complete benchmarking dataset can be built.
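Comparing plants of very different sizes requires normalizing raw figures, for example dividing cost by volume treated and staffing by average daily flow. The sketch below shows one way those ratios and a data-completeness share might be computed. It is illustrative only: the field names, the use of annual O&M cost as the cost basis, the staff-per-MGD ratio, and the sample values are hypothetical assumptions, not taken from Dr. Norton's dataset or method.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PlantRecord:
    # Hypothetical record layout; None marks a value the utility did not report.
    name: str
    annual_flow_mg: Optional[float]      # million gallons (MG) treated per year
    annual_om_cost_usd: Optional[float]  # assumed cost basis: annual O&M cost
    staff_fte: Optional[float]           # full-time-equivalent staff


def is_complete(p: PlantRecord) -> bool:
    """A plant counts as complete only if every benchmark field is reported."""
    return all(v is not None for v in (p.annual_flow_mg, p.annual_om_cost_usd, p.staff_fte))


def cost_per_mg(p: PlantRecord) -> float:
    """Dollars spent per million gallons treated."""
    return p.annual_om_cost_usd / p.annual_flow_mg


def staff_per_mgd(p: PlantRecord) -> float:
    """FTE staff per million gallons per day (MGD) of average flow."""
    average_mgd = p.annual_flow_mg / 365
    return p.staff_fte / average_mgd


def completeness_share(plants: List[PlantRecord]) -> float:
    """Fraction of plants with a complete benchmarking record."""
    return sum(is_complete(p) for p in plants) / len(plants)


# Hypothetical sample data, for illustration only.
plants = [
    PlantRecord("Plant A", 36_500, 73_000_000, 200),
    PlantRecord("Plant B", 18_250, None, 90),  # cost not reported
]

for p in plants:
    if is_complete(p):
        print(f"{p.name}: ${cost_per_mg(p):,.0f}/MG, {staff_per_mgd(p):.1f} FTE/MGD")
print(f"Complete records: {completeness_share(plants):.0%}")
```

Only complete records are normalized here, which mirrors the summary's point that comparisons are limited to the subset of plants with full data.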