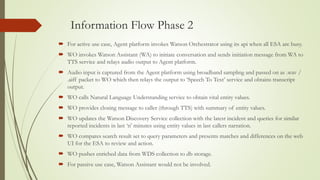

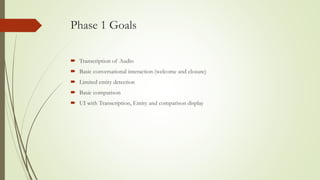

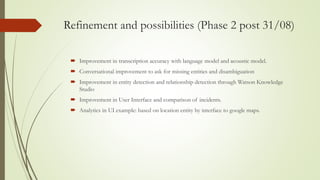

The document outlines a proposed solution for improving emergency service operations. It converts audio calls into text transcripts, identifies key entities in those transcripts, and matches each call against recent similar calls. The solution is intended to assist emergency service agents (ESAs) both when they are engaged and when they are busy, improving response times and the information gathered from each call. The document also details the solution's architecture and implementation phases, and highlights planned future enhancements to accuracy and the user interface.
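The entity-extraction and recent-call-matching steps can be illustrated with a small sketch. This is not the proposed architecture. It rests on several assumptions, none of which the summary specifies:

- Transcripts are assumed to arrive from an upstream speech-to-text step, which is not shown.
- The regex patterns are a hypothetical stand-in for whatever entity recognizer the solution actually uses.
- "Similar" is assumed to mean Jaccard overlap of the extracted entity sets.
- Matching is assumed to consider only calls received within a hypothetical 30-minute window.

All names (`Call`, `extract_entities`, `similar_recent_calls`) are illustrative.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical entity patterns; the source does not say which entity types are extracted.
ENTITY_PATTERNS = {
    "incident": re.compile(r"\b(fire|smoke|accident|crash|injur\w*|bleeding|unconscious)\b", re.I),
    "location": re.compile(r"\b\d+\s+\w+\s+(?:street|st|avenue|ave|road|rd)\b", re.I),
}


@dataclass
class Call:
    call_id: str
    received_at: datetime
    transcript: str  # assumed output of a speech-to-text step (not shown)


def extract_entities(transcript: str) -> set[str]:
    """Return normalized 'type:value' strings for every pattern match in the transcript."""
    found = set()
    for kind, pattern in ENTITY_PATTERNS.items():
        for match in pattern.finditer(transcript):
            found.add(f"{kind}:{match.group(0).lower()}")
    return found


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 when both sets are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def similar_recent_calls(new_call, history, window=timedelta(minutes=30), threshold=0.3):
    """Return (call_id, score) pairs for earlier calls inside the time window whose
    entity overlap with the new call meets the threshold, best match first."""
    new_entities = extract_entities(new_call.transcript)
    matches = []
    for past in history:
        age = new_call.received_at - past.received_at
        if timedelta(0) <= age <= window:  # only calls received before the new one, within the window
            score = jaccard(new_entities, extract_entities(past.transcript))
            if score >= threshold:
                matches.append((past.call_id, score))
    return sorted(matches, key=lambda m: m[1], reverse=True)


# Usage example with made-up calls.
now = datetime(2024, 1, 1, 12, 0)
history = [
    Call("A", now - timedelta(minutes=10), "There is smoke and fire at 12 Elm Street"),
    Call("B", now - timedelta(hours=2), "Fire at 12 Elm Street"),
    Call("C", now - timedelta(minutes=5), "Car crash on the highway"),
]
new_call = Call("N", now, "I can see fire coming from 12 Elm Street")
result = similar_recent_calls(new_call, history)
print(result)
```

In this example, call A is returned because it shares the incident and the address with the new call. Call B describes the same incident but falls outside the time window. Call C is recent but shares no entities. A production system would likely replace the regexes with a trained entity recognizer and tune the window and threshold. Those choices are left to the solution's architecture.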