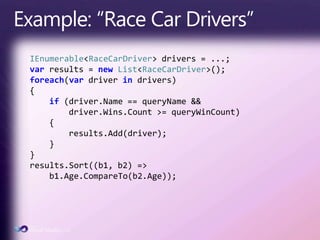

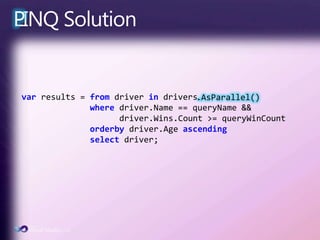

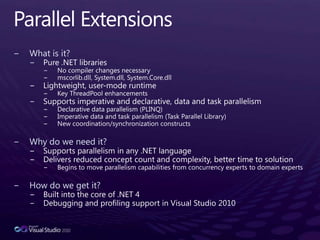

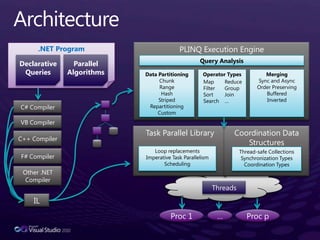

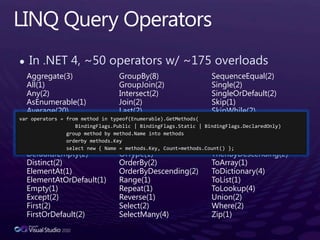

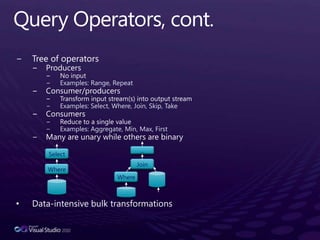

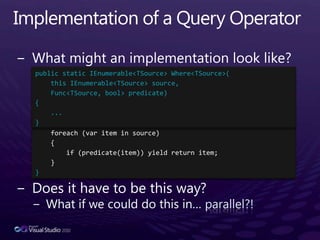

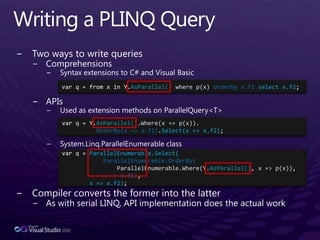

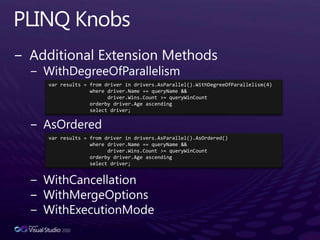

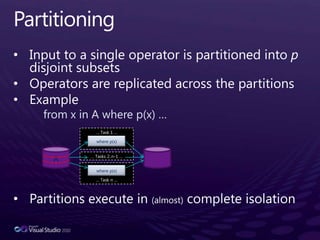

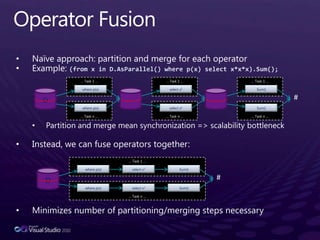

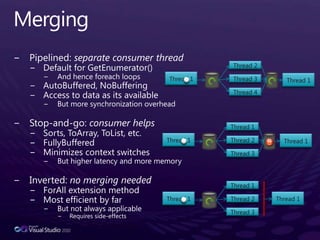

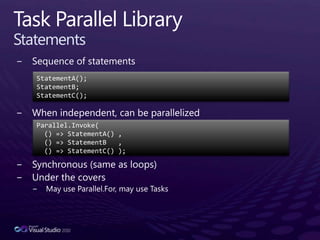

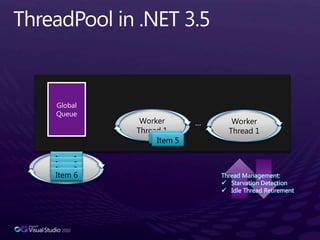

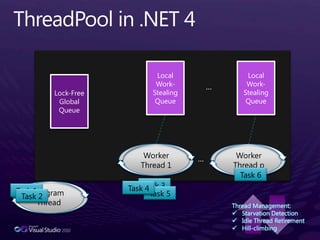

Visual Studio 2010 and .NET 4 provide new tools for parallel programming including Parallel LINQ (PLINQ) and the Task Parallel Library (TPL). PLINQ allows existing LINQ queries to run in parallel by partitioning and merging data across threads. The TPL simplifies writing multithreaded code through tasks that represent asynchronous and parallel operations. These new frameworks make it easier for developers to write scalable parallel code that takes advantage of multicore processors.
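As a rough illustration of the two libraries named above, the sketch below runs the same LINQ query sequentially and with `AsParallel()`, then splits a sum across two TPL tasks. The class and method names (`ParallelIntro`, `SumEvenSquaresParallel`, `SumWithTasks`) are hypothetical and chosen only for this example. `Task.Factory.StartNew` is used because it is the task-creation call available in .NET 4.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

public static class ParallelIntro
{
    // Ordinary sequential LINQ: sum of the squares of the even values.
    public static long SumEvenSquaresSequential(int[] values)
    {
        return values.Where(x => x % 2 == 0).Sum(x => (long)x * x);
    }

    // The same query with AsParallel(): PLINQ partitions the input,
    // runs the operators on several threads, and merges the result.
    public static long SumEvenSquaresParallel(int[] values)
    {
        return values.AsParallel().Where(x => x % 2 == 0).Sum(x => (long)x * x);
    }

    // TPL: each half of the array is summed by its own task.
    public static long SumWithTasks(int[] values)
    {
        int mid = values.Length / 2;
        Task<long> left = Task.Factory.StartNew(() => SumRange(values, 0, mid));
        Task<long> right = Task.Factory.StartNew(() => SumRange(values, mid, values.Length));
        // Reading Result blocks until the task has finished.
        return left.Result + right.Result;
    }

    private static long SumRange(int[] values, int start, int end)
    {
        long total = 0;
        for (int i = start; i < end; i++) total += values[i];
        return total;
    }

    public static void Main()
    {
        int[] values = Enumerable.Range(1, 1000).ToArray();
        Console.WriteLine(SumEvenSquaresSequential(values) == SumEvenSquaresParallel(values));
        Console.WriteLine(SumWithTasks(values));
    }
}
```

For inputs this small the parallel versions are unlikely to be faster than the sequential one; the point is only that the query text barely changes.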

![The Manycore Shift](https://image.slidesharecdn.com/toubparallelismtouroct2009-110626234041-phpapp01/85/Toub-parallelism-tour_oct2009-8-320.jpg)

The Manycore Shift

> “[A]fter decades of single core processors, the high volume processor industry has gone from single to dual to quad-core in just the last two years. Moore’s Law scaling should easily let us hit the 80-core mark in mainstream processors within the next ten years and quite possibly even less.”
> -- Justin Rattner, CTO, Intel (February 2007)

> “If you haven’t done so already, now is the time to take a hard look at the design of your application, determine what operations are CPU-sensitive now or are likely to become so soon, and identify how those places could benefit from concurrency.”
> -- Herb Sutter, C++ Architect at Microsoft (March 2005)

![Parallel LINQ (PLINQ)](https://image.slidesharecdn.com/toubparallelismtouroct2009-110626234041-phpapp01/85/Toub-parallelism-tour_oct2009-21-320.jpg)

Parallel LINQ (PLINQ)

- Utilizes parallel hardware for LINQ queries
- Abstracts away most parallelism details
- Partitions and merges data intelligently
- Supports all .NET Standard Query Operators
- Plus a few knobs
- Works for any `IEnumerable<T>`
- Optimizations for other types (`T[]`, `IList<T>`)
- Supports custom partitioning (`Partitioner<T>`)
- Built on top of the rest of Parallel Extensions
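The slide does not say which "knobs" it has in mind. The sketch below assumes it means the PLINQ-specific operators that sit alongside the standard query operators (`AsOrdered`, `WithDegreeOfParallelism`, `WithExecutionMode`), and shows custom partitioning through `Partitioner.Create`. The class and method names (`PlinqKnobs`, `SquaresInOrder`, `CountEvensLoadBalanced`) are hypothetical.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Linq;

public static class PlinqKnobs
{
    // Standard Select, tuned with PLINQ-specific operators.
    public static int[] SquaresInOrder(int[] values, int maxThreads)
    {
        return values
            .AsParallel()
            .AsOrdered()                          // keep results in source order
            .WithDegreeOfParallelism(maxThreads)  // upper bound on concurrent workers
            .WithExecutionMode(ParallelExecutionMode.ForceParallelism) // do not fall back to sequential
            .Select(x => x * x)
            .ToArray();
    }

    // Custom partitioning: a load-balancing partitioner hands out work
    // in chunks on demand instead of splitting the list into fixed ranges.
    public static int CountEvensLoadBalanced(IList<int> values)
    {
        return Partitioner.Create(values, true)
            .AsParallel()
            .Count(x => x % 2 == 0);
    }

    public static void Main()
    {
        int[] values = Enumerable.Range(0, 10).ToArray();
        Console.WriteLine(string.Join(", ", SquaresInOrder(values, 2)));
        Console.WriteLine(CountEvensLoadBalanced(values));
    }
}
```

Load-balanced partitioning tends to help when the cost per element varies a lot; for uniform work the default range partitioning over an array or list is usually sufficient.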

![Parallelism Blockers](https://image.slidesharecdn.com/toubparallelismtouroct2009-110626234041-phpapp01/85/Toub-parallelism-tour_oct2009-36-320.jpg)

Parallelism Blockers

- Ordering not guaranteed: `int[] values = new int[] { 0, 1, 2 }; var q = from x in values.AsParallel() select x * 2; int[] scaled = q.ToArray(); // == { 0, 2, 4 }?`
- Exceptions (`System.AggregateException`): `object[] data = new object[] { "foo", null, null }; var q = from x in data.AsParallel() select x.ToString();`
- Thread affinity: `controls.AsParallel().ForAll(c => c.Size = ...);`
- Operations with < 1.0 speedup: `IEnumerable<int> input = …; var doubled = from x in input.AsParallel() select x*2;`
- Side effects and mutability are serious issues. Most queries do not use side effects, but it’s possible…: `Random rand = new Random(); var q = from i in Enumerable.Range(0, 10000).AsParallel() select rand.Next();`
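The slide lists the blockers without showing remedies. The sketch below is one possible way to handle three of them (ordering, exceptions, and the shared `Random`); it is an assumption about reasonable fixes, not something taken from the deck. The names `PlinqBlockers`, `ScaleInOrder`, `FailsWithNullReferences`, and `RandomValues` are hypothetical. Thread affinity and sub-1.0 speedups are not covered here, since they generally call for keeping the work off PLINQ altogether.

```csharp
using System;
using System.Linq;
using System.Threading;

public static class PlinqBlockers
{
    // Ordering: AsOrdered() asks PLINQ to preserve source order in the output.
    public static int[] ScaleInOrder(int[] values)
    {
        return values.AsParallel().AsOrdered().Select(x => x * 2).ToArray();
    }

    // Exceptions: failures in worker threads surface as one AggregateException
    // whose InnerExceptions hold the individual errors.
    public static bool FailsWithNullReferences(object[] data)
    {
        try
        {
            data.AsParallel().Select(x => x.ToString()).ToArray();
            return false;
        }
        catch (AggregateException ex)
        {
            return ex.Flatten().InnerExceptions.All(e => e is NullReferenceException);
        }
    }

    // Side effects: Random is not thread-safe, so each thread gets its own
    // instance. The Guid-based seed is an assumption made here to avoid
    // identical time-based seeds on threads that start together.
    private static readonly ThreadLocal<Random> Rng =
        new ThreadLocal<Random>(() => new Random(Guid.NewGuid().GetHashCode()));

    public static int[] RandomValues(int count)
    {
        return Enumerable.Range(0, count).AsParallel().Select(i => Rng.Value.Next()).ToArray();
    }

    public static void Main()
    {
        Console.WriteLine(string.Join(", ", ScaleInOrder(new[] { 0, 1, 2 })));
        Console.WriteLine(FailsWithNullReferences(new object[] { "foo", null, null }));
        Console.WriteLine(RandomValues(10000).Length);
    }
}
```

Preserving order has a cost, so `AsOrdered()` is worth adding only when the consumer actually depends on the sequence.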