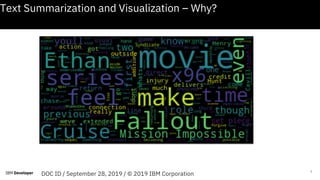

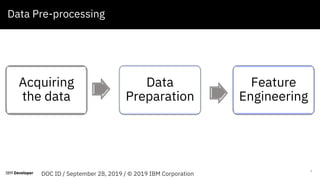

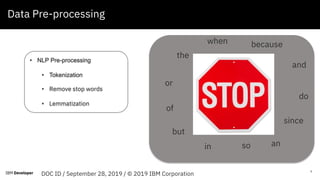

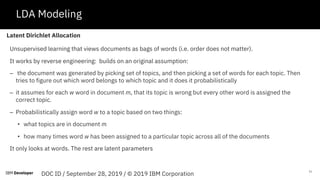

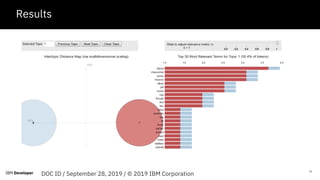

The document discusses text summarization and visualization using IBM Watson Studio. Its agenda covers an introduction to topic modeling and Latent Dirichlet Allocation (LDA), how to build a topic model, and a Q&A session. It explains that topic modeling can quickly summarize a body of text, extract its most important topics, and produce visualizations that make the data easier to understand. It also outlines the workflow: data preprocessing, feature engineering, and LDA modeling, in which each document is treated as a mixture of topics and each word is probabilistically assigned to a topic.
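The three-step workflow (preprocessing, feature engineering, LDA modeling) can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the deck's own code: it does not use any Watson Studio API, and it swaps in collapsed Gibbs sampling as the LDA inference method so the example has no third-party dependencies. The stopword list, the four-sentence corpus, and the hyperparameters (`alpha`, `beta`, `n_iter`) are hypothetical choices for illustration. In a real notebook you would more likely reach for a library implementation such as scikit-learn's `LatentDirichletAllocation` or gensim's `LdaModel`.

```python
import random
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list (real pipelines use a fuller one).
STOPWORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "as", "for", "then", "until"}

def preprocess(text):
    """Step 1: lowercase, keep alphabetic tokens, drop stopwords and short words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [t for t in tokens if t not in STOPWORDS and len(t) > 2]

def build_vocab(docs_tokens, min_count=1):
    """Step 2 (feature engineering): map each retained word to an integer id."""
    counts = Counter(t for doc in docs_tokens for t in doc)
    return {w: i for i, w in enumerate(sorted(w for w, c in counts.items() if c >= min_count))}

def lda_gibbs(docs, n_topics, vocab_size, alpha=0.1, beta=0.01, n_iter=200, seed=0):
    """Step 3: LDA fitted by collapsed Gibbs sampling.

    docs is a list of word-id lists. Returns theta (per-document topic
    distributions) and phi (per-topic word distributions).
    """
    rng = random.Random(seed)
    ndk = [[0] * n_topics for _ in docs]                # topic counts per document
    nkw = [[0] * vocab_size for _ in range(n_topics)]   # word counts per topic
    nk = [0] * n_topics                                 # total tokens per topic
    z = []                                              # topic assignment of each token
    for d, doc in enumerate(docs):                      # random initial assignments
        z.append([])
        for w in doc:
            k = rng.randrange(n_topics)
            z[d].append(k)
            ndk[d][k] += 1
            nkw[k][w] += 1
            nk[k] += 1
    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                k = z[d][i]                             # remove the token's current topic
                ndk[d][k] -= 1
                nkw[k][w] -= 1
                nk[k] -= 1
                # Full conditional p(z = t | all other assignments), up to a constant.
                weights = [(ndk[d][t] + alpha) * (nkw[t][w] + beta) / (nk[t] + vocab_size * beta)
                           for t in range(n_topics)]
                k = rng.choices(range(n_topics), weights=weights)[0]
                z[d][i] = k                             # record the resampled topic
                ndk[d][k] += 1
                nkw[k][w] += 1
                nk[k] += 1
    theta = [[(ndk[d][k] + alpha) / (len(doc) + n_topics * alpha) for k in range(n_topics)]
             for d, doc in enumerate(docs)]
    phi = [[(nkw[k][w] + beta) / (nk[k] + vocab_size * beta) for w in range(vocab_size)]
           for k in range(n_topics)]
    return theta, phi

def top_words(phi, vocab, n=3):
    """Summarize each topic by its n most probable words."""
    id2word = {i: w for w, i in vocab.items()}
    return [[id2word[w] for w in sorted(range(len(row)), key=lambda w: -row[w])[:n]] for row in phi]

# Usage on a hypothetical four-document corpus with two themes.
corpus = [
    "The team won the football match after a late goal.",
    "Fans cheered as the football team scored another goal.",
    "Bake the bread dough in a hot oven for thirty minutes.",
    "Knead the dough, then bake the bread until golden.",
]
tokens = [preprocess(text) for text in corpus]
vocab = build_vocab(tokens)
docs = [[vocab[t] for t in doc if t in vocab] for doc in tokens]
theta, phi = lda_gibbs(docs, n_topics=2, vocab_size=len(vocab))
for k, words in enumerate(top_words(phi, vocab)):
    print(f"topic {k}: {words}")
```

Because the sampler is stochastic, the topics it finds depend on the seed and iteration count, and a corpus this small gives only a rough separation. Each row of `theta` is one document's topic mixture and each row of `phi` is one topic's word distribution; these two matrices are what topic-model visualizations typically plot.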