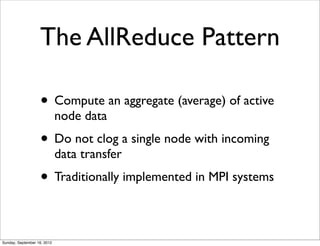

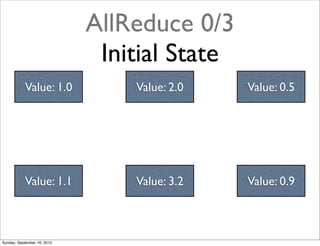

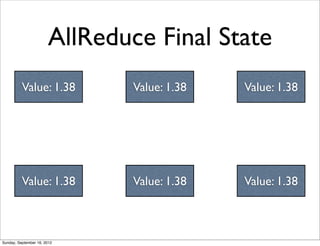

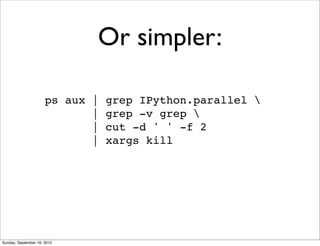

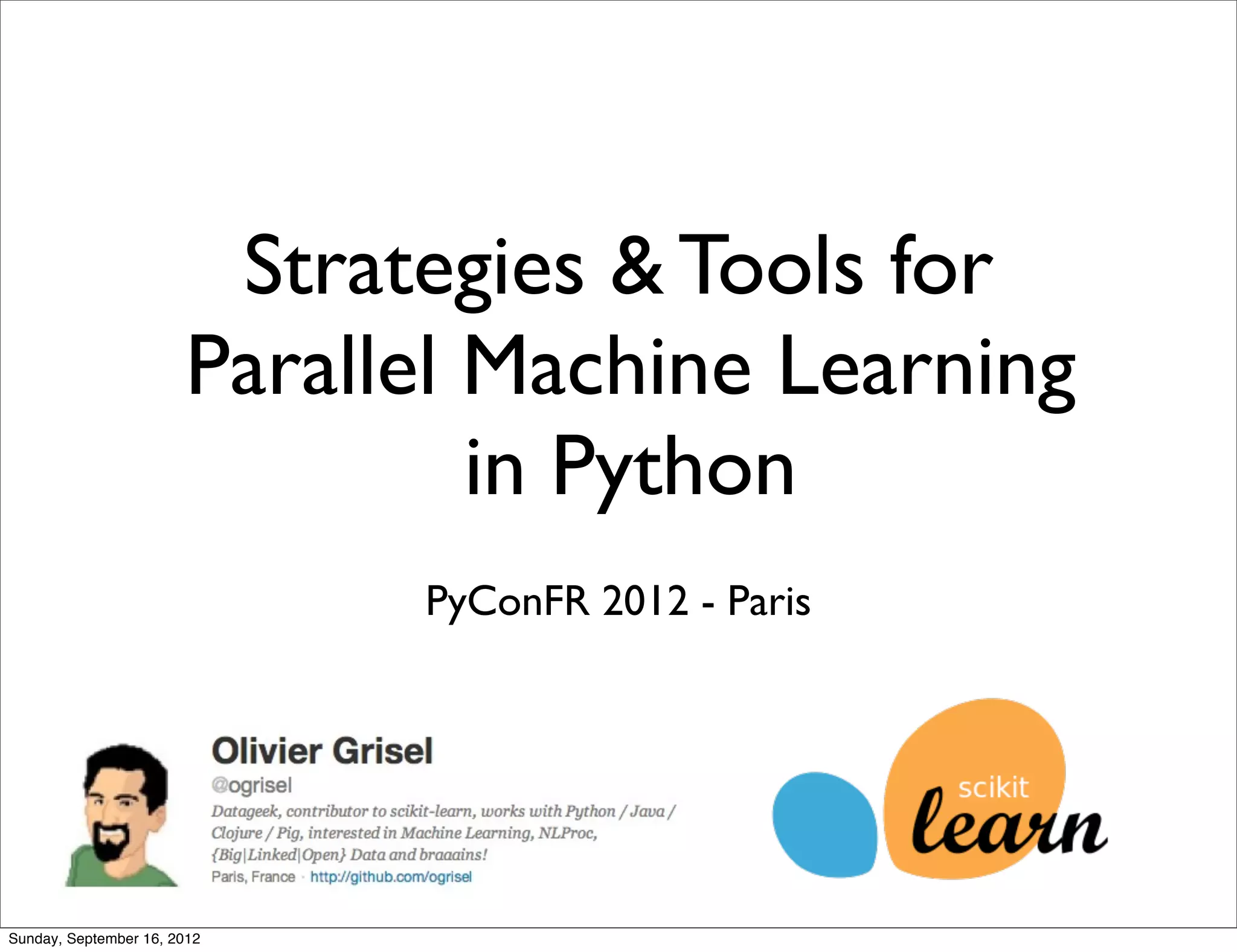

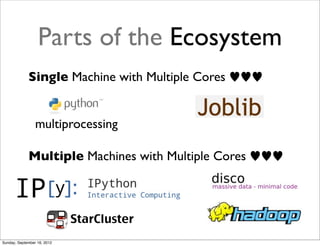

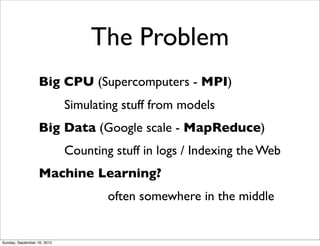

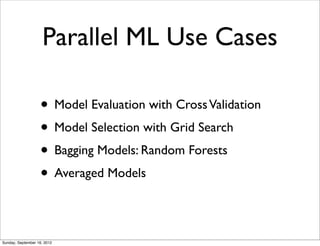

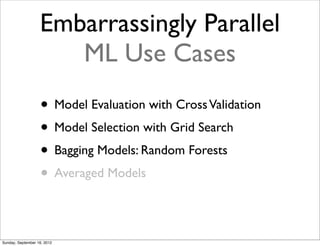

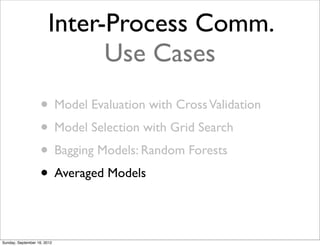

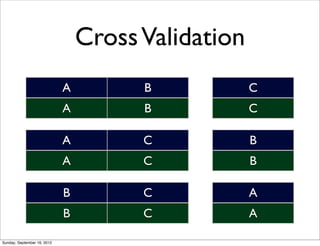

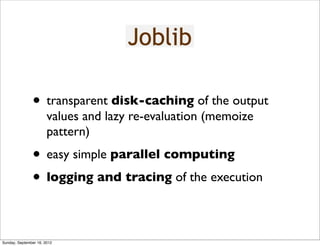

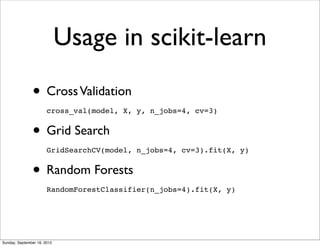

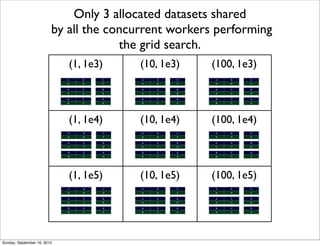

This document summarizes a presentation on strategies and tools for parallel machine learning in Python. For a single machine with multiple cores, it covers the standard library's multiprocessing module and joblib's Parallel class, which can parallelize tasks such as cross-validation, grid search, and random forests. For multiple machines with multiple cores, it covers parallel processing with IPython. It also introduces distributed computing concepts such as MapReduce and the AllReduce pattern.

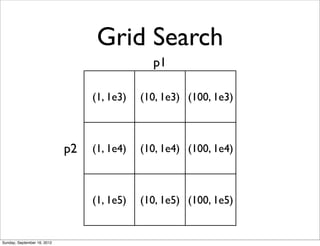

![Model Selection

the Hyperparameters hell

p1 in [1, 10, 100]

p2 in [1e3, 1e4, 1e5]

Find the best combination of parameters that maximizes the Cross Validated Score

Sunday, September 16, 2012](https://image.slidesharecdn.com/parallelmachinelearning-120921034552-phpapp02/85/Strategies-and-Tools-for-Parallel-Machine-Learning-in-Python-13-320.jpg)
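
The search described on this slide can be sketched as an exhaustive loop over the parameter grid. This is a minimal illustration using only the standard library, not the presenter's code: `cv_score` is a hypothetical stand-in for a real cross-validated score (it is made up so that it peaks at `p1=10`, `p2=1e4`), and `grid_search` is an illustrative helper name.

```python
from itertools import product


def cv_score(p1, p2):
    """Hypothetical stand-in for a cross-validated score.

    A real implementation would train and evaluate a model on several
    folds; this toy function simply peaks at p1=10, p2=1e4.
    """
    return -((p1 - 10) ** 2) - ((p2 - 1e4) / 1e3) ** 2


def grid_search(param_grid, score_func):
    """Evaluate every parameter combination and keep the best one."""
    names = sorted(param_grid)
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = score_func(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# The grid from the slide: 3 x 3 = 9 combinations to evaluate.
param_grid = {"p1": [1, 10, 100], "p2": [1e3, 1e4, 1e5]}
best_params, best_score = grid_search(param_grid, cv_score)
print(best_params, best_score)
```

Each call to `score_func` is independent of the others, which is why this kind of search is a natural candidate for parallel execution.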

![multiprocessing

>>> from multiprocessing import Pool

>>> p = Pool(4)

>>> p.map(type, [1, 2., '3'])

[int, float, str]

>>> r = p.map_async(type, [1, 2., '3'])

>>> r.get()

[int, float, str]

](https://image.slidesharecdn.com/parallelmachinelearning-120921034552-phpapp02/85/Strategies-and-Tools-for-Parallel-Machine-Learning-in-Python-20-320.jpg)
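
The interactive session on this slide can also be written as a script. The sketch below assumes Python 3, where `Pool` can be used as a context manager, and swaps in `math.sqrt` as the worker function. The helper name `parallel_sqrt` is illustrative. The `__main__` guard matters on platforms that start workers by re-importing the main module.

```python
import math
from multiprocessing import Pool


def parallel_sqrt(values, processes=2):
    """Apply math.sqrt to each value using a pool of worker processes."""
    with Pool(processes) as pool:
        # map blocks until every result is ready and preserves input order.
        blocking = pool.map(math.sqrt, values)
        # map_async returns at once with a handle; get() waits for the results.
        handle = pool.map_async(math.sqrt, values)
        non_blocking = handle.get(timeout=30)
    return blocking, non_blocking


if __name__ == "__main__":
    print(parallel_sqrt([1, 4, 9]))
```

The function passed to the pool has to be picklable, which in practice means a module-level function rather than a lambda or a nested function.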

![joblib.Parallel

>>> from os.path import join

>>> from joblib import Parallel, delayed

>>> Parallel(2)(

... delayed(join)('/ect', s)

... for s in 'abc')

['/ect/a', '/ect/b', '/ect/c']

](https://image.slidesharecdn.com/parallelmachinelearning-120921034552-phpapp02/85/Strategies-and-Tools-for-Parallel-Machine-Learning-in-Python-25-320.jpg)

![joblib.Parallel: shared memory

>>> from joblib import Parallel, delayed

>>> import numpy as np

>>> Parallel(2, max_nbytes=1e6)(

... delayed(type)(np.zeros(int(i)))

... for i in [1e4, 1e6])

[<type 'numpy.ndarray'>, <class 'numpy.core.memmap.memmap'>]

](https://image.slidesharecdn.com/parallelmachinelearning-120921034552-phpapp02/85/Strategies-and-Tools-for-Parallel-Machine-Learning-in-Python-27-320.jpg)

![MapReduce?

Diagram: an input list [(k1, v1), (k2, v2), ...] is fed to several mappers, which emit an intermediate list [(k3, v3), (k4, v4), ...]; reducers then turn it into the output list [(k5, v5), (k6, v6), ...]

](https://image.slidesharecdn.com/parallelmachinelearning-120921034552-phpapp02/85/Strategies-and-Tools-for-Parallel-Machine-Learning-in-Python-30-320.jpg)
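
The data flow in this diagram can be illustrated with a small in-memory sketch. It runs in a single process and is not a distributed implementation. The word-count task and the names `mapper`, `reducer`, and `map_reduce` are assumptions chosen for illustration.

```python
from collections import defaultdict


def mapper(key, value):
    """Emit one (word, 1) pair for every word in a line of text."""
    for word in value.split():
        yield word.lower(), 1


def reducer(key, values):
    """Sum all the counts collected for one word."""
    yield key, sum(values)


def map_reduce(records, mapper, reducer):
    """Run the map, shuffle, and reduce phases over (key, value) records."""
    # Map phase: each input pair yields zero or more intermediate pairs.
    # Shuffle: intermediate values are grouped by their key.
    grouped = defaultdict(list)
    for k1, v1 in records:
        for k2, v2 in mapper(k1, v1):
            grouped[k2].append(v2)
    # Reduce phase: each key and its grouped values yield the output pairs.
    output = []
    for k2 in sorted(grouped):
        output.extend(reducer(k2, grouped[k2]))
    return output


records = [(0, "the quick fox"), (1, "the lazy dog"), (2, "The end")]
counts = map_reduce(records, mapper, reducer)
print(counts)
```

In a real framework the mappers and reducers run on different machines and the grouped intermediate pairs are moved between them, but the three phases are the same.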

![Why MapReduce does not always work

Write a lot of stuff to disk for failover

Inefficient for small to medium problems

[(k, v)] mapper [(k, v)] reducer [(k, v)]

Data and model params as (k, v) pairs?

Complex to leverage for iterative algorithms

](https://image.slidesharecdn.com/parallelmachinelearning-120921034552-phpapp02/85/Strategies-and-Tools-for-Parallel-Machine-Learning-in-Python-31-320.jpg)
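
To make the point about iterative algorithms concrete, here is a rough sketch of the AllReduce pattern mentioned in the summary, simulated inside one process with no real communication. Each simulated worker keeps its own data partition and model replica, and a single sum-and-broadcast step per iteration keeps the replicas in sync. The toy task (estimating a mean by gradient descent) and the names `all_reduce_sum` and `fit_mean` are assumptions for illustration only.

```python
def all_reduce_sum(local_values):
    """Simulated AllReduce: every worker receives the sum of all local values."""
    total = sum(local_values)
    return [total for _ in local_values]


def fit_mean(partitions, n_iter=50, lr=0.5):
    """Estimate the global mean by gradient descent on 0.5 * (w - x) ** 2."""
    n_total = sum(len(part) for part in partitions)
    weights = [0.0 for _ in partitions]  # one model replica per worker
    for _ in range(n_iter):
        # Each worker computes a gradient from its own partition only.
        local_grads = [
            sum(w - x for x in part) for w, part in zip(weights, partitions)
        ]
        # One AllReduce per iteration: all workers get the summed gradient.
        summed = all_reduce_sum(local_grads)
        # Every worker applies the same update, so the replicas stay identical.
        weights = [w - lr * g / n_total for w, g in zip(weights, summed)]
    return weights


partitions = [[1.0, 2.0, 3.0], [4.0, 5.0], [6.0]]
weights = fit_mean(partitions)
print(weights)
```

The data partitions and the model parameters stay in the workers' memory across iterations, so nothing has to be recast as (k, v) pairs or written to disk between steps.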