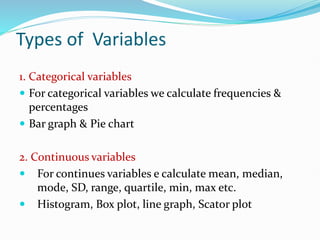

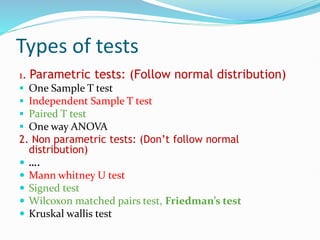

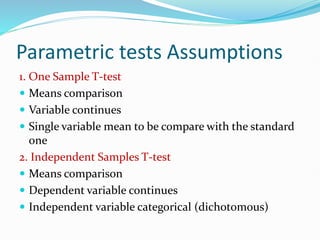

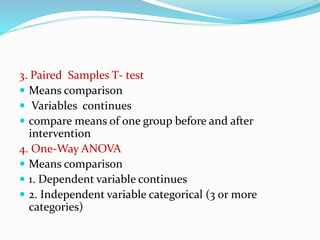

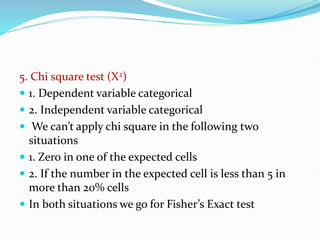

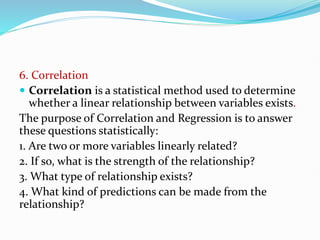

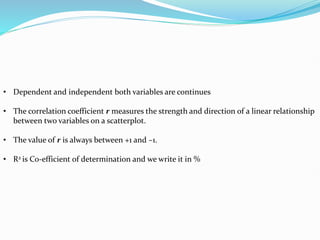

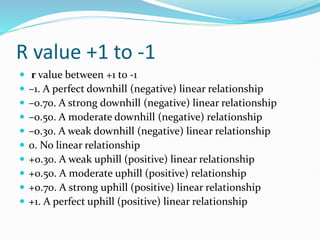

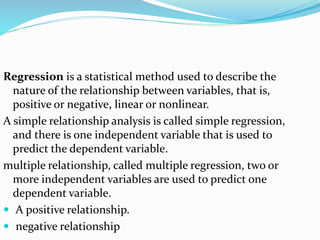

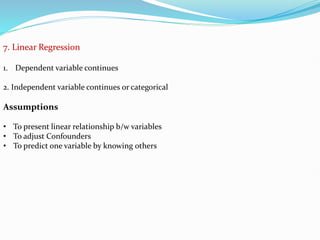

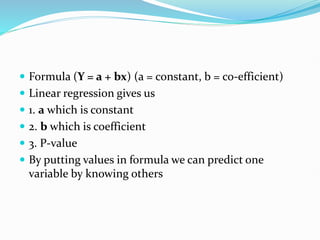

The document outlines the types of variables (categorical and continuous) and the statistical tests suited to each, covering parametric tests such as t-tests and ANOVA as well as non-parametric alternatives. It then details the assumptions behind the different tests, correlation methods, and regression analysis, including simple and multiple regression for predicting one variable from others. Finally, it highlights the role of correlation coefficients and regression formulas in research analysis.
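
As a rough illustration of the correlation and simple regression ideas summarized above, the sketch below computes a Pearson correlation coefficient and a least-squares line using the standard textbook formulas. The function names `pearson_r` and `simple_regression`, and the `hours` and `scores` values, are made up for this example; the slides themselves may use different notation or data.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation: r = Sxy / sqrt(Sxx * Syy)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sums of cross-products and squared deviations from the means
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def simple_regression(x, y):
    """Least-squares fit of y = intercept + slope * x for one predictor."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: hours studied (predictor) and test score (outcome)
hours = [1, 2, 3, 4, 5]
scores = [2, 4, 5, 4, 5]

r = pearson_r(hours, scores)
slope, intercept = simple_regression(hours, scores)
print(f"r = {r:.3f}, score = {intercept:.1f} + {slope:.1f} * hours")
```

Multiple regression extends the same least-squares idea to several predictors and is usually solved with a statistics library rather than by hand.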