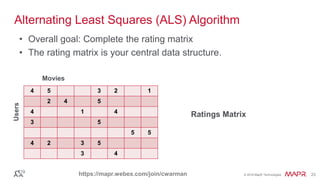

The document provides an overview of Apache Spark, detailing its architecture, core components, and functionalities like Spark SQL and MLlib for machine learning, alongside practical demonstrations. It emphasizes Spark's speed advantage over Hadoop's MapReduce for in-memory data processing, and illustrates its use in collaborative filtering for recommender systems. Furthermore, it touches on Spark's integration with various programming languages and tools, highlighting important concepts such as resilient distributed datasets (RDDs) and dataframes.