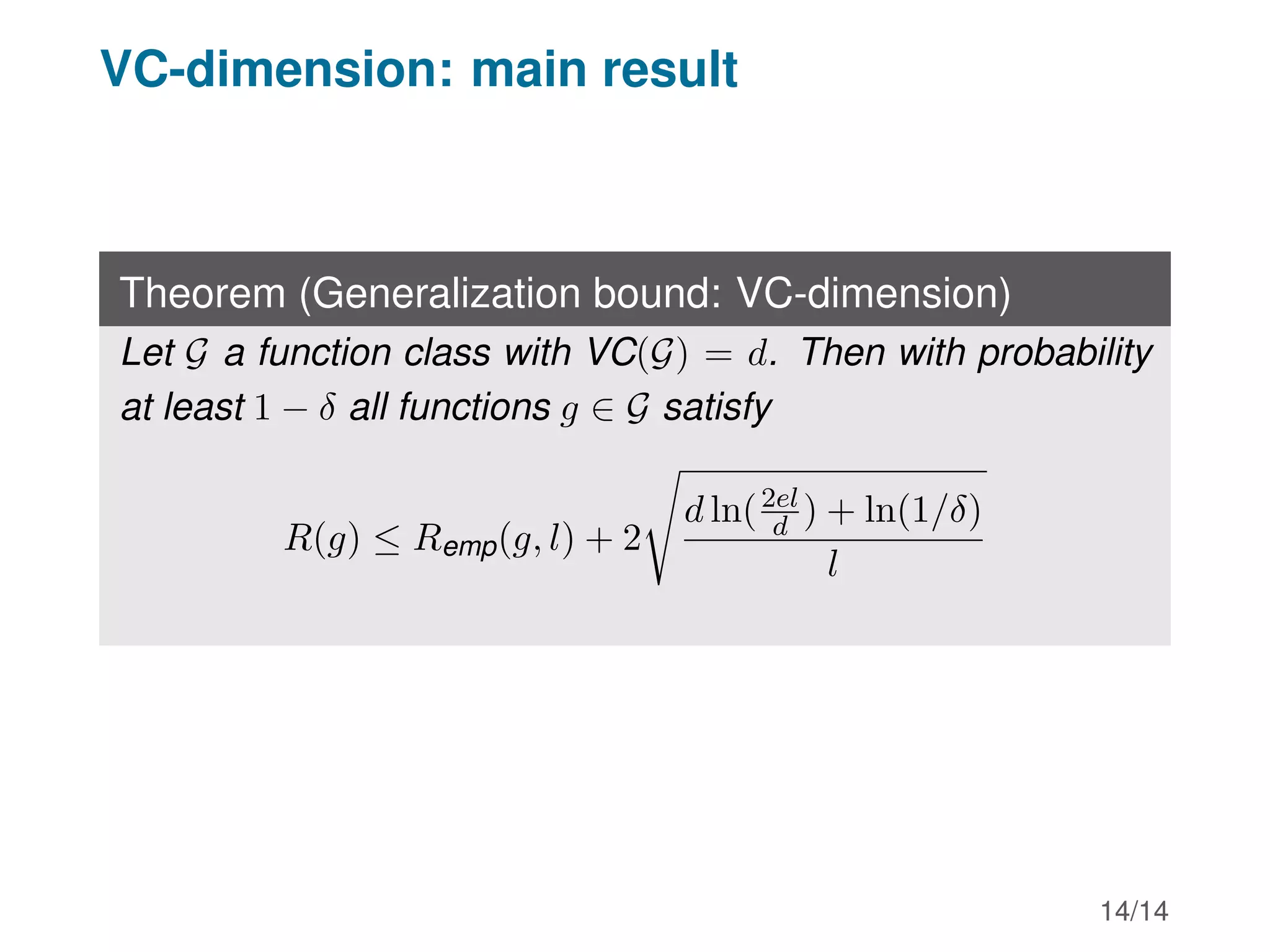

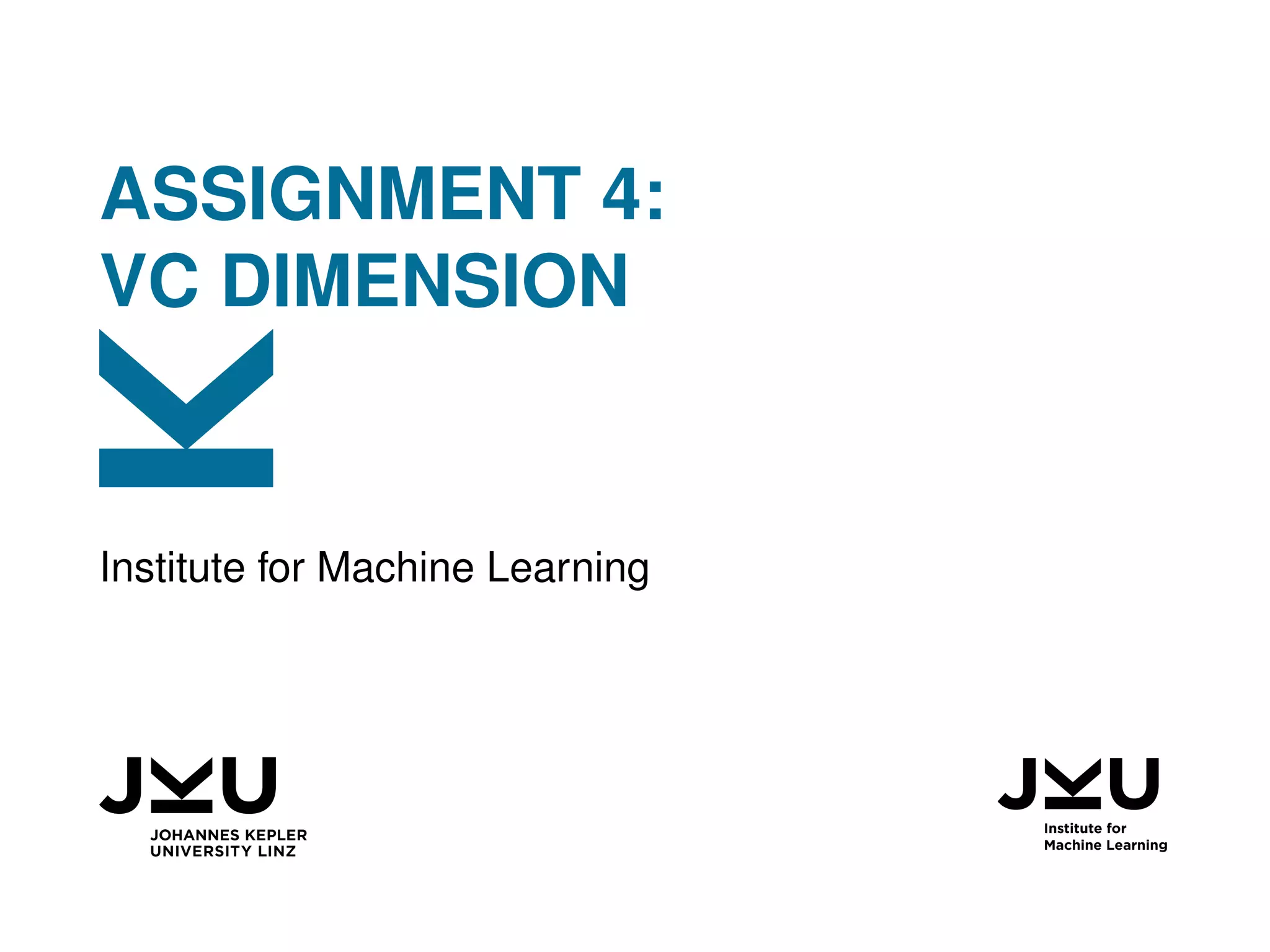

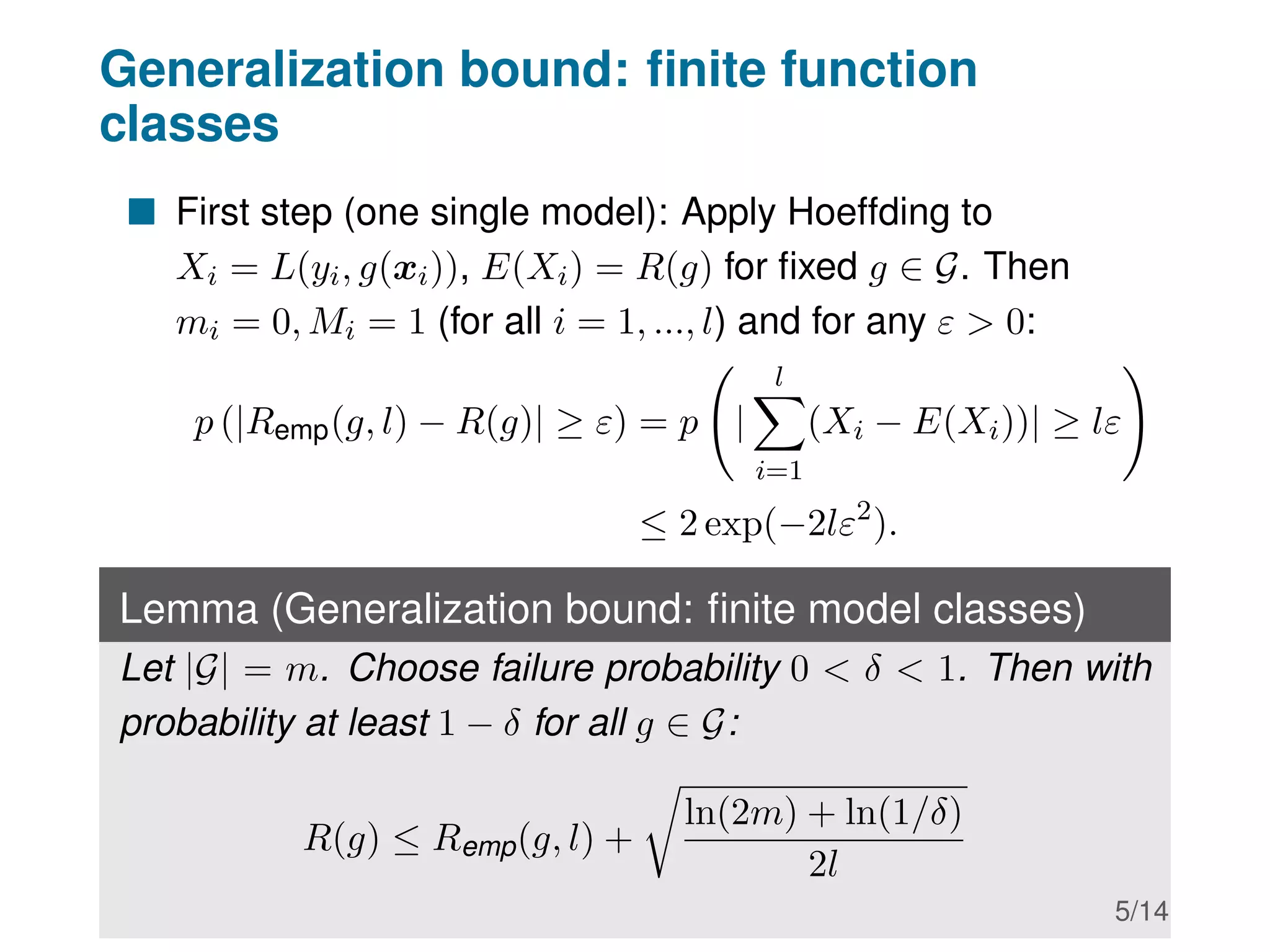

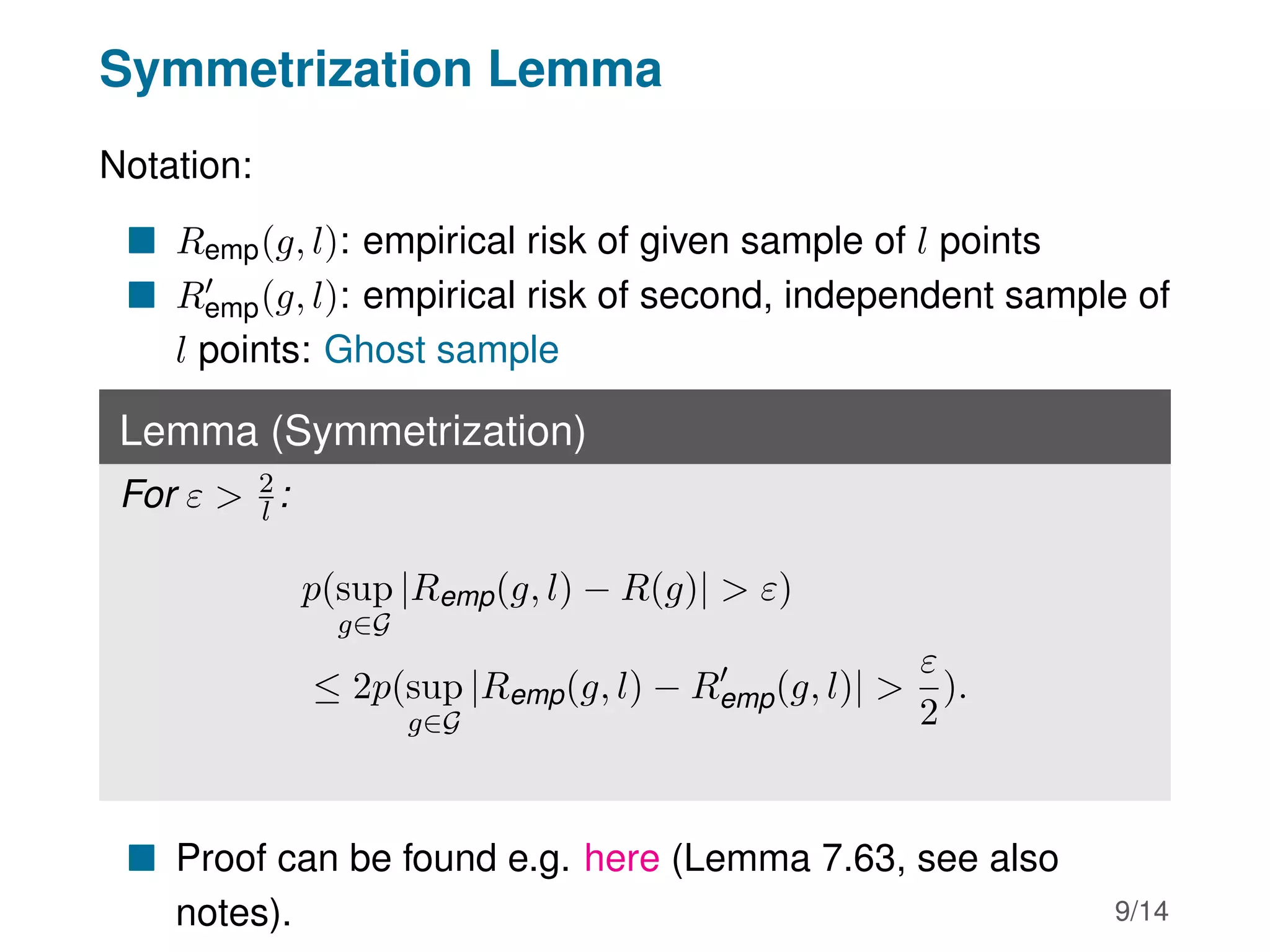

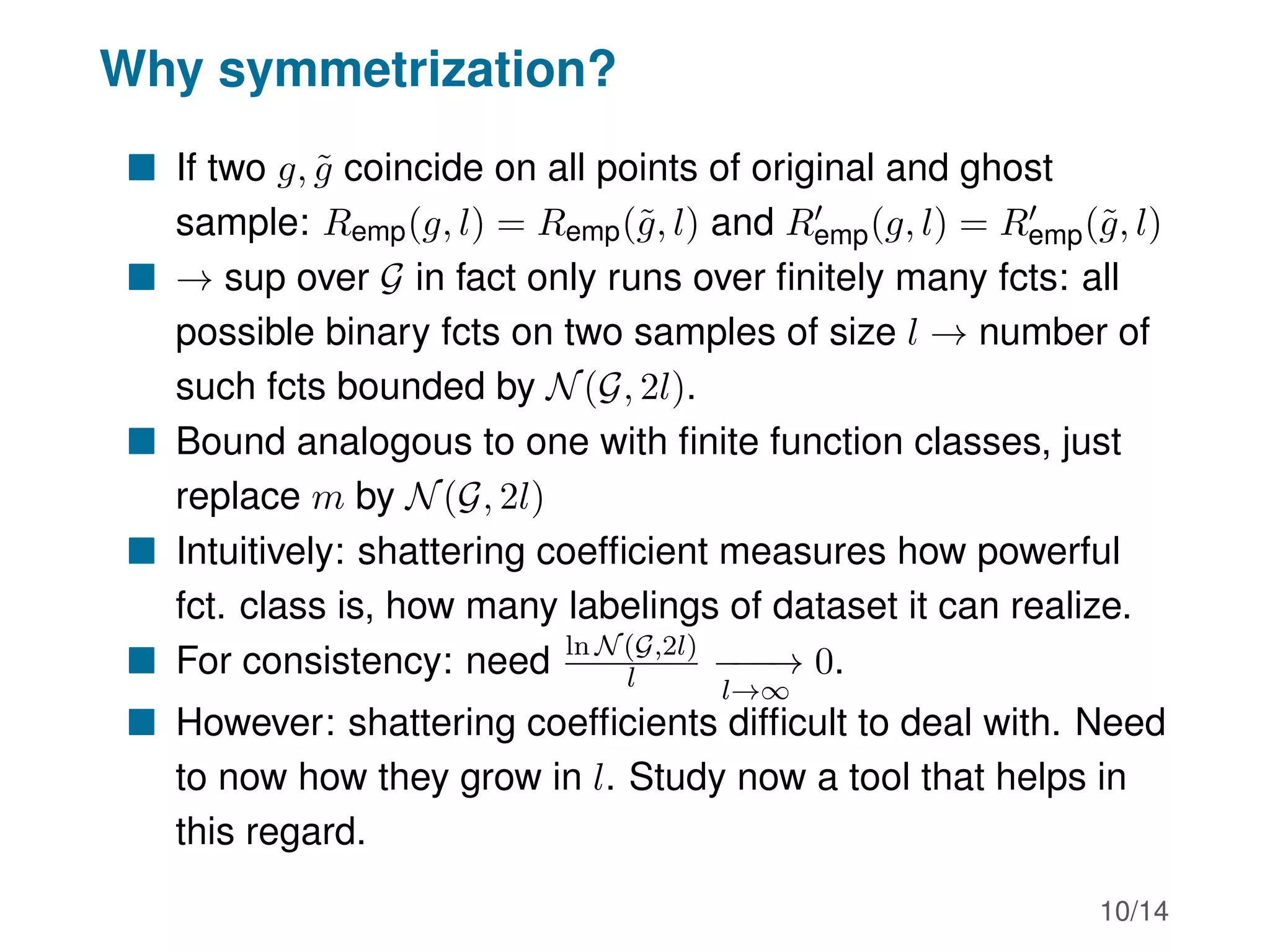

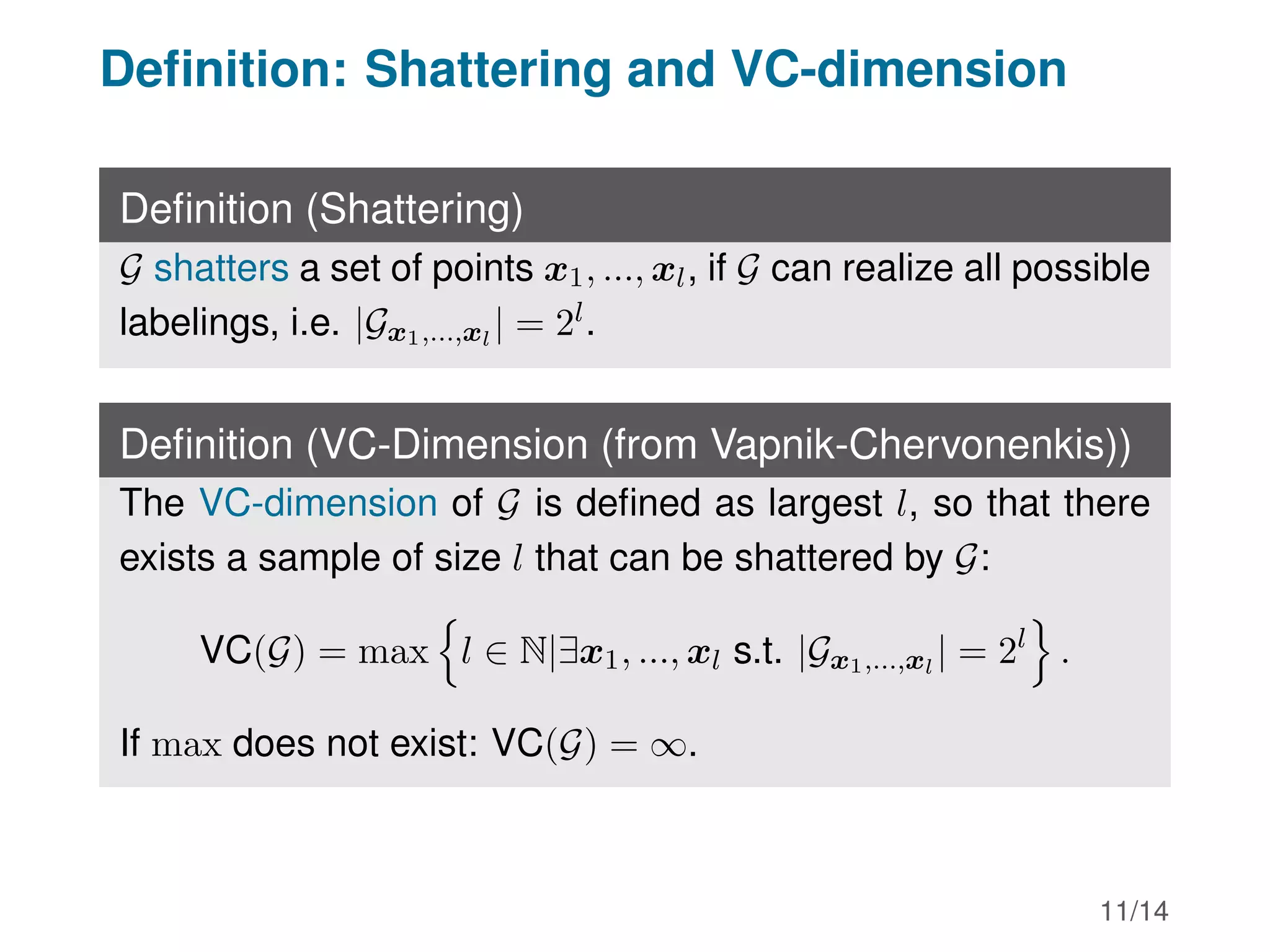

This document discusses the VC dimension concept in machine learning. It begins by introducing the setting of learning a classification function from labeled data points. It then provides generalization bounds relating the true risk of a function to its empirical risk based on the size of the function class or its VC dimension. The VC dimension measures the capacity of a function class to label datasets, and having a finite VC dimension allows controlling the growth of the shattering coefficient and thus obtaining generalization guarantees.

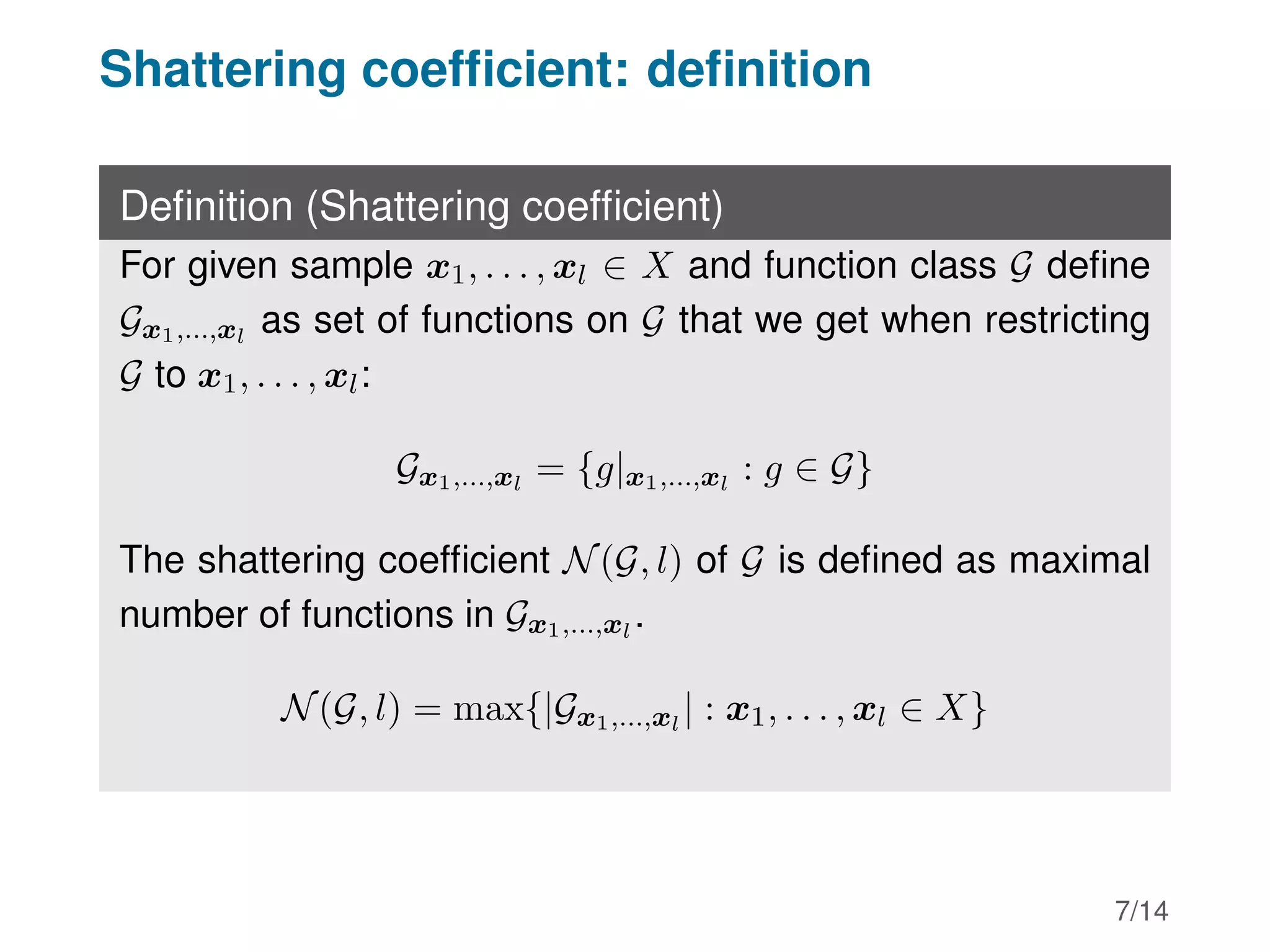

![Hoeffding’s inequality

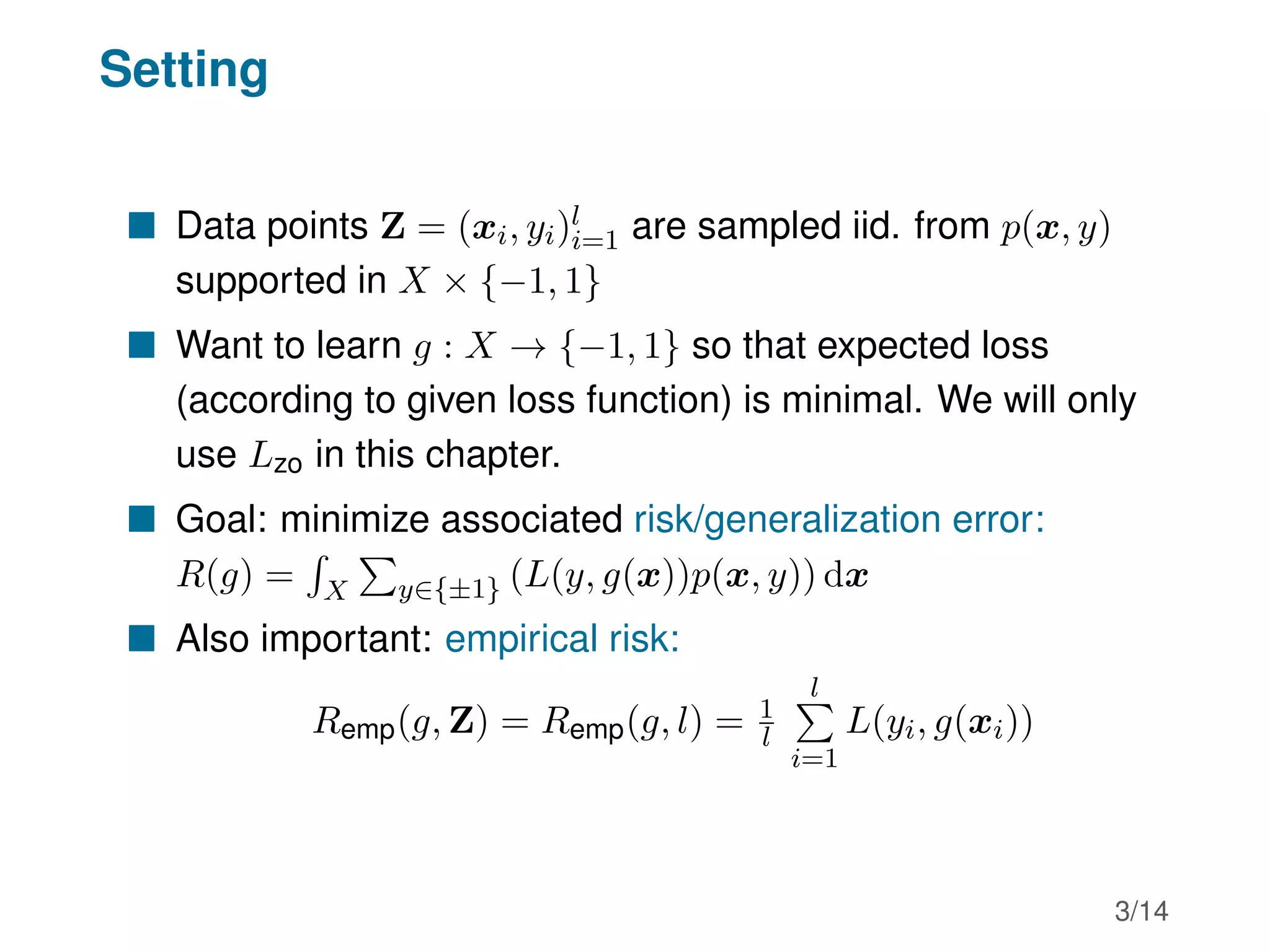

Lemma (Hoeffding)

Let X1, ..., Xl be independent random variables drawn accord-

ing to p. Assume further that Xi ∈ [mi, Mi]. Then for t ≥ 0:

p

l

X

i=1

(Xi − E(Xi)) ≥ t

!

≤ exp −

2t2

Pl

i=1(Mi − mi)2

!

4/14](https://image.slidesharecdn.com/slidesa4-230609200902-54fe85d5/75/Slides_A4-pdf-5-2048.jpg)

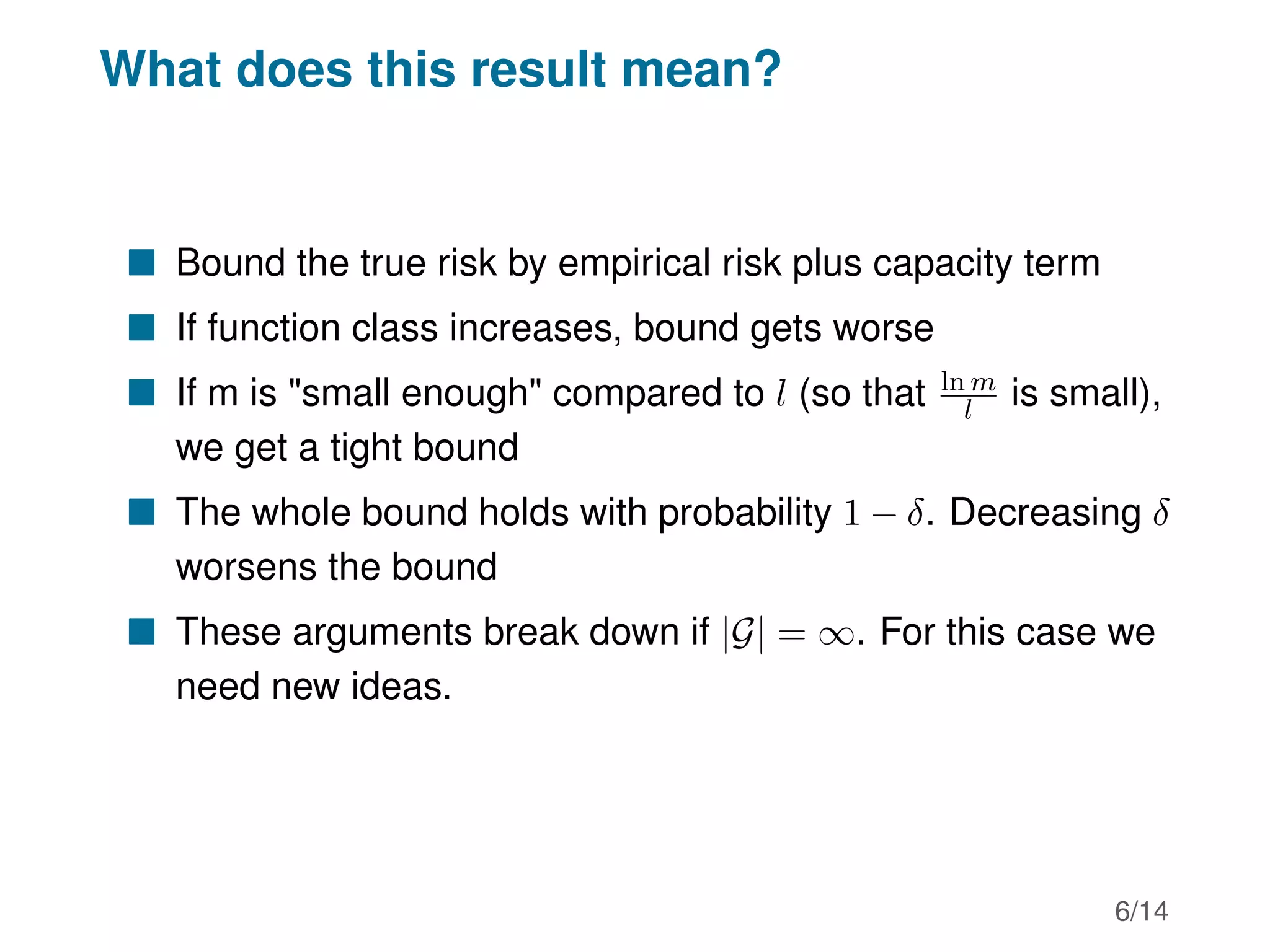

![VC-dimension: examples

X = R, positive class=interior of closed interval, i.e.

G =

1[a,b] : a b ∈ R .

Positive class=interior of right triangles with sides adjacent

to right angle are parallel to aces. Right angle in lower left

corner. X = R2, G = {indicators of right triangles}.

Positive class=interior of convex polygon, X = R2,

G = {indicators of convex polygons with d corners}

X = R, G = {sgn (sin(tx)) : t ∈ R}. Then VC(G) = ∞

X = Rr, G = {area above linear hyperplane}. Show in

exercises: VC(G) = r + 1

X = Rr, ρ 0, G = {hyperplanes with margins at least γ}.

One can prove: if data are restricted to ball of radius R:

VC(G) = min

r, 2R2

γ2

+ 1.

12/14](https://image.slidesharecdn.com/slidesa4-230609200902-54fe85d5/75/Slides_A4-pdf-13-2048.jpg)