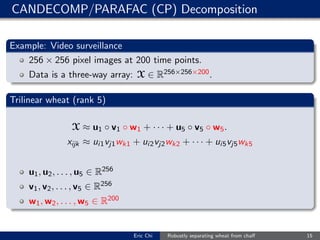

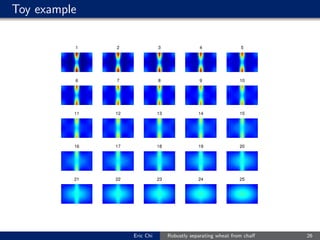

The document discusses methods for separating systematic variation ("wheat") from non-systematic variation ("chaff") in multiway data. It compares using least squares versus least 1-norm optimization to fit multilinear models to data. Least squares assumes Gaussian errors and can be biased by outliers, while least 1-norm is more robust but computationally more difficult. It demonstrates on a toy example how least 1-norm better extracts the underlying signal when the errors are non-Gaussian. The document advocates considering robust loss functions beyond least squares for real-world data that may violate Gaussian assumptions.