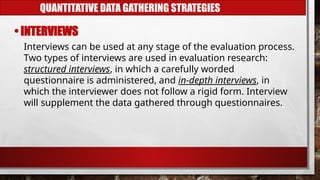

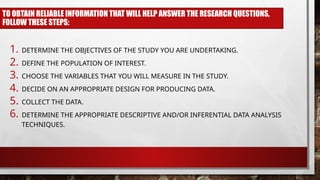

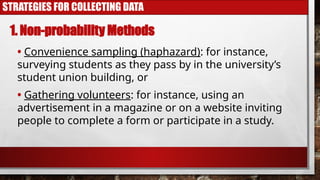

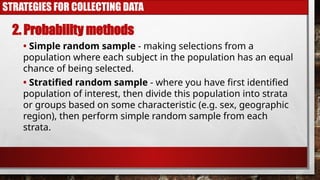

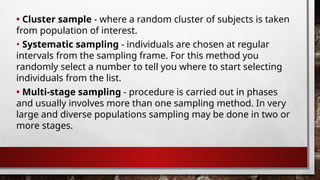

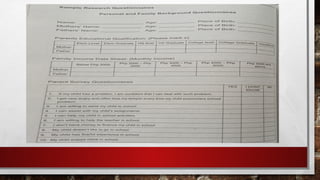

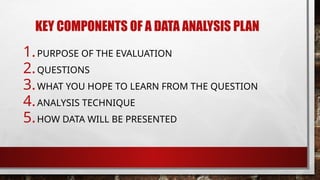

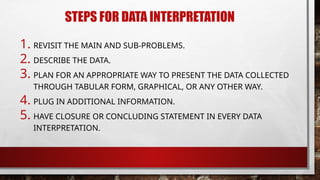

Chapter 5 covers techniques for data collection, emphasizing systematic methods for gathering and analyzing information to answer research questions. It surveys quantitative data-gathering strategies (questionnaires, interviews, experiments, and observations) along with methods for processing and interpreting the resulting data. It also introduces statistical techniques for analyzing relationships in data: t-tests for comparing group means, Pearson correlation for measuring linear association between two numeric variables, and chi-square tests for checking association between categorical variables.
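
To make the three statistical techniques named in the summary concrete, here is a minimal Python sketch that computes each test statistic by hand. It is an illustration only, not the chapter's own procedure: the function names and the sample numbers are hypothetical, the t-test shown is the pooled-variance (equal variances assumed) two-sample form, and the code returns test statistics only, not p-values.

```python
from math import sqrt
from statistics import mean, variance


def t_statistic(a, b):
    """Two-sample t statistic with pooled variance (assumes equal variances)."""
    na, nb = len(a), len(b)
    # variance() is the sample variance (n - 1 in the denominator).
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sx = sqrt(sum((xi - mx) ** 2 for xi in x))
    sy = sqrt(sum((yi - my) ** 2 for yi in y))
    return cov / (sx * sy)


def chi_square(table):
    """Chi-square statistic for a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed - expected) ** 2 / expected
    return stat


if __name__ == "__main__":
    # Hypothetical data, invented purely for illustration.
    group_a = [72, 75, 78, 80, 85]          # e.g. scores under method A
    group_b = [65, 70, 71, 74, 79]          # e.g. scores under method B
    hours = [1, 2, 3, 4, 5]                 # e.g. hours studied
    scores = [52, 58, 61, 70, 74]           # e.g. matching test scores
    survey = [[30, 10], [20, 40]]           # e.g. group x yes/no answer counts

    print("t statistic:", t_statistic(group_a, group_b))
    print("Pearson r:", pearson_r(hours, scores))
    print("chi-square:", chi_square(survey))
```

In practice each statistic would be compared against its reference distribution (with the appropriate degrees of freedom) to obtain a p-value; a statistics package is the usual way to do that step.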