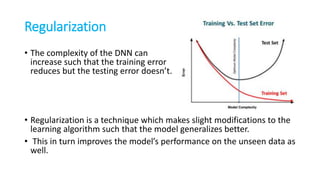

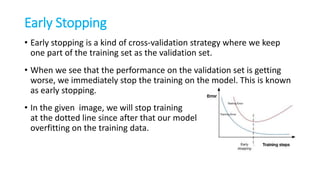

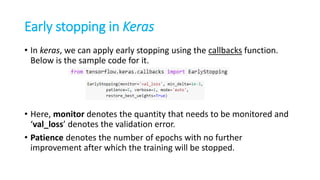

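The document discusses several regularization techniques used in deep neural networks to reduce overfitting, including dropout, L1/L2 regularization, and early stopping. Dropout randomly and temporarily removes (zeroes out) neurons during training, so the remaining neurons cannot rely on any single unit and must learn redundant representations to compensate; at inference time all neurons are used. L1 and L2 regularization add a penalty on the weights to the loss function to discourage large weights: L1 penalizes the sum of absolute weight values, which tends to drive some weights to exactly zero, while L2 penalizes the sum of squared weights, which shrinks all weights toward zero. Early stopping monitors validation error during training and halts training once validation error stops improving and begins to rise, because rising validation error while training error keeps falling indicates overfitting. Together, these techniques help deep neural networks generalize better to unseen data.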
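To make the dropout mechanism concrete, here is a minimal sketch in plain Python of the common "inverted dropout" variant, assuming activations are a flat list of floats. Inverted dropout scales the surviving activations by `1 / (1 - p)` during training so that the expected activation is unchanged and no rescaling is needed at inference. The function name `dropout` and its parameters are hypothetical, chosen for illustration; this is not any particular library's API.

```python
import random


def dropout(activations, p, training=True, rng=None):
    """Inverted dropout (illustrative sketch, not a library API).

    During training, each activation is zeroed with probability p and the
    survivors are scaled by 1 / (1 - p), keeping the expected value unchanged.
    At inference time (training=False) the input is returned unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(activations)  # all neurons are used at inference time
    if rng is None:
        rng = random.Random()
    keep = 1.0 - p
    # rng.random() is uniform on [0, 1), so "keep" happens with probability 1 - p
    return [a / keep if rng.random() >= p else 0.0 for a in activations]


x = [1.0, 2.0, 3.0, 4.0]
print(dropout(x, 0.5, training=True, rng=random.Random(0)))  # random mask
print(dropout(x, 0.5, training=False))  # [1.0, 2.0, 3.0, 4.0]
```

Because a fresh random mask is drawn on every call, each training step effectively trains a different thinned sub-network, which is what prevents neurons from co-adapting.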
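The L1 and L2 penalties can be sketched as extra terms added to the data loss, together with their gradients, which is what a gradient-descent update actually sees. This sketch assumes weights are a flat list of floats and uses the convention `loss + l1 * sum(|w|) + l2 * sum(w**2)`; some texts write the L2 term as `(l2 / 2) * sum(w**2)` instead, which only rescales the coefficient. The function names are hypothetical.

```python
def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Total loss = data loss + l1 * sum(|w|) + l2 * sum(w^2) (illustrative sketch)."""
    l1_term = l1 * sum(abs(w) for w in weights)
    l2_term = l2 * sum(w * w for w in weights)
    return data_loss + l1_term + l2_term


def penalty_gradient(weights, l1=0.0, l2=0.0):
    """Gradient of the penalty terms only: l1 * sign(w) + 2 * l2 * w.

    The L1 term is not differentiable at w == 0; this sketch uses the
    subgradient 0 there.
    """
    def sign(w):
        return (w > 0) - (w < 0)

    return [l1 * sign(w) + 2.0 * l2 * w for w in weights]


weights = [0.5, -2.0, 0.0]
print(regularized_loss(1.0, weights, l1=0.1, l2=0.01))
print(penalty_gradient(weights, l1=0.1, l2=0.01))
```

The gradients show why the two penalties behave differently: the L2 gradient is proportional to the weight, so it shrinks large weights most and small weights barely at all, while the L1 gradient has constant magnitude, so it keeps pushing small weights toward zero and tends to produce sparse weights.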
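Early stopping can be sketched as a loop over per-epoch validation losses. Because validation error is noisy, a practical version usually waits for a "patience" window of several epochs without improvement before stopping, rather than reacting to a single increase; the `patience` and `min_delta` parameters below are assumptions of this sketch, and the function name is hypothetical. It returns the epoch with the best validation loss (whose weights a training loop would save and restore) and the epoch at which training stopped.

```python
def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for an iterable of validation losses.

    Training stops once the loss has failed to improve by more than
    min_delta for `patience` consecutive epochs. The caller is expected to
    restore the weights saved at best_epoch.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter.
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch  # stop training here
    return best_epoch, epoch  # ran out of epochs without triggering a stop


losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70, 0.80]
print(early_stopping(losses, patience=3))  # (2, 5)
```

Here the validation loss bottoms out at epoch 2 and then rises for three consecutive epochs, so training stops at epoch 5 and the epoch-2 weights would be kept.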