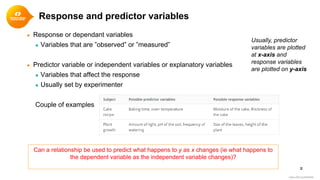

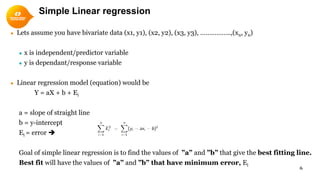

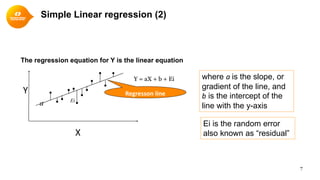

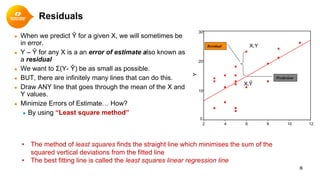

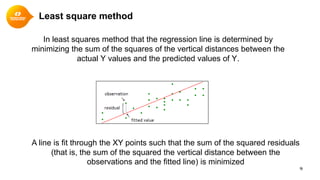

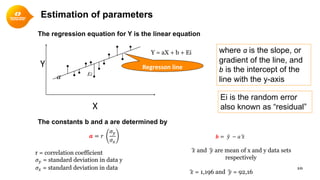

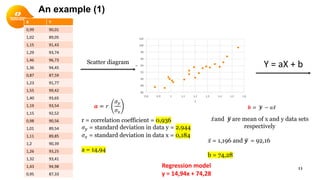

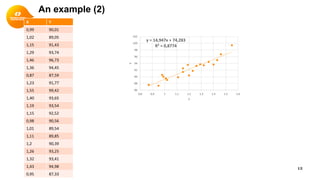

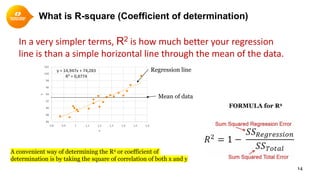

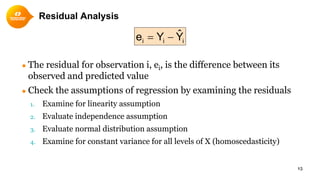

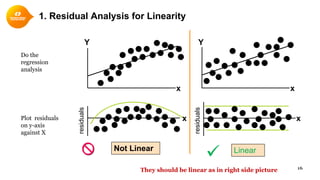

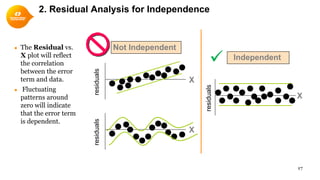

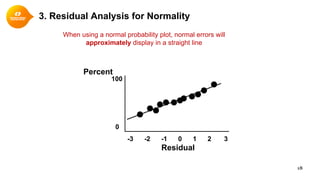

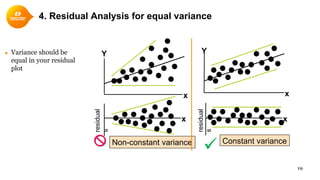

This document summarizes simple linear regression. It defines the response and predictor variables and gives examples of using a regression line to model the relationship between two variables. It covers estimating the slope and y-intercept by the least squares method, evaluating how well the regression model fits using the R-squared statistic, and checking the model's assumptions through residual analysis.
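
The three steps named in the summary (least squares estimation, R-squared, and residuals) can be sketched in a few lines of Python. This is a minimal illustration rather than the document's own worked example: the function names `fit_line`, `r_squared`, and `residuals` are hypothetical, and the five data points are invented purely for demonstration.

```python
from statistics import mean


def fit_line(x, y):
    """Least squares estimates for the line y = intercept + slope * x."""
    x_bar, y_bar = mean(x), mean(y)
    # Sum of squared deviations of x, and sum of cross-deviations of x and y.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def residuals(x, y, slope, intercept):
    """Observed response minus fitted value, one residual per observation."""
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


def r_squared(x, y, slope, intercept):
    """Share of the variation in y explained by the fitted line."""
    y_bar = mean(y)
    ss_res = sum(e ** 2 for e in residuals(x, y, slope, intercept))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


# Illustrative data only (not taken from the document).
predictor = [1, 2, 3, 4, 5]
response = [2, 4, 5, 4, 5]

slope, intercept = fit_line(predictor, response)
resid = residuals(predictor, response, slope, intercept)
r2 = r_squared(predictor, response, slope, intercept)

print(f"slope={slope:.3f}, intercept={intercept:.3f}, R^2={r2:.3f}")
print("residuals:", [round(e, 3) for e in resid])
```

For a least squares line with an intercept, the residuals sum to zero, so the residual check in practice looks at their pattern (for example, plotted against the predictor) rather than their total.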