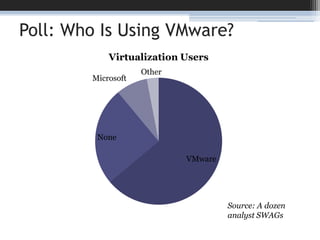

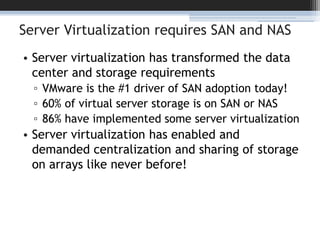

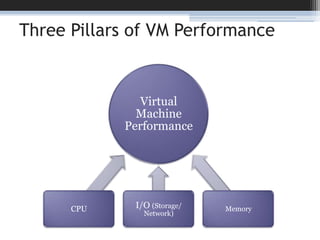

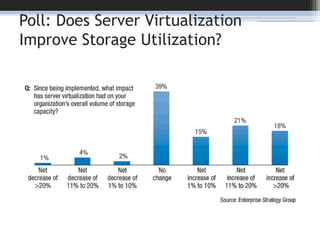

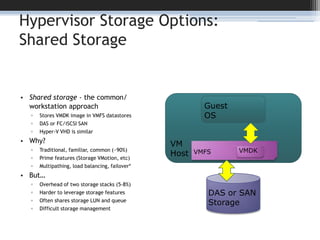

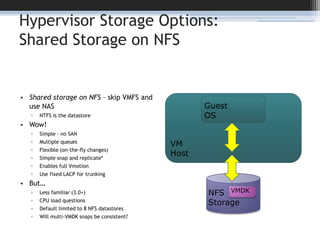

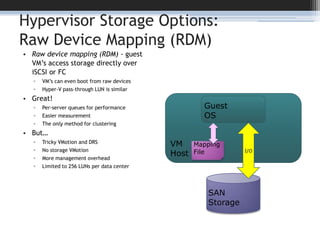

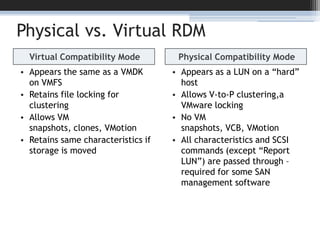

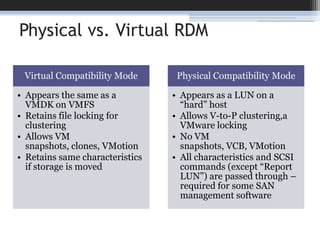

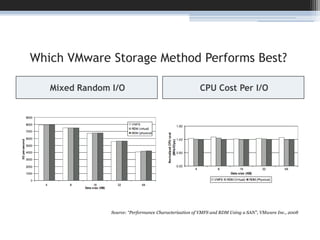

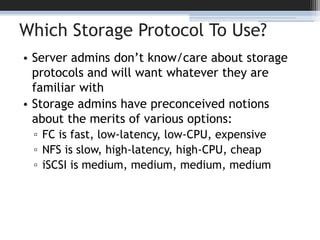

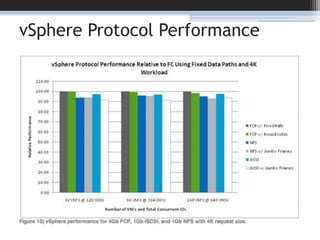

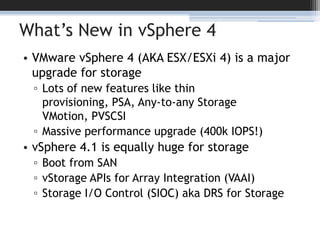

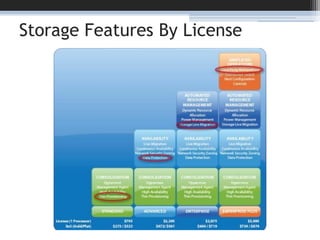

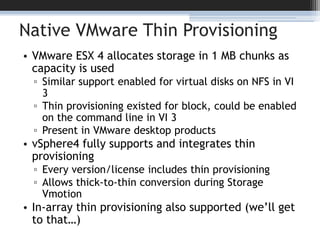

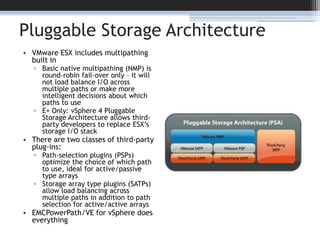

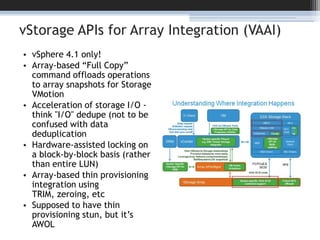

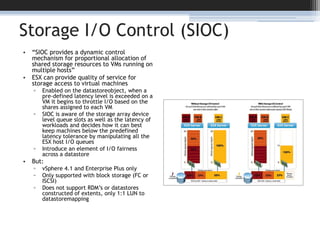

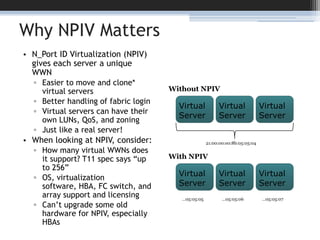

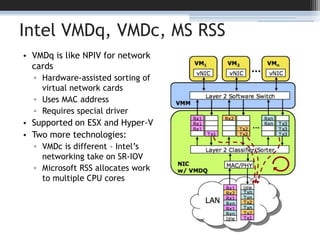

This document summarizes a presentation on rearchitecting storage for server virtualization. It explains how server virtualization affects storage by increasing random I/O, outlines the challenges of shared storage, and compares hypervisor storage approaches, including shared storage on SAN or NAS and raw device mapping, with the pros and cons of each. It also covers storage connectivity options, vSphere features such as thin provisioning and Storage I/O Control, and technologies such as NPIV that matter for virtualization.
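The claim that virtualization increases random I/O can be illustrated with a toy model. The sketch below is not taken from the presentation: it assumes each VM reads sequentially within its own region of a shared volume and that the hypervisor interleaves requests in strict round-robin order, which is a simplification of real schedulers. The function names (`vm_stream`, `blend`, `sequential_fraction`) and the region size are hypothetical, chosen only for illustration.

```python
def vm_stream(vm_index, length, region_size=1_000_000):
    """Block addresses for one VM reading sequentially in its own region (assumed layout)."""
    start = vm_index * region_size
    return [start + i for i in range(length)]


def blend(streams):
    """Interleave the VMs' requests round-robin, as a stand-in for a shared I/O path."""
    return [block for group in zip(*streams) for block in group]


def sequential_fraction(requests):
    """Fraction of requests whose address directly follows the previous request."""
    if len(requests) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(requests, requests[1:]) if cur == prev + 1)
    return hits / (len(requests) - 1)


# One VM on its own: the array sees a purely sequential stream.
single = vm_stream(0, 100)

# Four VMs sharing the same storage: each is sequential, the mix is not.
blended = blend([vm_stream(i, 100) for i in range(4)])

print(sequential_fraction(single))   # 1.0
print(sequential_fraction(blended))  # 0.0
```

In this model every individual workload is perfectly sequential, yet the stream that reaches the shared storage contains no back-to-back sequential requests at all. Real workloads fall somewhere between these two extremes.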