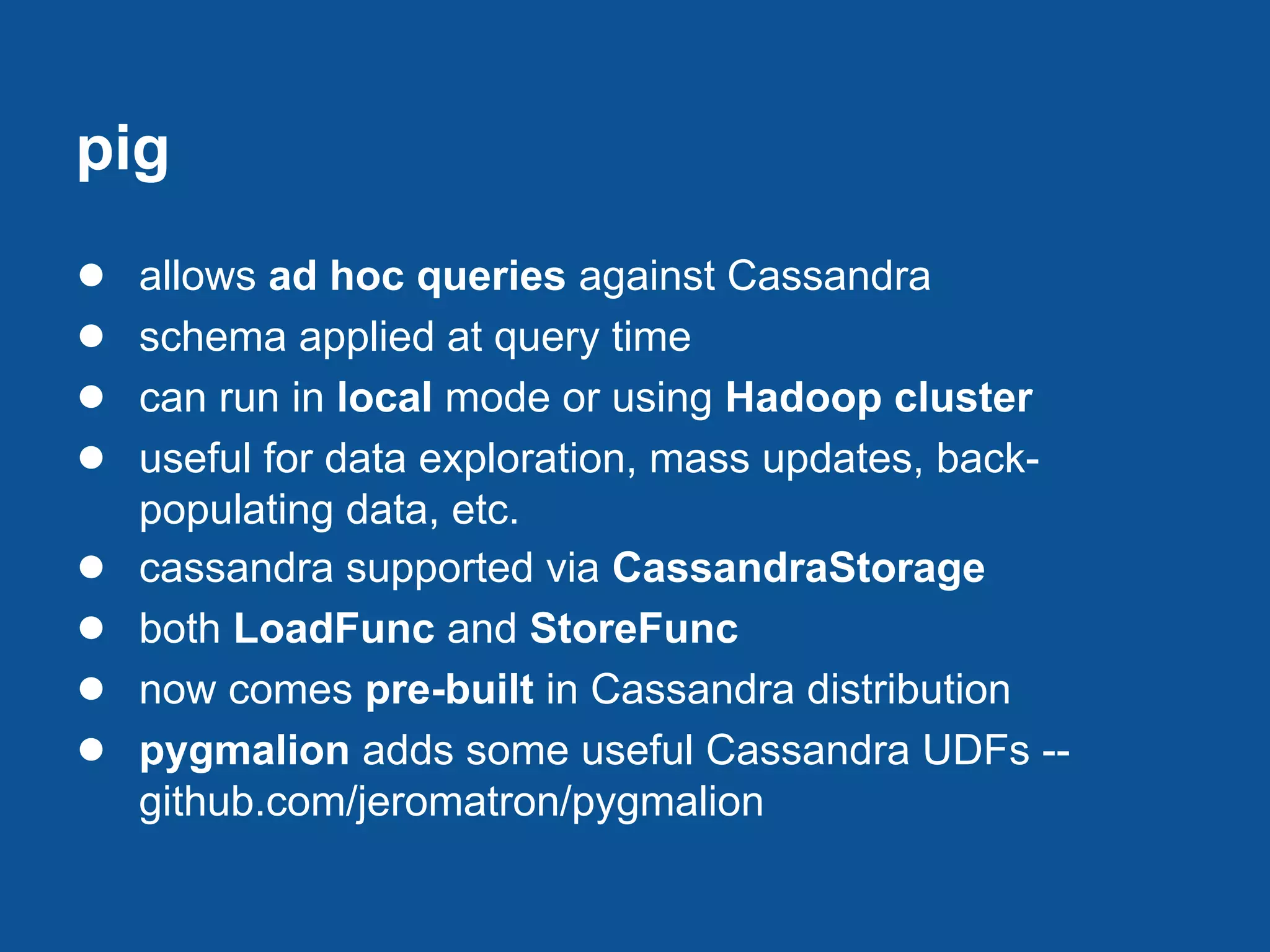

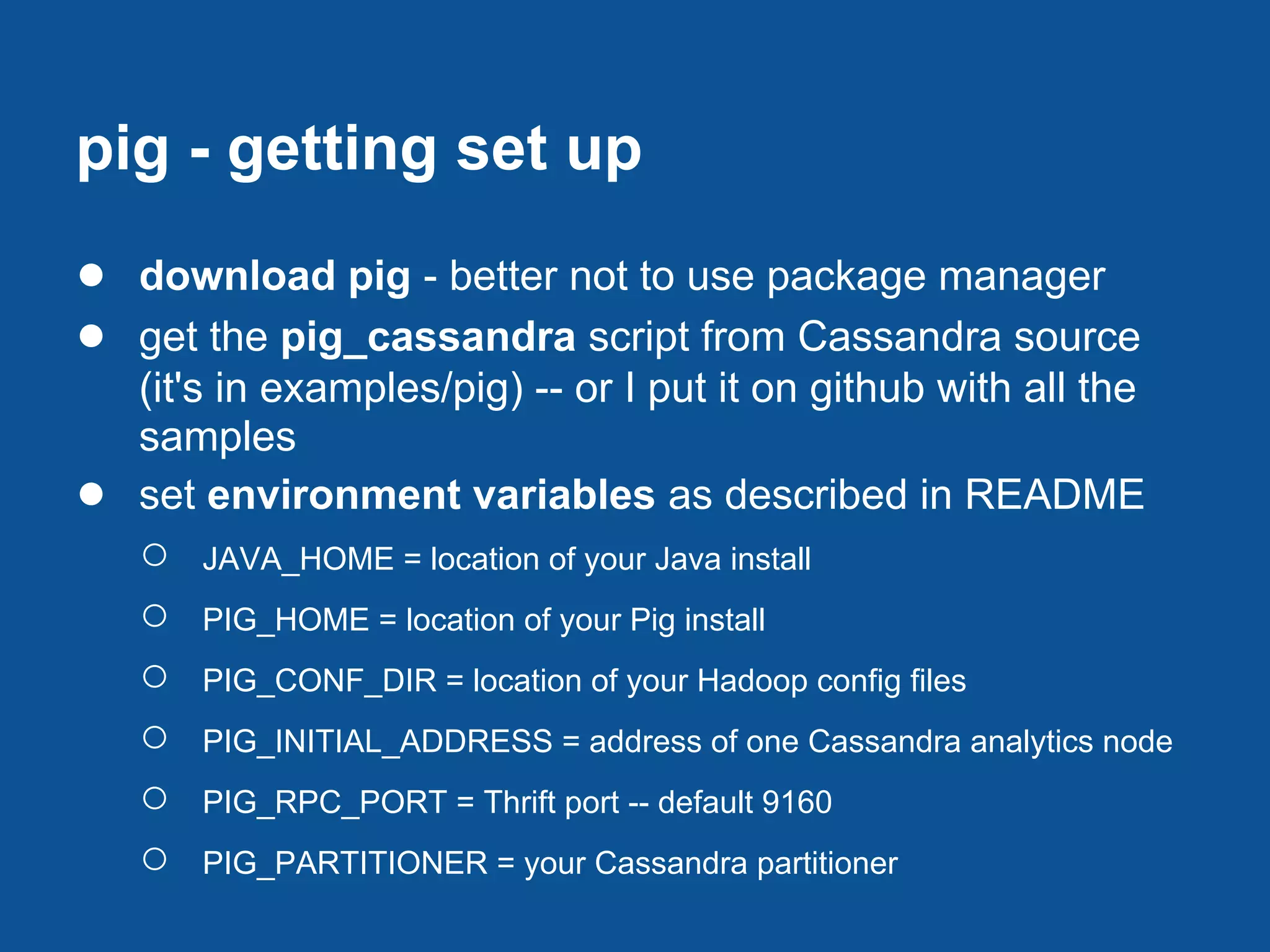

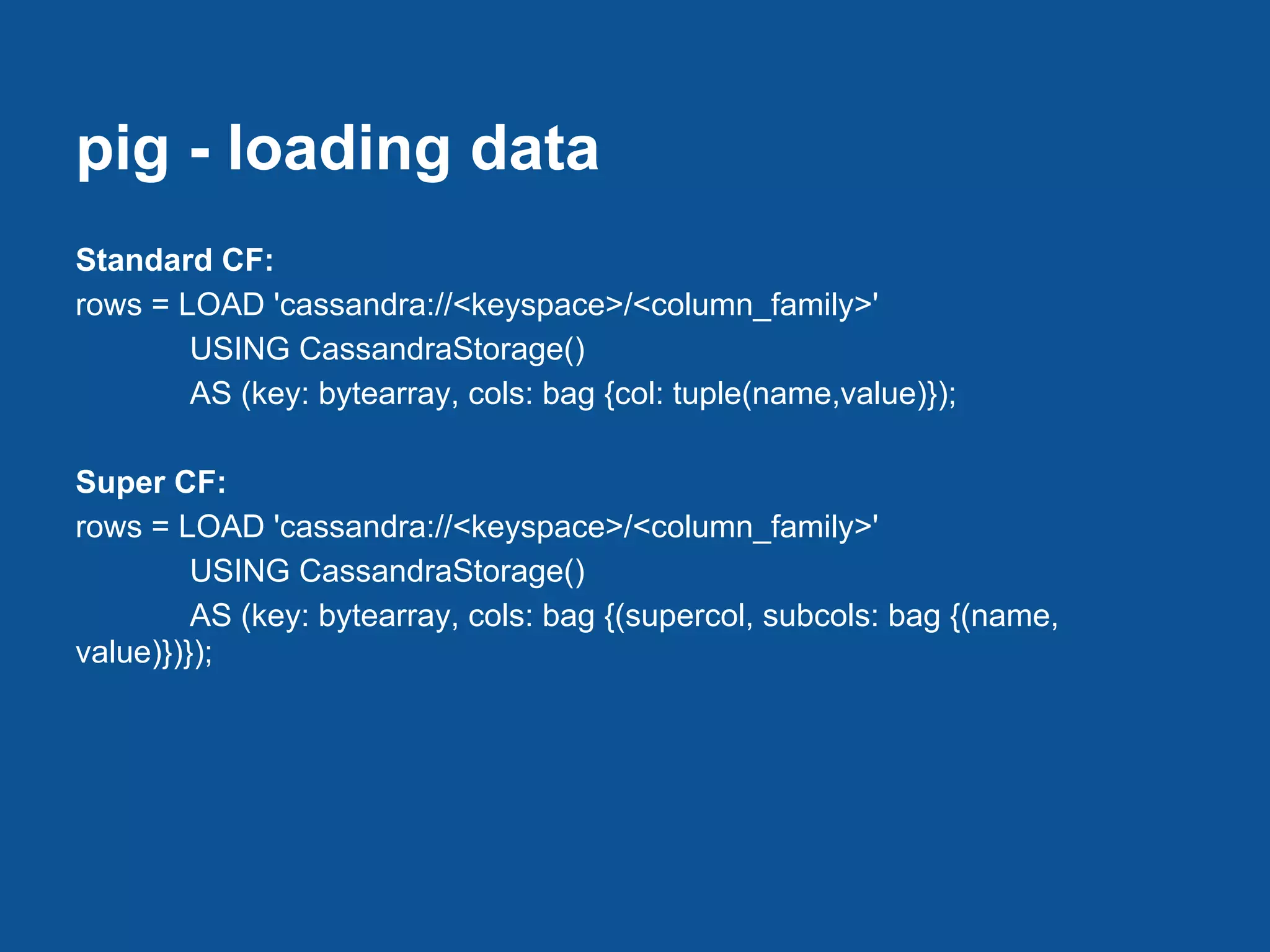

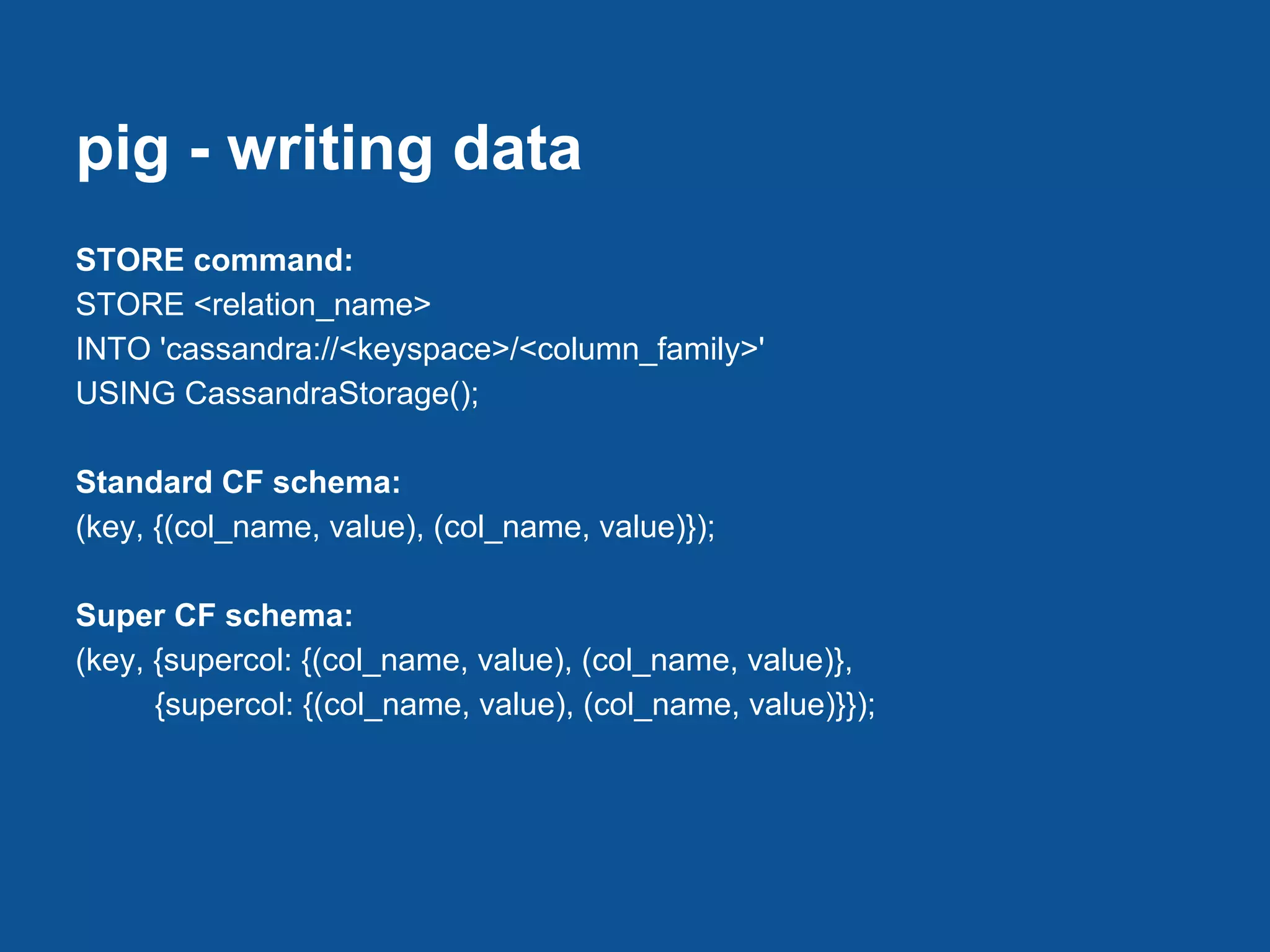

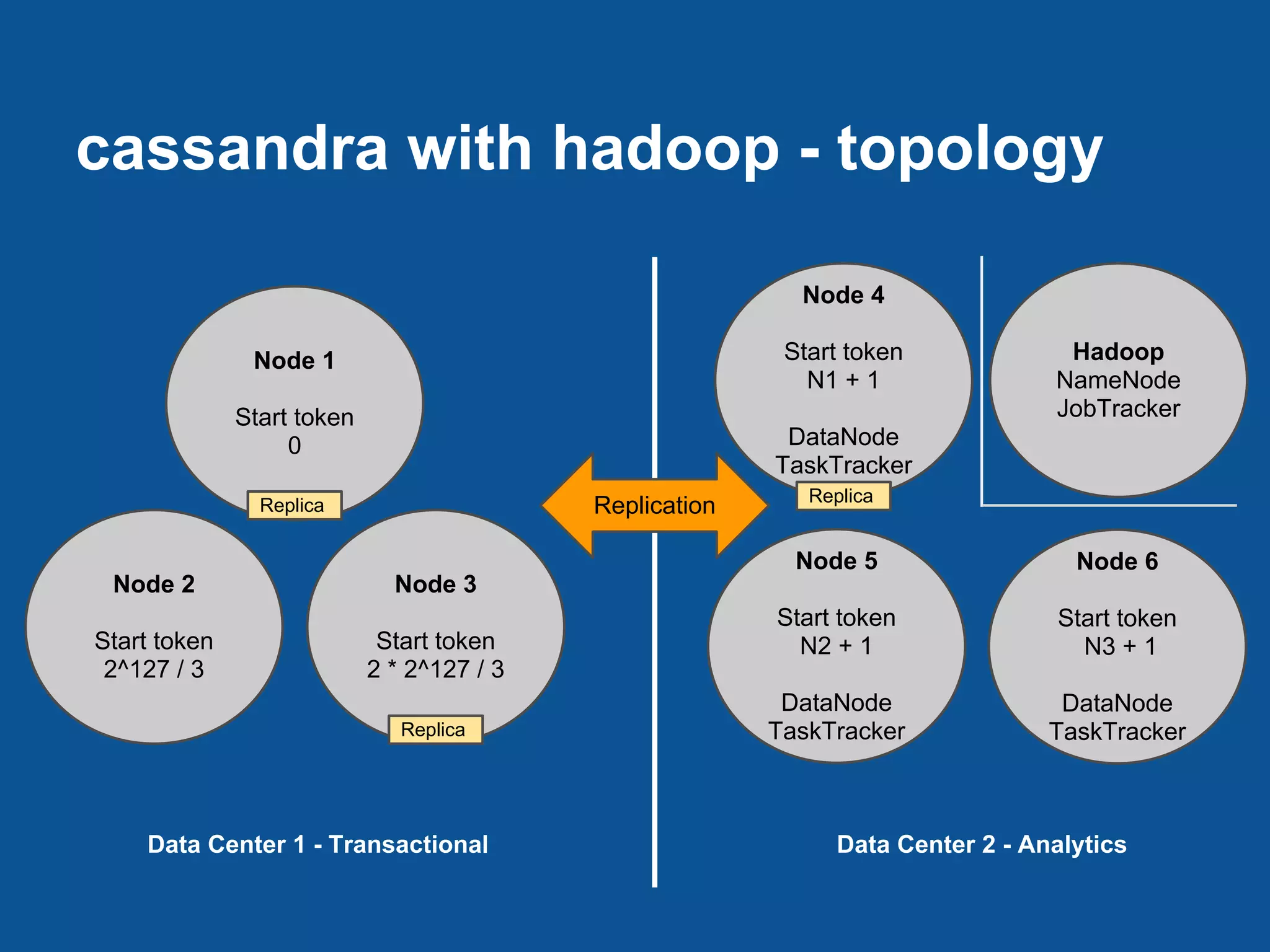

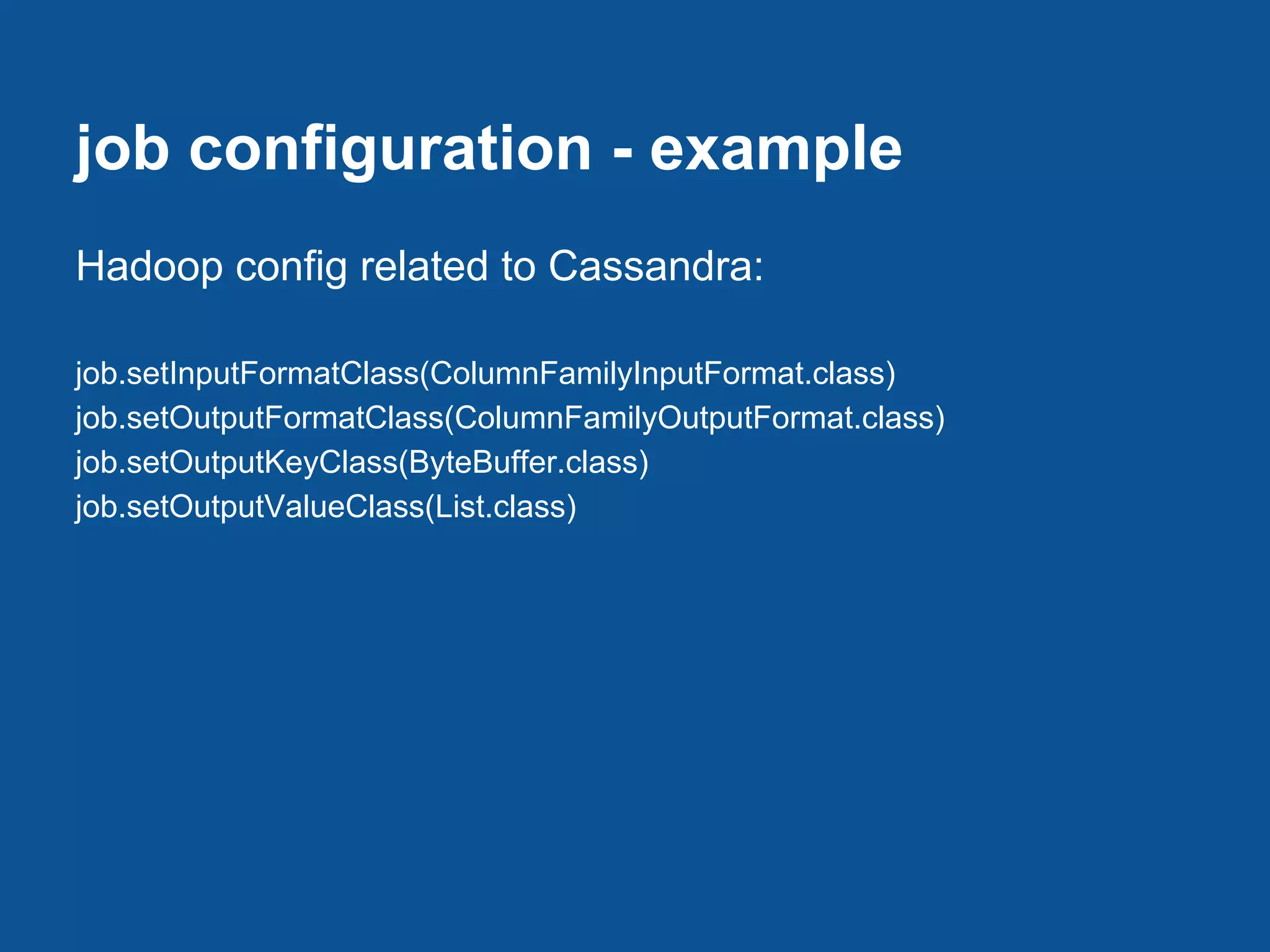

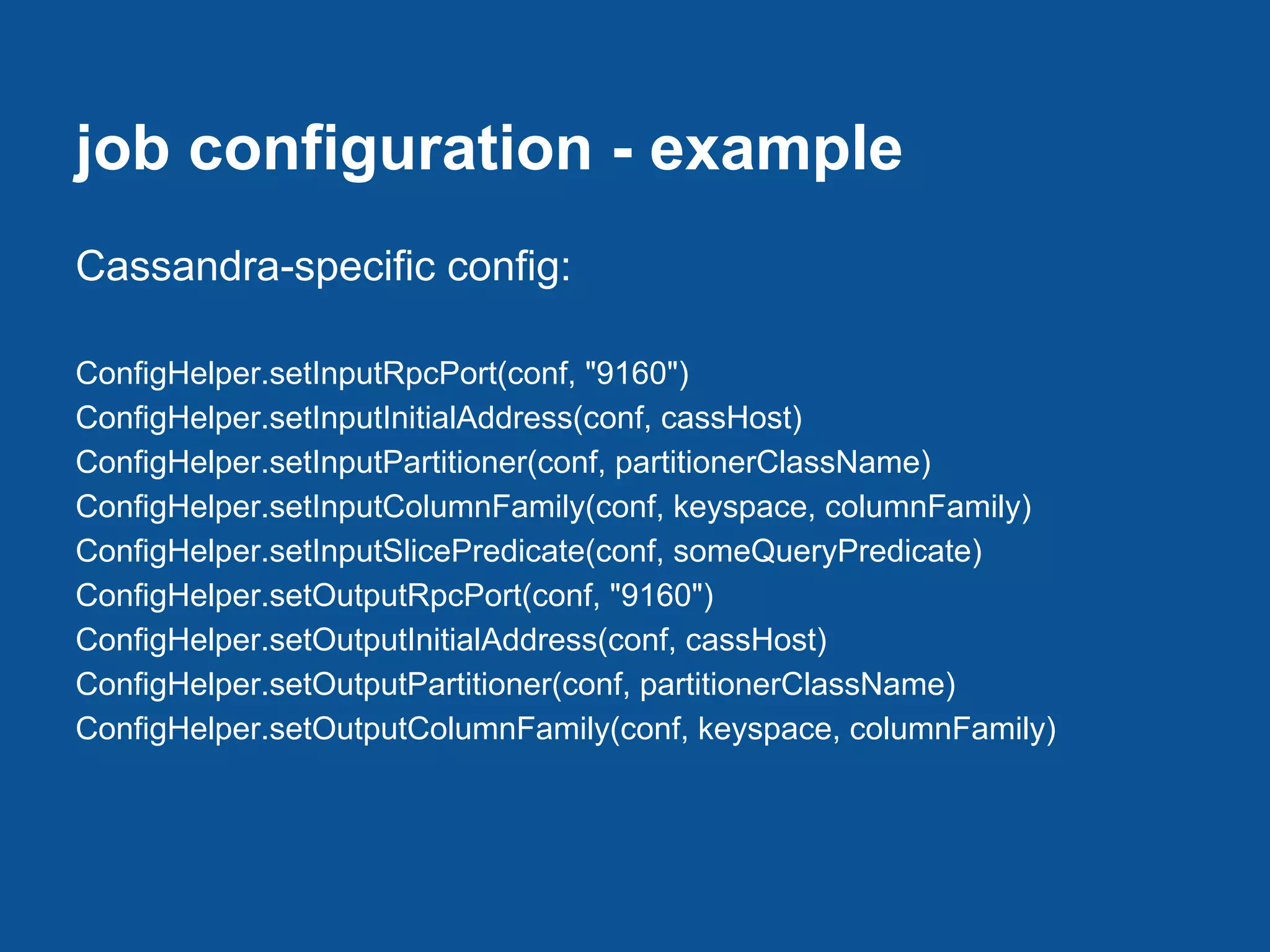

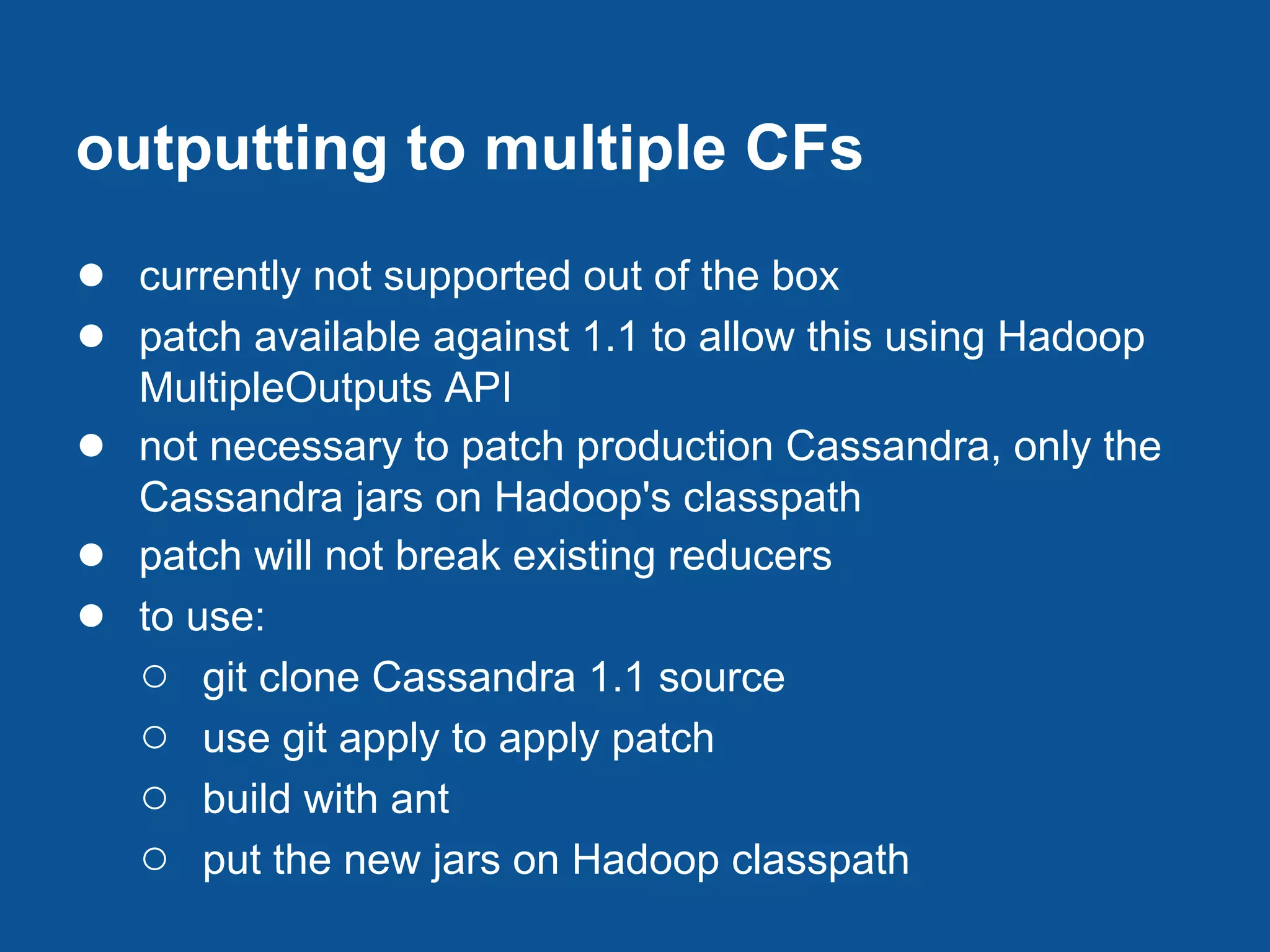

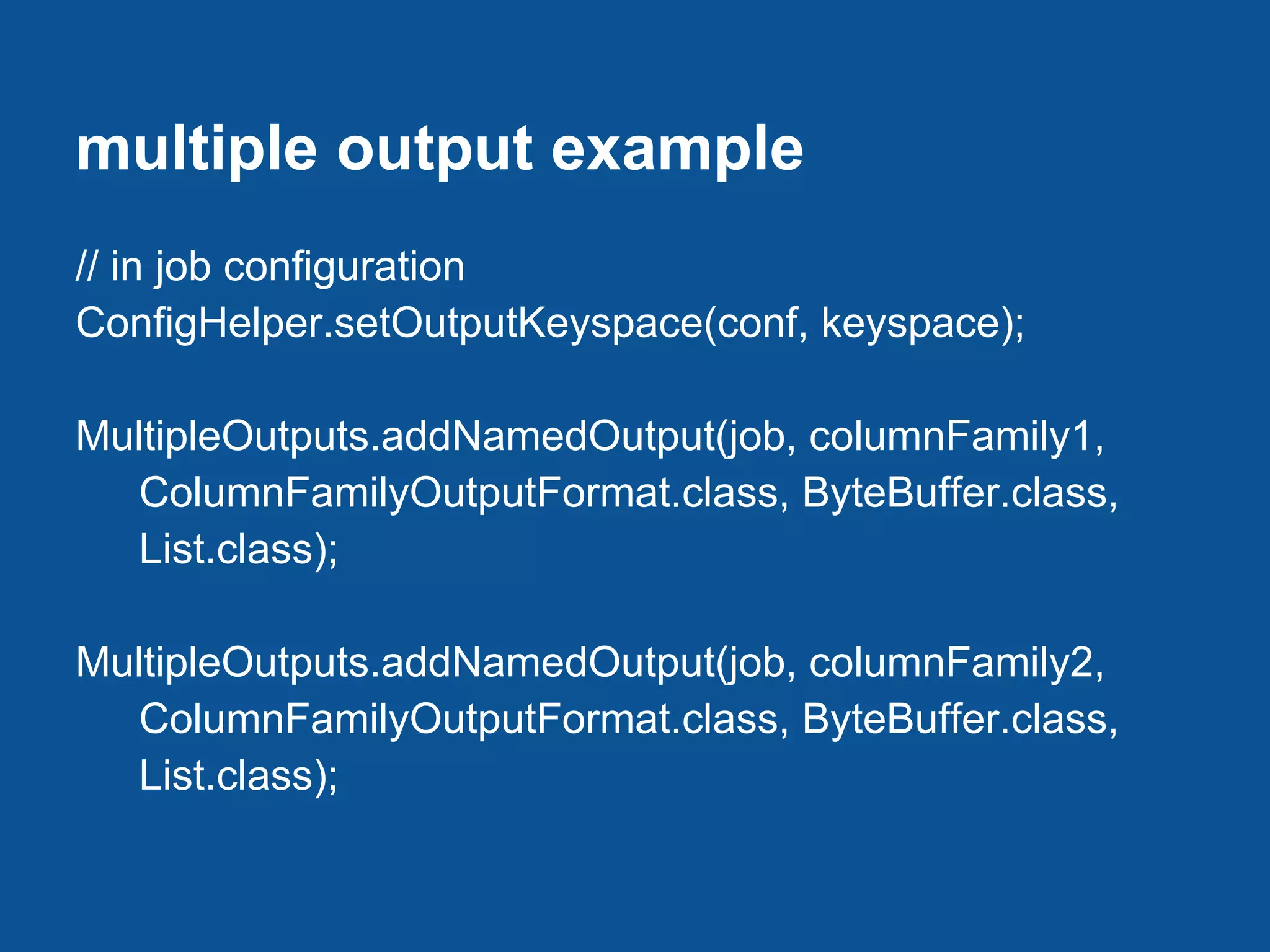

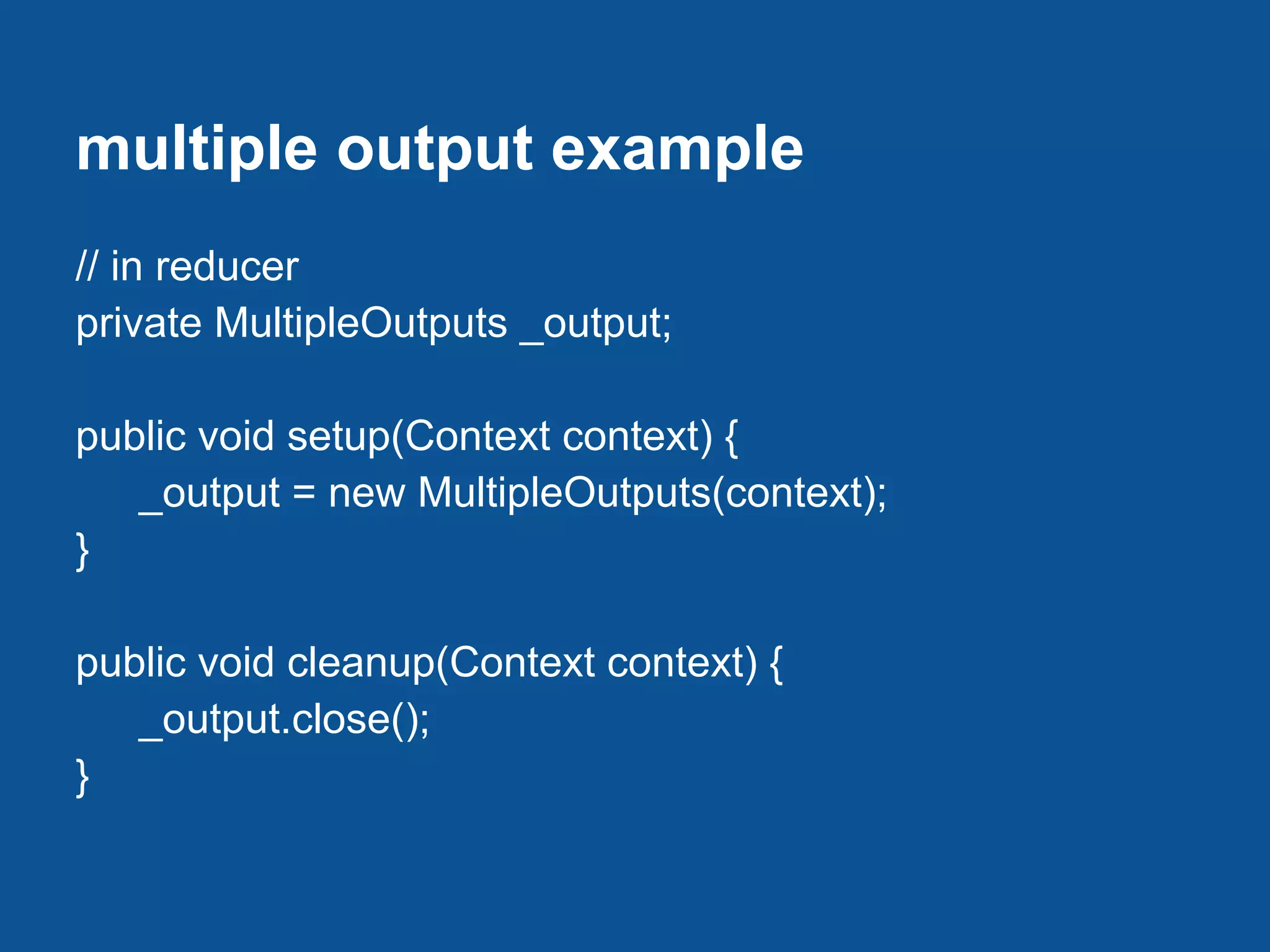

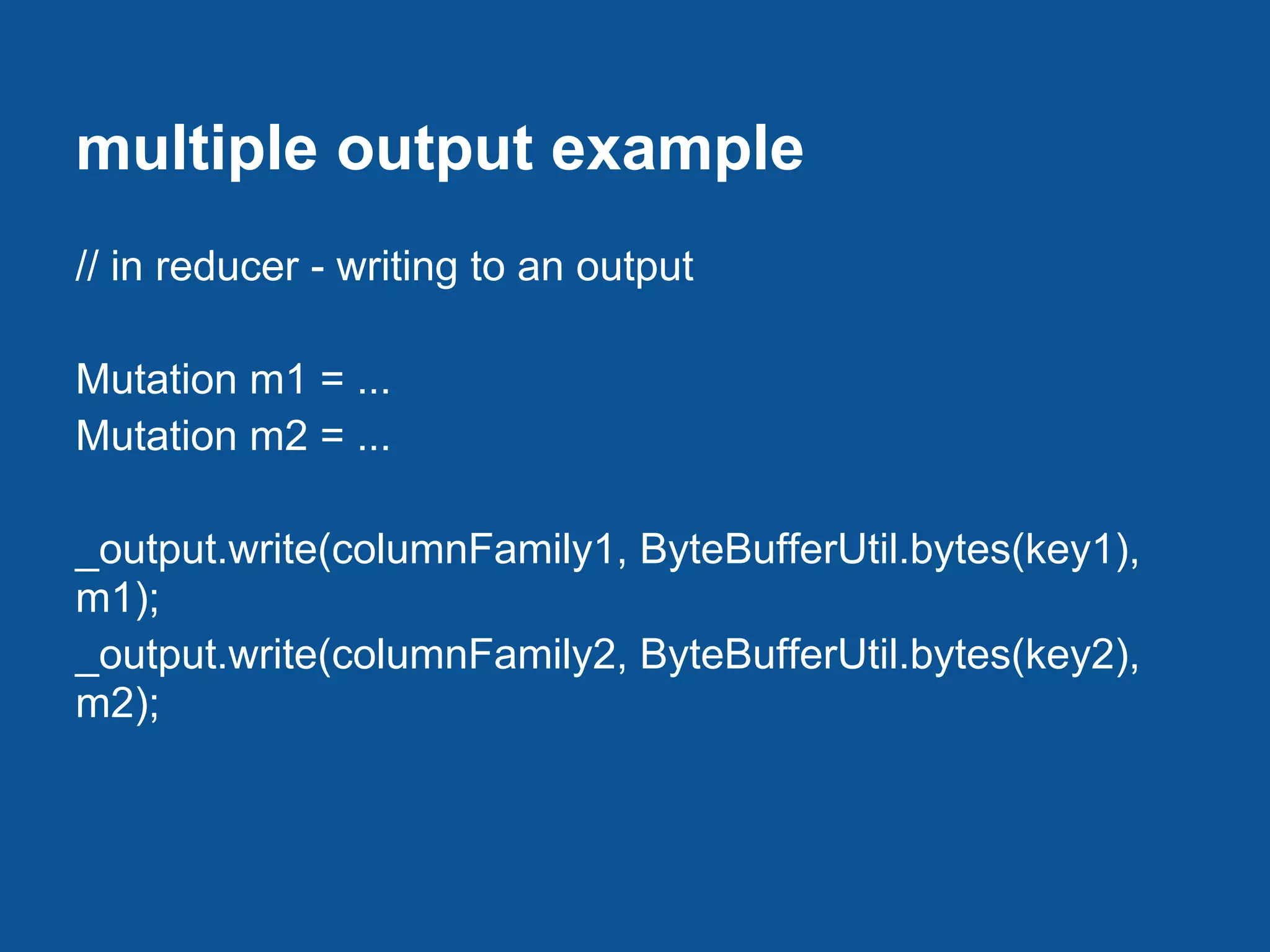

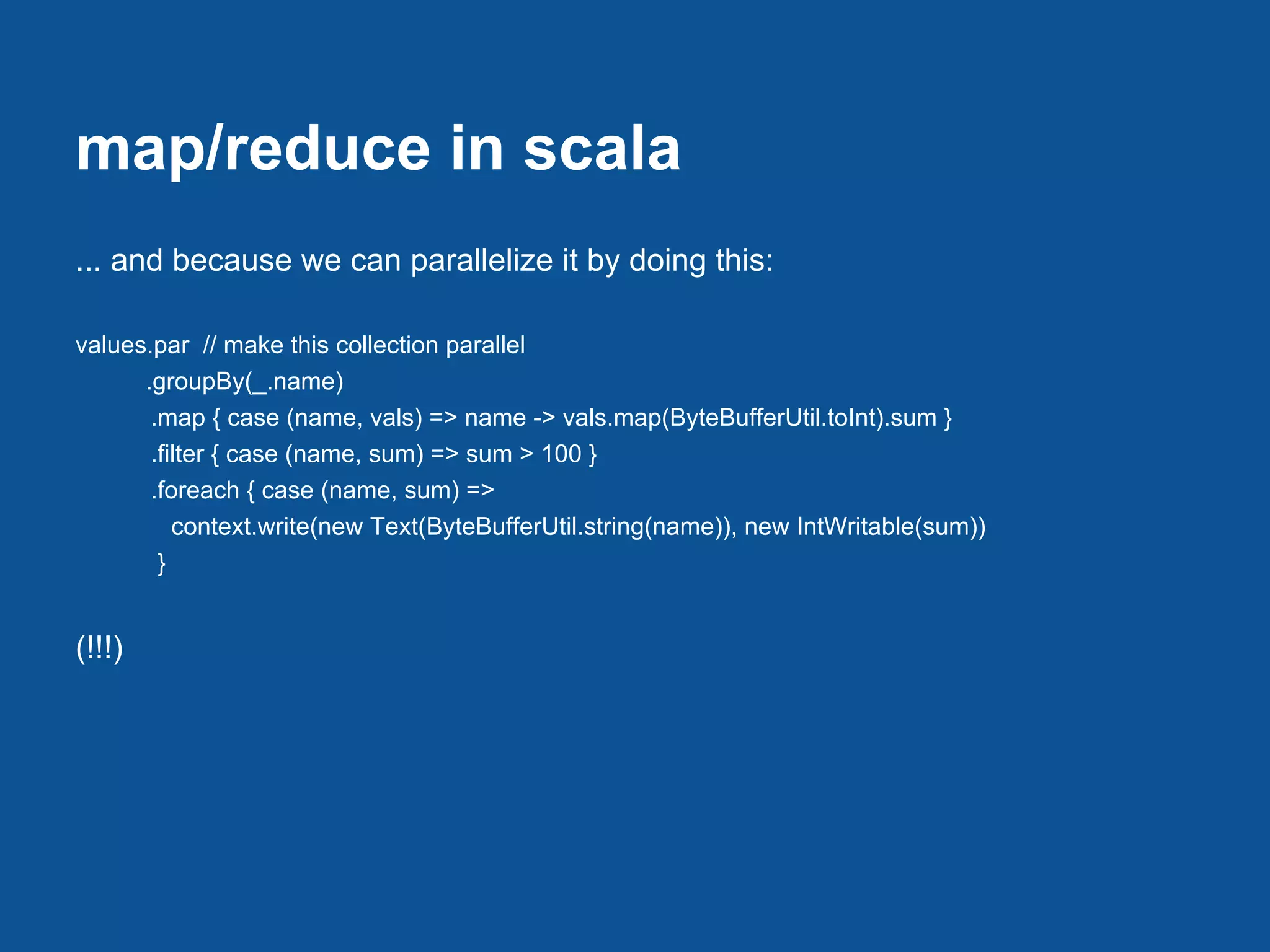

This document covers online analytics using Hadoop and Cassandra, detailing the advantages of integrating the two technologies for live data analysis. It provides an overview of Cassandra's architecture, data model, and query language, and explains how to configure and utilize map/reduce jobs with Scala and Pig for data processing. The document also includes practical examples and best practices for using Cassandra with Hadoop for both transactional and analytics purposes.

![map/reduce in scala

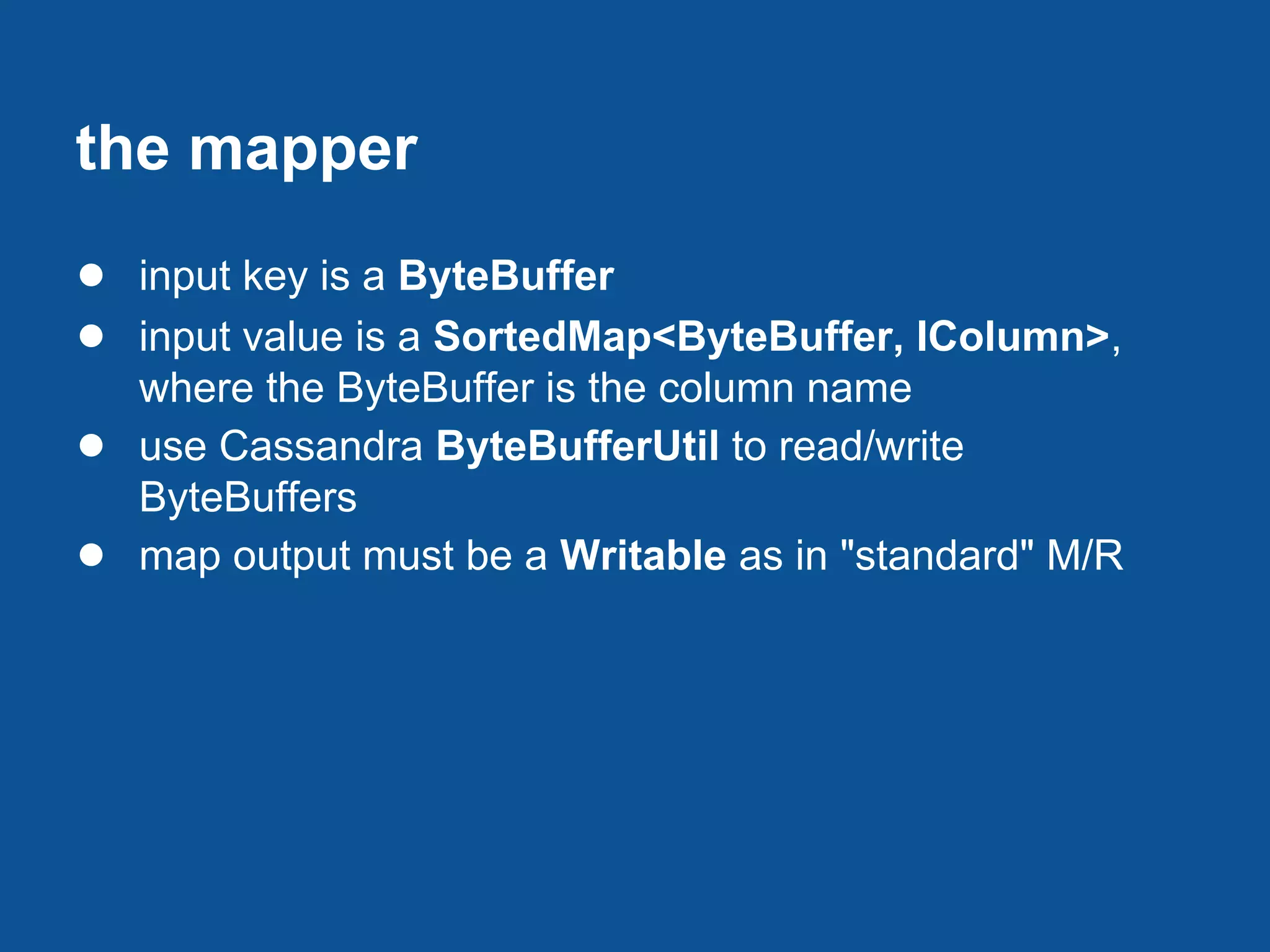

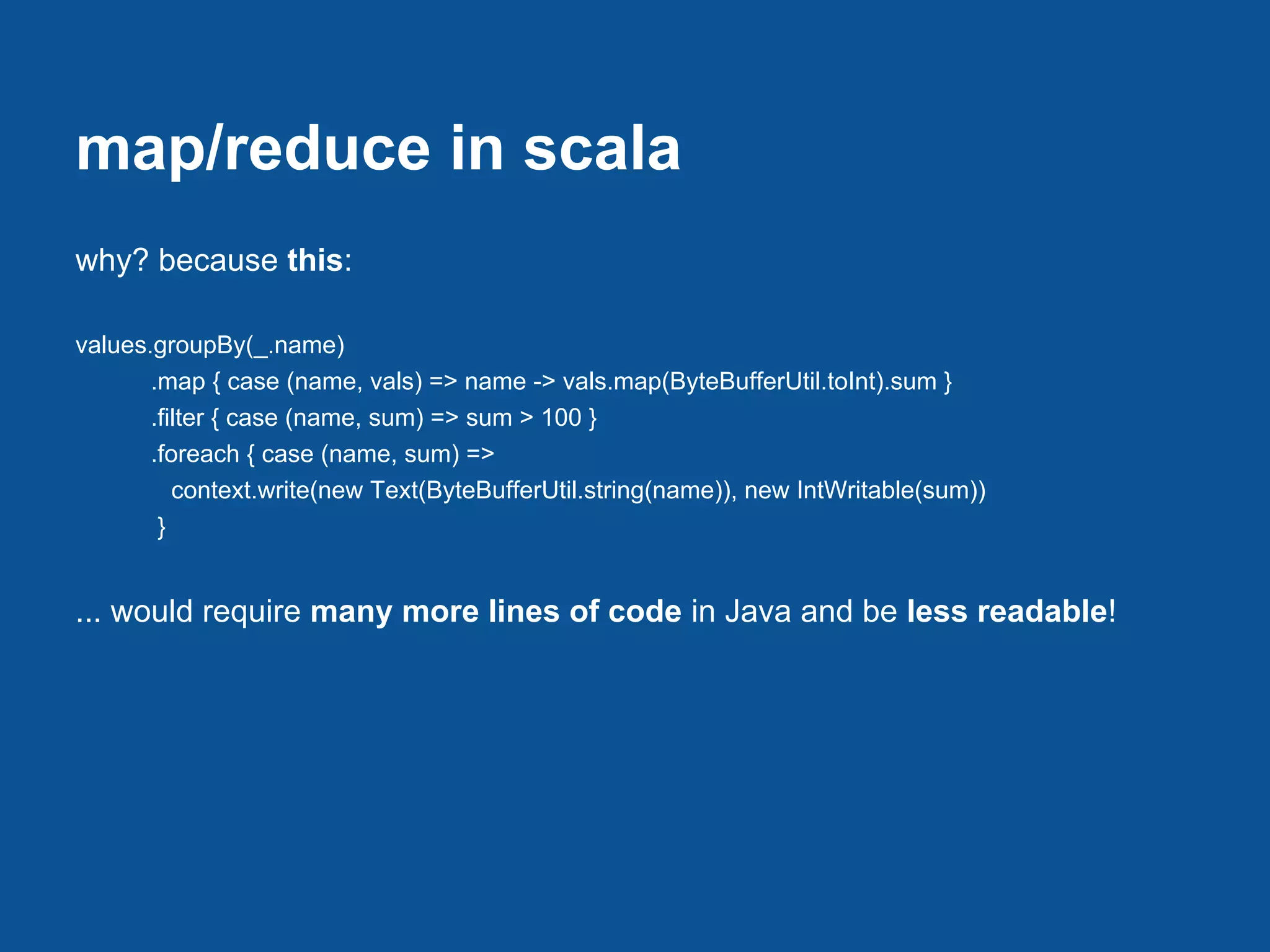

● add Scala library to Hadoop classpath

● import scala.collection.JavaConversions._ to get Scala

coolness with Java collections

● main executable is an empty class with the

implementation in a companion object

● context parm in map & reduce methods must be:

○ context: Mapper[ByteBuffer, SortedMap[ByteBuffer, IColumn],

<MapKeyClass>, <MapValueClass>]#Context

○ context: Reducer[<MapKeyClass>, <MapValueClass>, ByteBuffer,

java.util.List[Mutation]]#Context

○ it will compile but not function correctly without the type information](https://image.slidesharecdn.com/presentation-120518073922-phpapp01/75/Online-Analytics-with-Hadoop-and-Cassandra-34-2048.jpg)
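The points on this slide can be sketched roughly as follows. This is a minimal illustration, not the presenter's code: the names `ColumnCount`, `CountMapper`, `CountReducer`, and the `count` output column are hypothetical, `Text` and `LongWritable` stand in for `<MapKeyClass>` and `<MapValueClass>`, and the Cassandra connection settings are left out. It assumes Hadoop and a Cassandra 1.x jar (which provides `IColumn` and the Thrift `Mutation` classes) are on the classpath, and a Scala version that still ships `scala.collection.JavaConversions` (it was removed in Scala 2.13).

```scala
import java.nio.ByteBuffer
import java.util.SortedMap

import scala.collection.JavaConversions._

import org.apache.cassandra.db.IColumn
import org.apache.cassandra.thrift.{Column, ColumnOrSuperColumn, Mutation}
import org.apache.cassandra.utils.ByteBufferUtil
import org.apache.hadoop.conf.Configuration
import org.apache.hadoop.io.{LongWritable, Text}
import org.apache.hadoop.mapreduce.{Job, Mapper, Reducer}

// Empty class; the implementation lives in the companion object below.
class ColumnCount

object ColumnCount {

  // Emits (column name, 1) for every column of every input row.
  class CountMapper
      extends Mapper[ByteBuffer, SortedMap[ByteBuffer, IColumn], Text, LongWritable] {

    // The context type is spelled out in full, as the slide requires.
    override def map(
        key: ByteBuffer,
        columns: SortedMap[ByteBuffer, IColumn],
        context: Mapper[ByteBuffer, SortedMap[ByteBuffer, IColumn], Text, LongWritable]#Context
    ): Unit = {
      // JavaConversions lets the java.util.Set be iterated like a Scala collection.
      for (name <- columns.keySet)
        context.write(new Text(ByteBufferUtil.string(name)), new LongWritable(1L))
    }
  }

  // Sums the counts and writes one mutation per column name.
  class CountReducer
      extends Reducer[Text, LongWritable, ByteBuffer, java.util.List[Mutation]] {

    override def reduce(
        key: Text,
        values: java.lang.Iterable[LongWritable],
        context: Reducer[Text, LongWritable, ByteBuffer, java.util.List[Mutation]]#Context
    ): Unit = {
      val total = values.map(_.get).sum

      // Hypothetical output layout: row key = column name, one column called "count".
      val column = new Column()
      column.setName(ByteBufferUtil.bytes("count"))
      column.setValue(ByteBufferUtil.bytes(total))
      column.setTimestamp(System.currentTimeMillis())

      val cosc = new ColumnOrSuperColumn()
      cosc.setColumn(column)
      val mutation = new Mutation()
      mutation.setColumn_or_supercolumn(cosc)

      context.write(
        ByteBufferUtil.bytes(key.toString),
        java.util.Collections.singletonList(mutation))
    }
  }

  def main(args: Array[String]): Unit = {
    val job = new Job(new Configuration(), "column-count")
    job.setJarByClass(classOf[ColumnCount])
    job.setMapperClass(classOf[CountMapper])
    job.setReducerClass(classOf[CountReducer])
    job.setMapOutputKeyClass(classOf[Text])
    job.setMapOutputValueClass(classOf[LongWritable])
    // Cassandra input/output formats and connection settings are omitted here.
    System.exit(if (job.waitForCompletion(true)) 0 else 1)
  }
}
```

Marking `map` and `reduce` with `override` is a deliberate choice in this sketch. If the `context` type does not match the inherited signature, the Scala compiler rejects the method instead of silently treating it as a new overload. That overload case is presumably what the slide means by code that compiles but does not function correctly, since Hadoop would then call the default implementations.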