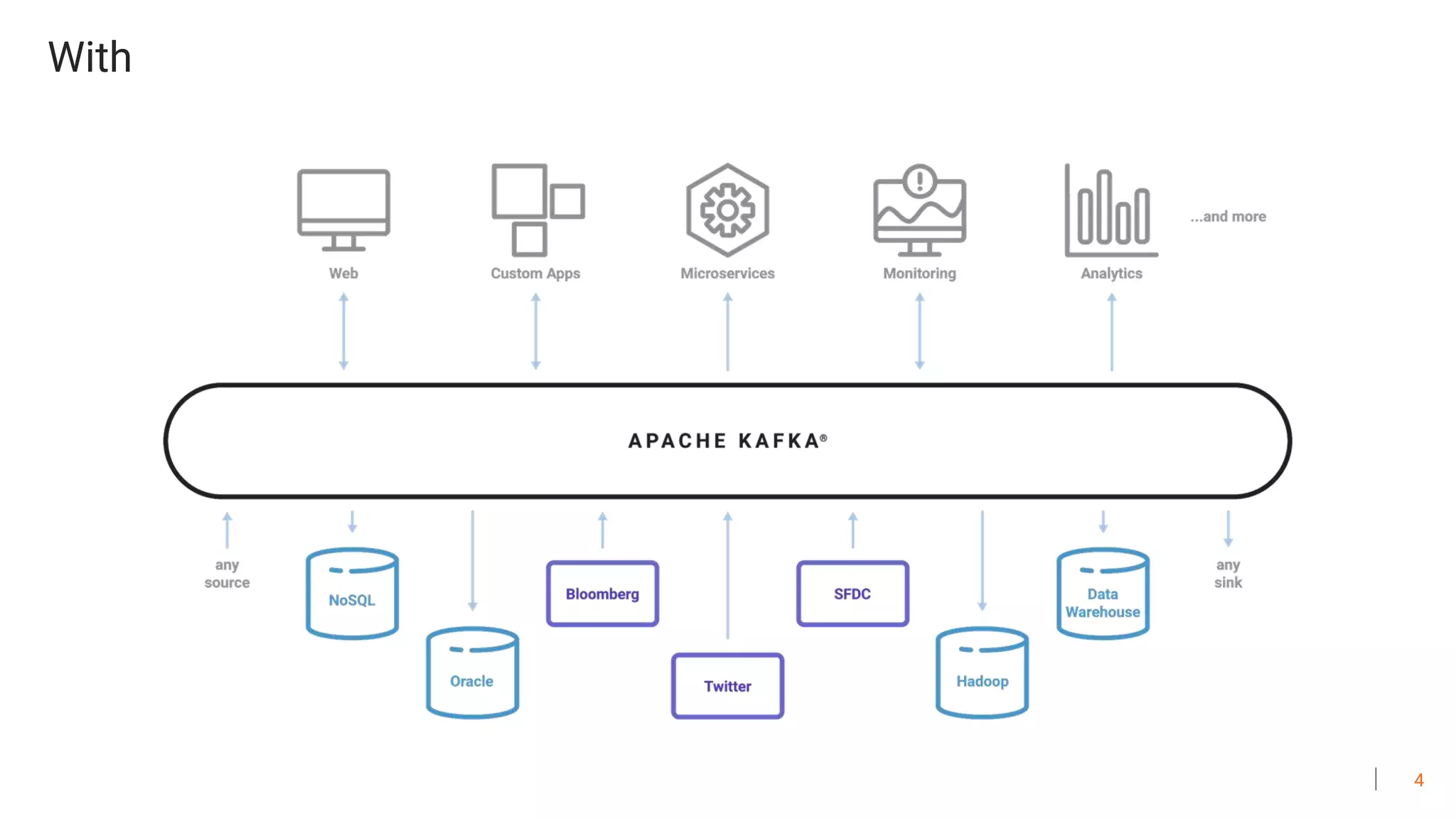

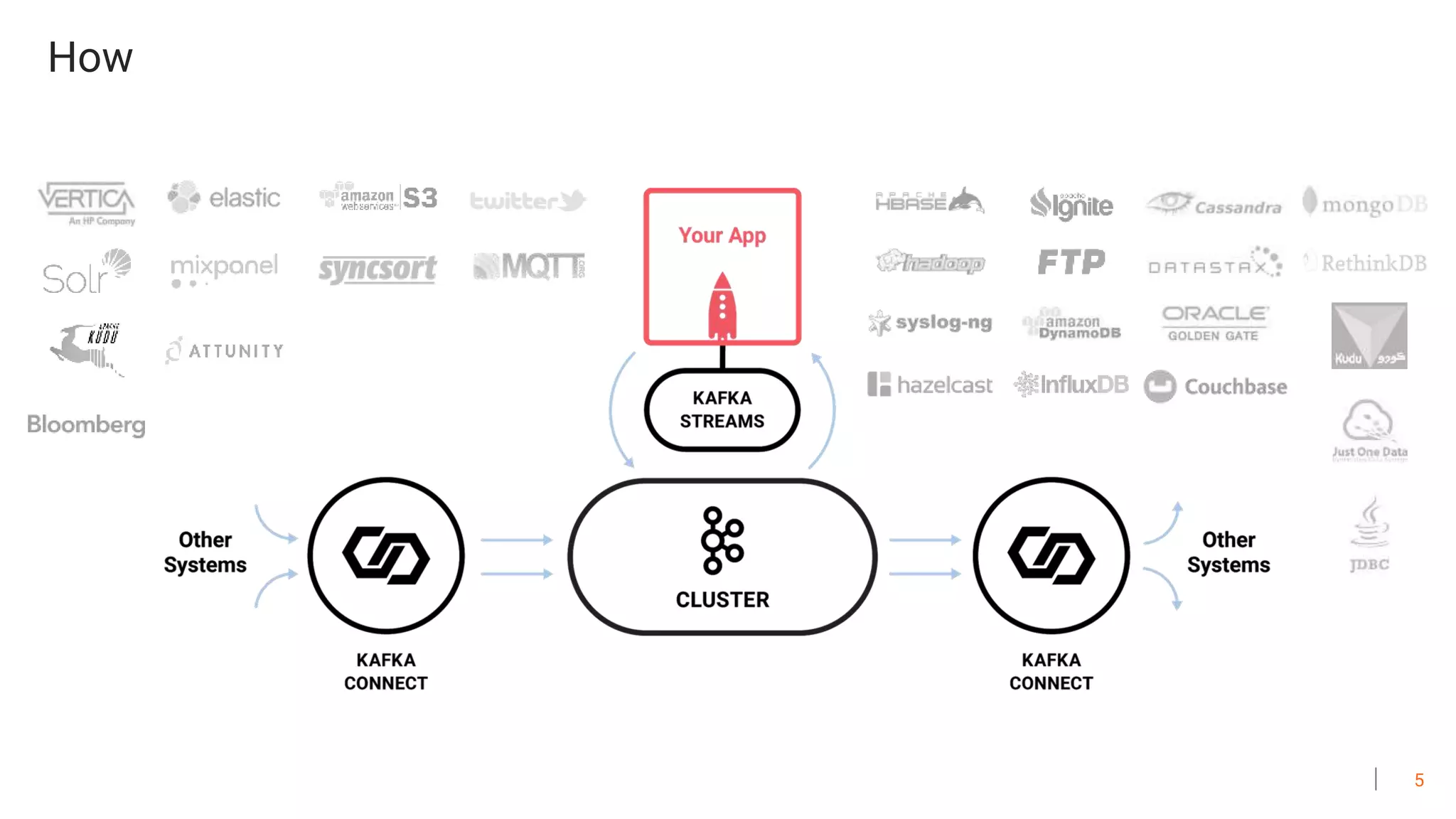

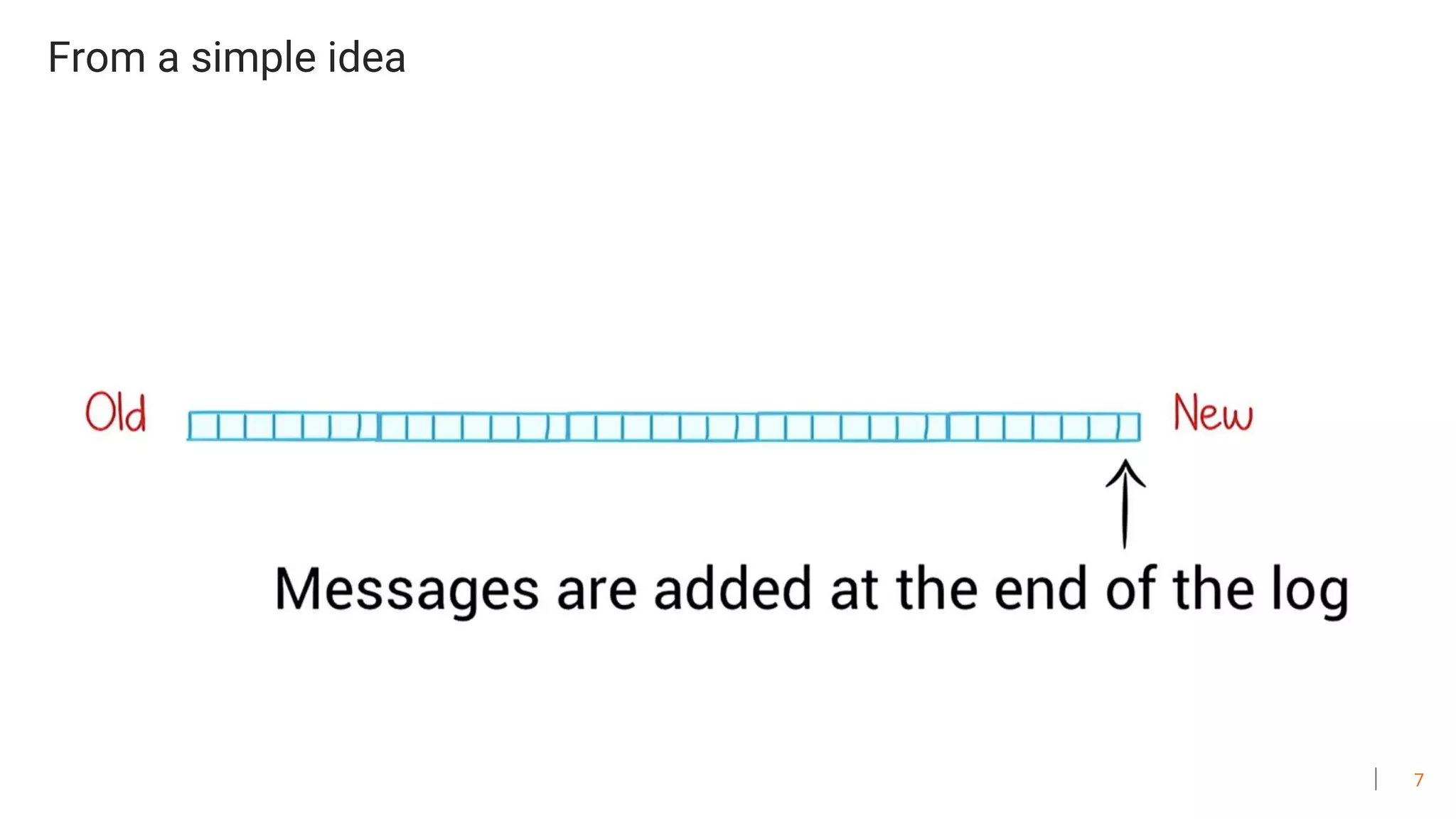

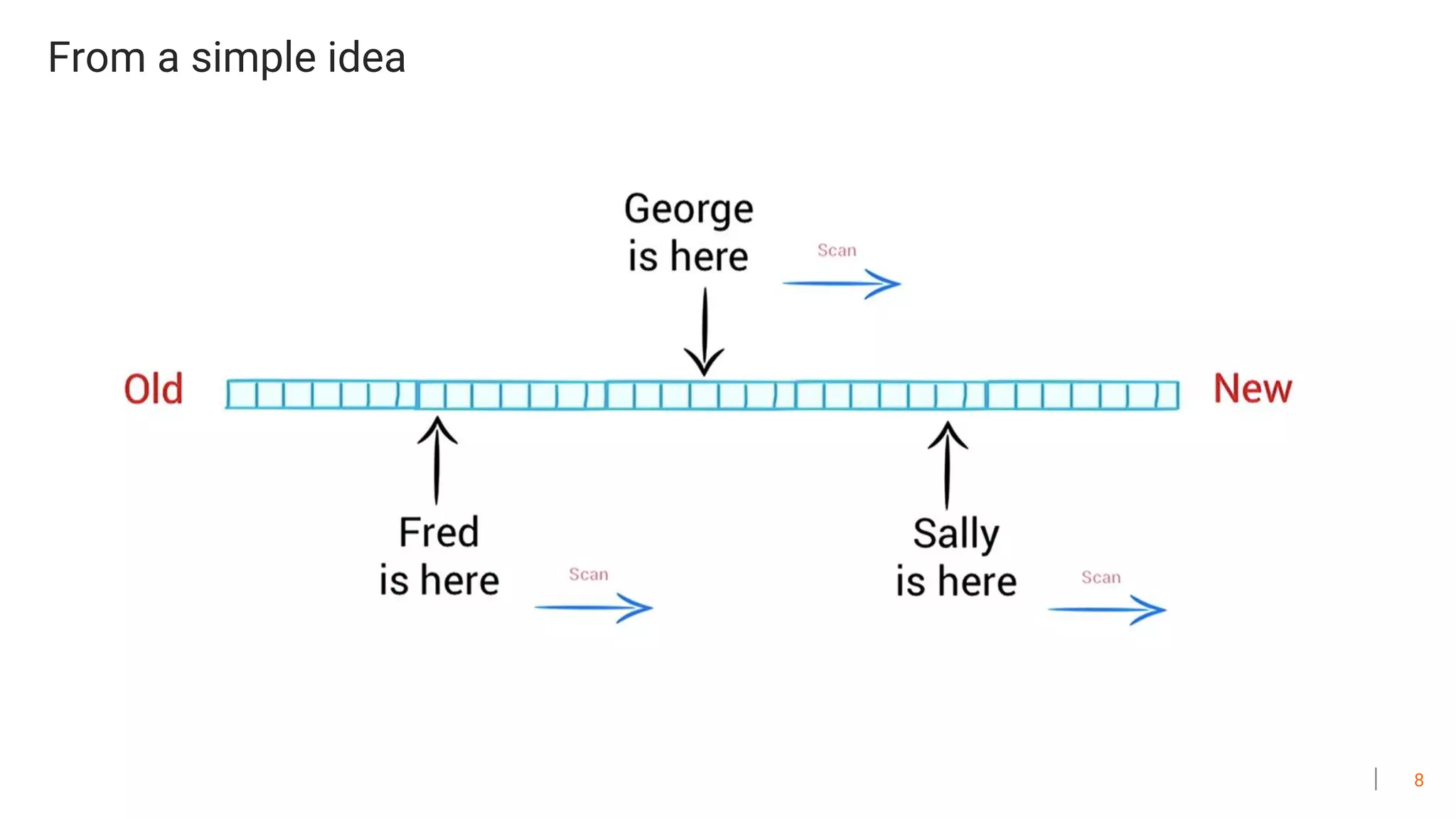

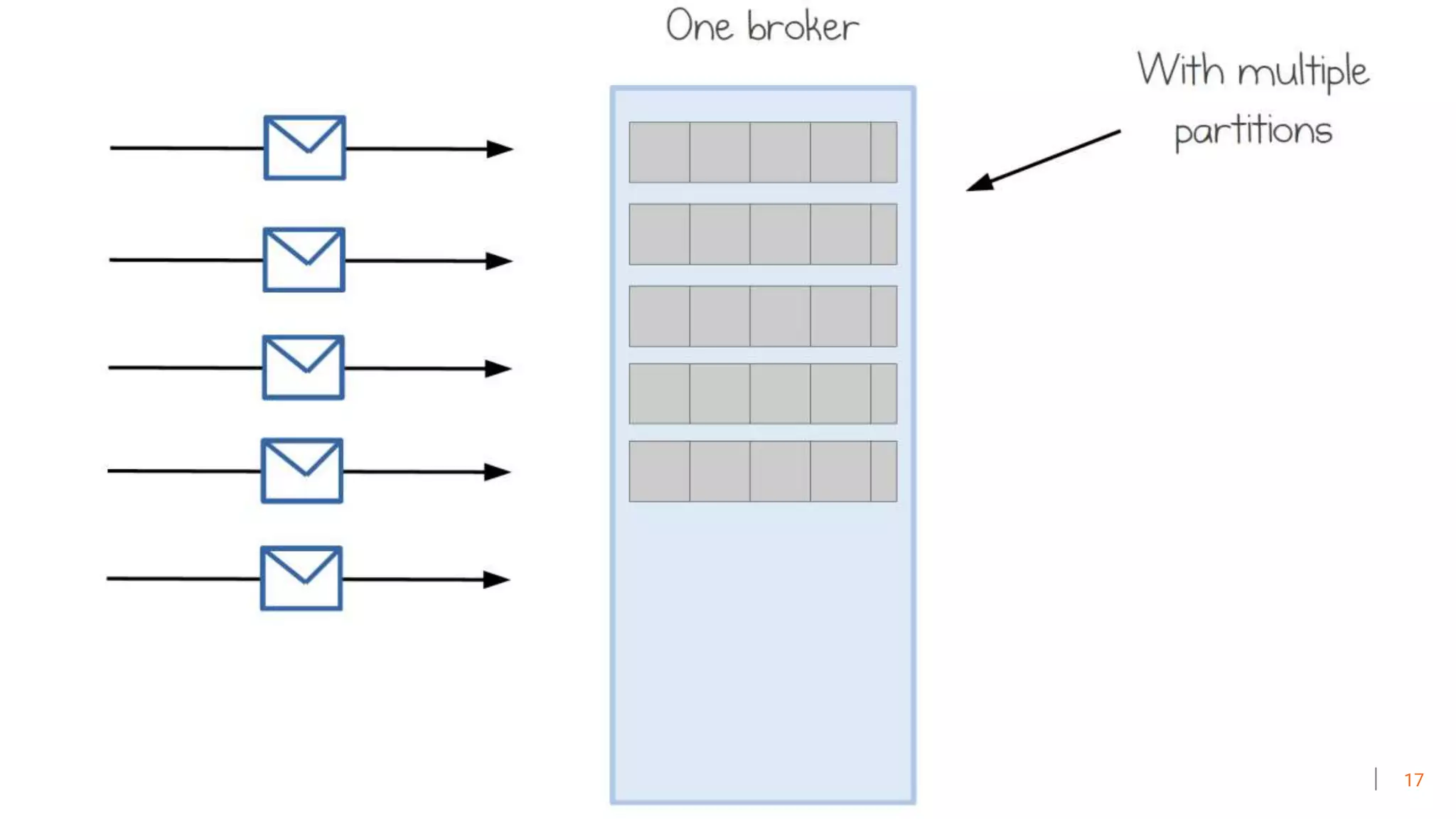

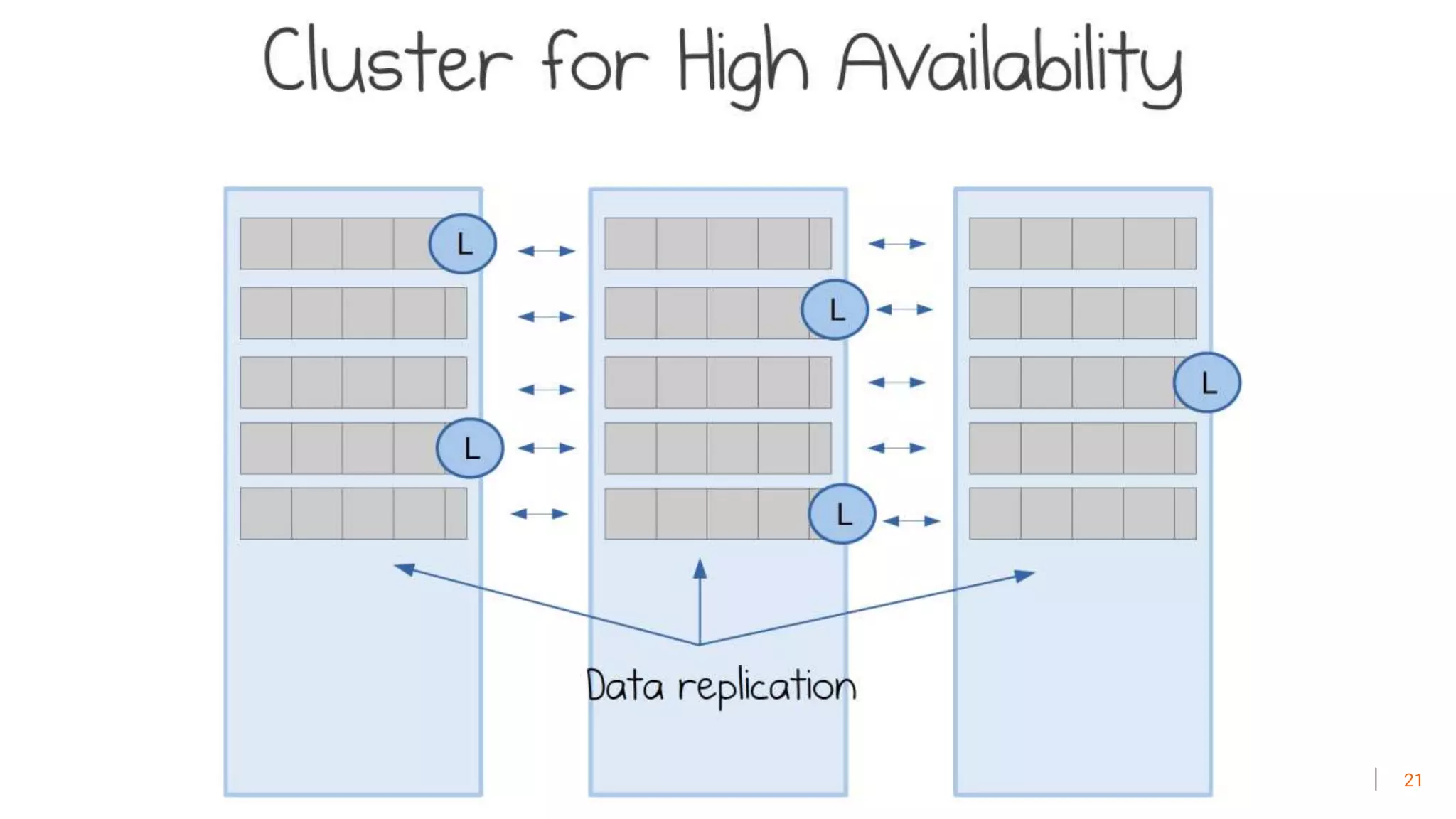

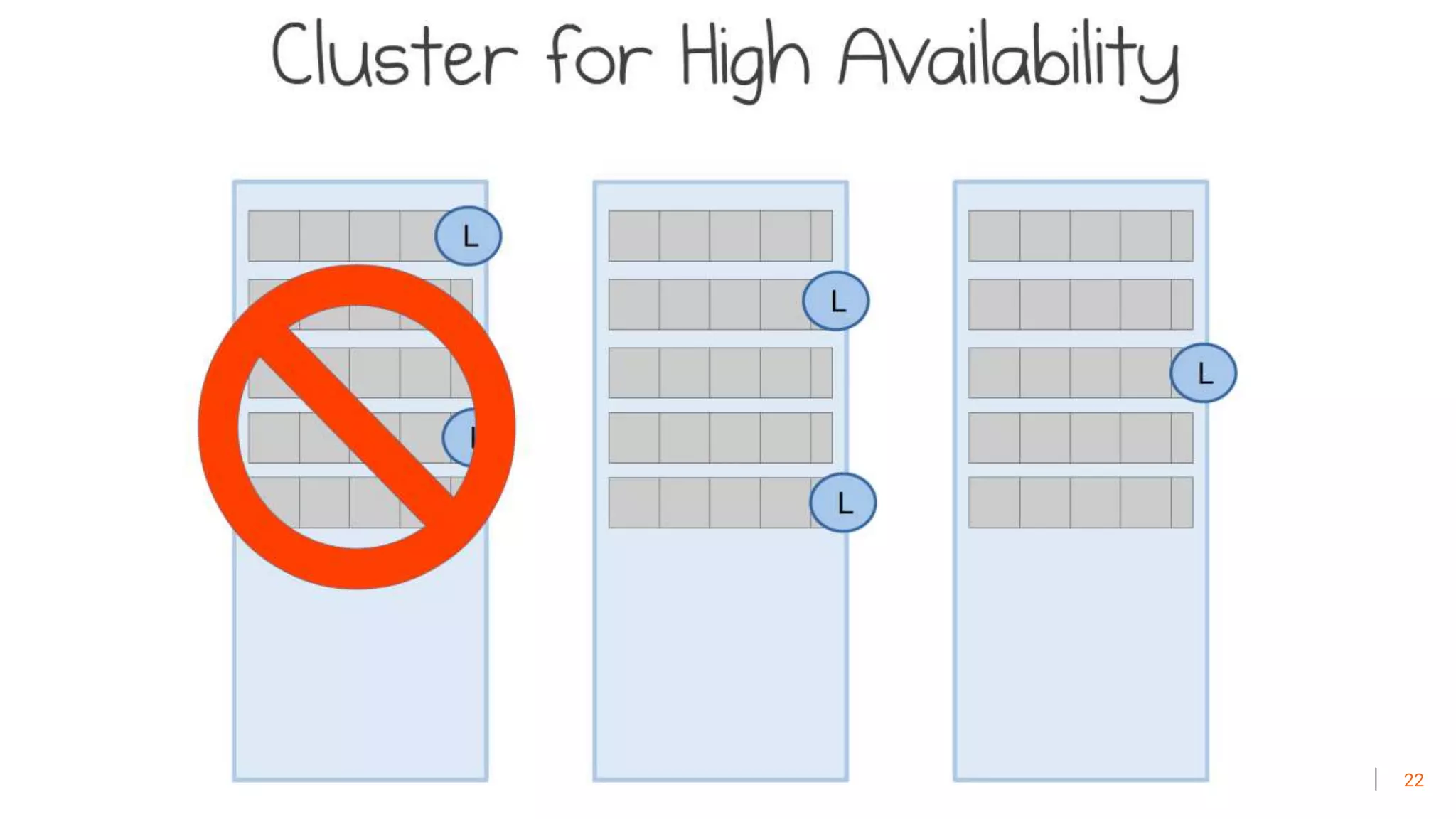

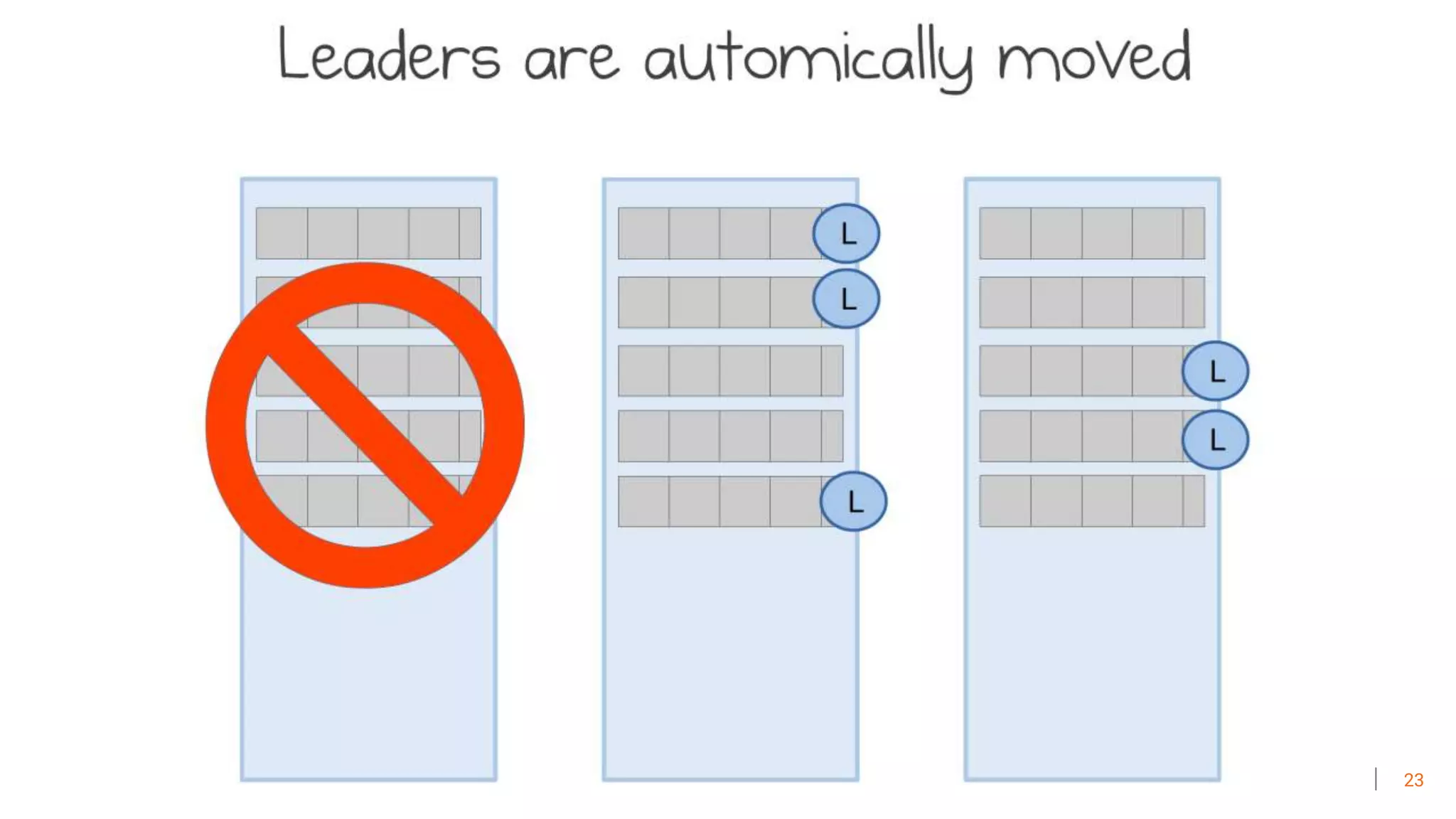

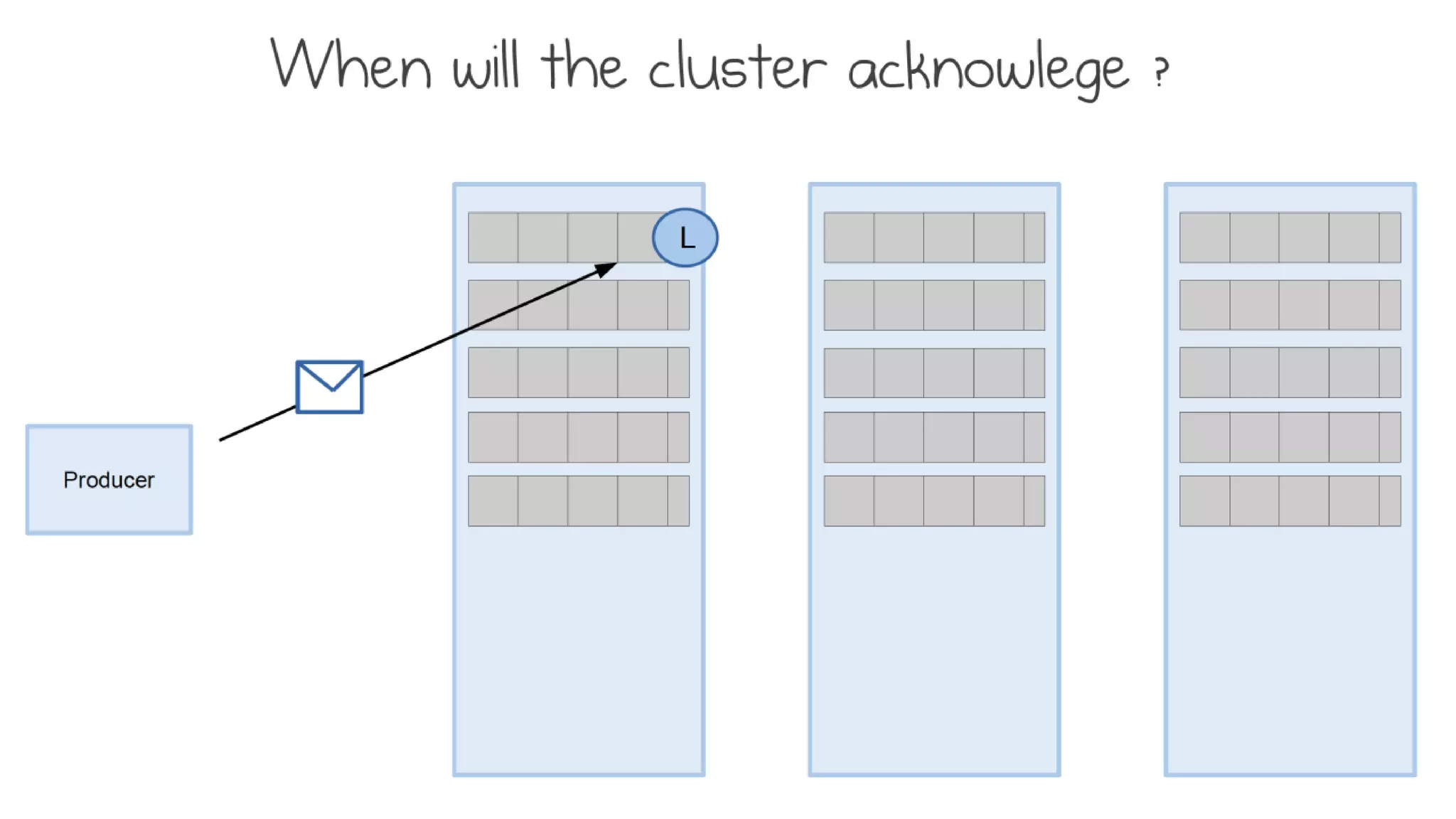

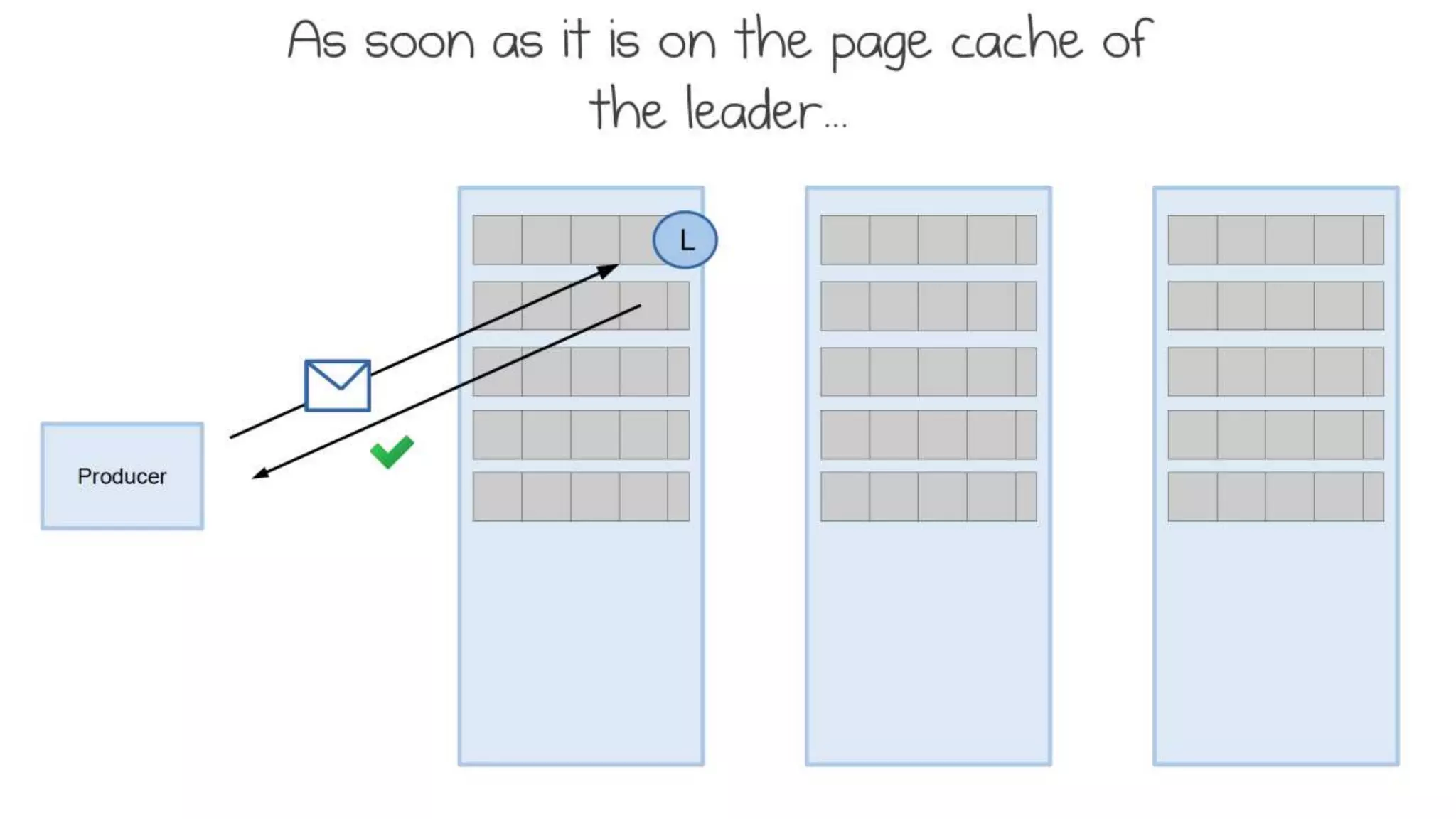

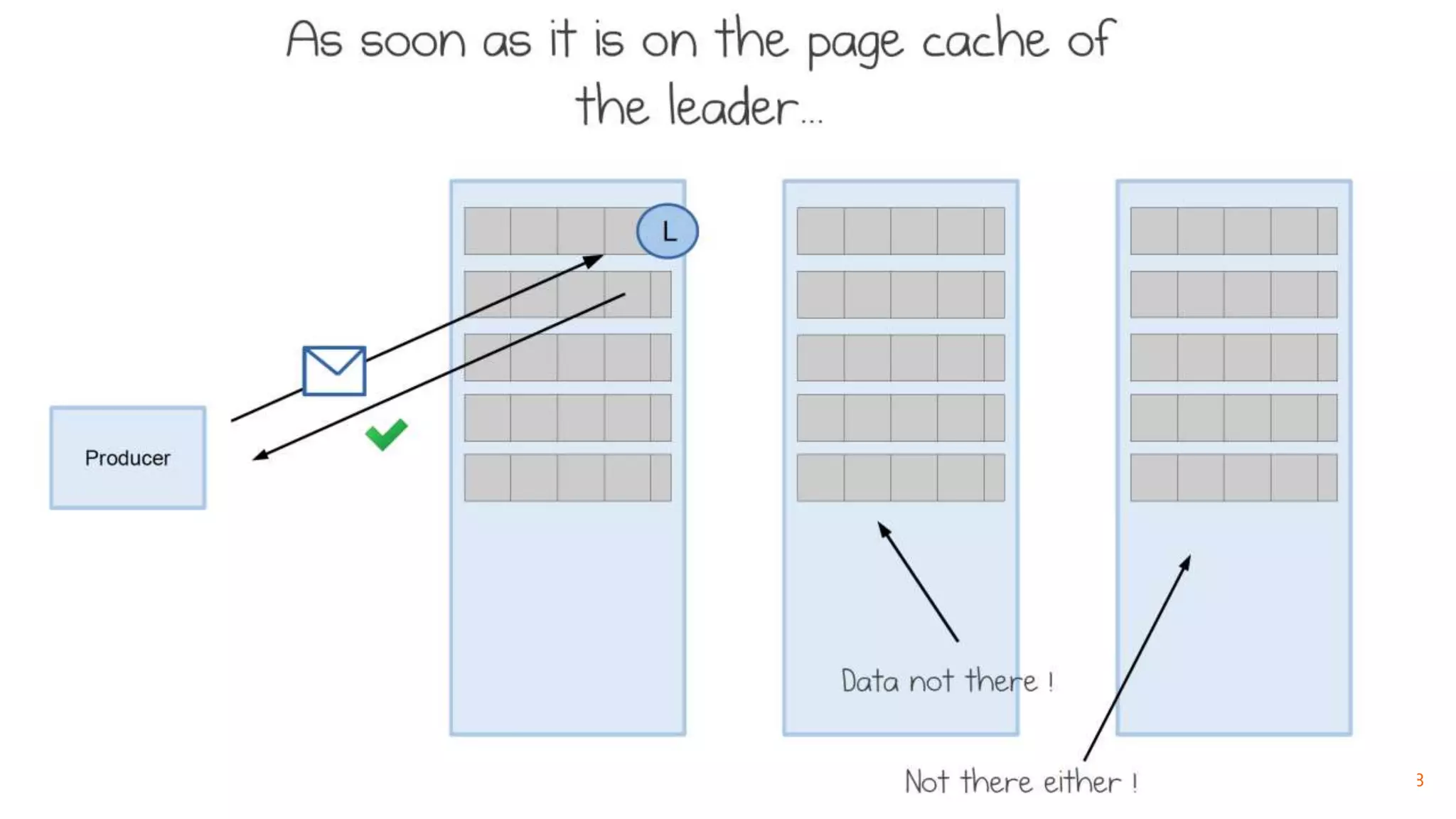

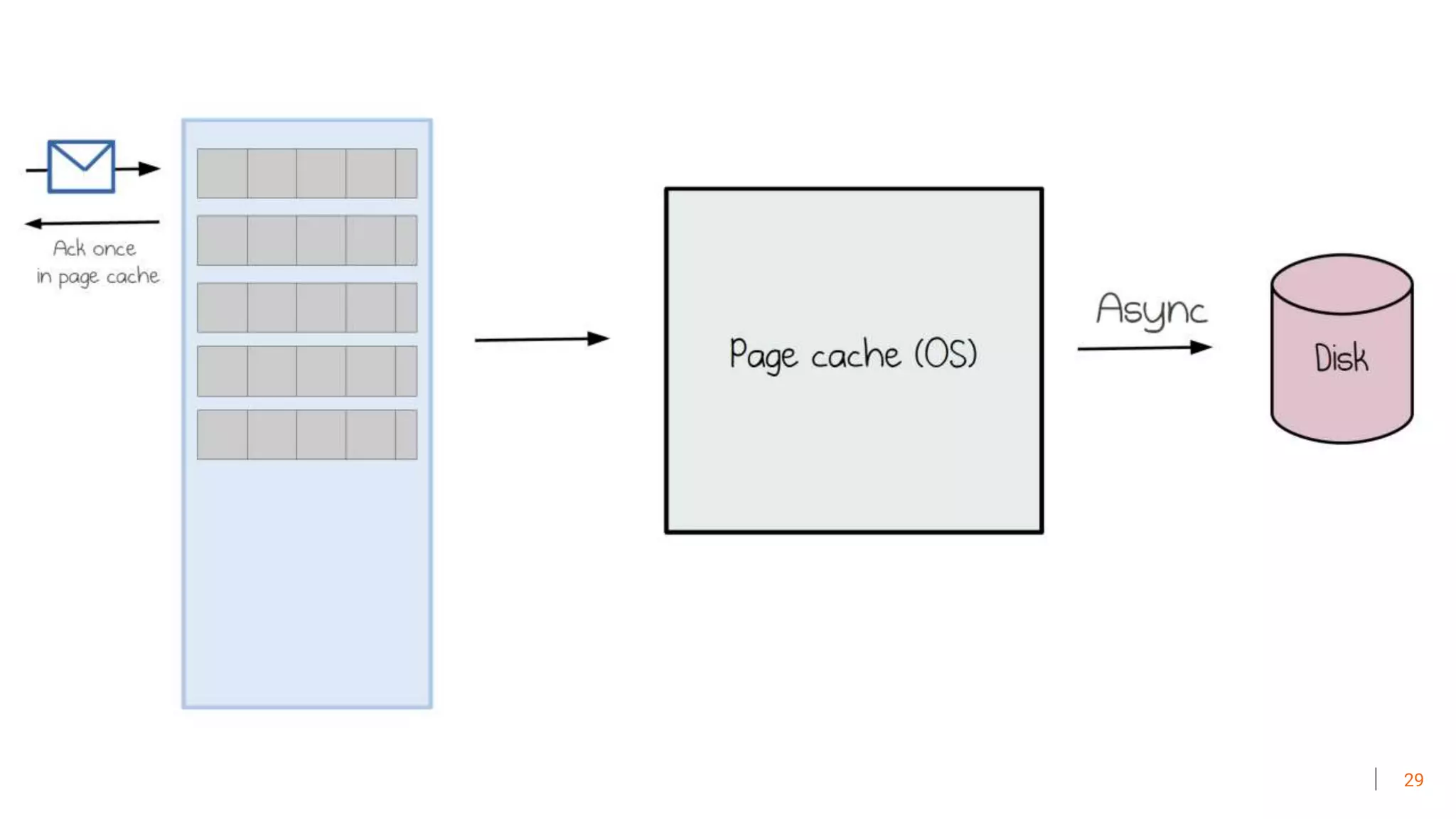

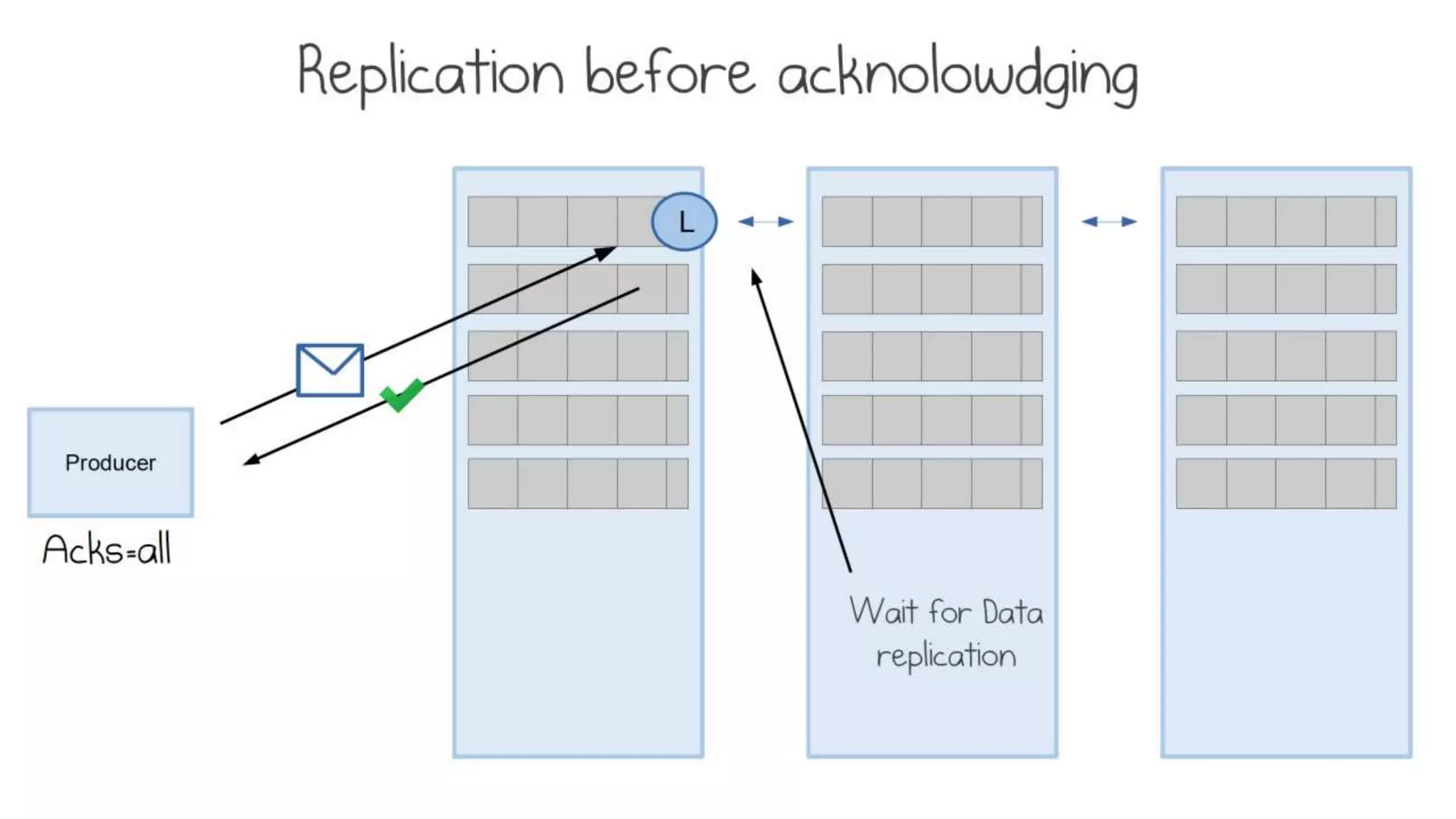

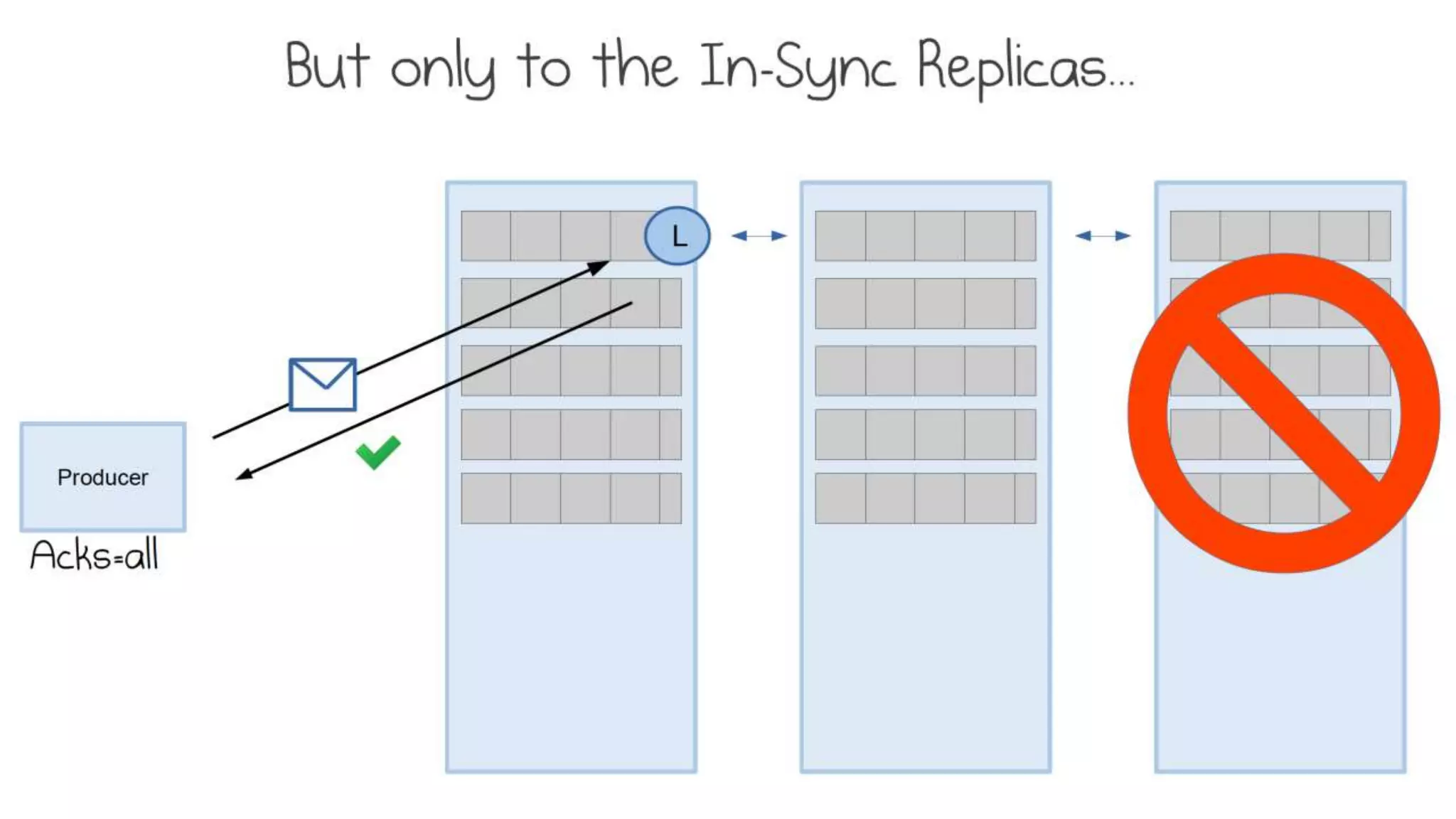

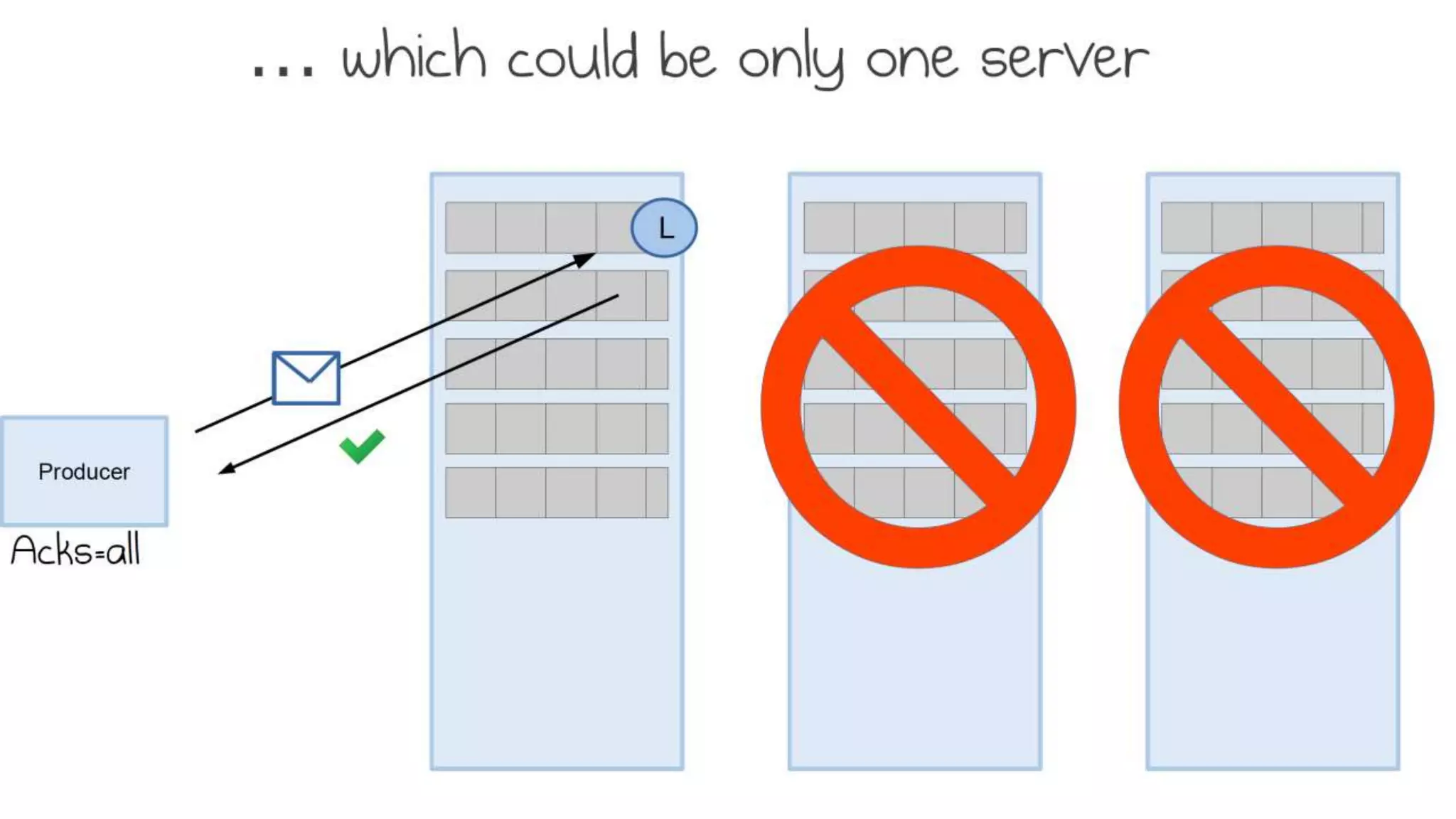

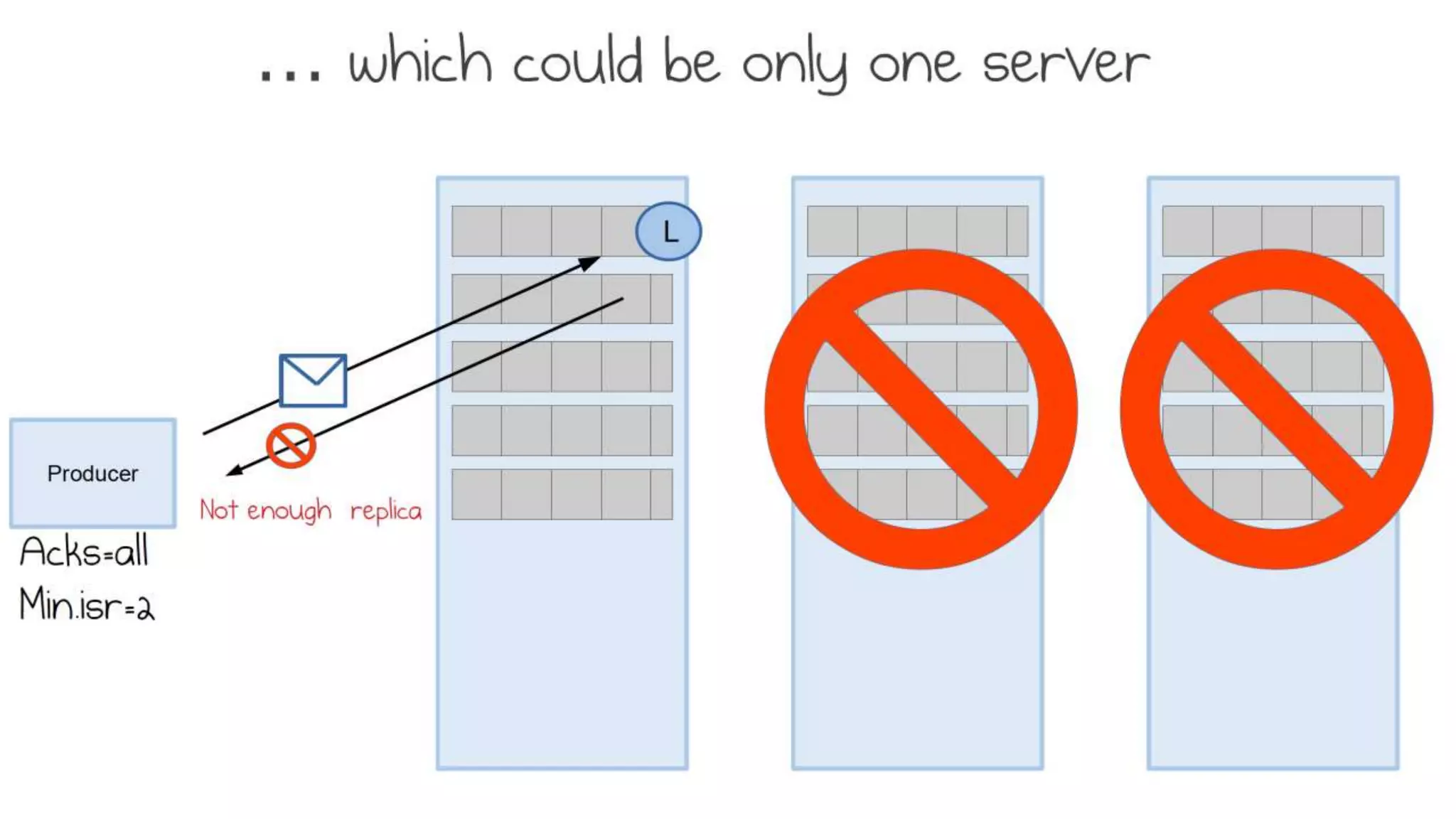

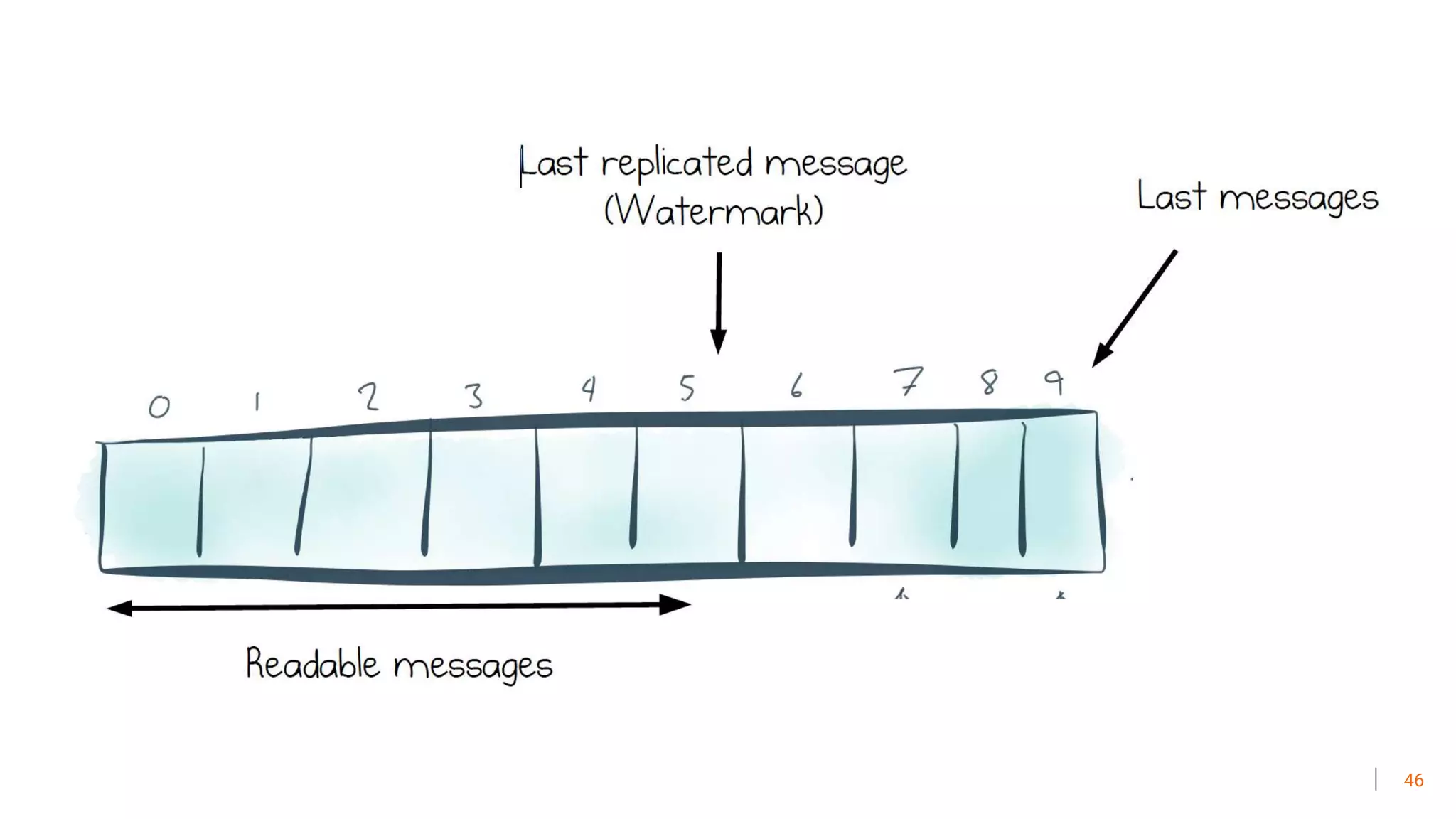

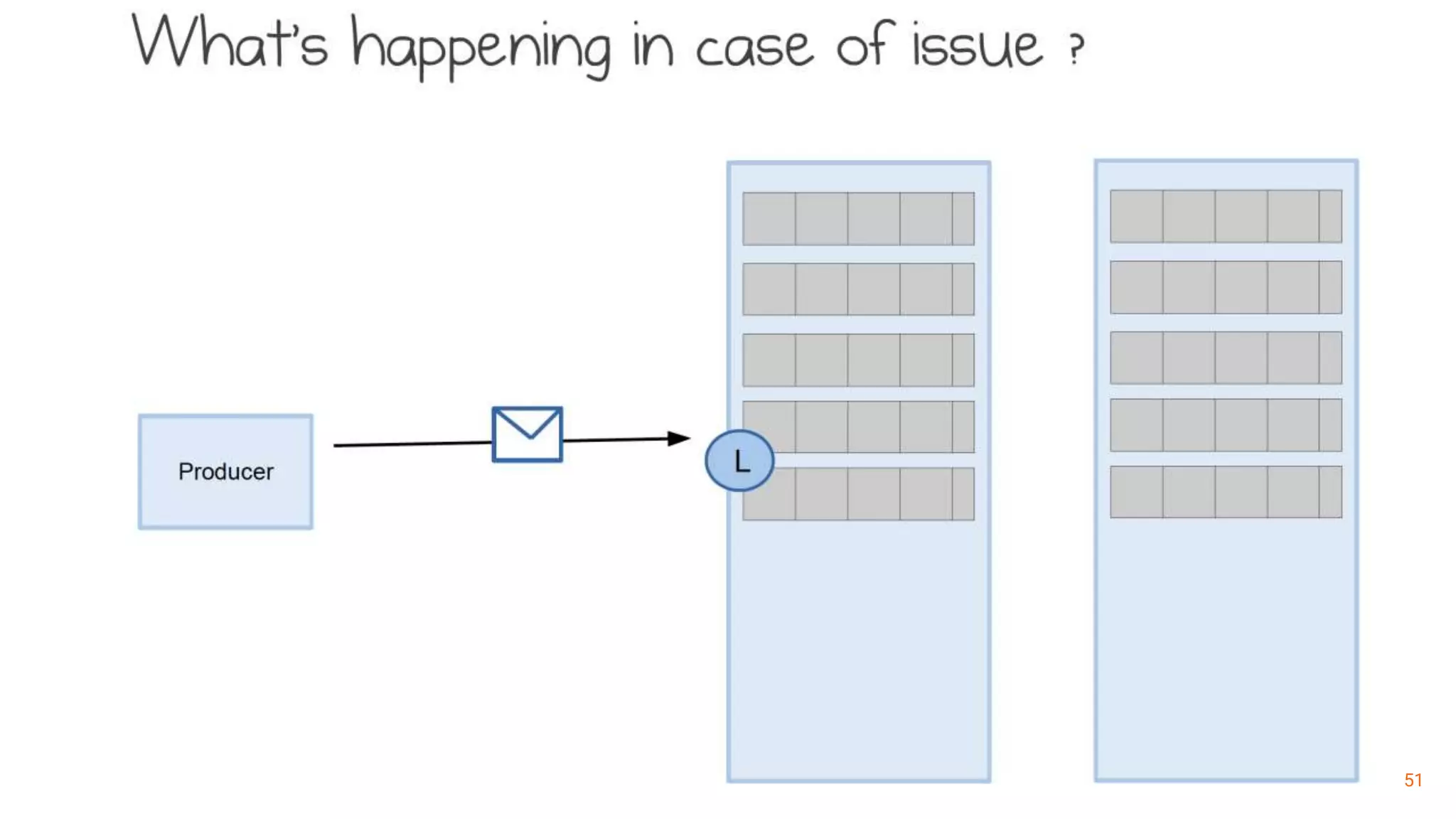

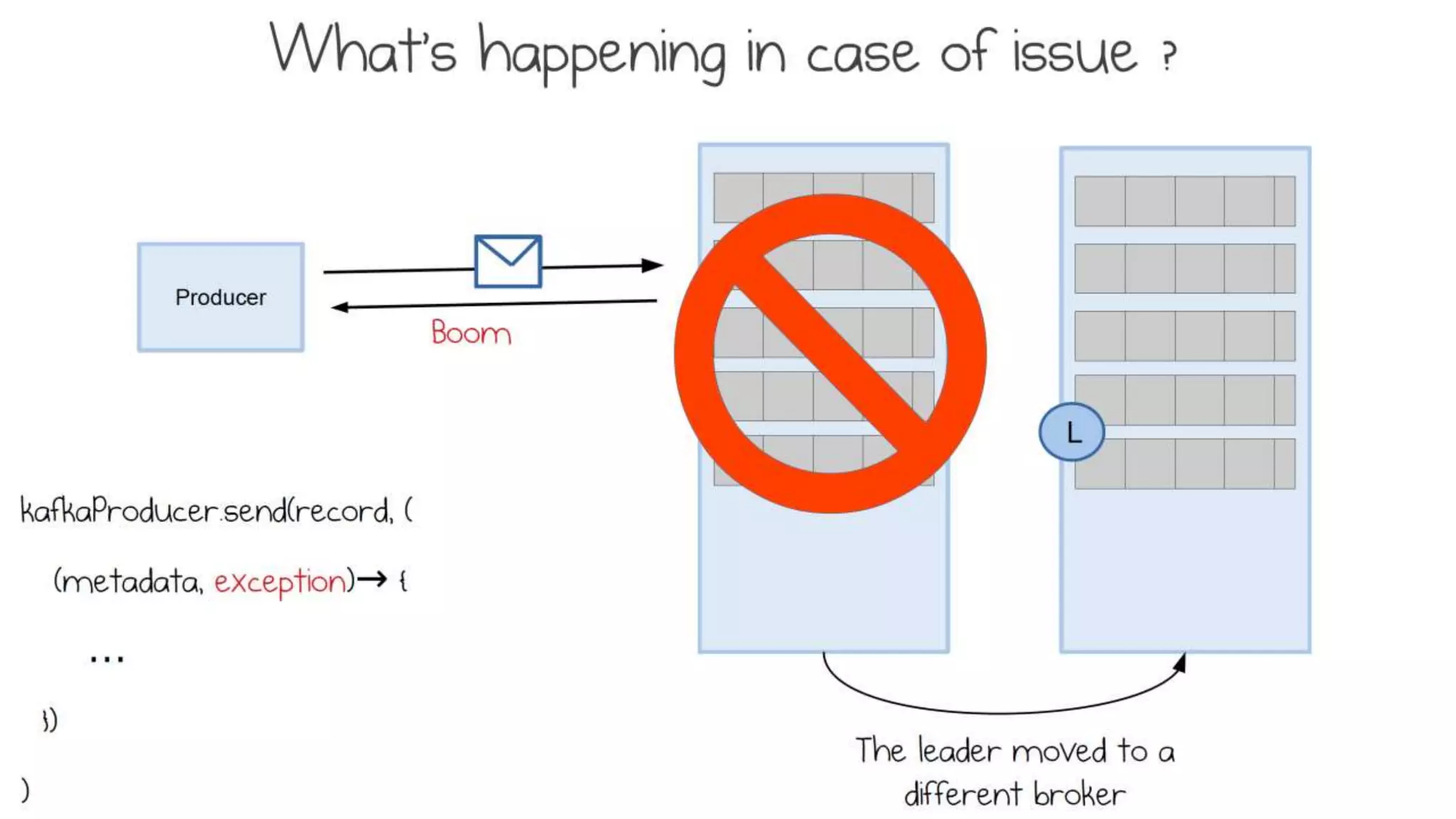

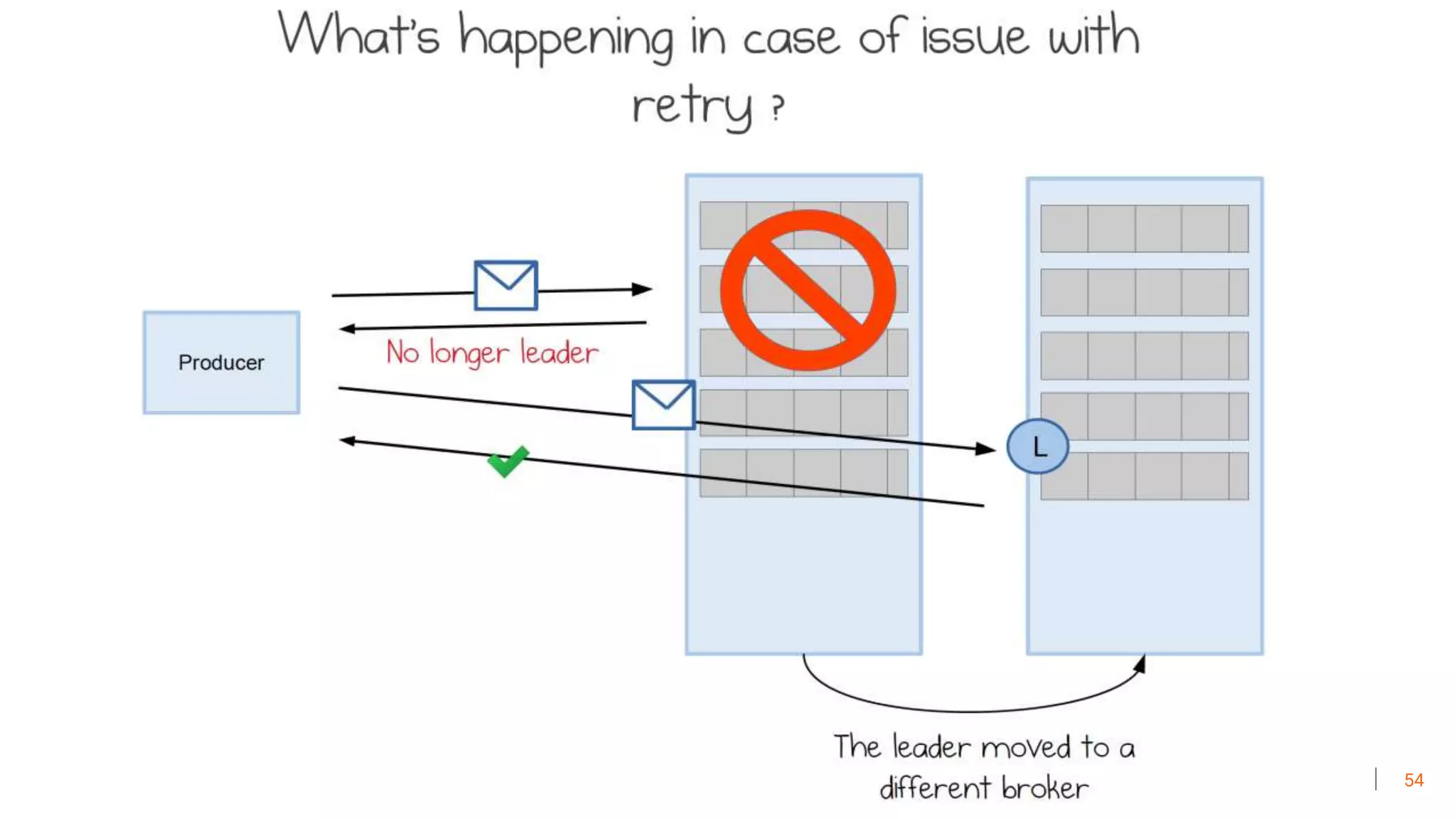

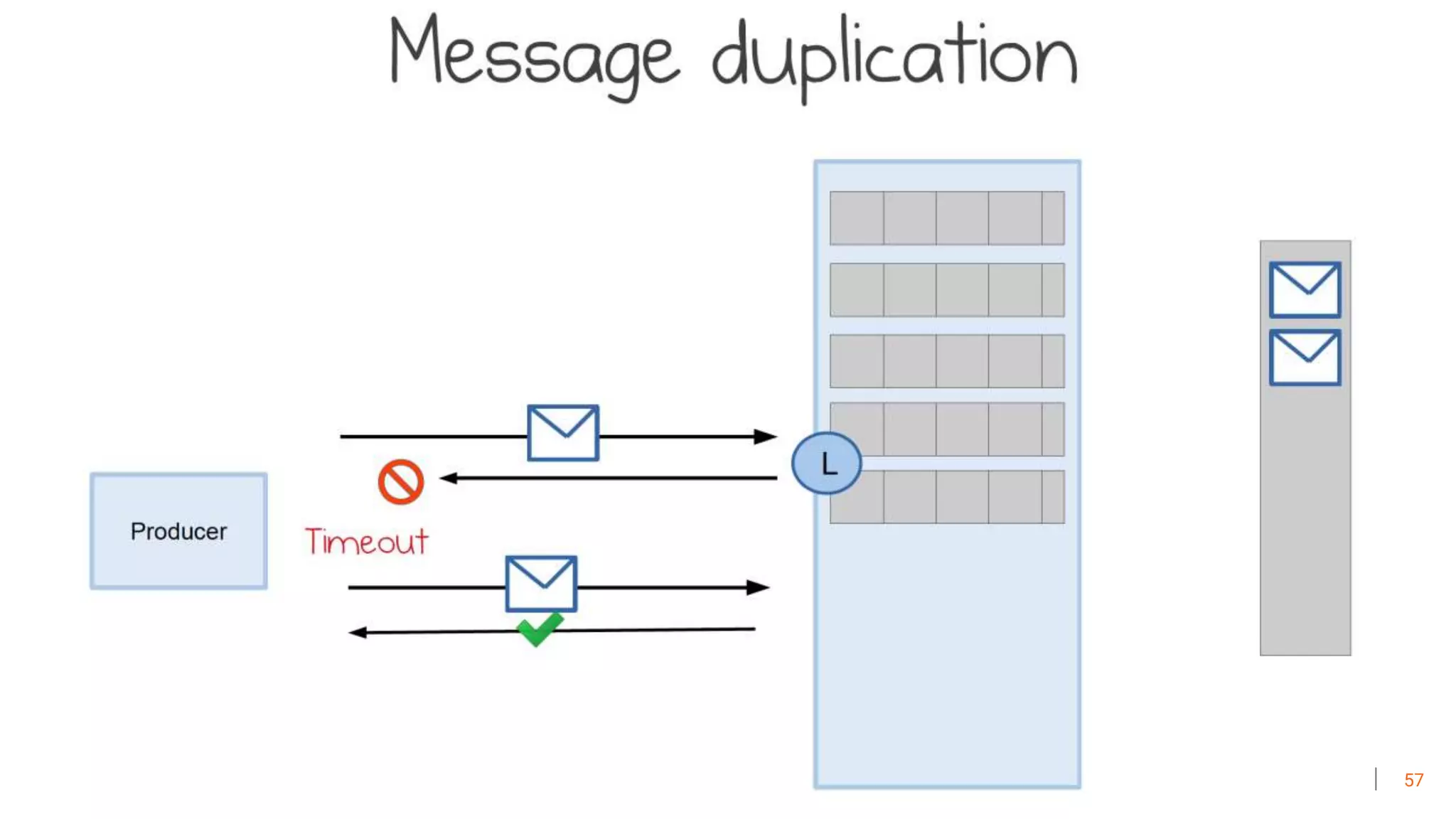

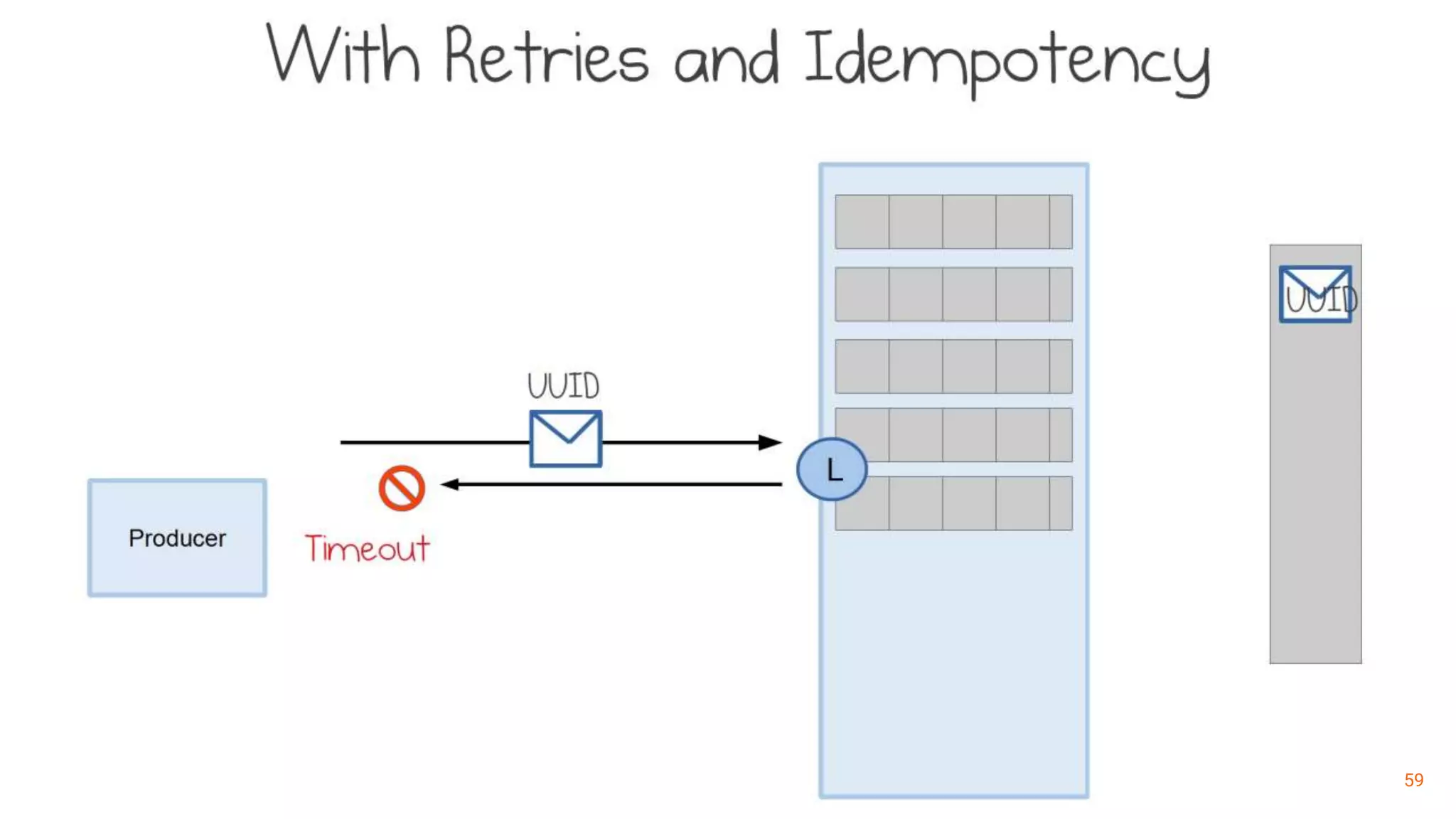

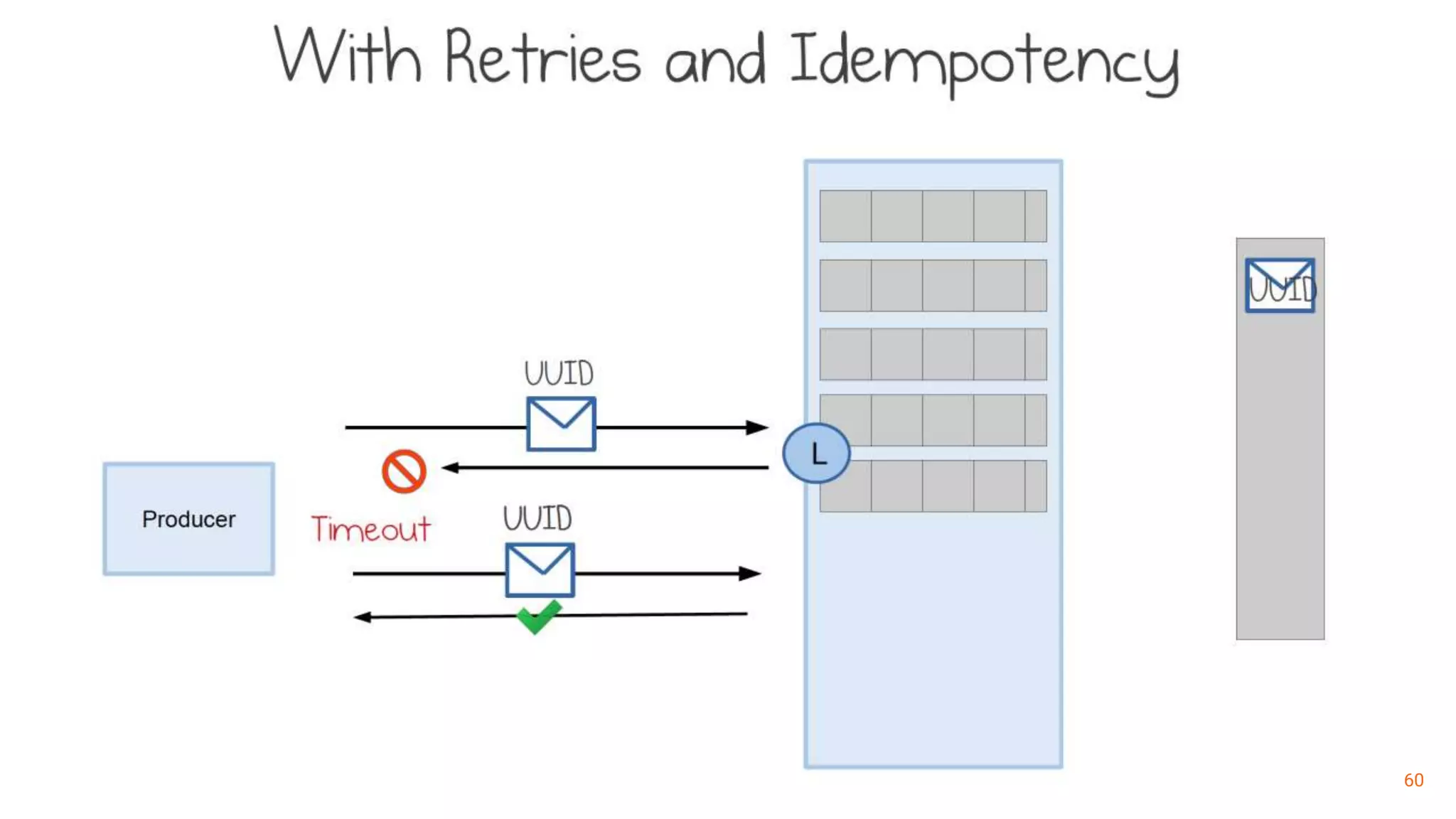

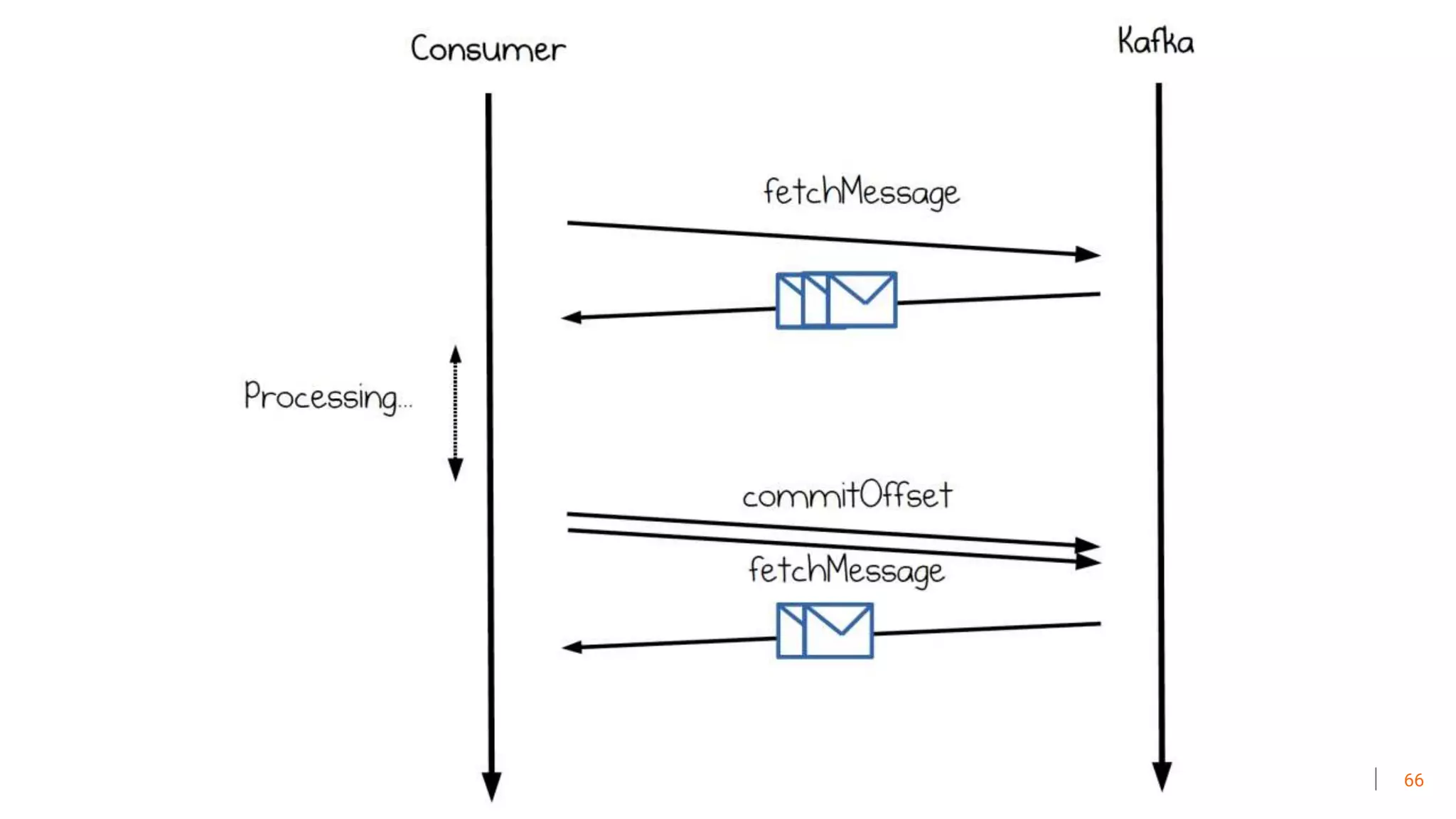

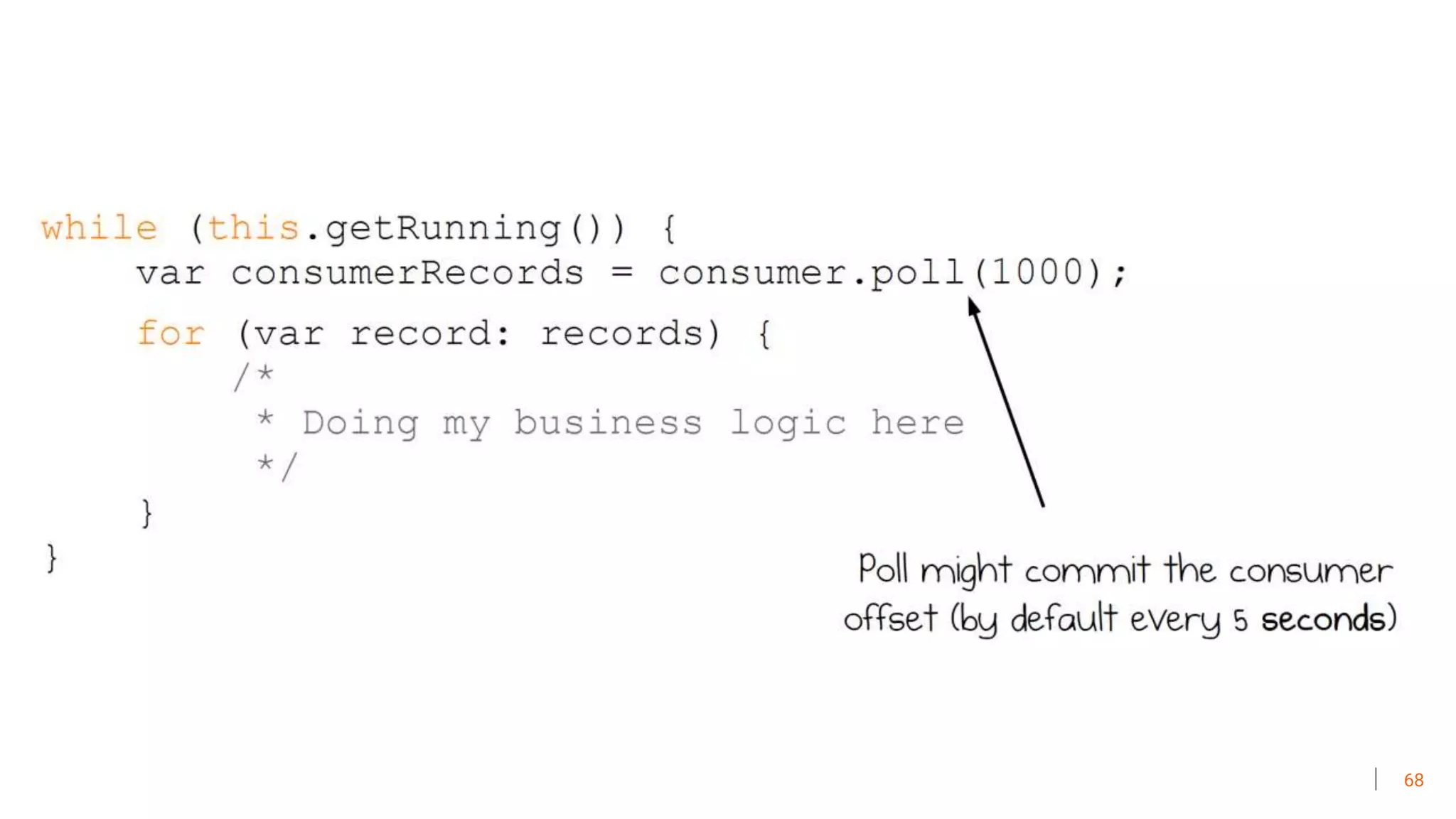

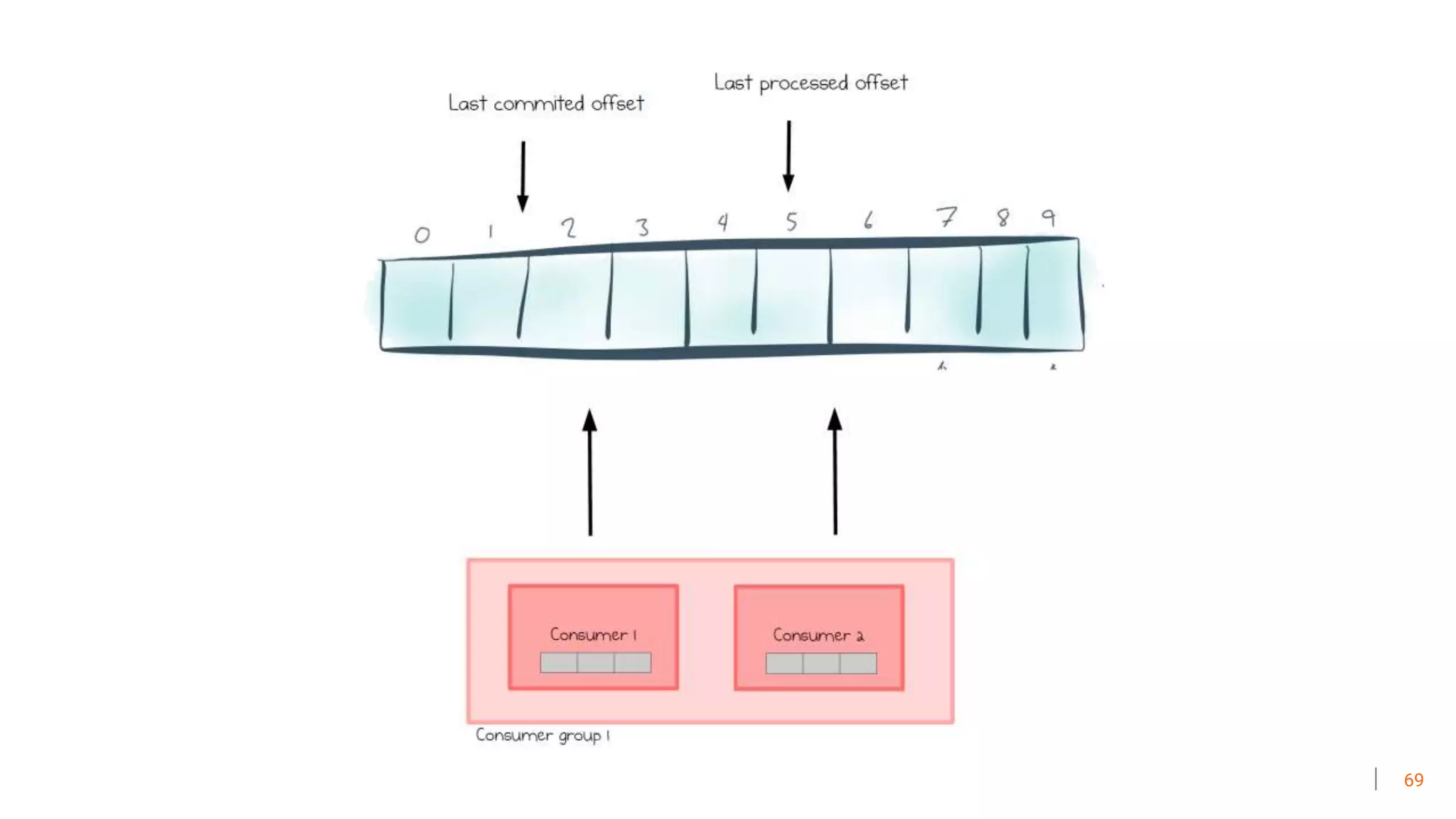

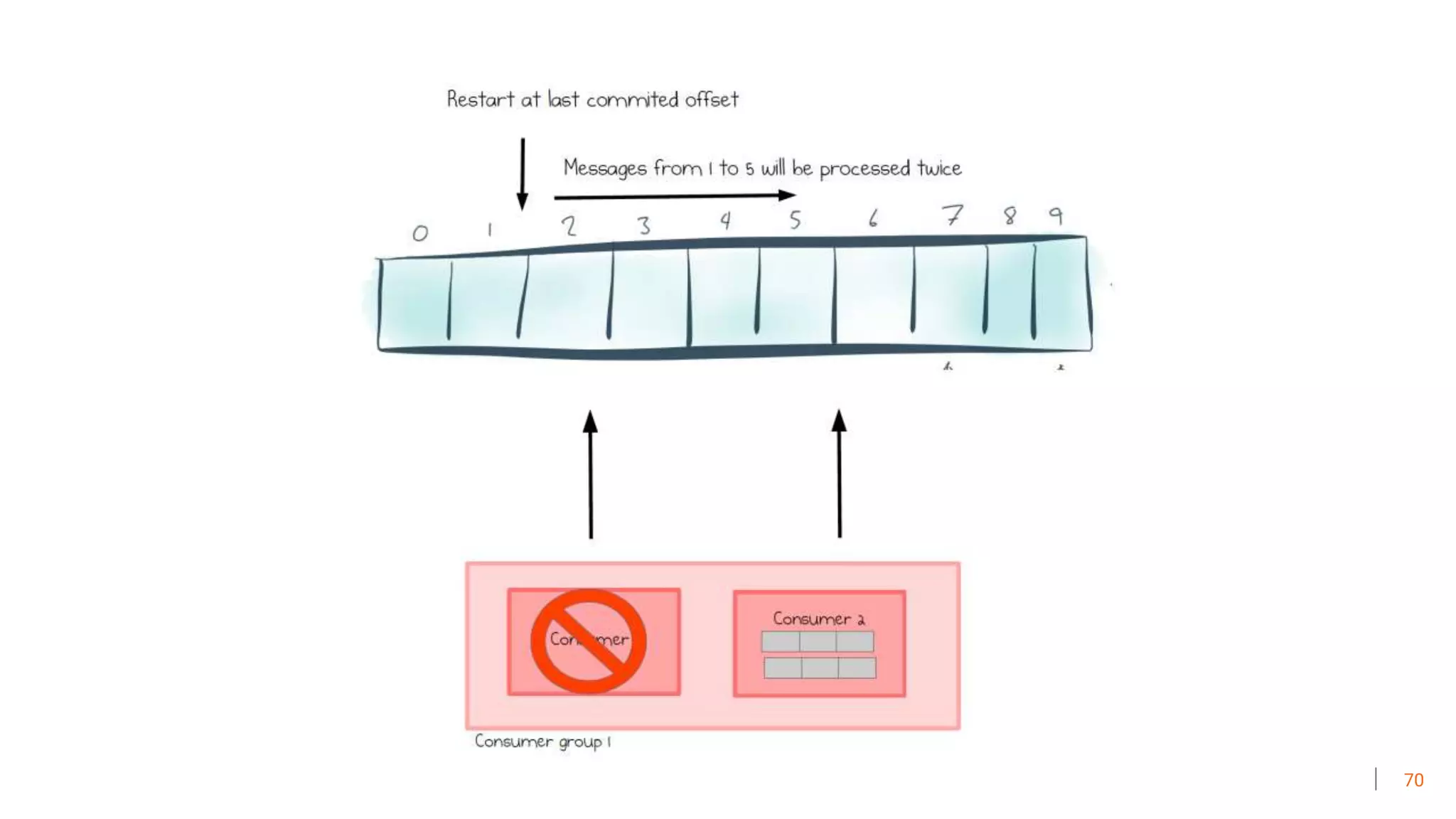

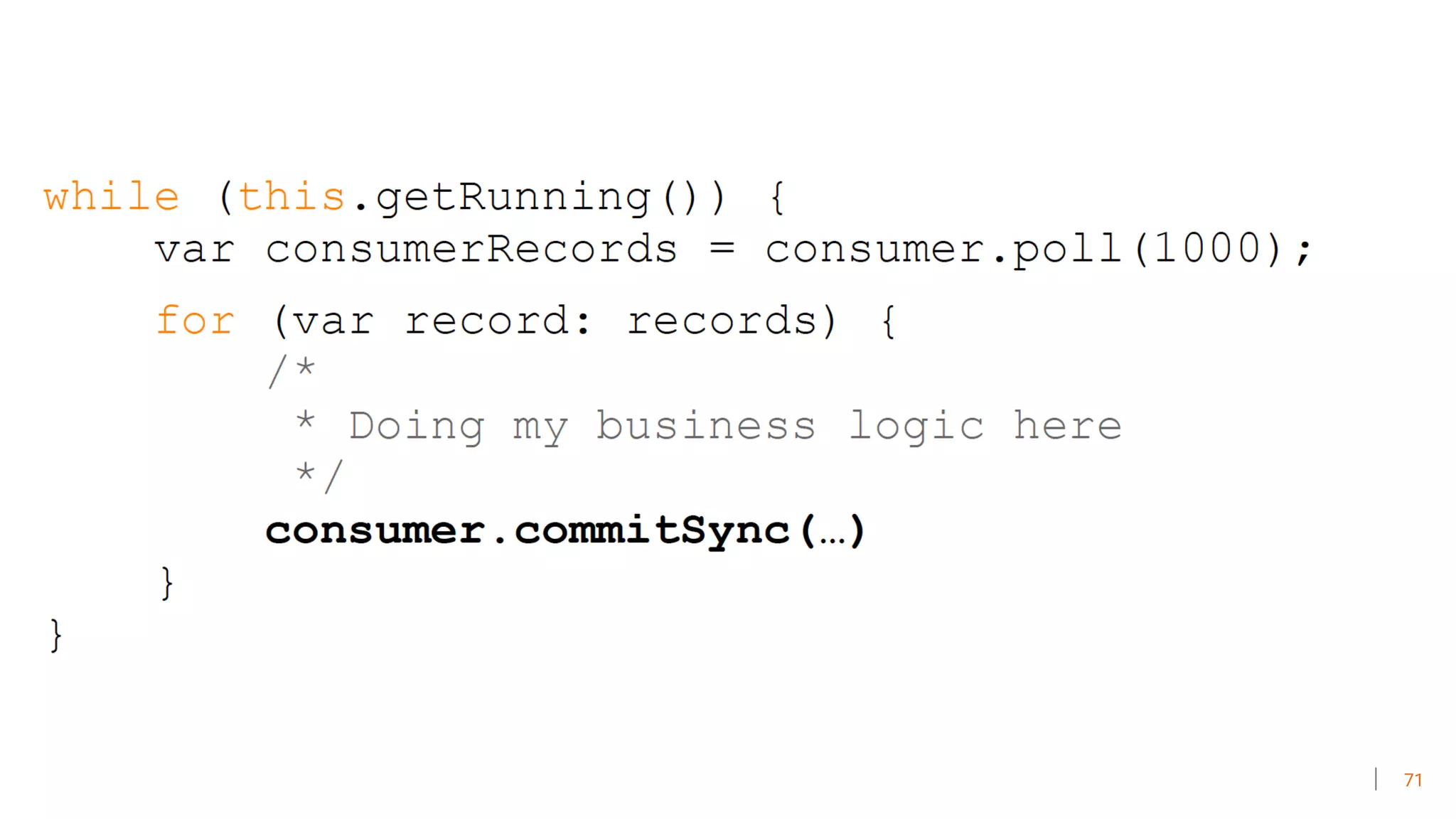

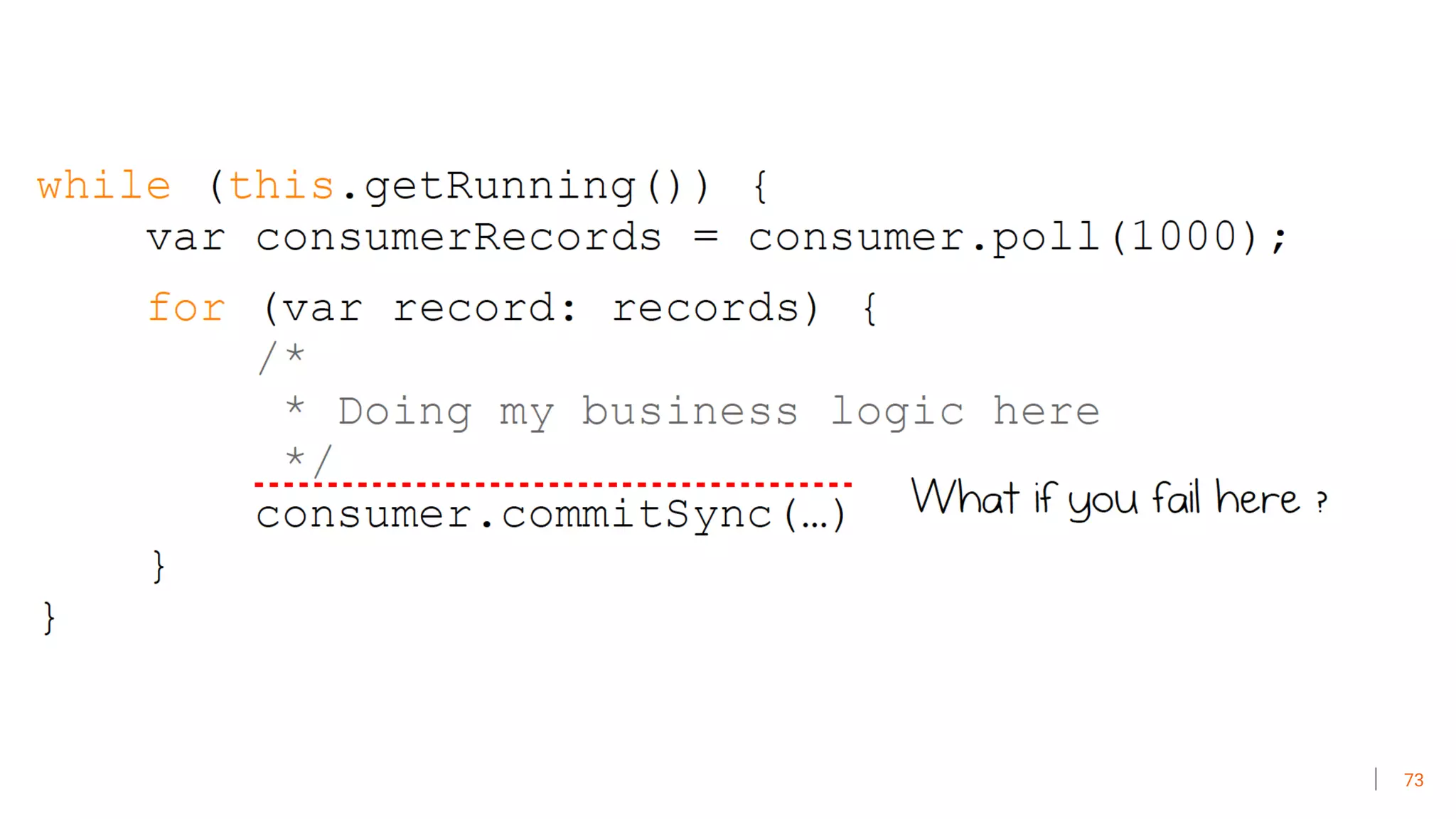

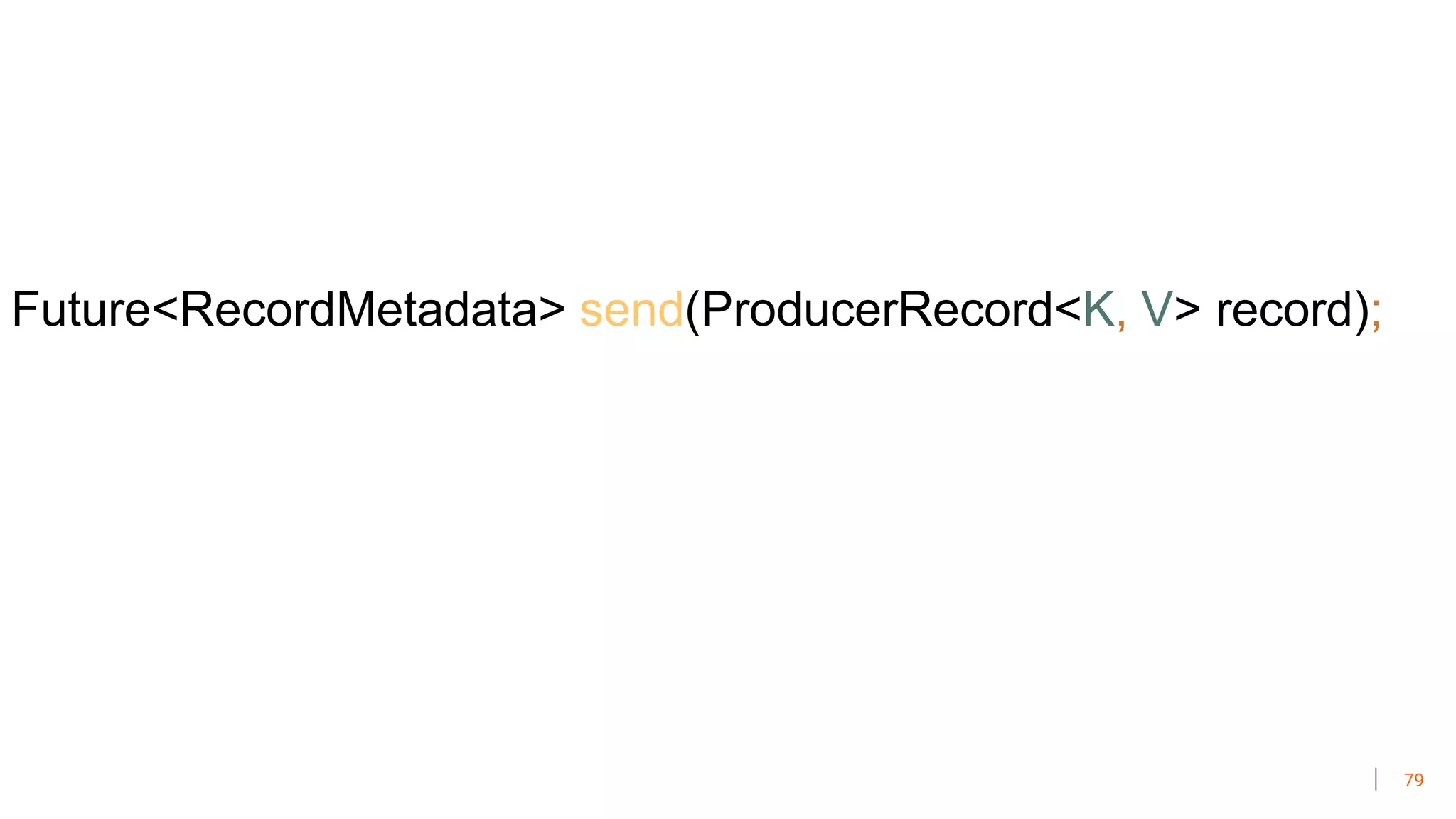

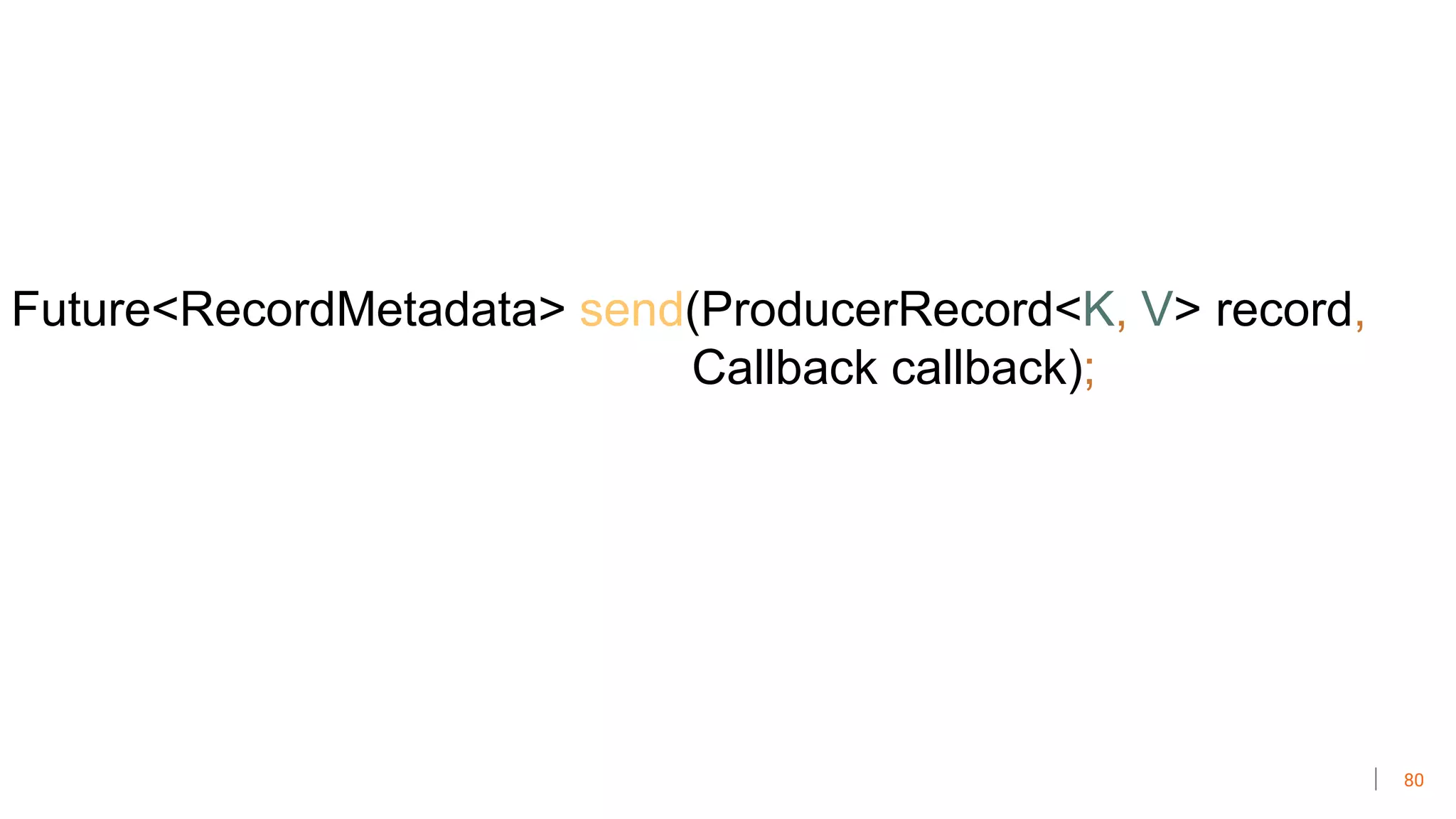

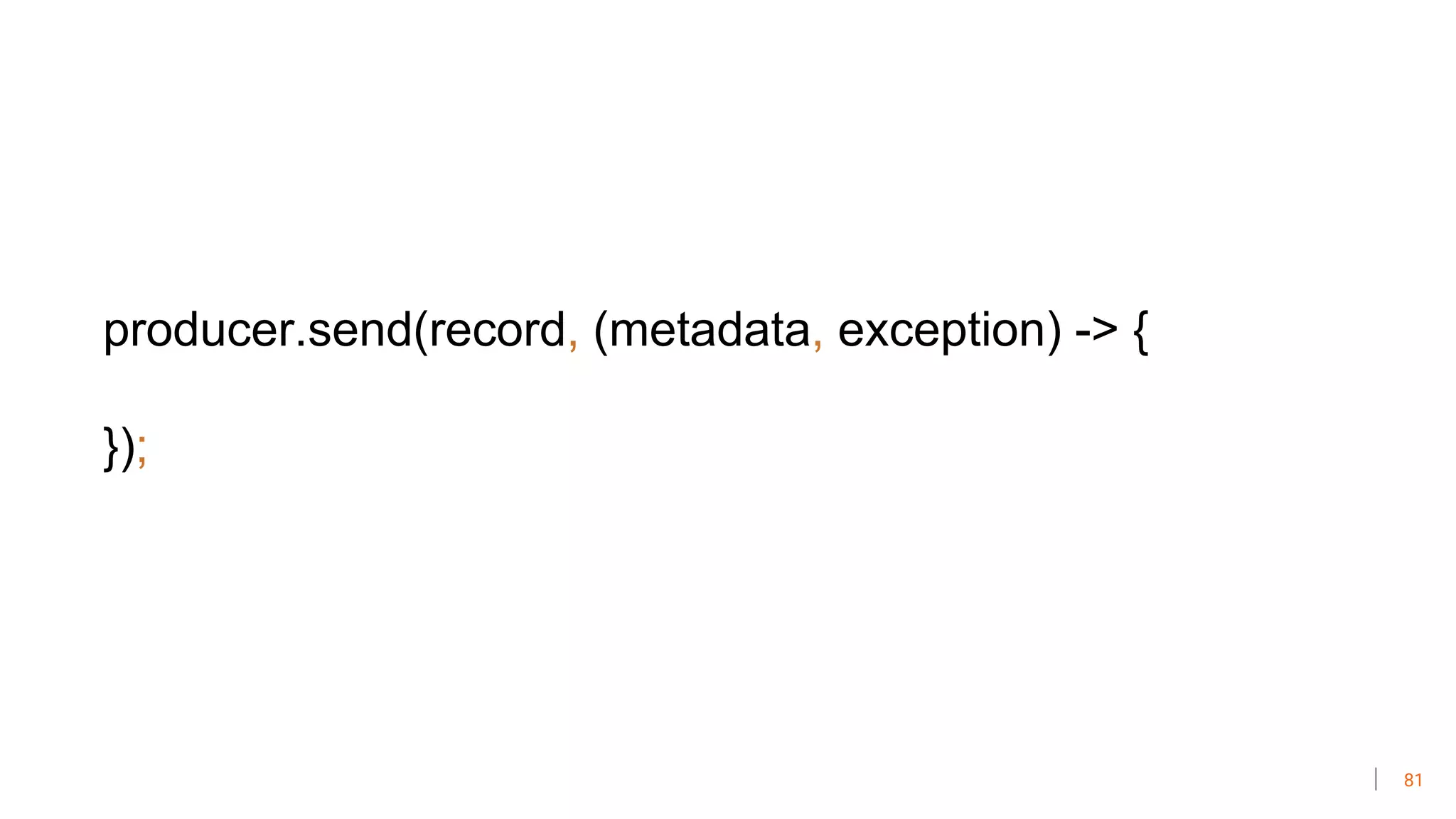

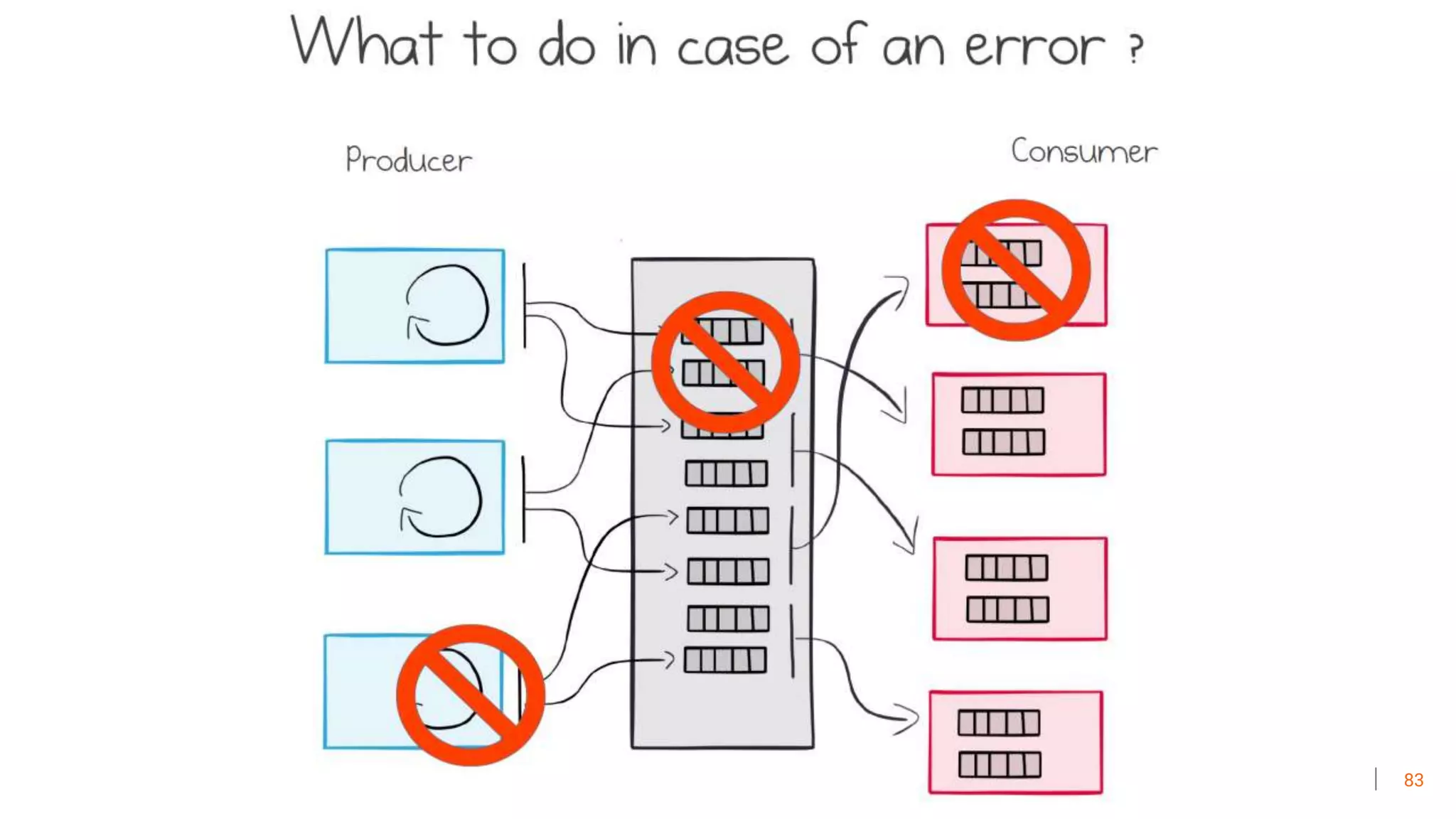

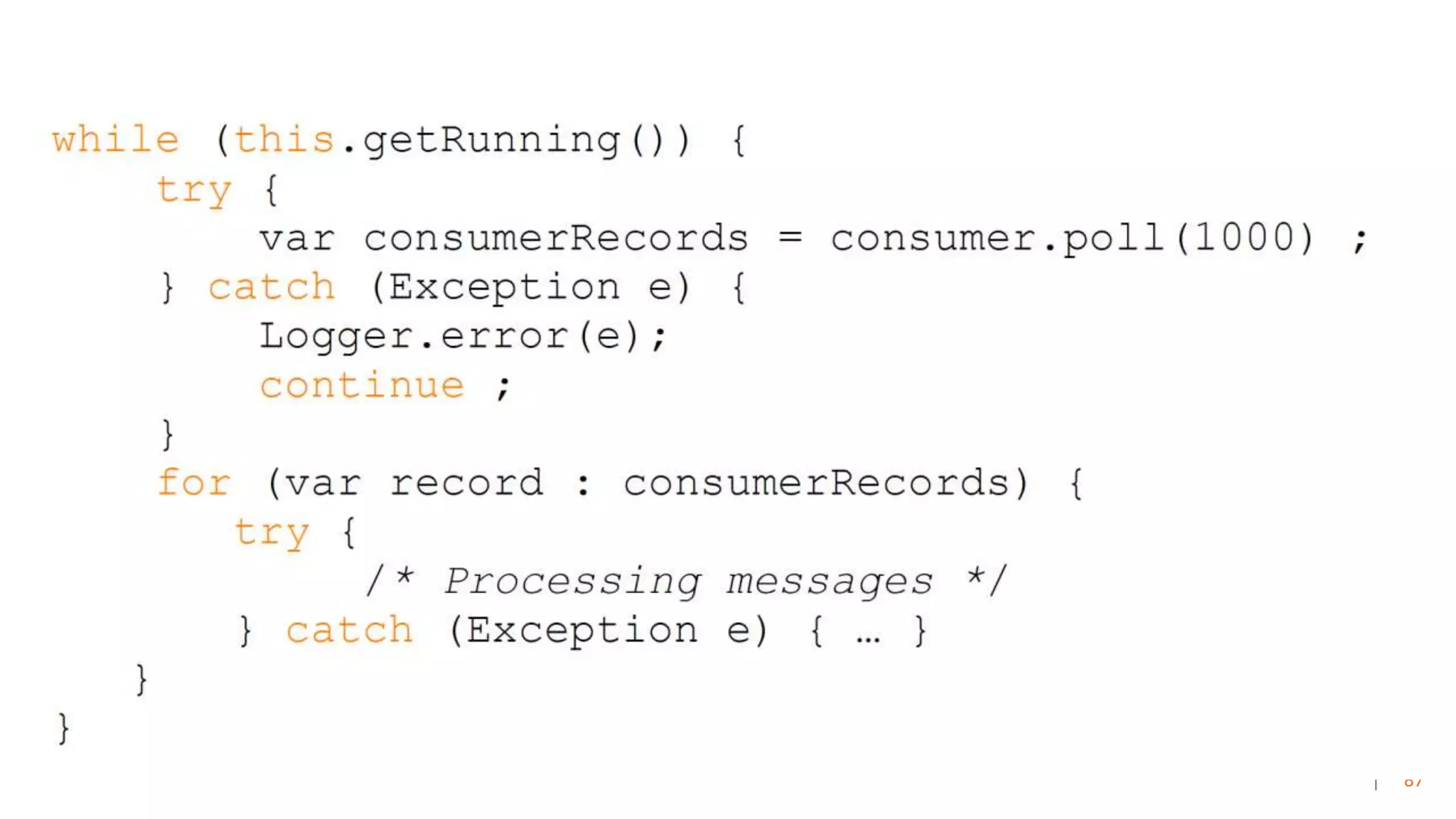

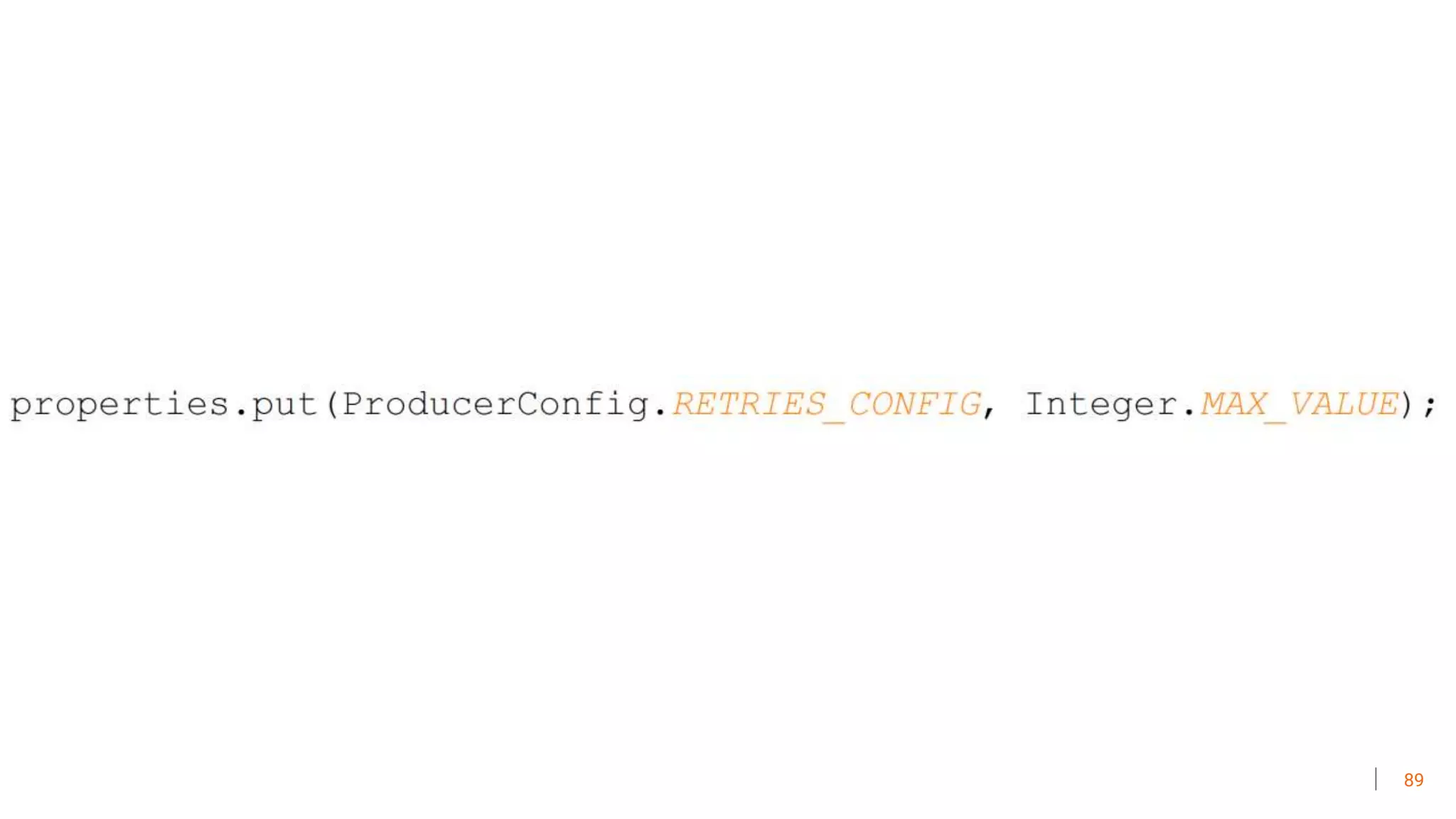

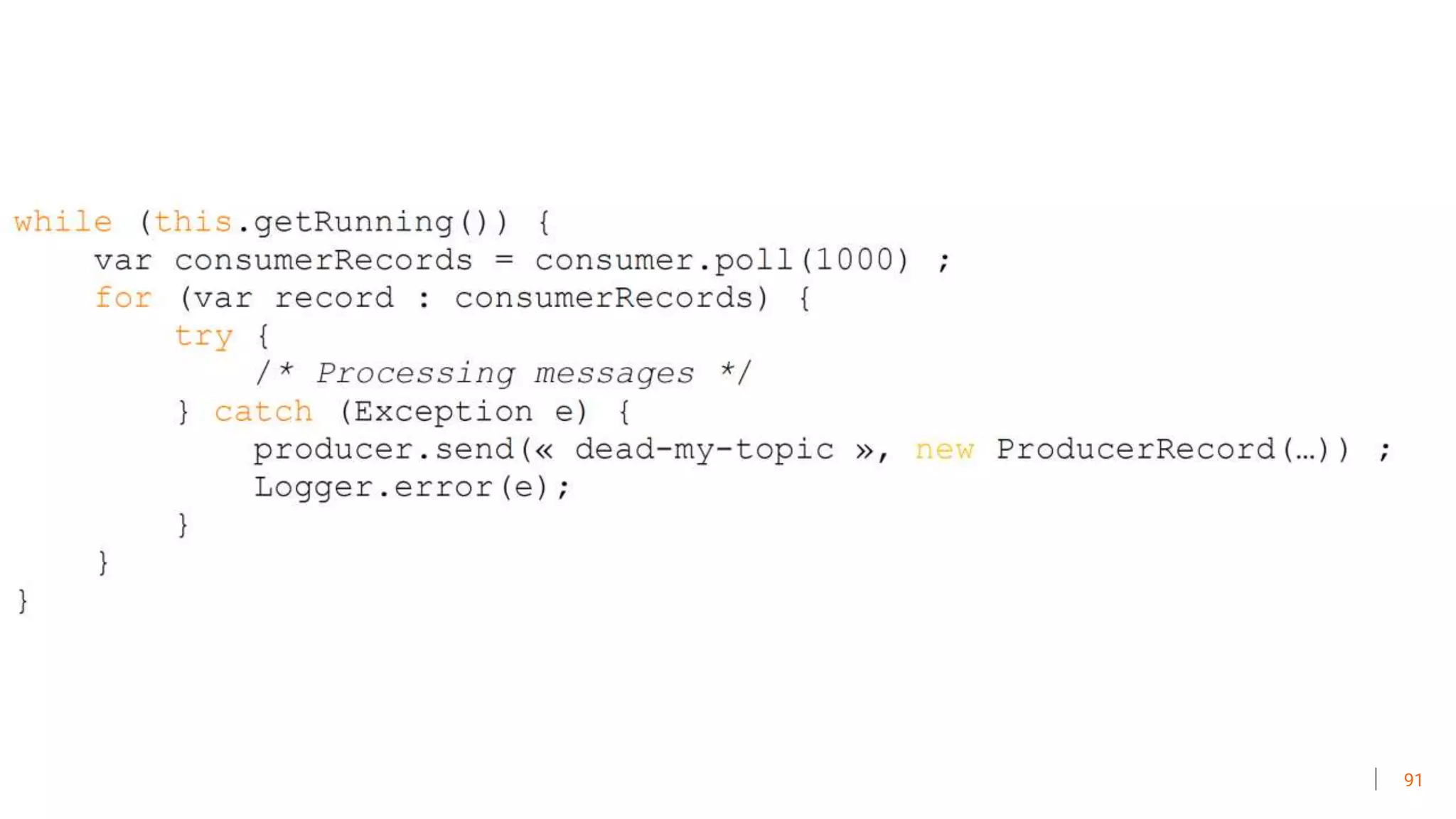

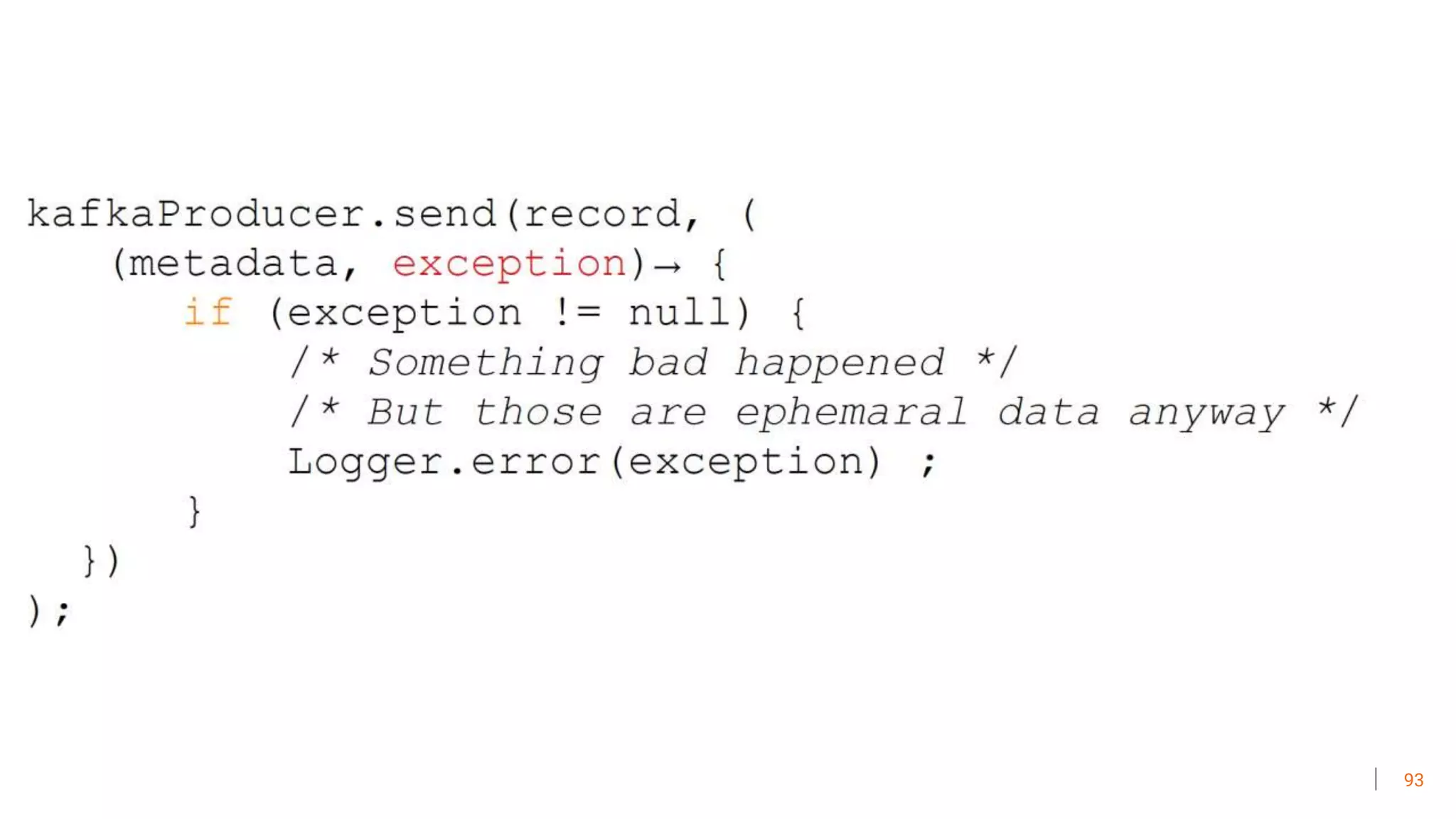

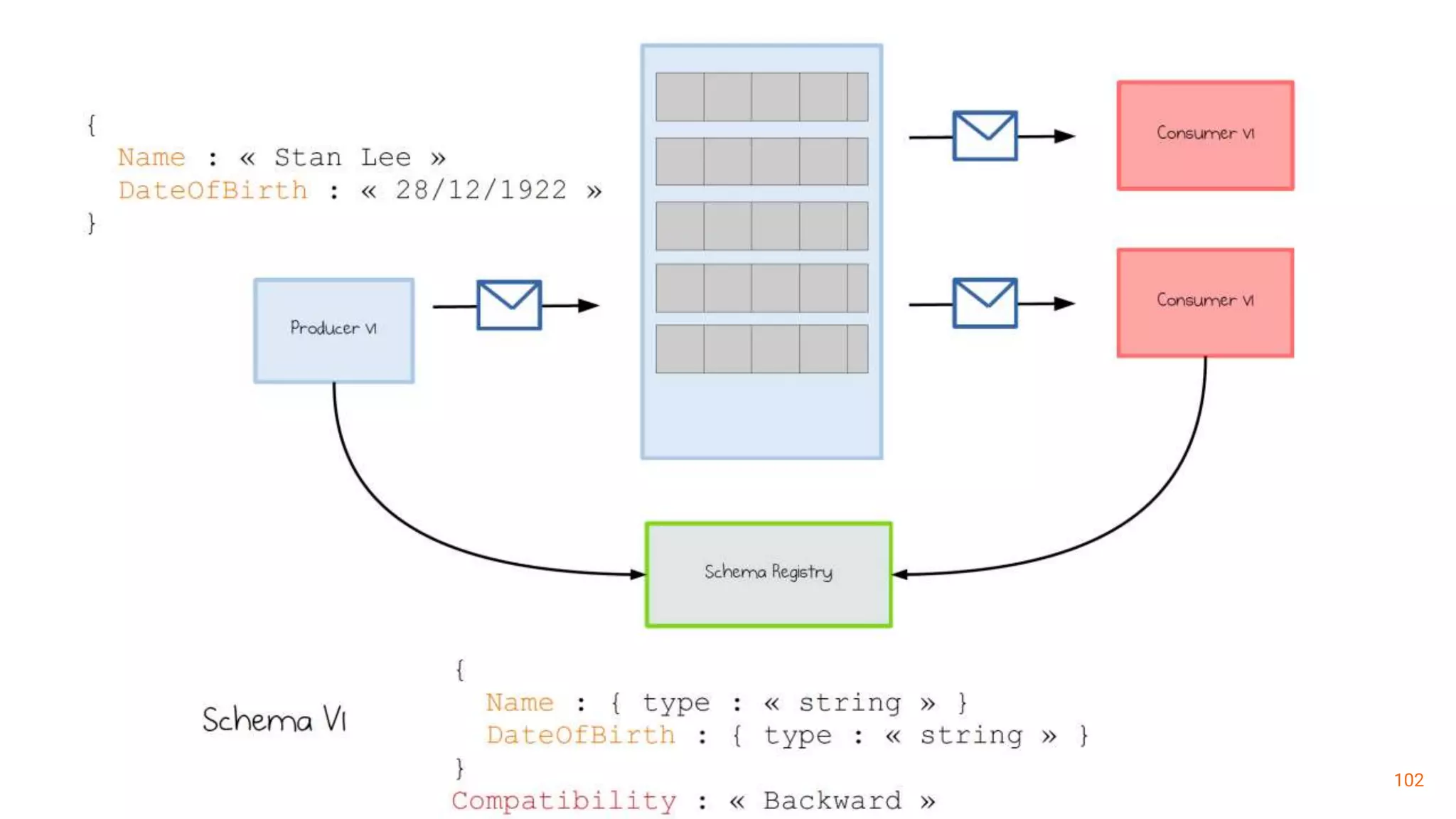

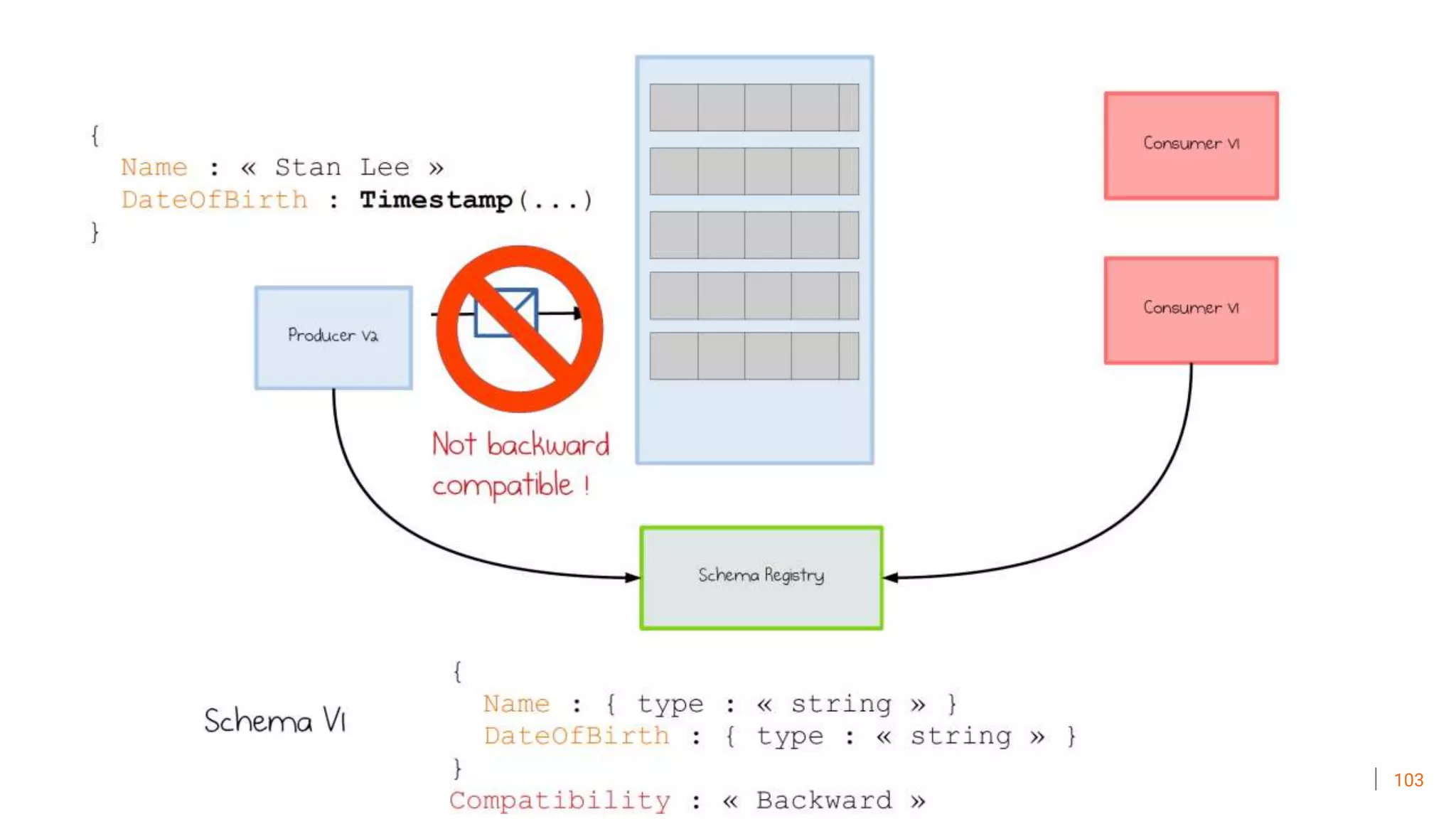

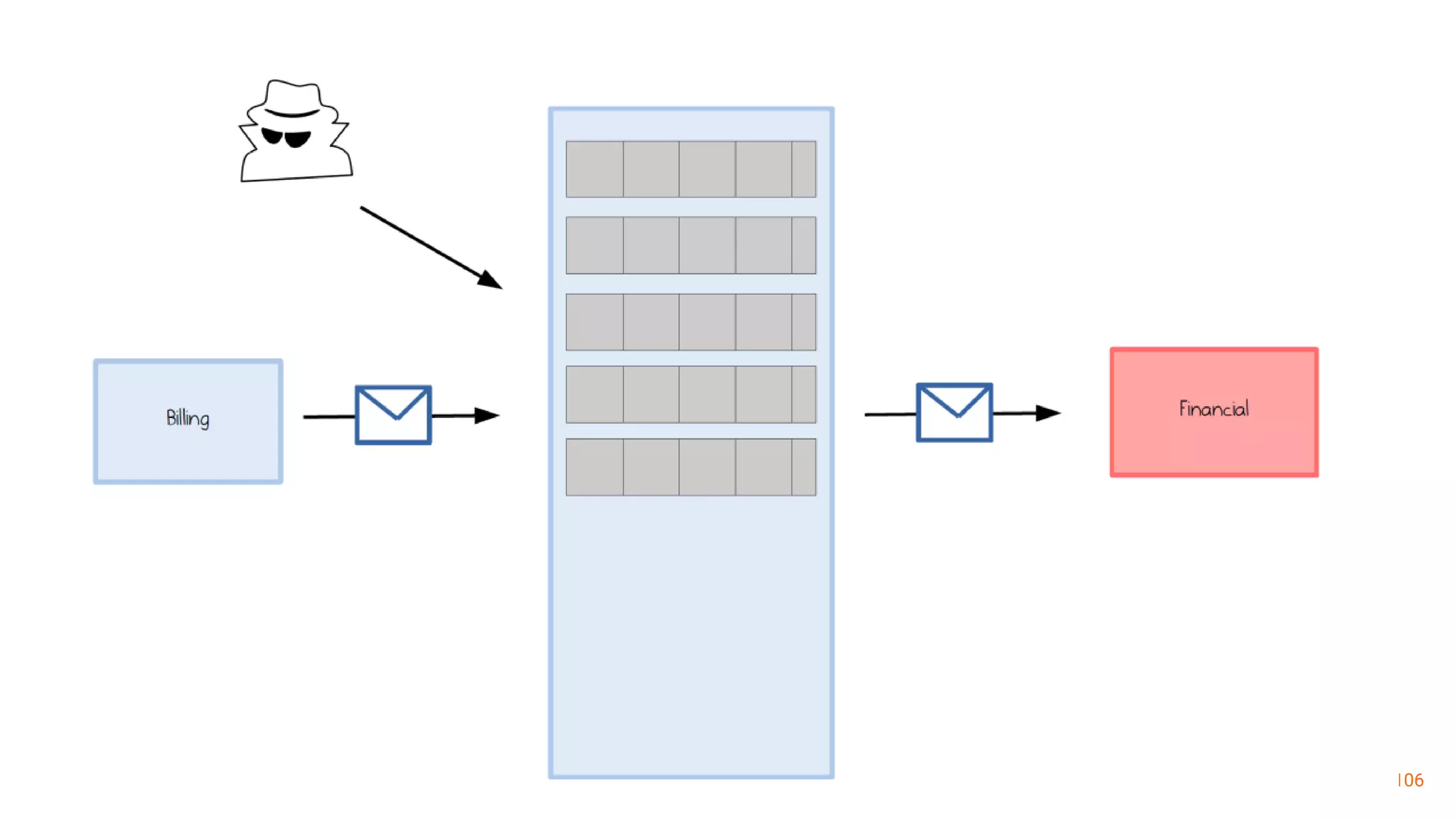

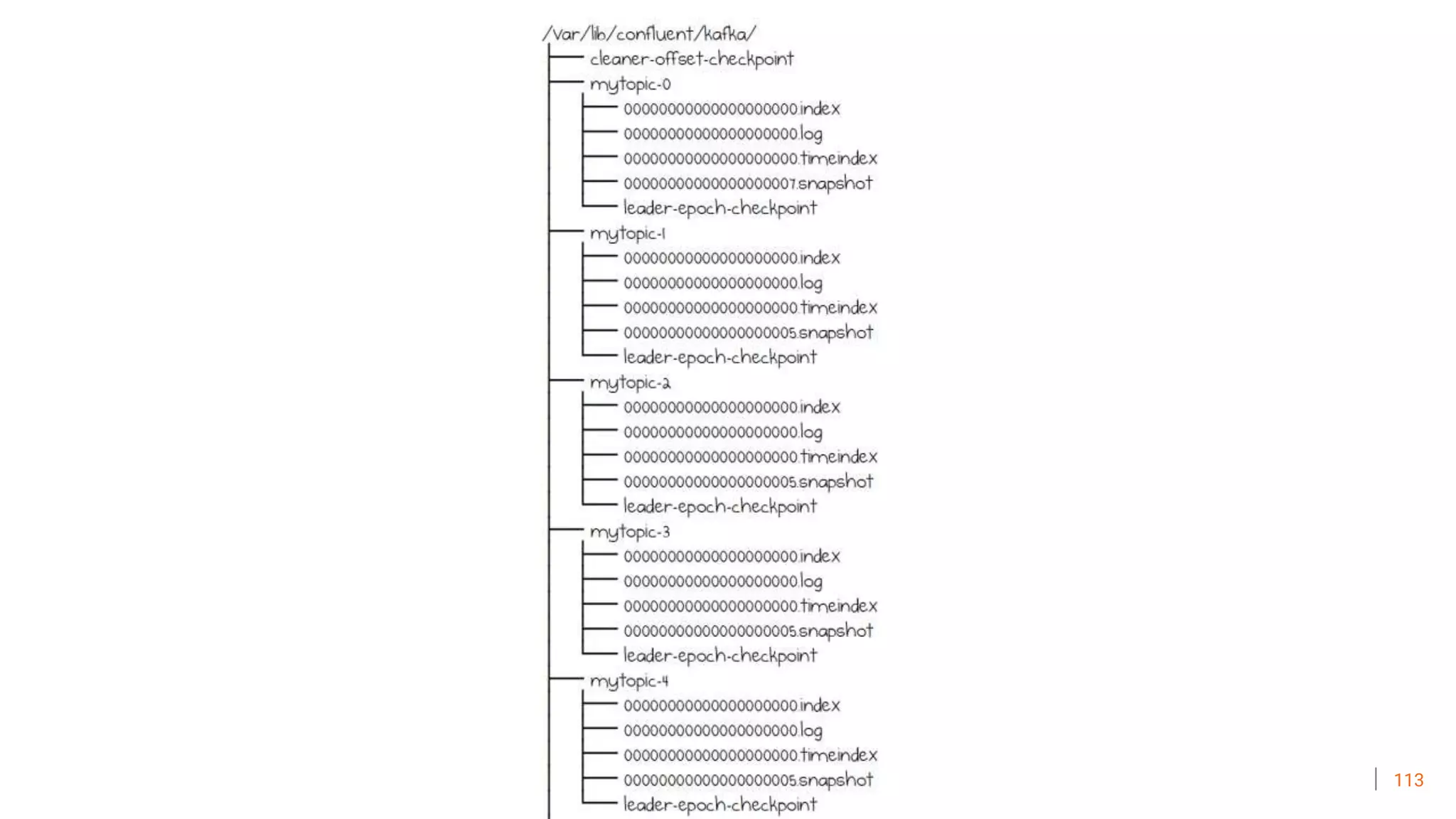

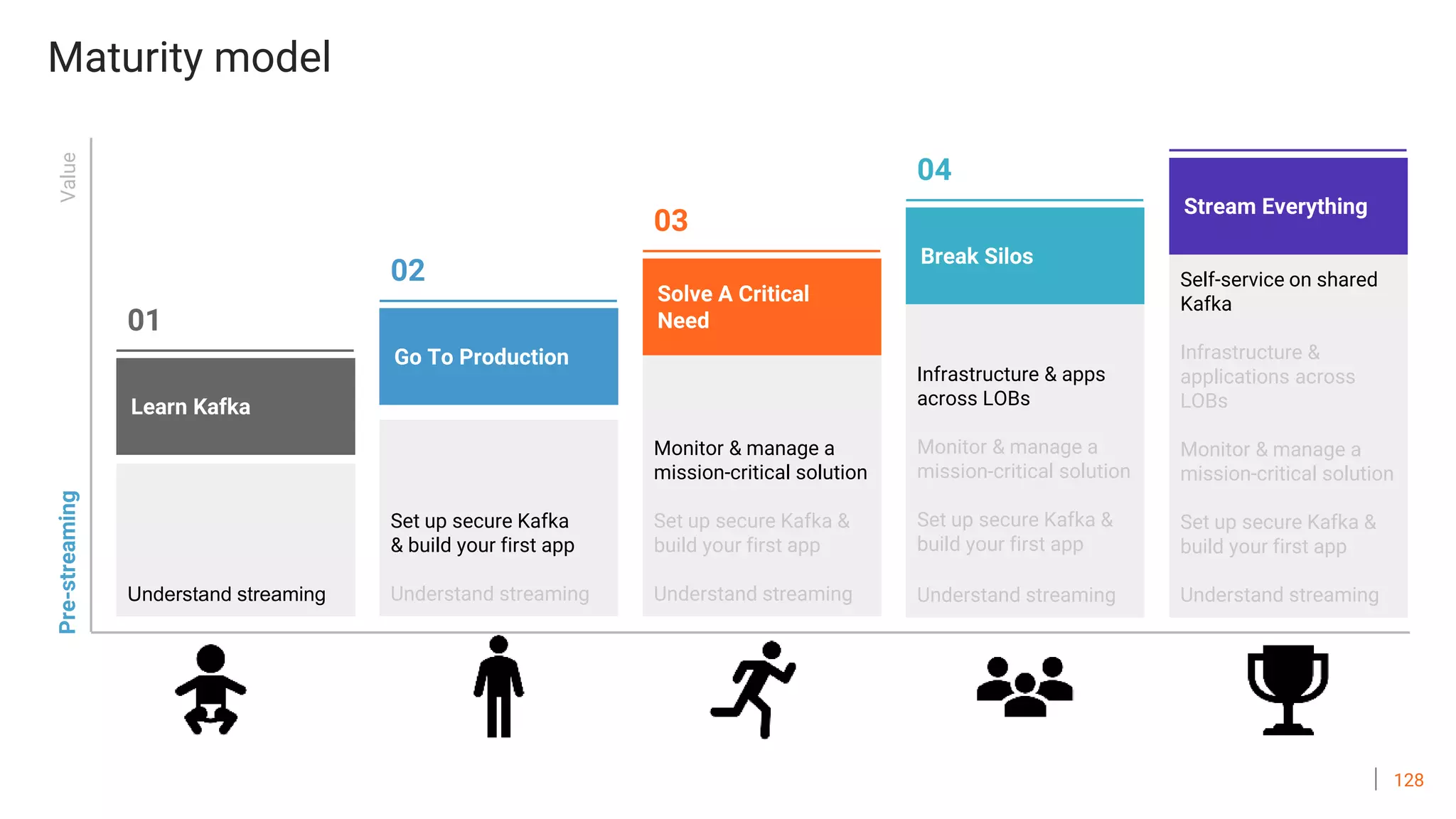

This presentation discusses the challenges and anti-patterns of deploying Apache Kafka, covering key concepts such as data durability, error handling, retries, and idempotency. It emphasizes that configuration choices determine whether a deployment achieves its desired latency and durability, and it addresses common pitfalls such as insufficient monitoring and mismanaged resources. The goal is to help users manage their Kafka deployments effectively and preserve data integrity across systems.
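
As a rough illustration of the latency versus durability trade-off mentioned above, here is a minimal sketch of how producer settings might be chosen. The function name `producer_config`, the `favor_durability` flag, and the numeric values are assumptions made for illustration and are not taken from the presentation; the property names themselves are standard Kafka producer settings.

```python
def producer_config(bootstrap_servers: str, favor_durability: bool = True) -> dict:
    """Build a dict of Kafka producer properties (hypothetical helper).

    The values are illustrative starting points, not recommendations
    from the presentation.
    """
    config = {
        "bootstrap.servers": bootstrap_servers,
        # Small batching delay: trades a little latency for throughput.
        "linger.ms": 5,
    }
    if favor_durability:
        config.update({
            # Wait for all in-sync replicas to acknowledge each write.
            "acks": "all",
            # Let the broker de-duplicate retried sends.
            "enable.idempotence": True,
            # Idempotence requires at most 5 in-flight requests per connection.
            "max.in.flight.requests.per.connection": 5,
            # Upper bound on the total time spent sending, retries included.
            "delivery.timeout.ms": 120000,
        })
    else:
        config.update({
            # Leader-only acknowledgement: lower latency, weaker durability.
            "acks": "1",
            "enable.idempotence": False,
        })
    return config


if __name__ == "__main__":
    for key, value in sorted(producer_config("localhost:9092").items()):
        print(f"{key}={value}")
```

A dict like this could be passed to a client library that accepts these property names. Durability also depends on topic and broker settings such as the replication factor and `min.insync.replicas`, which this sketch does not cover.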