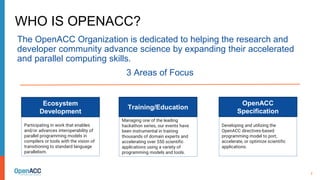

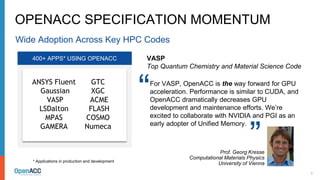

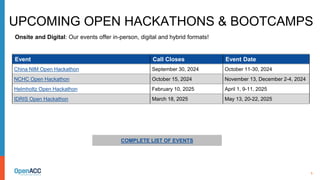

The document outlines the OpenACC organization's efforts to enhance accelerated and parallel computing within the research community through hackathons, training, and the development of the OpenACC specification. Key highlights include upcoming open hackathons, collaborative projects, and the promotion of the 2024 Open Accelerated Computing Summit for sharing research advancements. It also emphasizes the importance of interoperability among programming models and features various programming and performance resources available to the community.