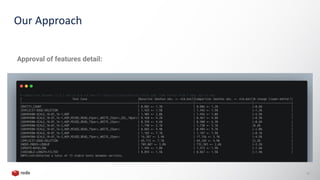

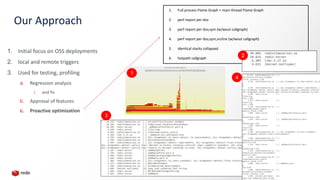

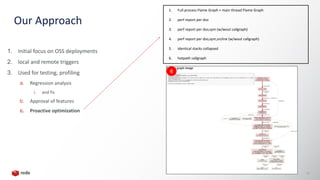

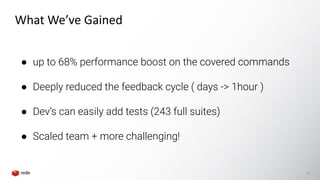

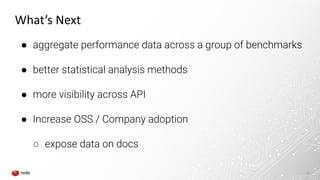

The document discusses performance testing and analysis in Redis, stressing that performance has to be measured before it can be improved. The approach relies on automated performance tests that catch regressions early, which significantly shortens the feedback cycle. Future goals include better statistical analysis methods and wider adoption of the performance data across open source and company projects.
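The idea of catching regressions automatically can be illustrated with a minimal sketch. It assumes that each benchmark produces repeated throughput samples (ops/sec) for a baseline build and a candidate build, and that a drop in median throughput beyond a fixed fraction counts as a regression. The function name `detect_regression` and the 5% default threshold are hypothetical and are not taken from Redis's own tooling. A fixed threshold on medians is deliberately simple. The more rigorous statistical methods the summary lists as a future goal would replace exactly this step.

```python
from statistics import median


def detect_regression(baseline, candidate, threshold=0.05):
    """Compare median throughput of two sample sets (hypothetical helper).

    baseline, candidate: throughput samples (ops/sec) from repeated runs.
    threshold: fractional drop in median throughput treated as a regression
               (assumed default of 5%, chosen only for illustration).
    Returns (is_regression, relative_change).
    """
    if not baseline or not candidate:
        raise ValueError("need at least one sample in each set")
    base = median(baseline)
    if base <= 0:
        raise ValueError("baseline throughput must be positive")
    cand = median(candidate)
    # Negative change means the candidate is slower than the baseline.
    change = (cand - base) / base
    return change < -threshold, change


if __name__ == "__main__":
    flagged, change = detect_regression(
        [100000, 101000, 99500],  # baseline runs, median 100000
        [93000, 94000, 92500],    # candidate runs, median 93000
    )
    print(flagged, round(change, 3))
```

Using the median rather than the mean makes the check less sensitive to a single noisy run. A CI job could call such a check for every benchmark on every commit and fail the build when any of them is flagged.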