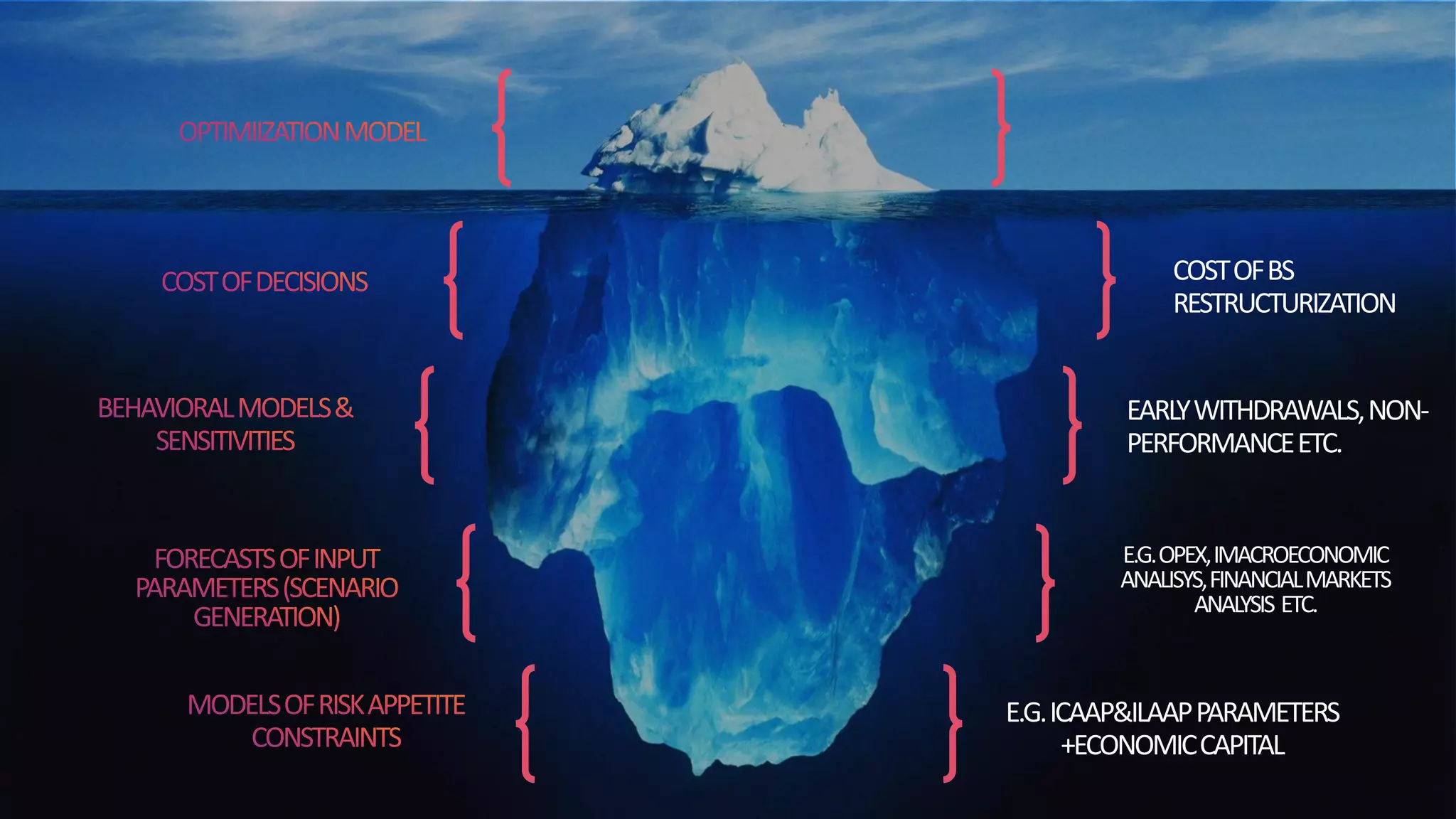

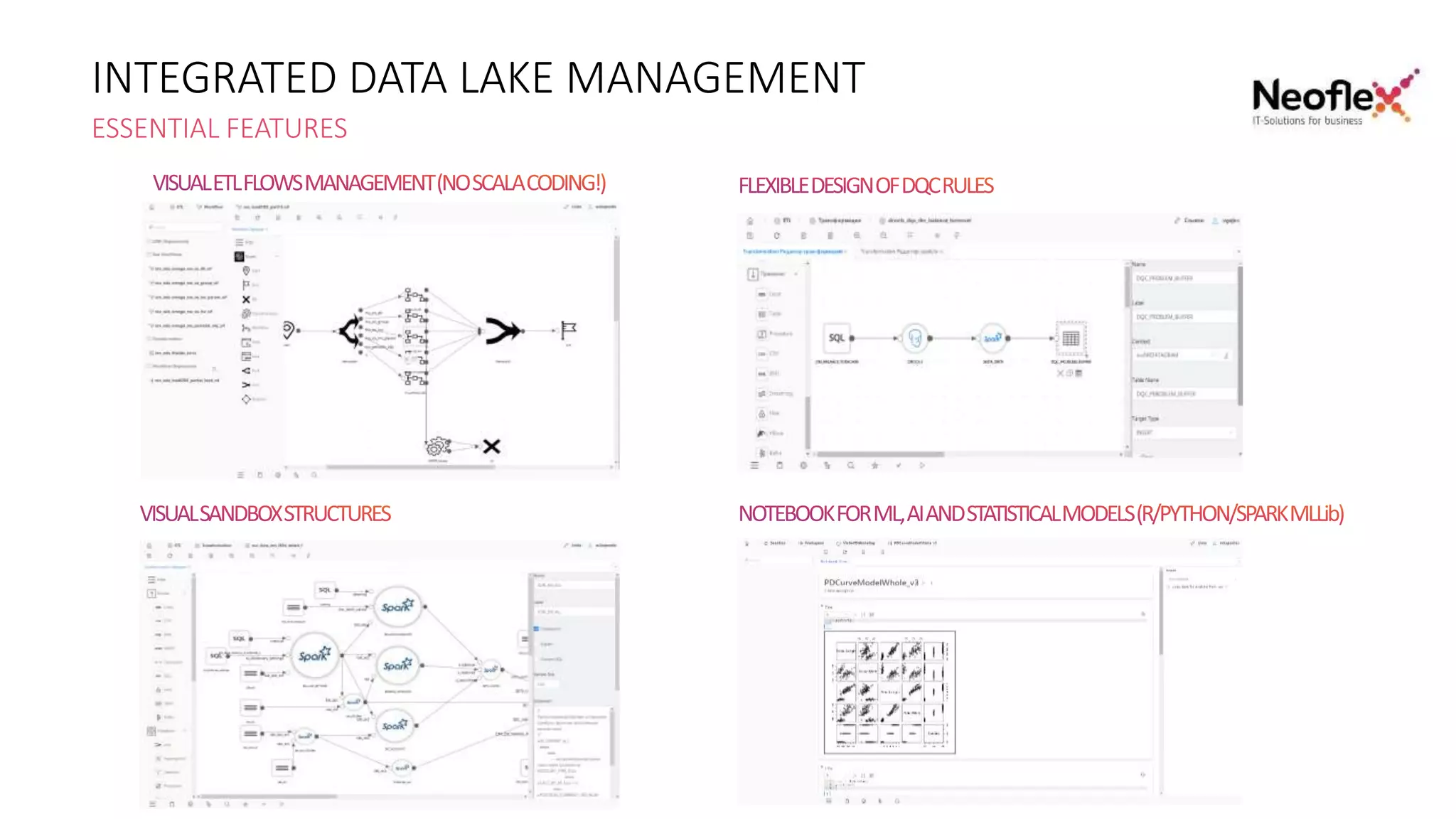

The document discusses the need for EU banks to optimize their balance sheets as regulatory constraints and intense competition weigh on profitability. It advocates a new treasury operating model with stronger governance and data capabilities, which could increase net interest income by 10-15% while reducing balance sheet consumption. It also highlights the complexity of implementing advanced modeling techniques and the significant data management requirements of effective treasury optimization.