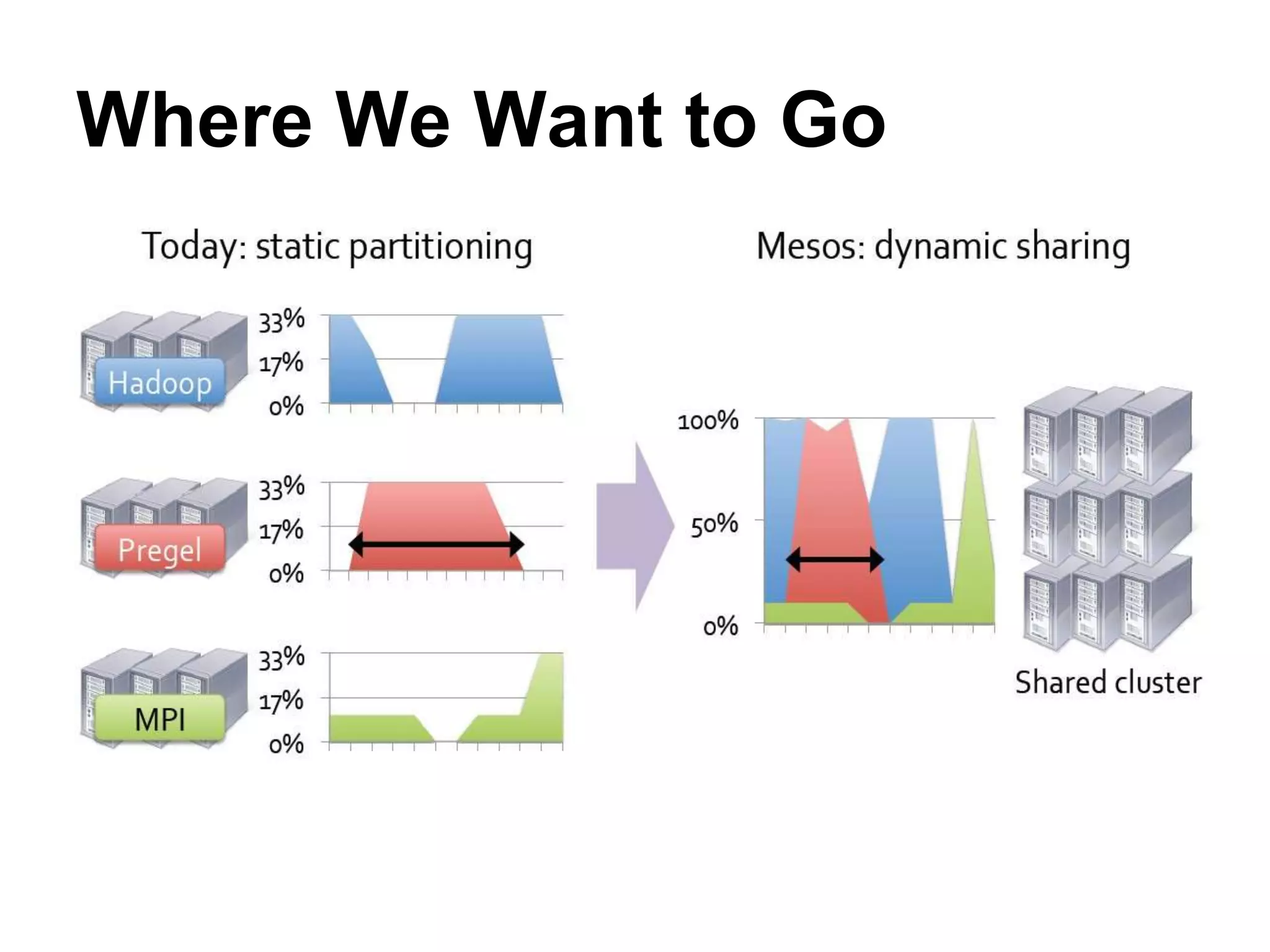

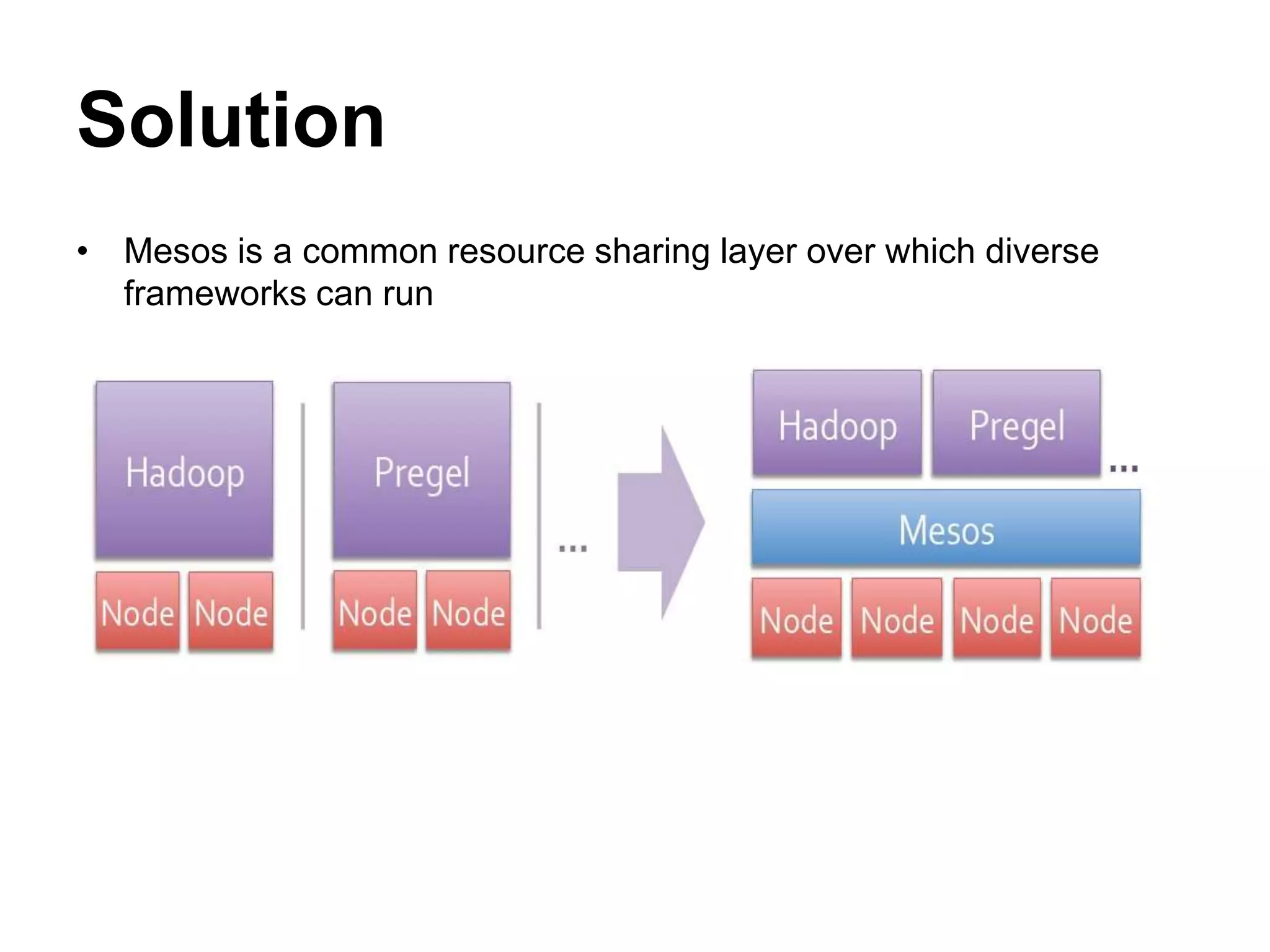

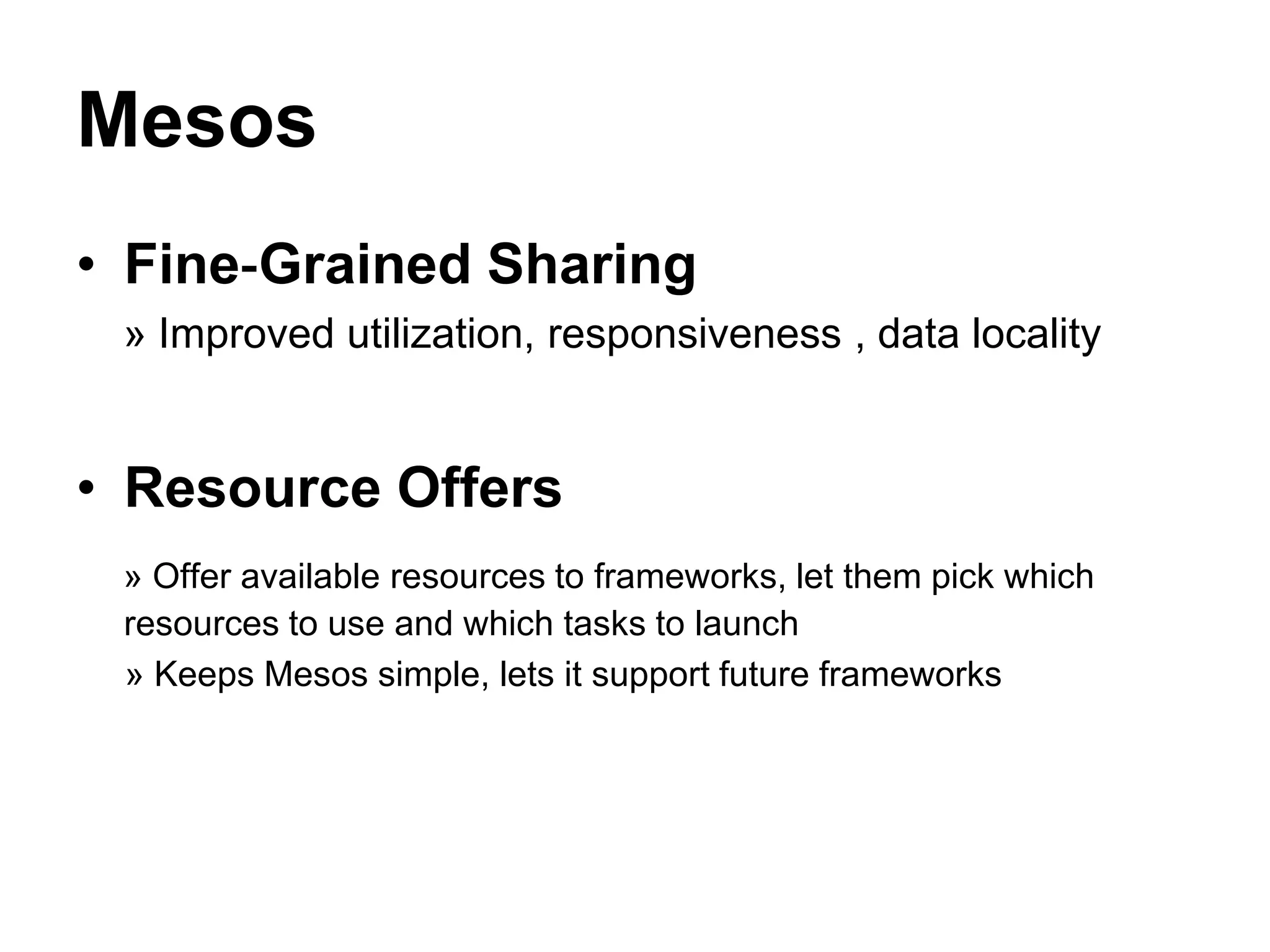

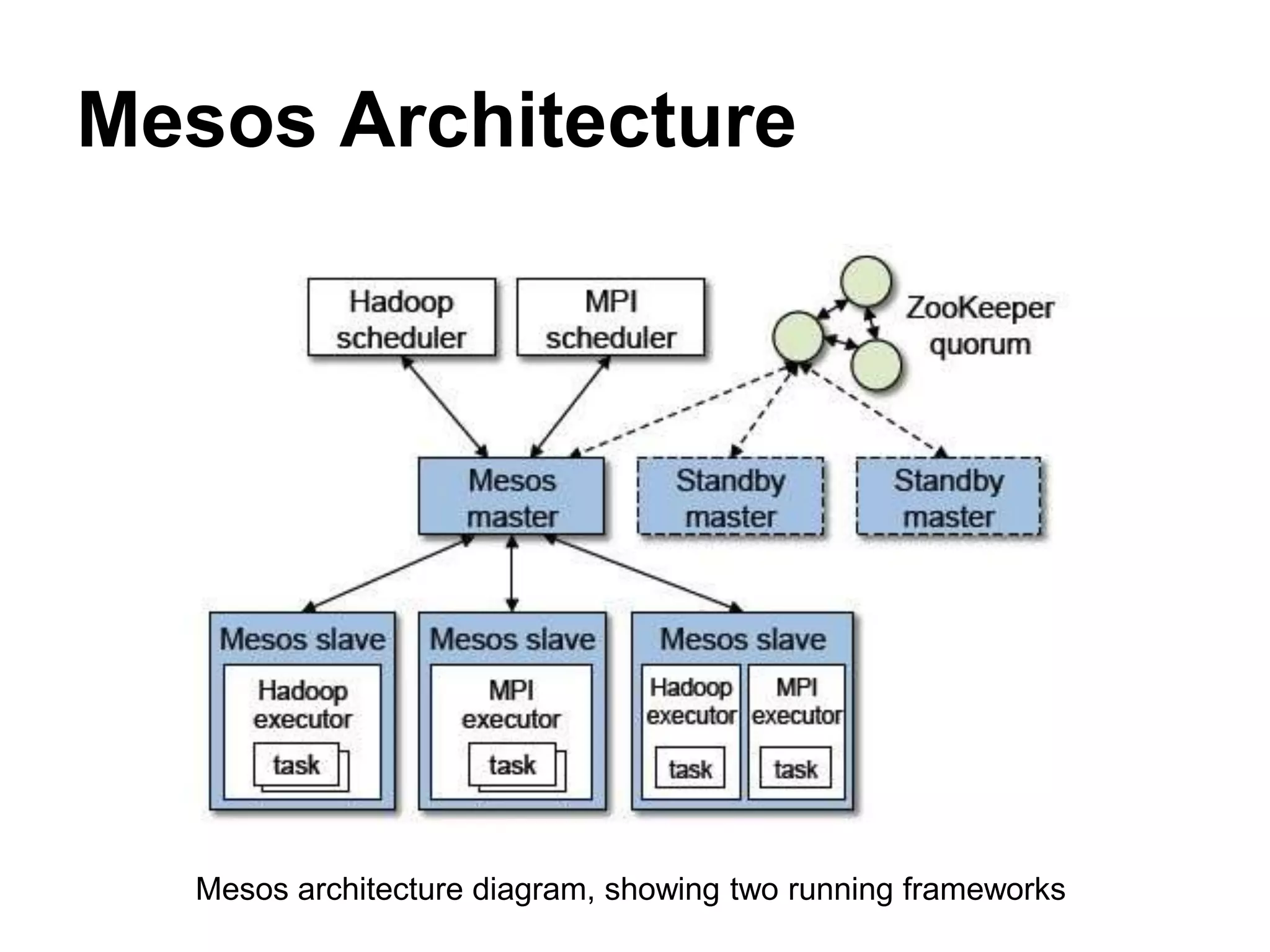

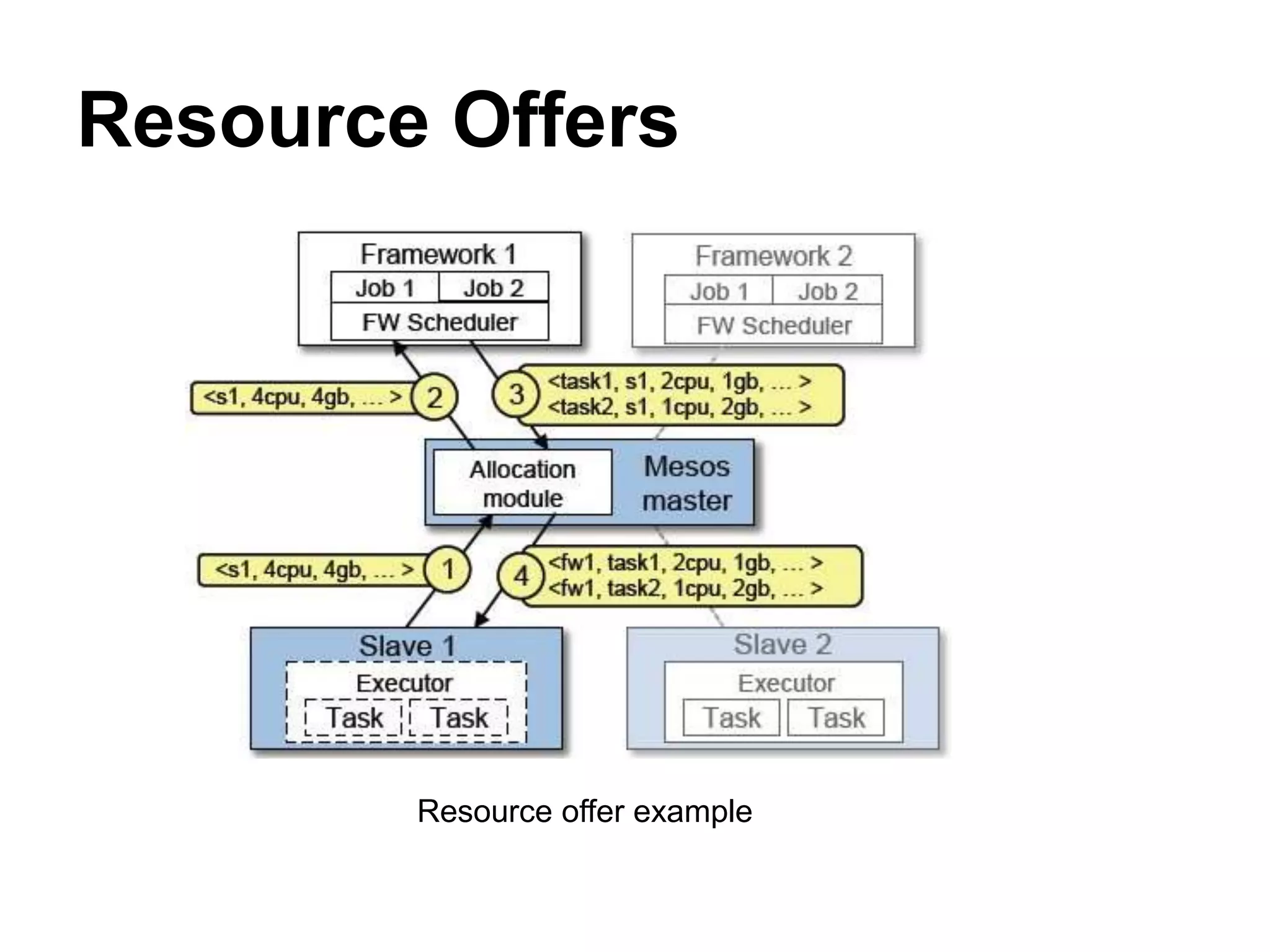

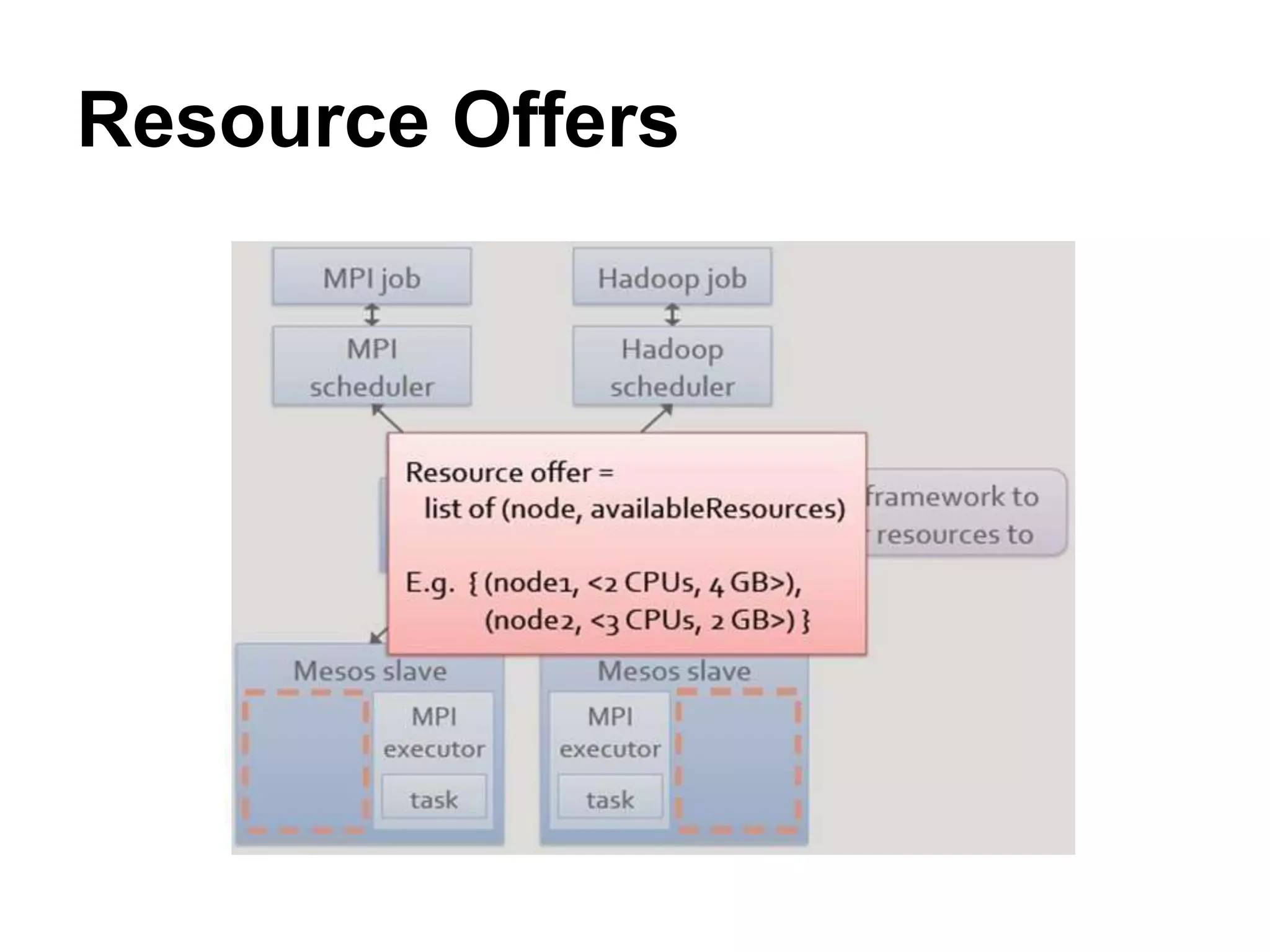

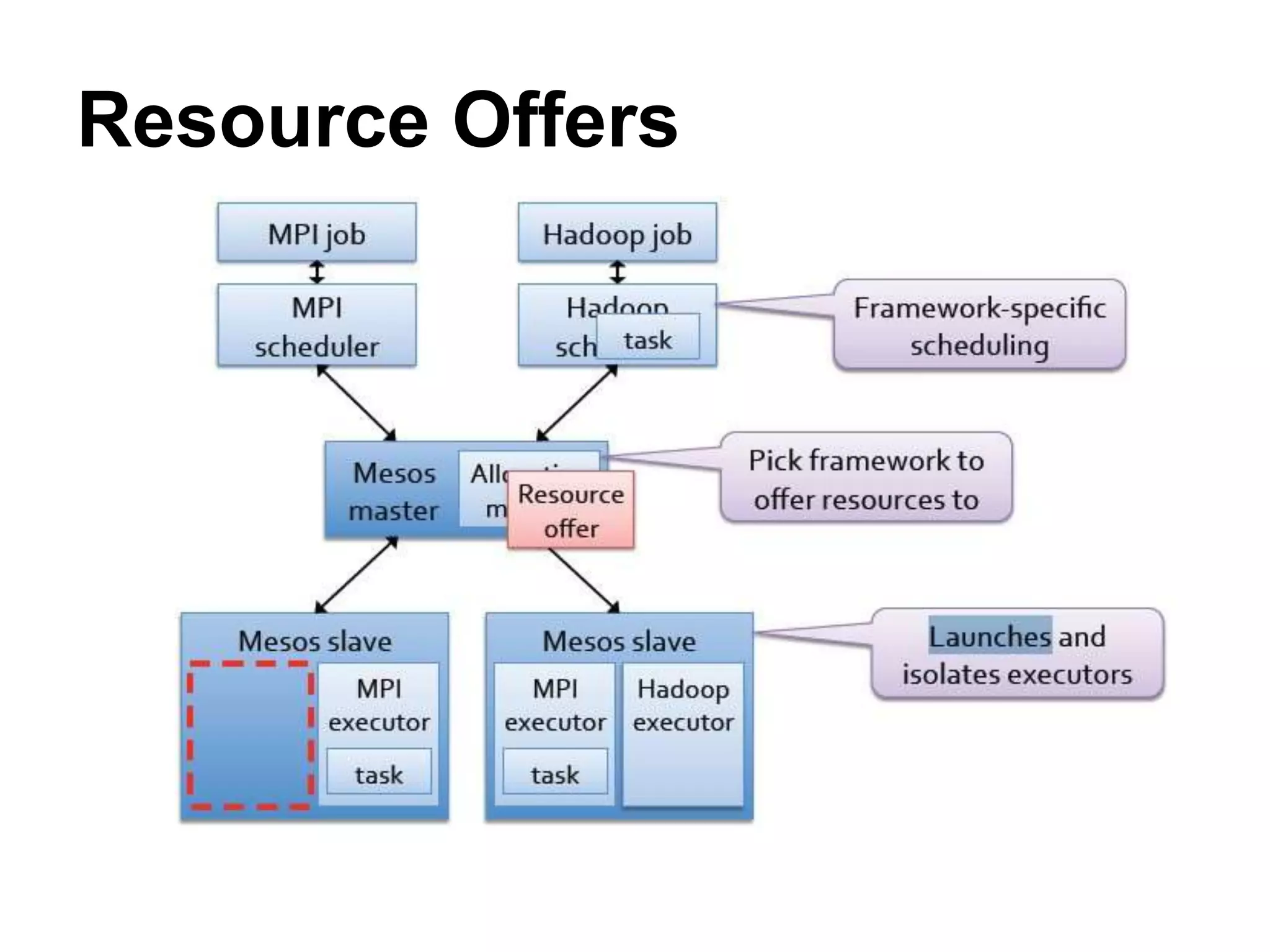

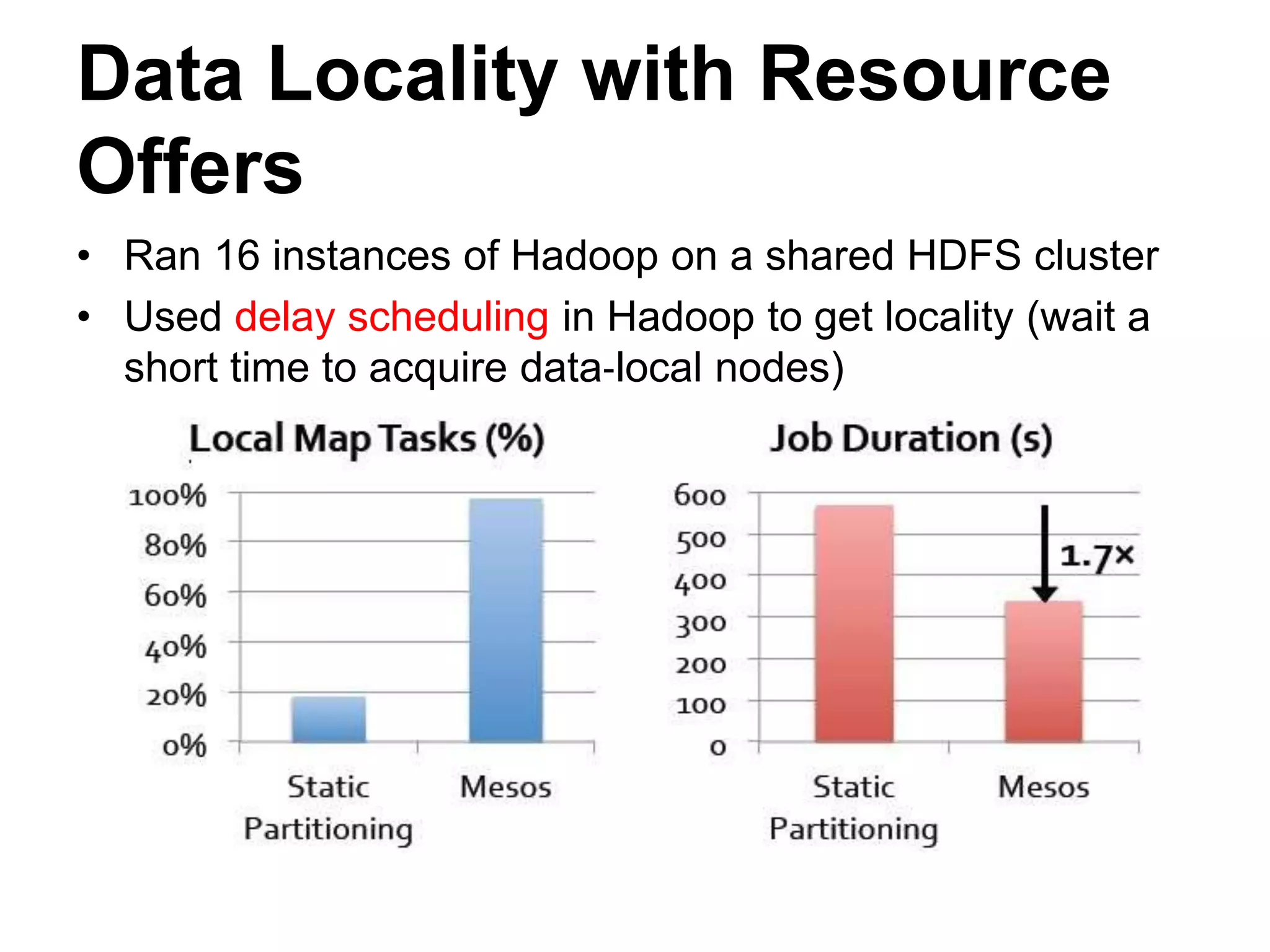

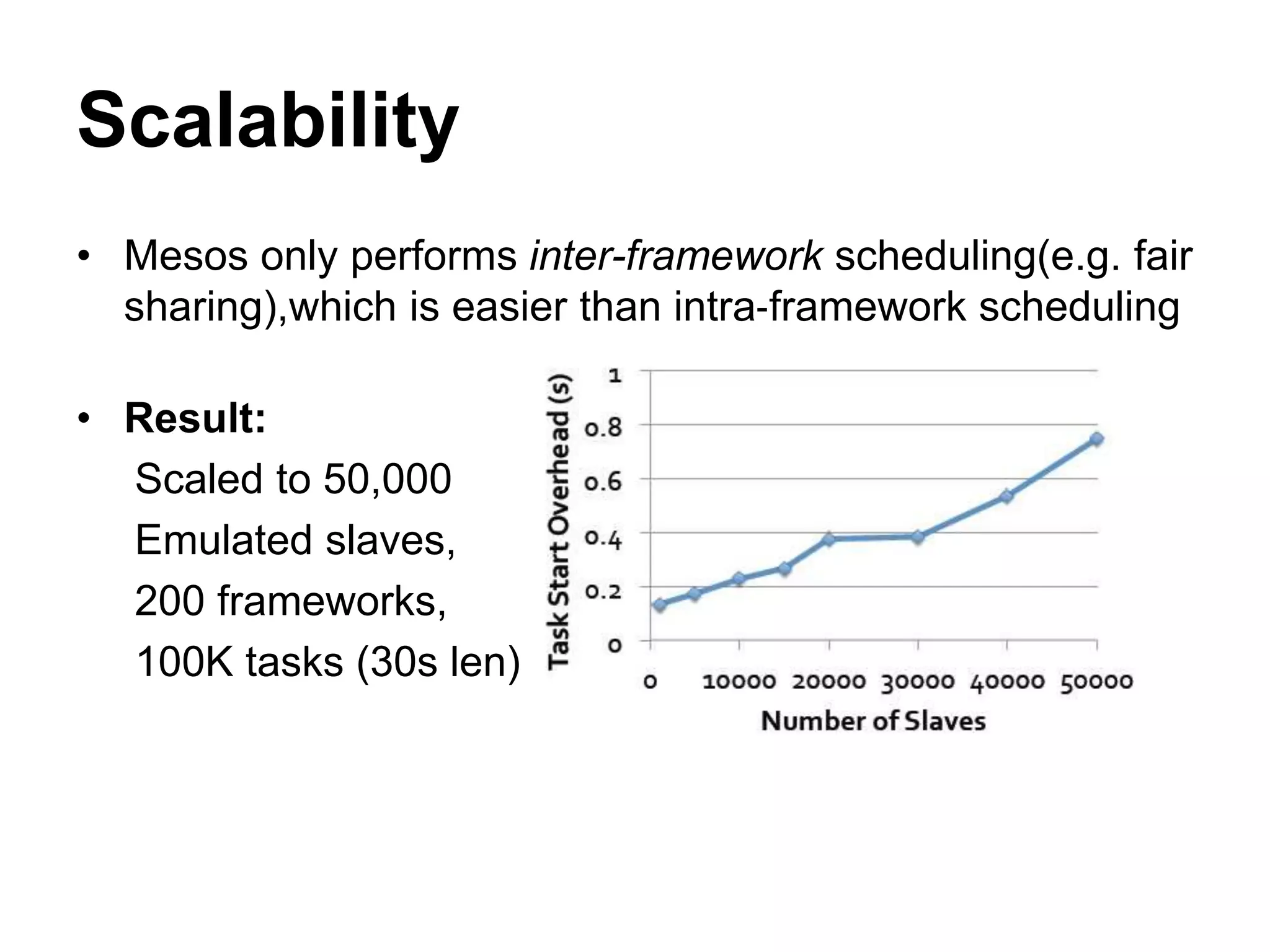

Mesos is a platform for sharing cluster resources among multiple cluster computing frameworks. It divides scheduling into two levels:

1. Mesos decides how many resources (such as CPUs and memory) to offer each framework, according to an organizational allocation policy such as fair sharing.
2. Each framework decides which offered resources to accept and which of its own tasks to run on them.

Because frameworks can accept or reject these resource offers dynamically, each one can choose resources that suit its needs (for example, nodes that hold its input data), which improves cluster utilization. Mesos has been used in production at companies such as Twitter to share clusters among frameworks like Hadoop and Spark.
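The two-level split can be illustrated with a small in-memory simulation. This is a minimal sketch and not the Mesos API: the `Master`, `Framework`, `Offer`, and `Task` classes and all their methods are hypothetical. Level one is the master choosing which framework receives an offer. Mesos's default allocator uses Dominant Resource Fairness, but this sketch substitutes a simpler rule that offers to the framework currently holding the fewest CPUs. Level two is each framework deciding which of its pending tasks to pack into the offer, or declining it by launching nothing.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    agent: str
    cpus: float
    mem: int  # MB


@dataclass
class Task:
    name: str
    cpus: float
    mem: int  # MB


class Framework:
    """Hypothetical framework scheduler: owns the task-level (second-level) decision."""

    def __init__(self, name, pending):
        self.name = name
        self.pending = list(pending)
        self.launched = []  # (task name, agent) pairs

    def resource_offer(self, offer):
        """Greedily pack pending tasks into the offer; an empty list means 'decline'."""
        cpus, mem = offer.cpus, offer.mem
        chosen = []
        for task in list(self.pending):  # iterate over a copy so we can remove safely
            if task.cpus <= cpus and task.mem <= mem:
                cpus -= task.cpus
                mem -= task.mem
                chosen.append(task)
                self.pending.remove(task)
        self.launched.extend((t.name, offer.agent) for t in chosen)
        return chosen


class Master:
    """Hypothetical master: owns the first-level decision of who gets each offer."""

    def __init__(self, agents, frameworks):
        self.free = {agent: [cpus, mem] for agent, (cpus, mem) in agents.items()}
        self.frameworks = frameworks
        self.allocated = {f.name: 0 for f in frameworks}  # CPUs held per framework

    def offer_round(self):
        for agent, (cpus, mem) in self.free.items():
            if cpus <= 0 or mem <= 0:
                continue
            # Simplified fair share (a stand-in for DRF): least-allocated framework first.
            for fw in sorted(self.frameworks, key=lambda f: self.allocated[f.name]):
                tasks = fw.resource_offer(Offer(agent, cpus, mem))
                if tasks:
                    used_cpus = sum(t.cpus for t in tasks)
                    used_mem = sum(t.mem for t in tasks)
                    self.free[agent] = [cpus - used_cpus, mem - used_mem]
                    self.allocated[fw.name] += used_cpus
                    break  # any unused remainder is re-offered in a later round
                # Declined: the same resources are offered to the next framework.


agents = {"agent1": (4, 8192), "agent2": (4, 8192)}
hadoop = Framework("hadoop", [Task("map-0", 2, 2048), Task("map-1", 2, 2048)])
spark = Framework("spark", [Task("exec-0", 3, 4096), Task("exec-1", 3, 4096)])
master = Master(agents, [hadoop, spark])

master.offer_round()  # hadoop fills agent1; spark launches exec-0 on agent2
master.offer_round()  # agent2's leftover 1 CPU is too small for exec-1, so both decline
print(hadoop.launched, spark.launched, [t.name for t in spark.pending])
```

The master never looks at individual tasks, and the frameworks never see resources they were not offered. Keeping task placement inside each framework is what lets the central allocator stay simple while still supporting framework-specific preferences.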