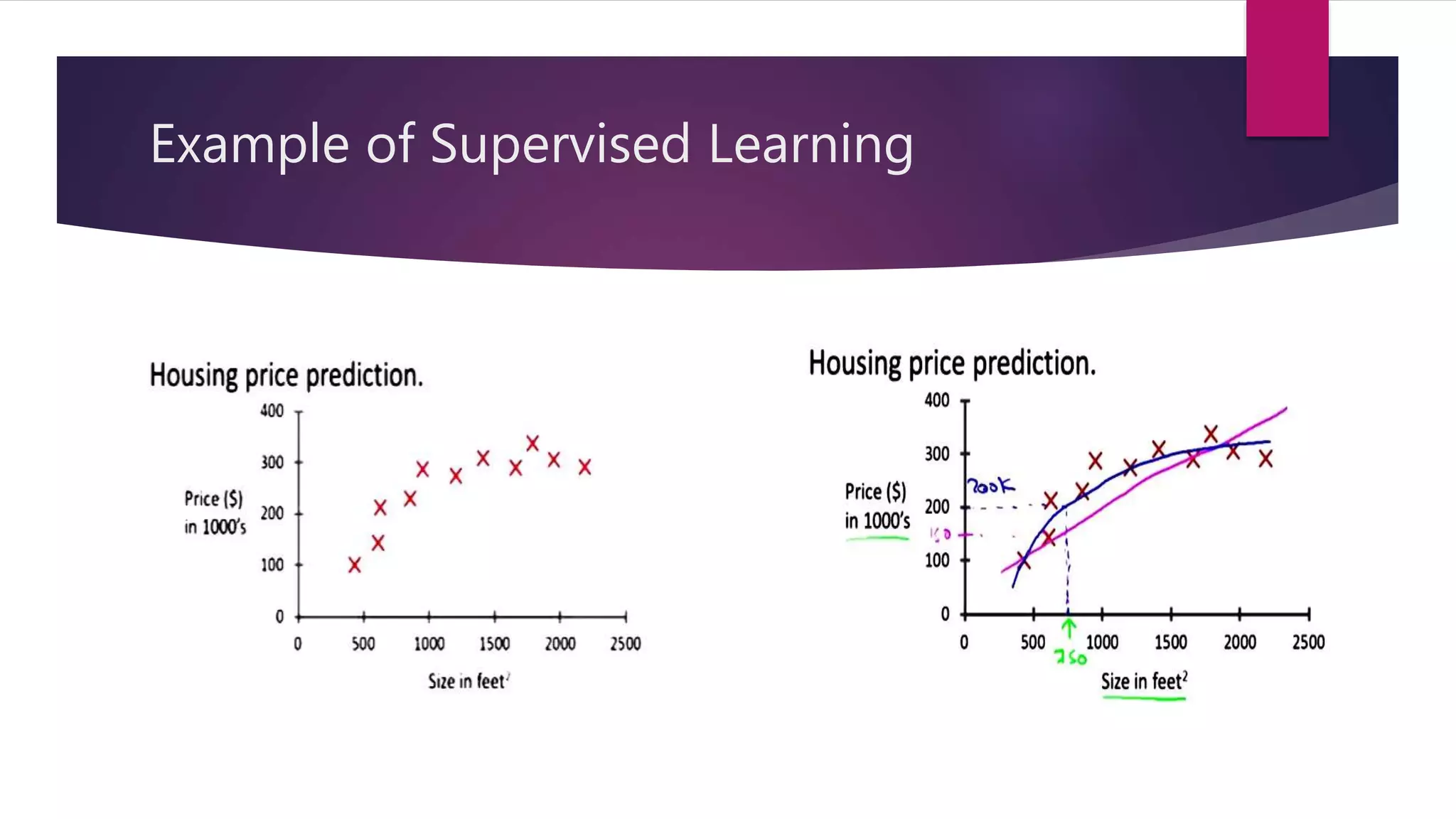

This document provides an overview of machine learning. It begins with an introduction and definitions, explaining that machine learning lets computers learn without being explicitly programmed, through algorithms that learn from data. It then discusses the main types of machine learning problems (supervised learning, unsupervised learning, and reinforcement learning) and gives examples and applications of each. The document also covers popular machine learning techniques such as decision trees and artificial neural networks, as well as frameworks and tools used for machine learning.

![Terminology with Example](https://image.slidesharecdn.com/machinecanthink-160226155704/75/Machine-Can-Think-12-2048.jpg)

Terminology with Example

- Sample 1 features: Color = Red, Type = Logo, Shape
- Sample 2 features: Color = Light Blue, Type = Logo, Shape

Here both samples are apples. Feature vector = [Color, Type, Shape]. Training set: all the samples taken together.
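The terminology above can be sketched in a few lines of Python. This is a minimal illustration, not code from the deck: it assumes each sample is stored as a dictionary, that the label for both samples is the string `"apple"`, and the helper name `feature_vector` is hypothetical. Because the slide names Shape but gives no value for it, Shape is left as `None` rather than invented.

```python
# Feature order taken from the slide: [Color, Type, Shape]
FEATURE_NAMES = ["Color", "Type", "Shape"]


def feature_vector(sample: dict) -> list:
    """Return the sample's feature values in the fixed FEATURE_NAMES order.

    Hypothetical helper: any feature missing from the sample becomes None
    (the slide lists Shape but does not give a value for it).
    """
    return [sample.get(name) for name in FEATURE_NAMES]


# The two samples from the slide, each paired with its label.
# Assumption: the label is the string "apple" for both samples.
samples = [
    ({"Color": "Red", "Type": "Logo"}, "apple"),
    ({"Color": "Light Blue", "Type": "Logo"}, "apple"),
]

# Training set: all (feature vector, label) pairs taken together.
training_set = [(feature_vector(s), label) for s, label in samples]

for x, y in training_set:
    print(x, "->", y)
```

Fixing the feature order in one list keeps every feature vector aligned, so position 0 is always Color, position 1 is Type, and position 2 is Shape, regardless of how each sample's dictionary was built.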