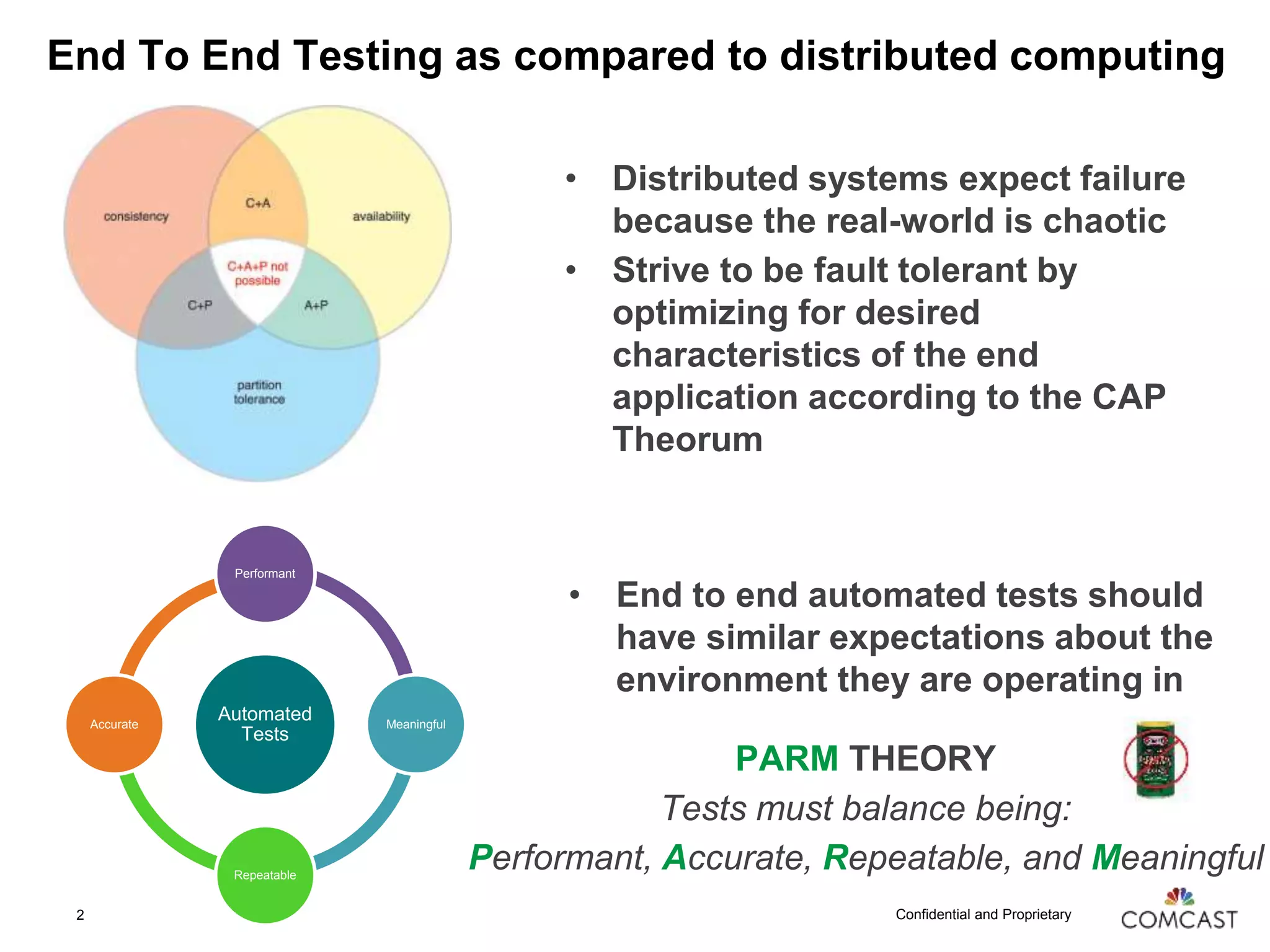

This document collects lessons learned about end-to-end testing, framed through metaphors from zombie movies. It argues that end-to-end tests, like distributed systems, should expect failures caused by real-world chaos. Tests need to balance four qualities: being performant, accurate, repeatable, and meaningful. Its tips include using dependency management, controlling all data, avoiding slow test methods, and sharing open-source testing tools and techniques with the community.
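
As one possible illustration of the "control all data" tip, the sketch below shows a test that creates the record it needs, checks it, and cleans up afterwards, so it stays repeatable whatever else is in the environment. Everything here is an assumption made for illustration: `InMemoryUserStore` and `run_user_lookup_test` are hypothetical names, and the in-memory store is only a stand-in for a real system under test, not something taken from the original material.

```python
import uuid


class InMemoryUserStore:
    """Hypothetical stand-in for the system under test."""

    def __init__(self):
        self._users = {}

    def create(self, name):
        # Generate a fresh ID so records never collide across runs.
        user_id = str(uuid.uuid4())
        self._users[user_id] = name
        return user_id

    def get(self, user_id):
        return self._users.get(user_id)

    def delete(self, user_id):
        # Deleting a missing ID is a no-op, so cleanup is safe to repeat.
        self._users.pop(user_id, None)


def run_user_lookup_test(store):
    """Return True if a user created by this test can be read back."""
    # Create the data the test needs instead of relying on existing records.
    name = f"e2e-user-{uuid.uuid4().hex[:8]}"
    user_id = store.create(name)
    try:
        return store.get(user_id) == name
    finally:
        # Always remove test data, even if the check above raises.
        store.delete(user_id)
```

Because the test owns its data from creation to deletion, it can run repeatedly or alongside other tests without depending on shared fixtures.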