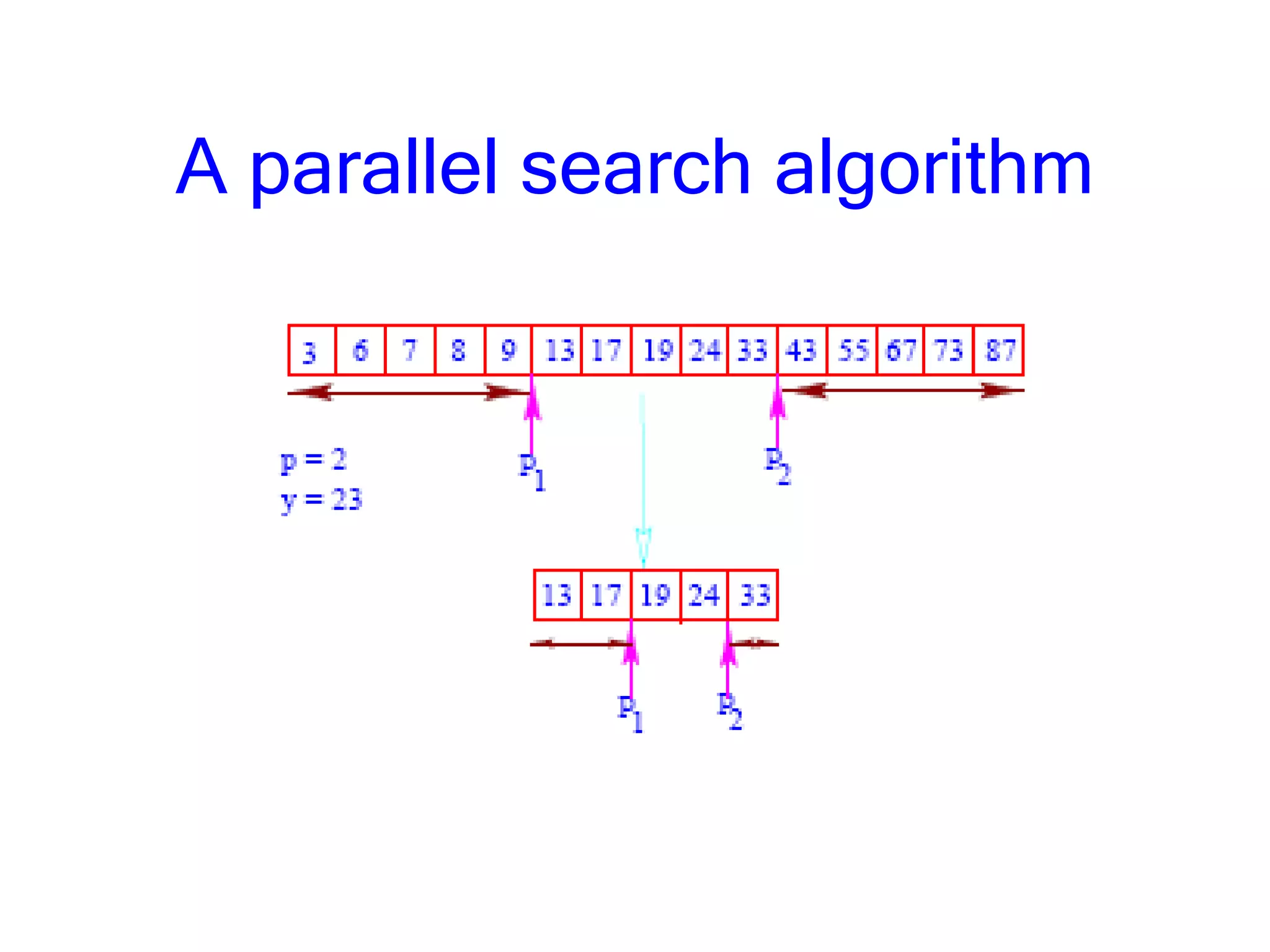

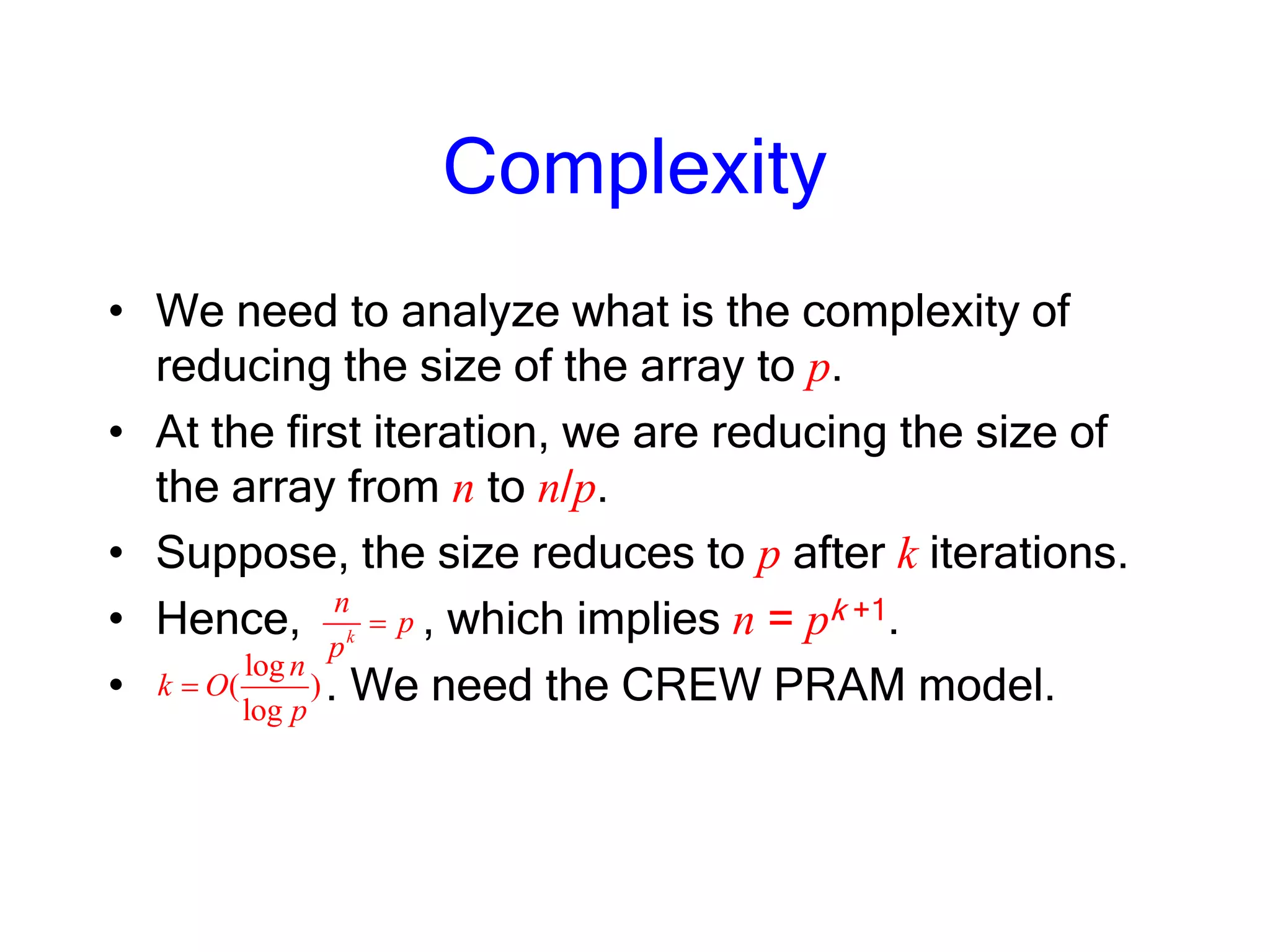

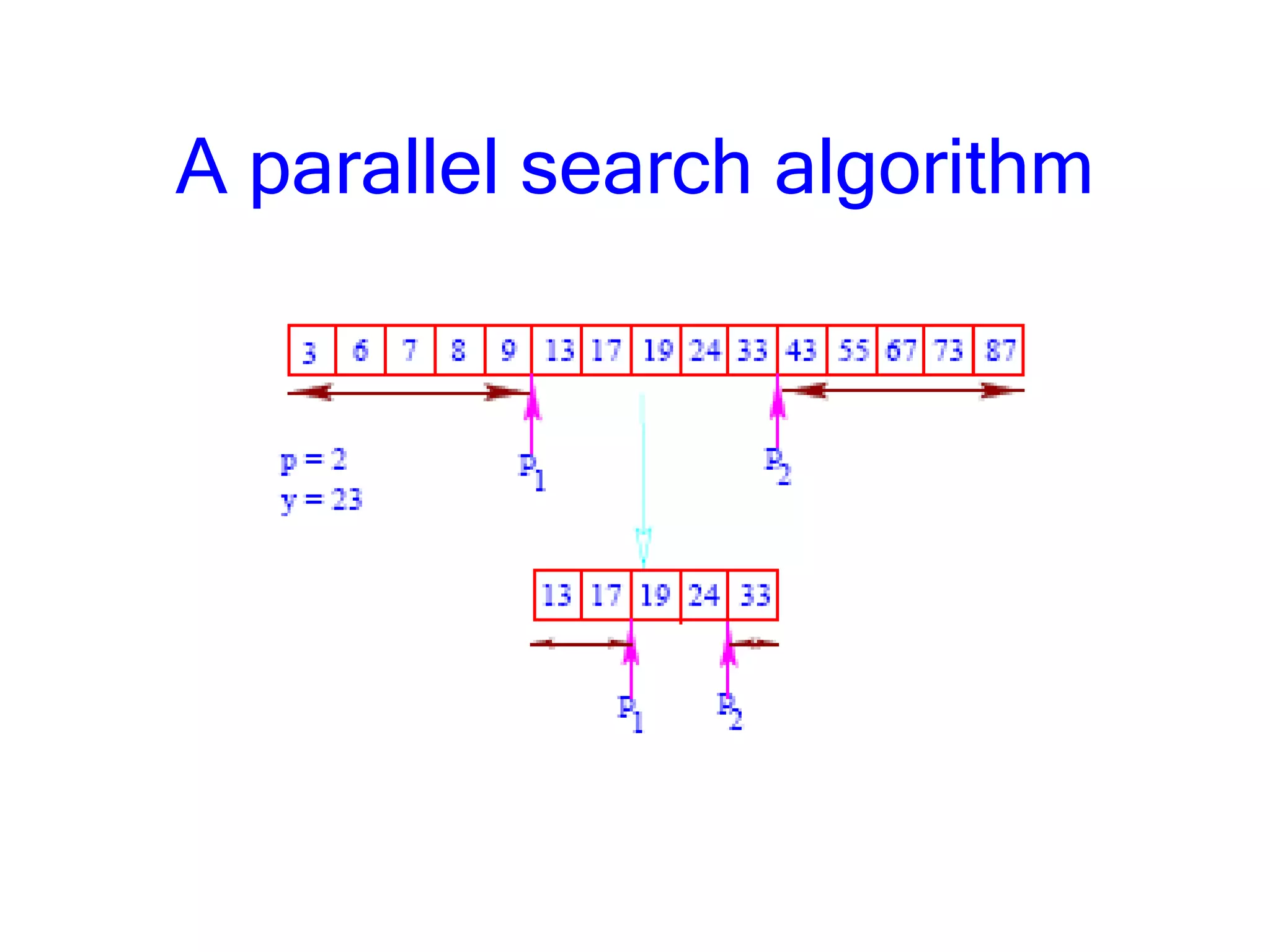

- A parallel search algorithm finds the two adjacent elements of a sorted array of n elements that bracket a query element in O(log(n+1) / log(p+1)) time using p processors (paragraph 1).
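The search can be illustrated with a minimal sequential simulation, assuming the usual (p+1)-ary scheme: in each round, p probes split the current range into at most p+1 pieces and the range shrinks to the piece that brackets the query. The name `parallel_search`, the sentinel convention (index -1 stands for minus infinity, index n for plus infinity), and the probe placement are assumptions made for this sketch, not details taken from the source.

```python
def parallel_search(xs, y, p):
    """Simulate a (p+1)-ary search on the sorted list xs with p >= 1 probes per round.

    Returns (i, rounds) where xs[i] <= y < xs[i + 1], treating xs[-1] as
    minus infinity and xs[len(xs)] as plus infinity, so i is in -1..len(xs)-1.
    """
    lo, hi = -1, len(xs)  # invariant: xs[lo] <= y < xs[hi] (with sentinels)
    rounds = 0
    while hi - lo > 1:
        rounds += 1
        gap = hi - lo
        # Probe positions strictly inside (lo, hi); duplicates are dropped.
        probes = sorted({lo + (k * gap) // (p + 1) for k in range(1, p + 1)} - {lo})
        # On a PRAM each processor would do one of these comparisons at once.
        flags = [xs[q] <= y for q in probes]
        # Narrow the range to the piece where the flags switch from True to False.
        for q, le in zip(probes, flags):
            if le:
                lo = q
            else:
                hi = q
                break
    return lo, rounds


if __name__ == "__main__":
    data = [1, 3, 5, 7, 9, 11, 13]
    index, rounds = parallel_search(data, 6, p=2)
    print(index, rounds)  # index of the left bracketing element, rounds used
```

With p = 1 this degenerates to ordinary binary search; larger p reduces the number of rounds, which is where the log(p+1) denominator comes from.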

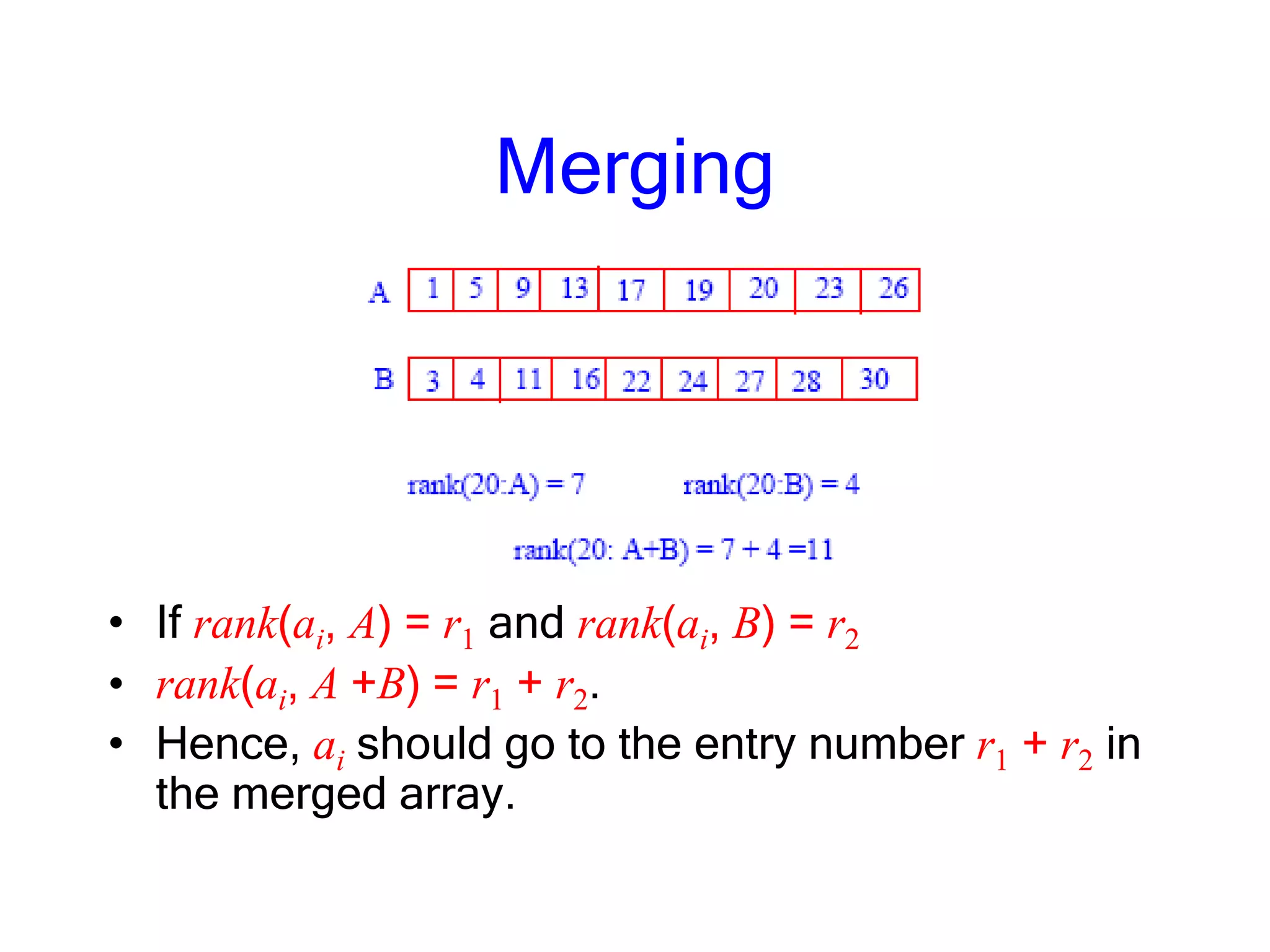

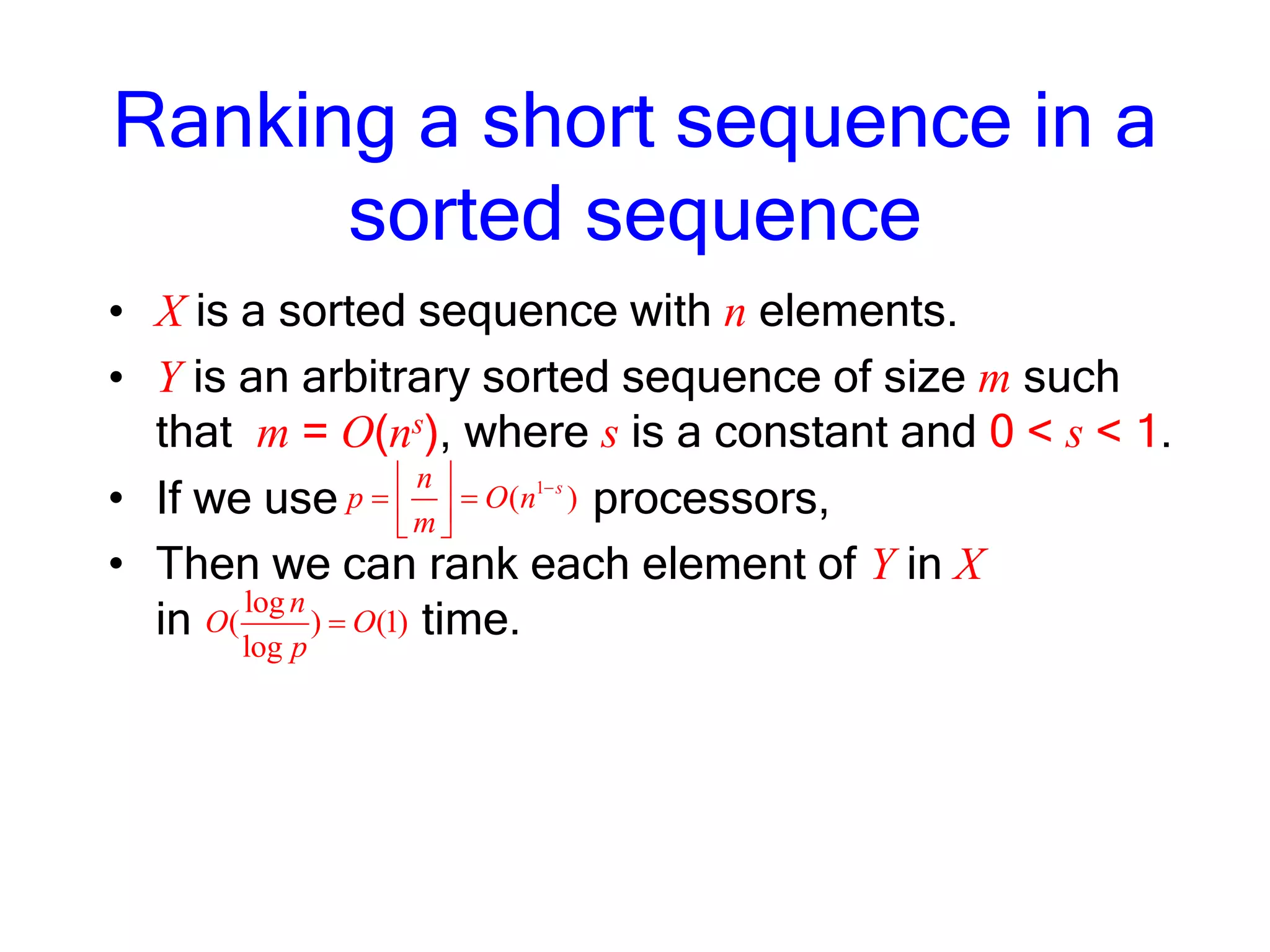

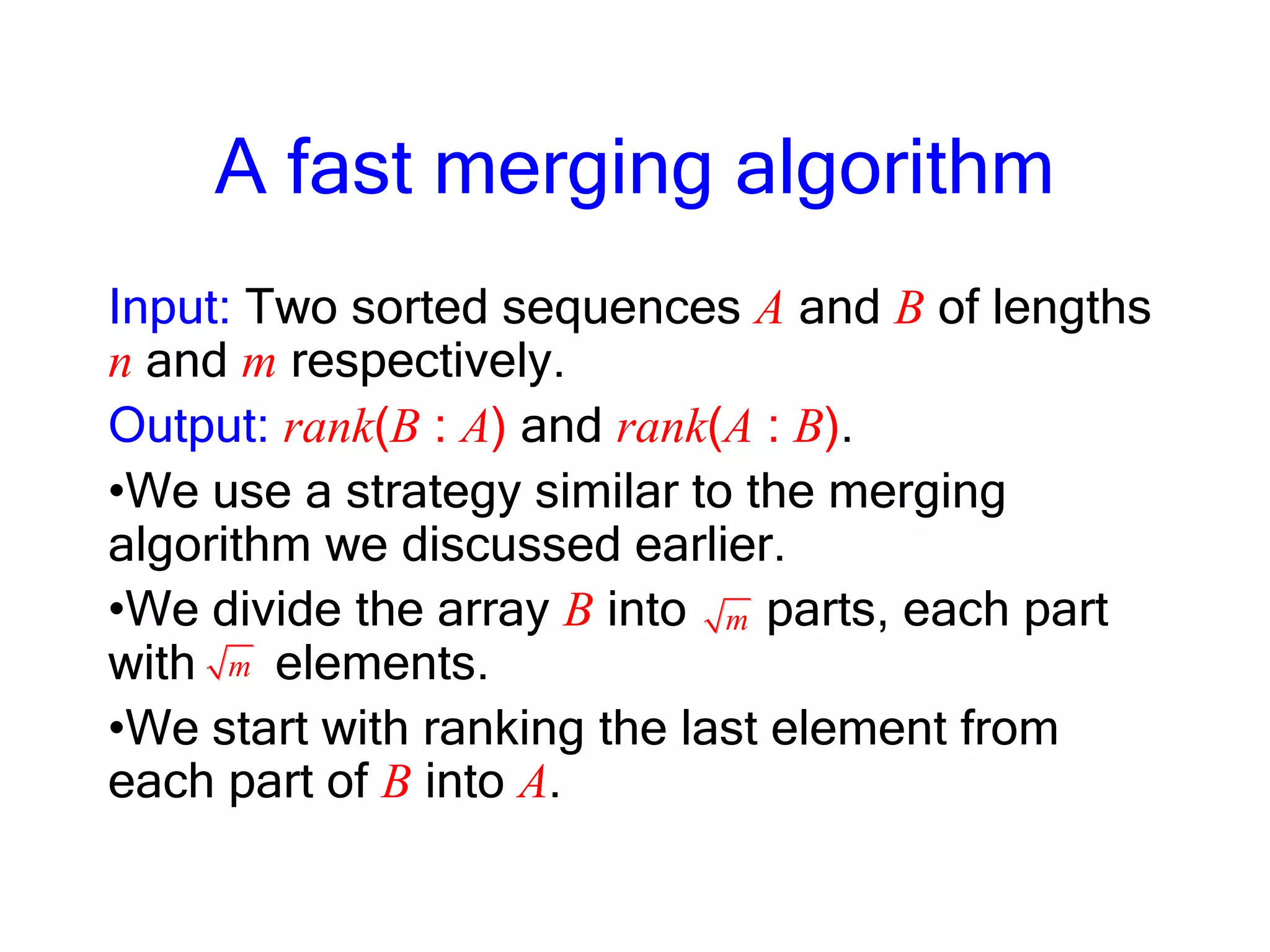

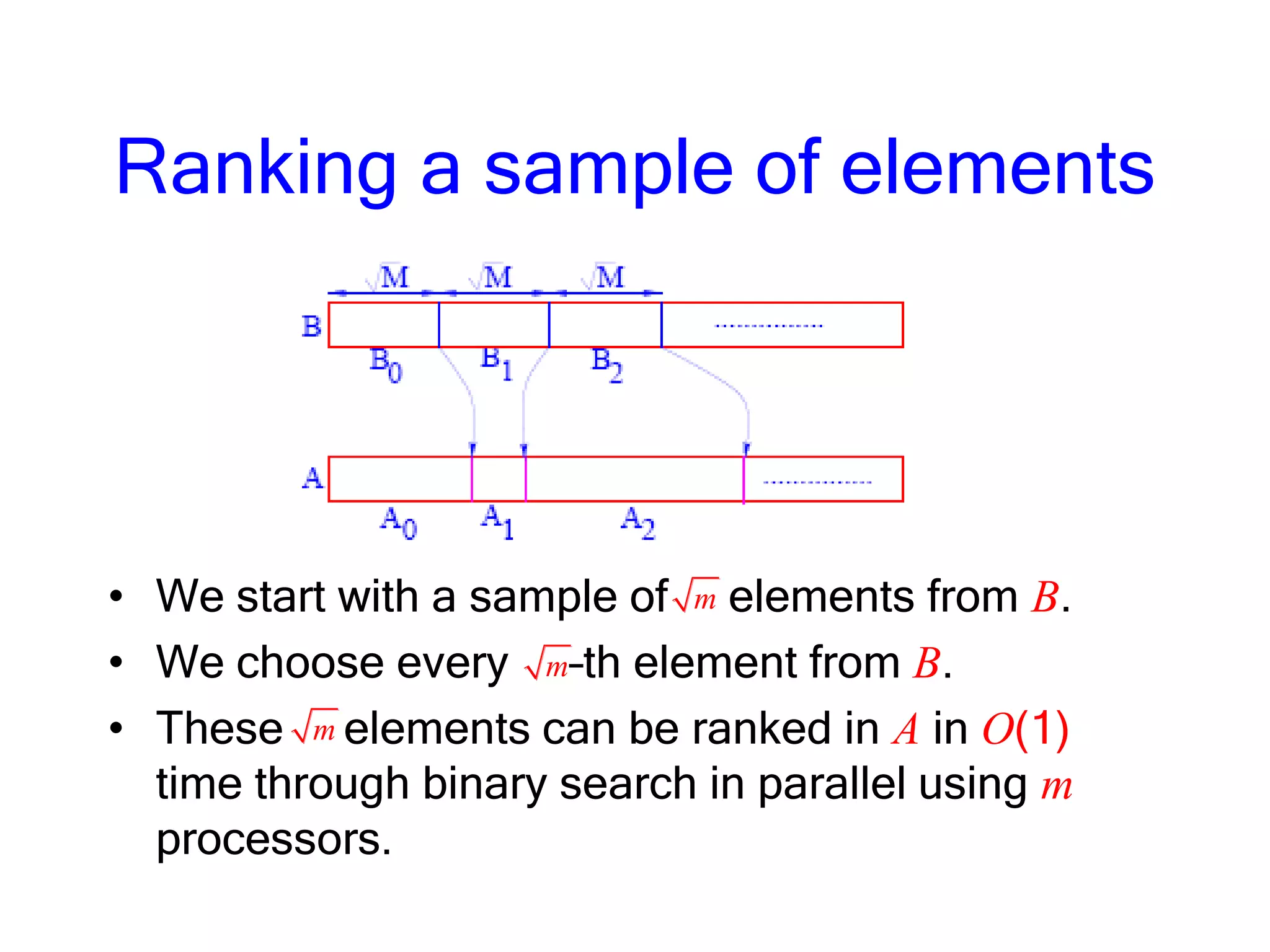

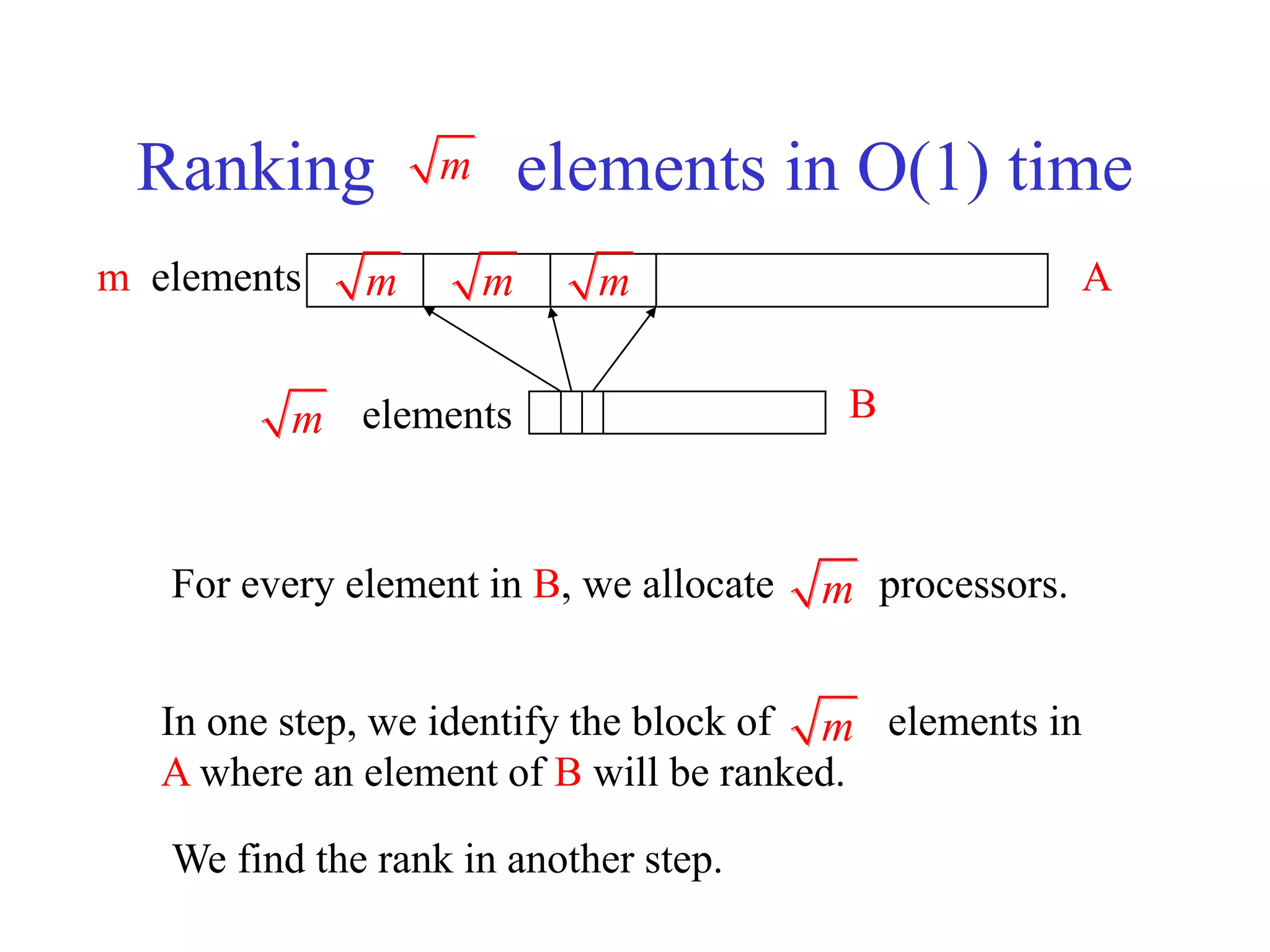

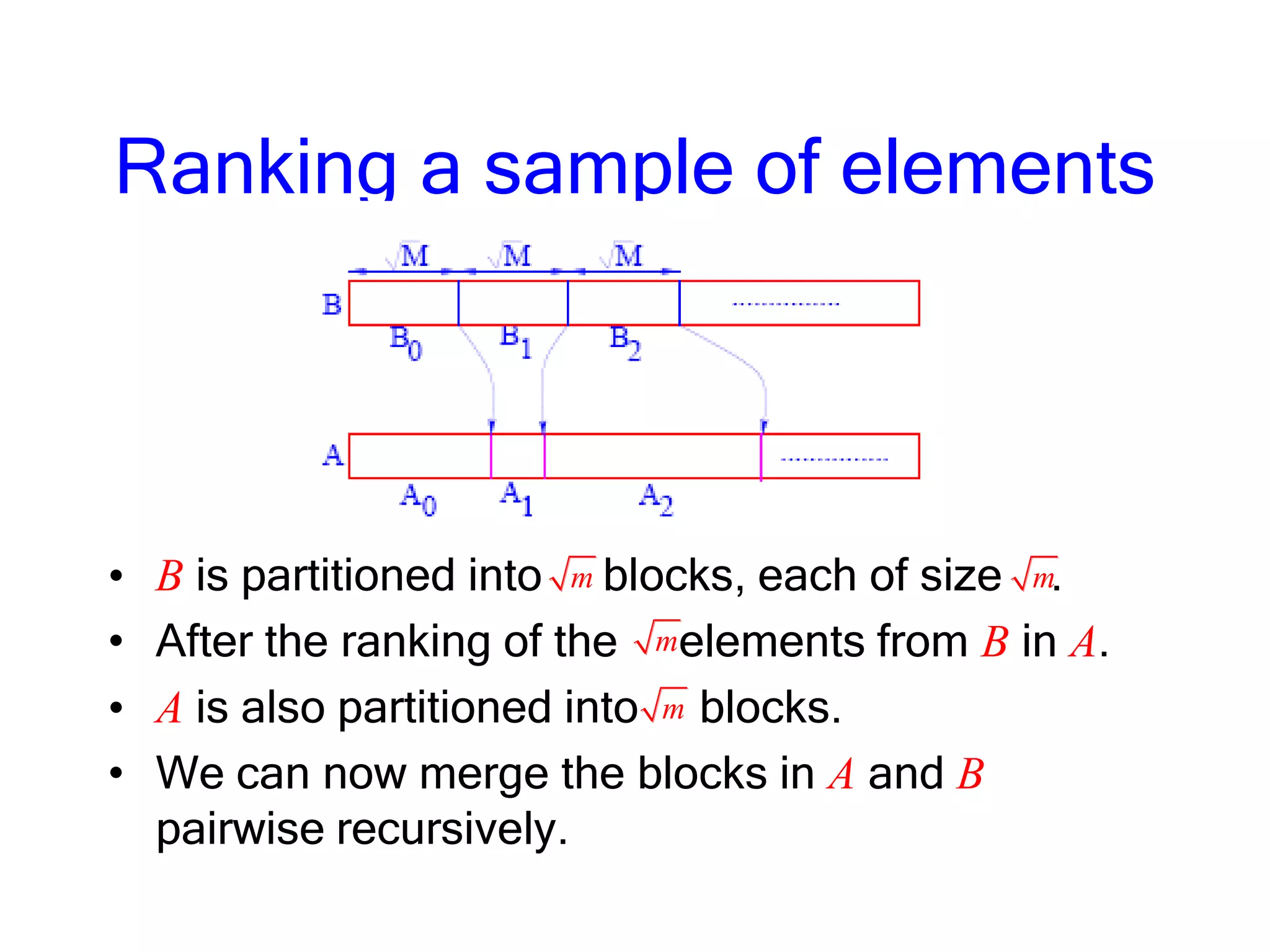

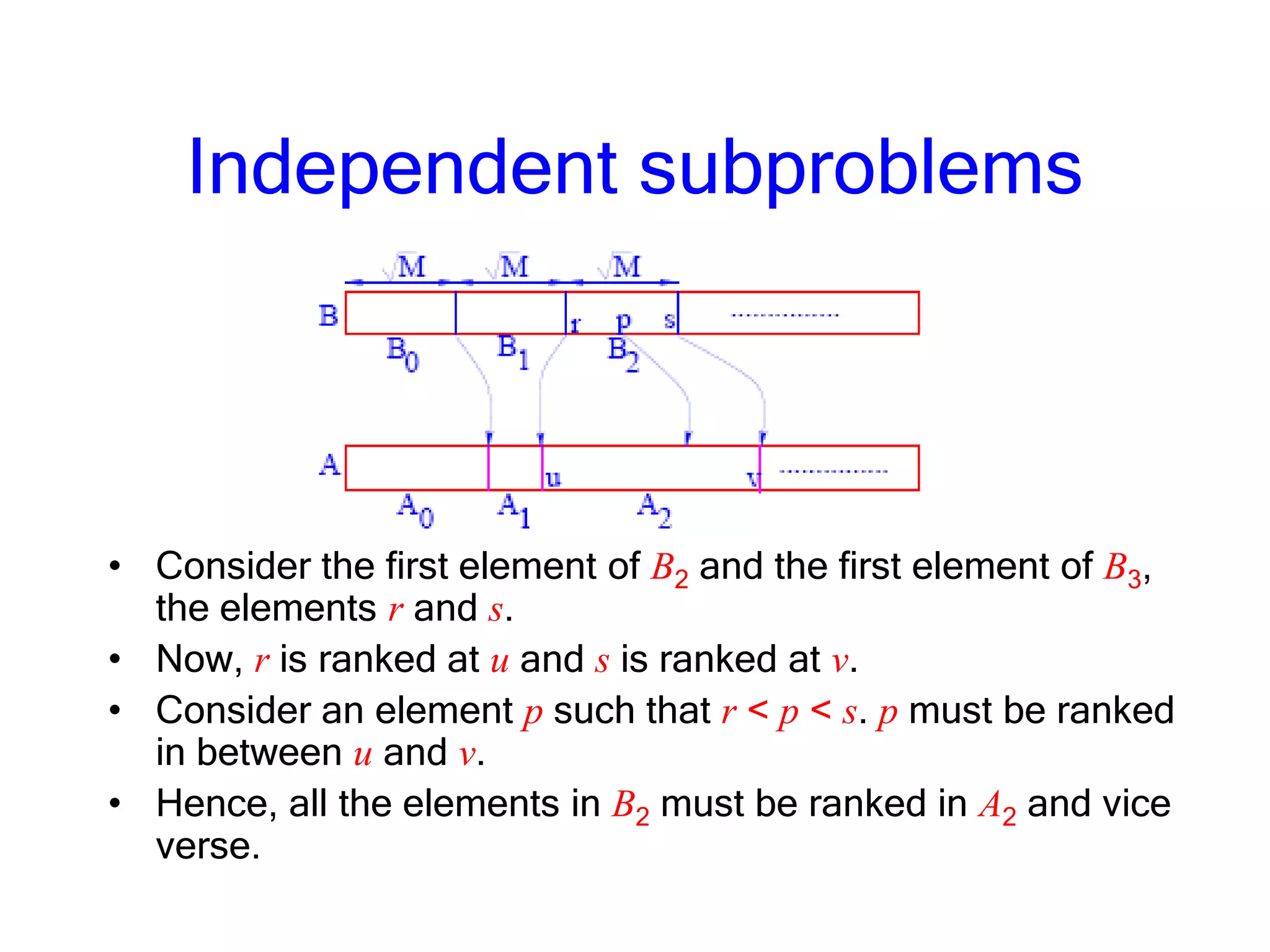

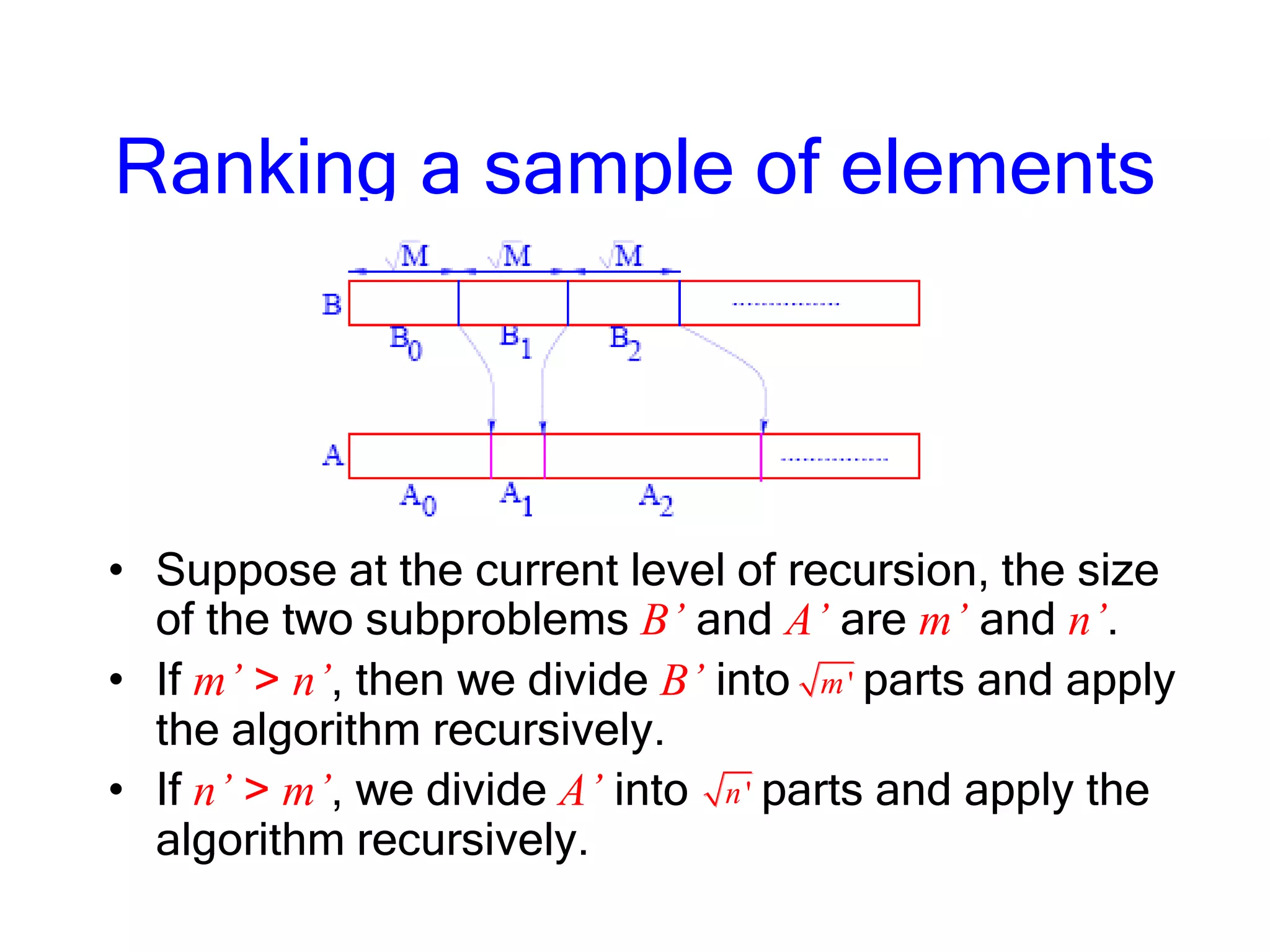

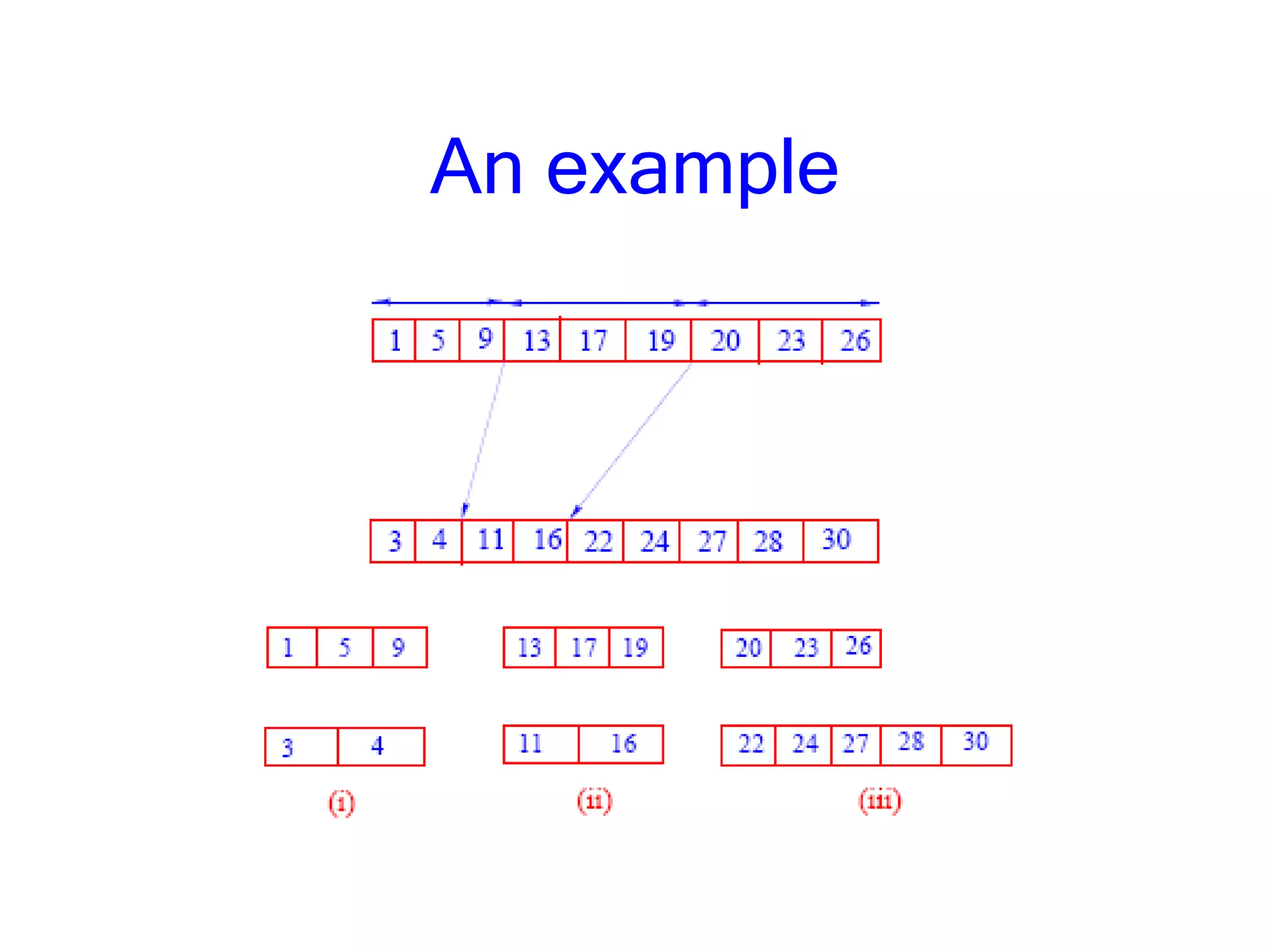

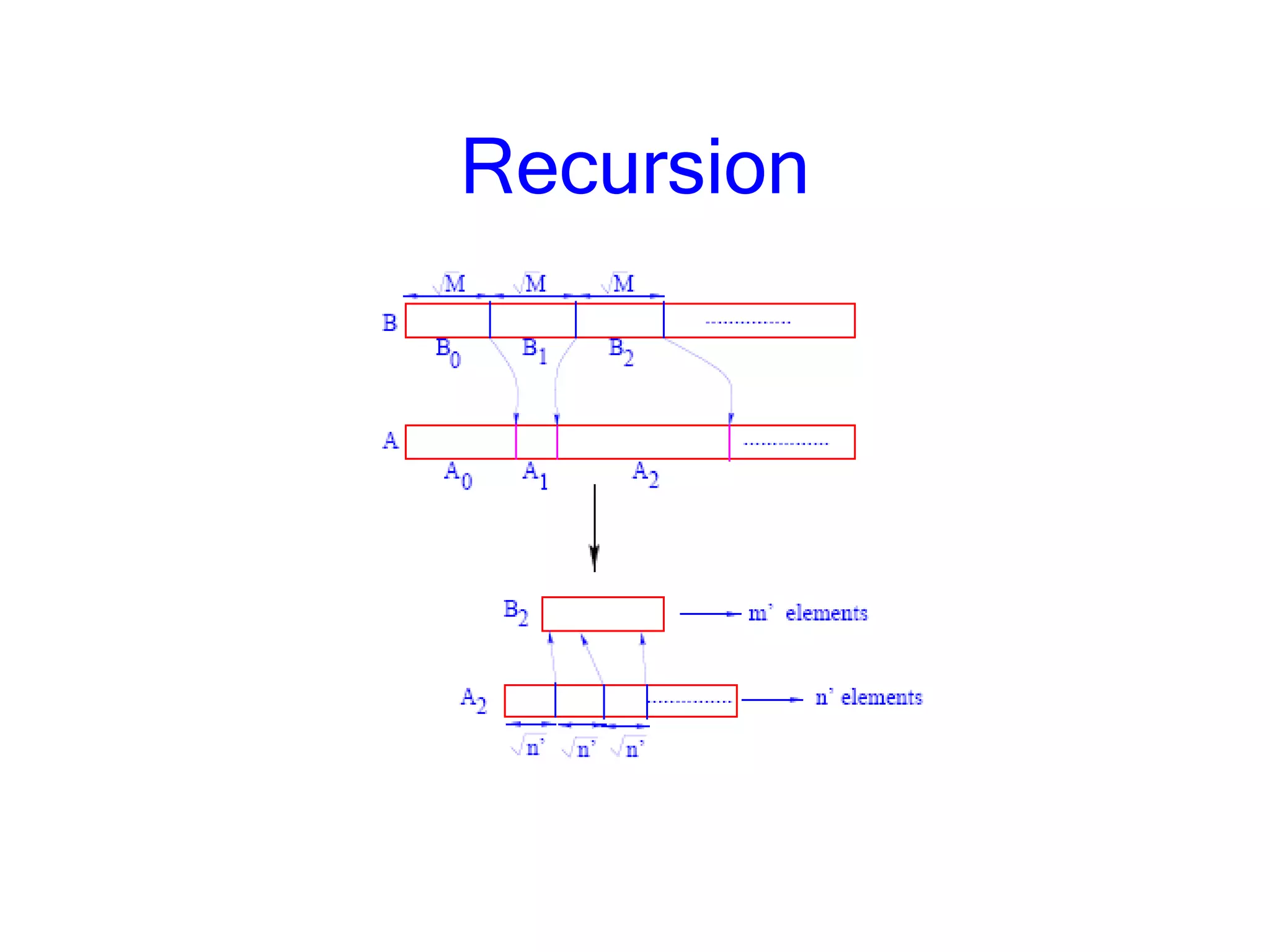

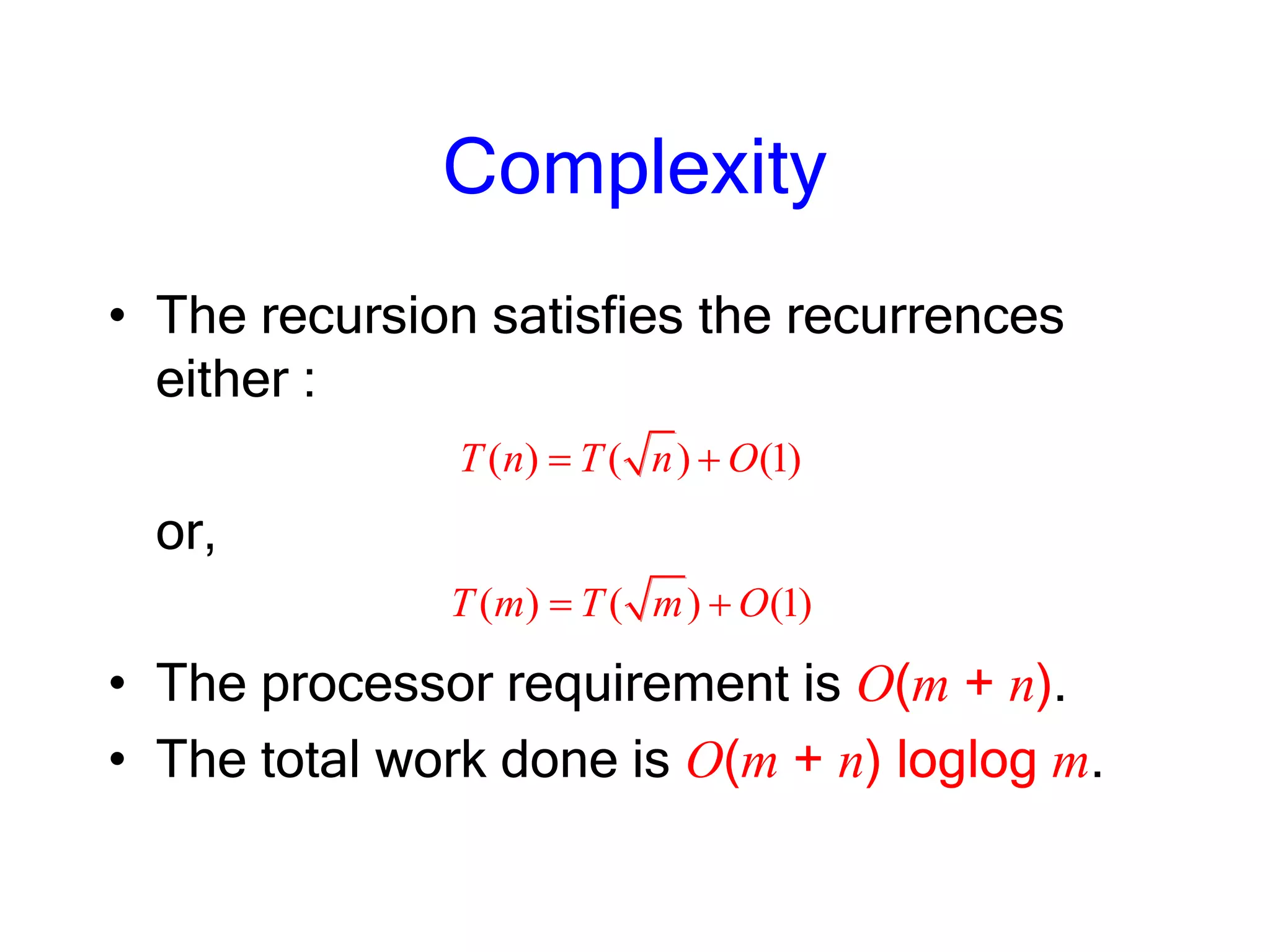

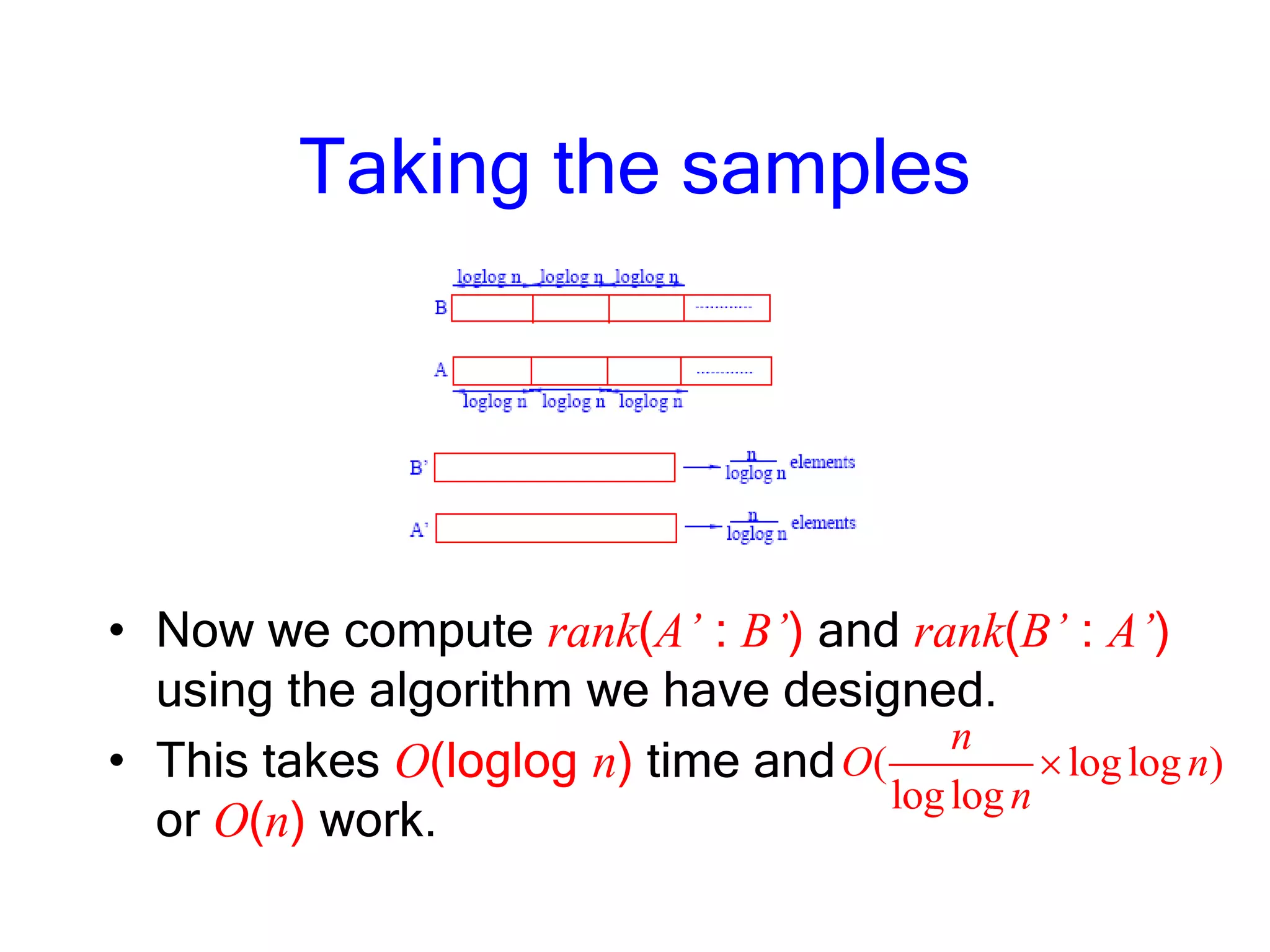

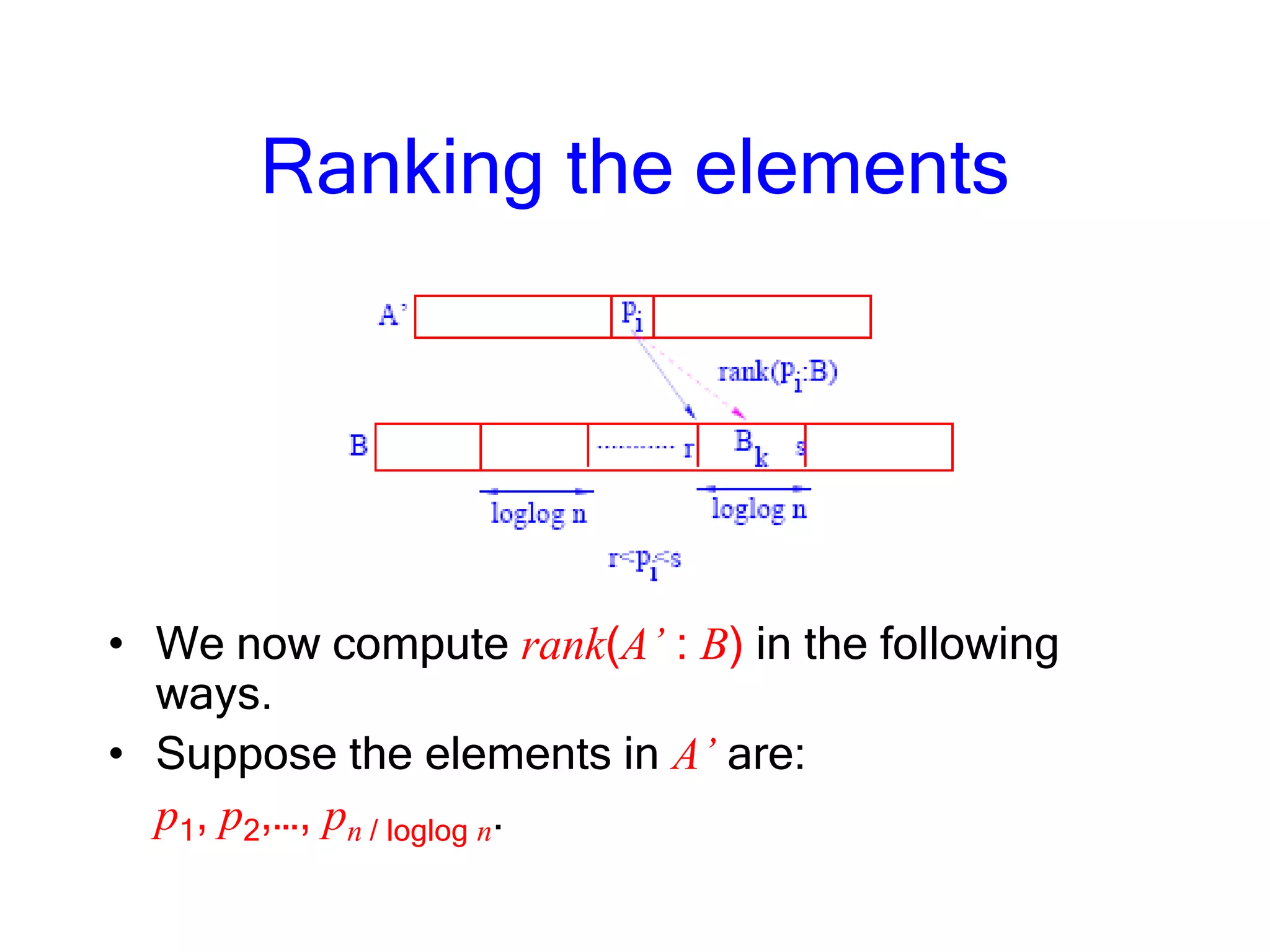

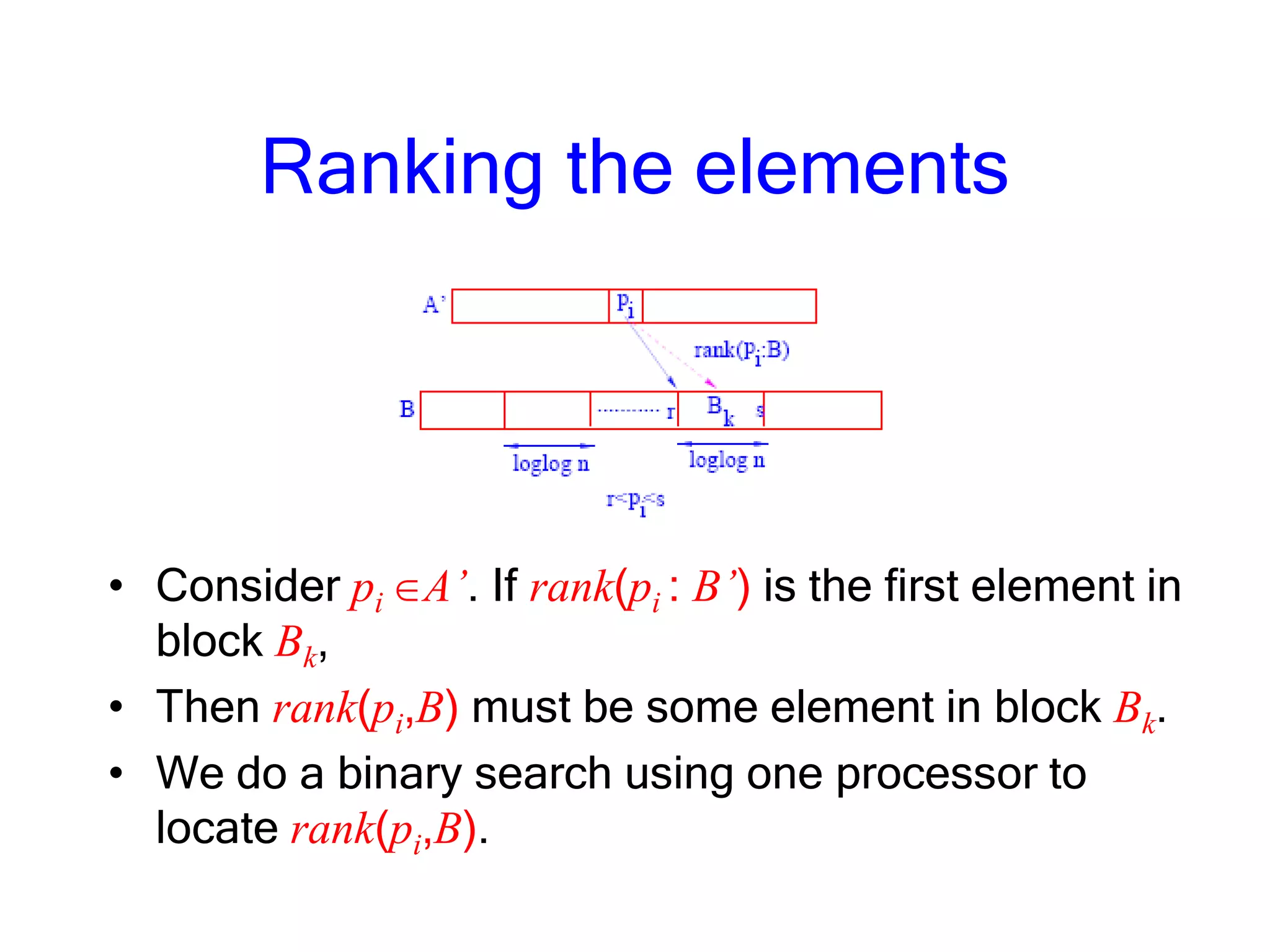

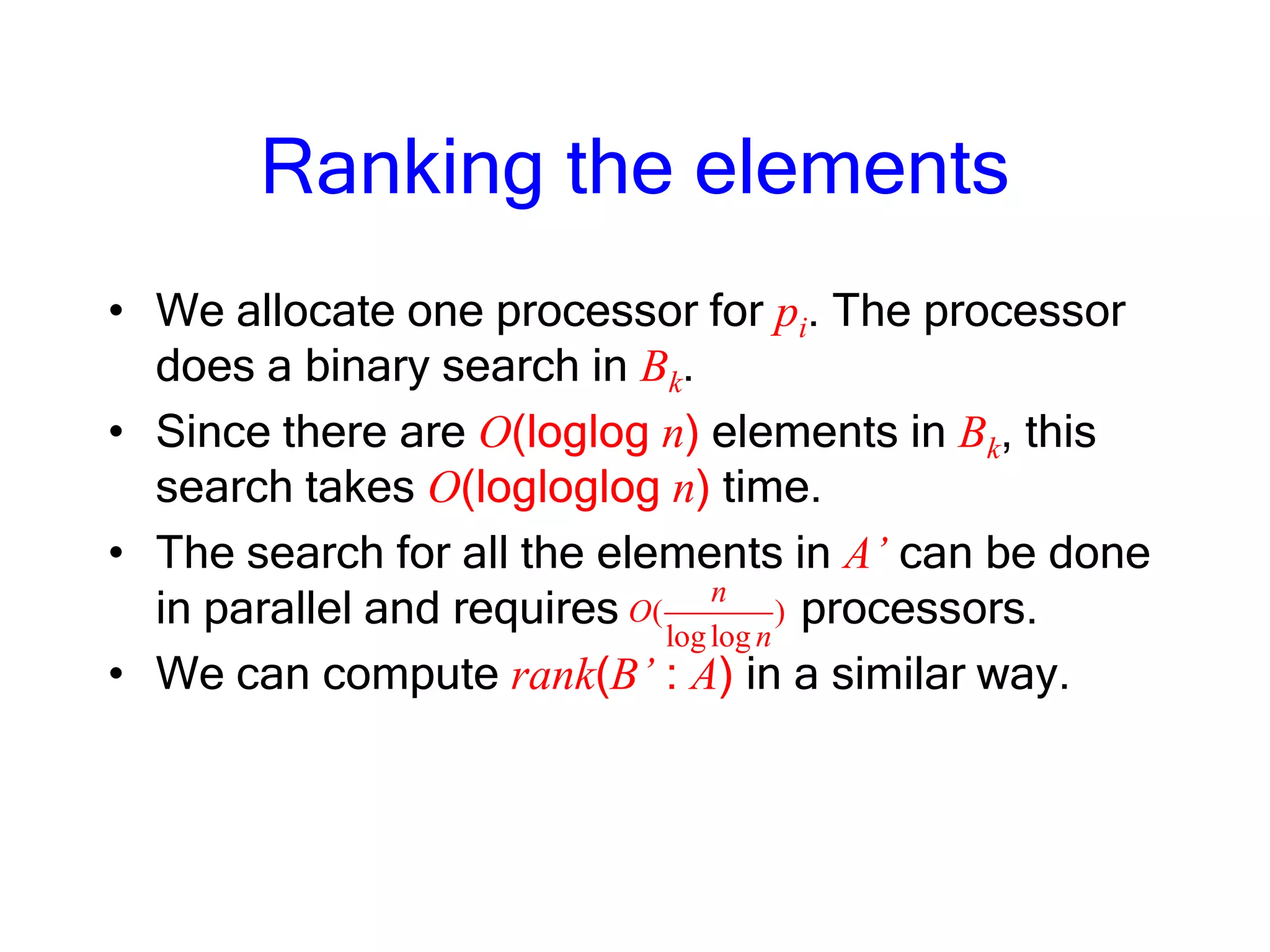

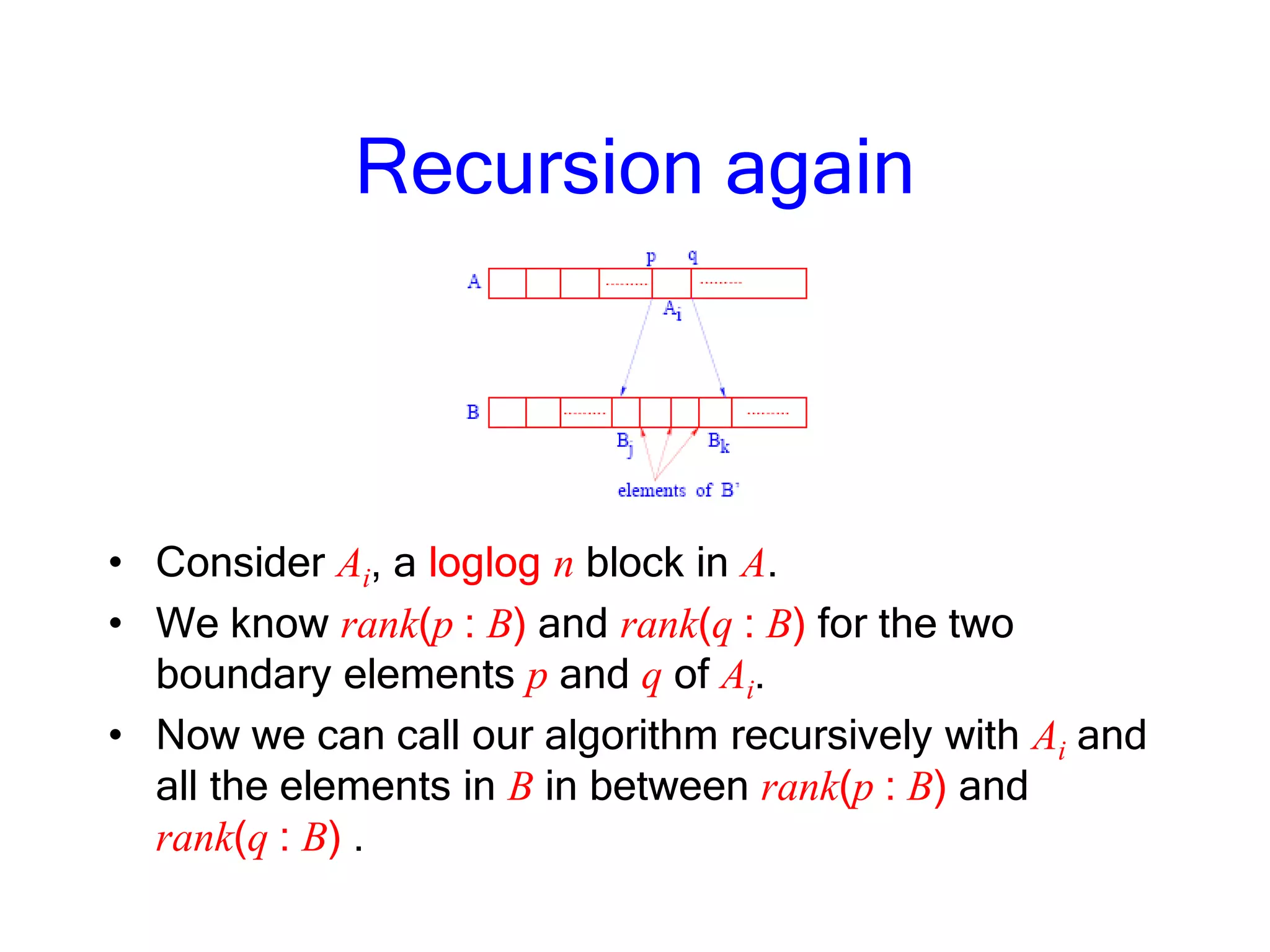

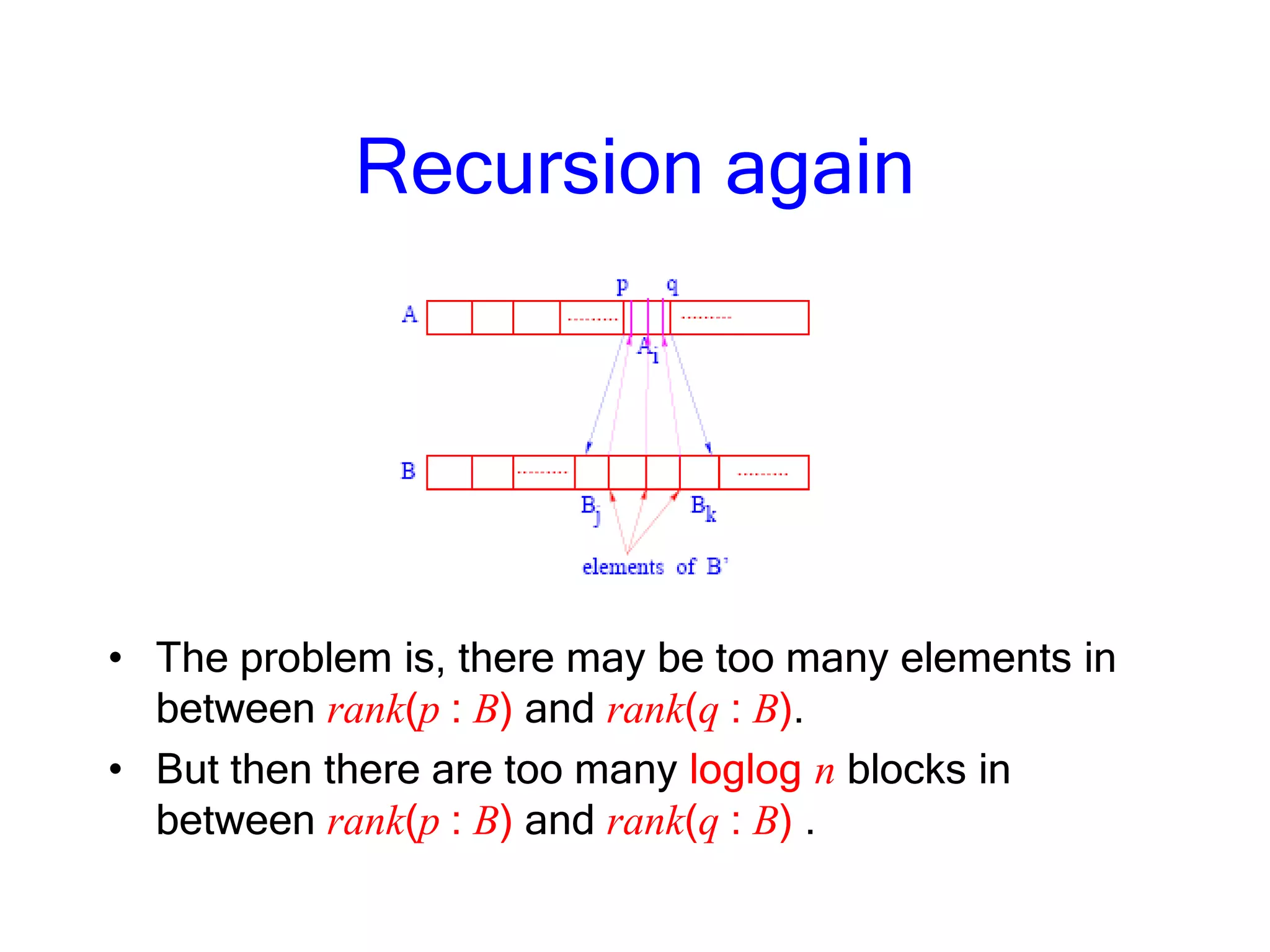

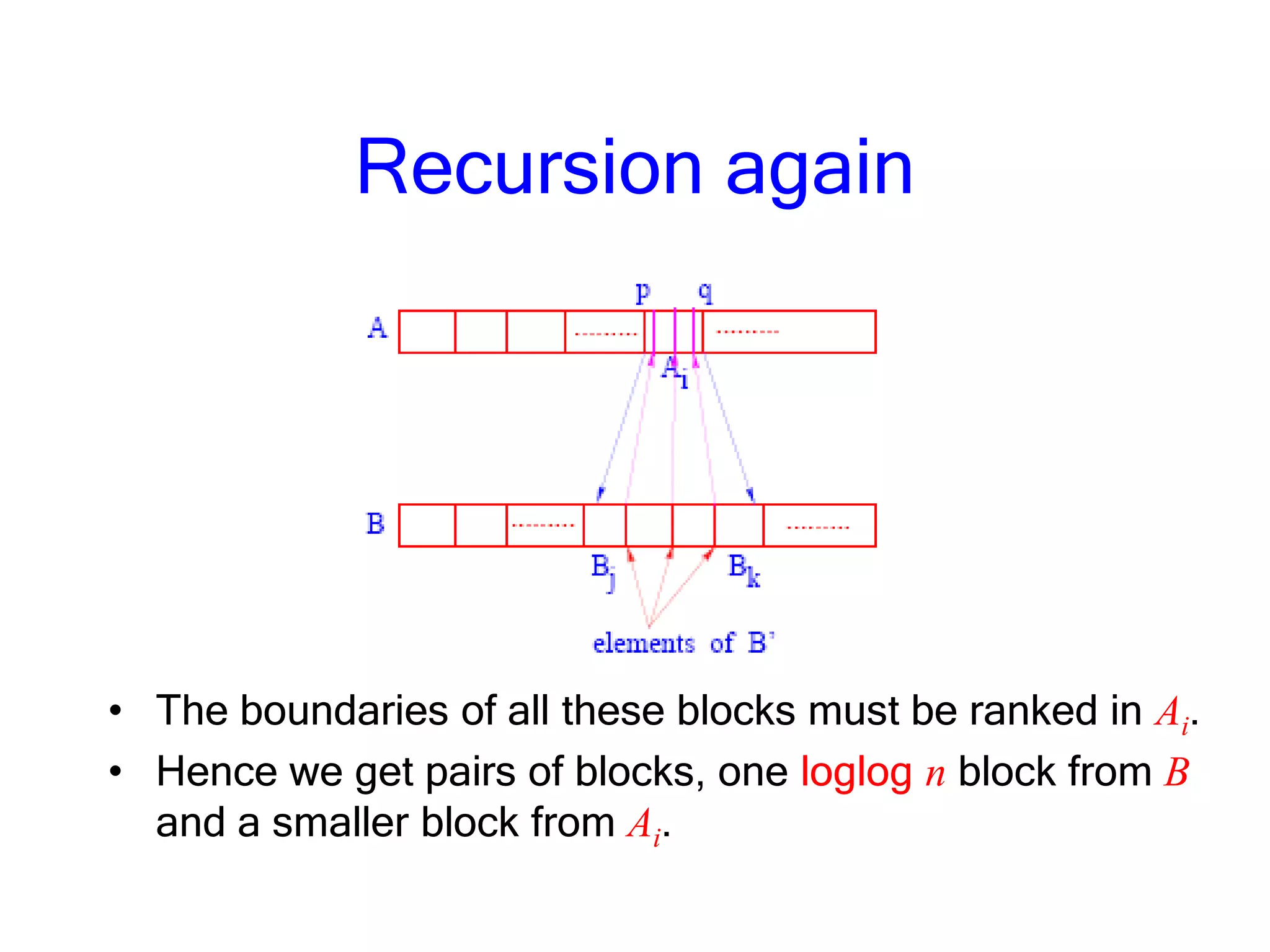

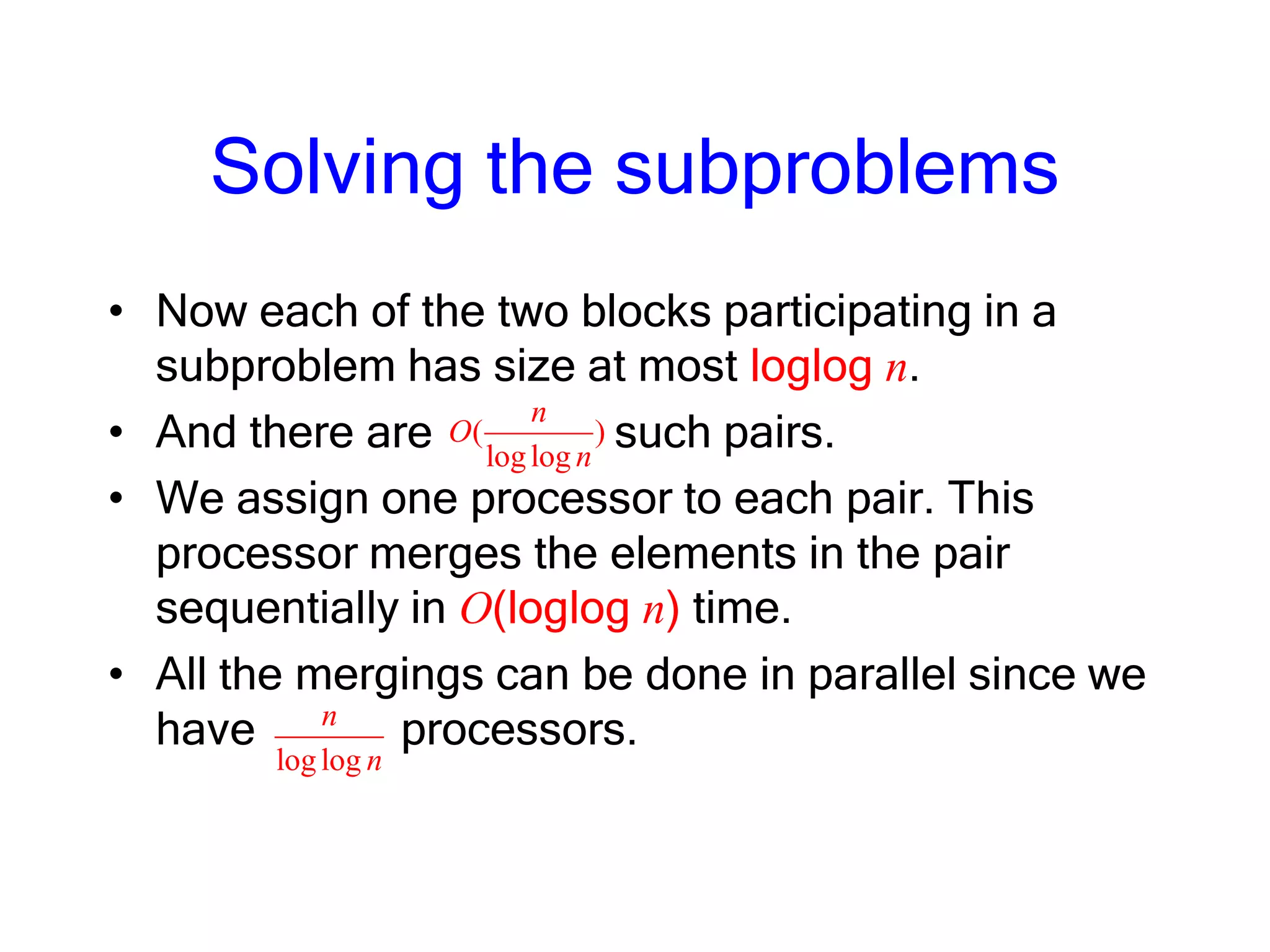

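- A parallel merging algorithm uses the parallel search to rank each sorted array in the other and merge two sorted arrays of total length n in O(log log n) time. Done directly this costs O(n log log n) work; the optimal version reduces the work to O(n) while keeping the O(log log n) time (paragraph 2).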

- An efficient sorting algorithm uses the optimal parallel merging algorithm in a merge sort approach to sort n elements in O(n log n) work and O(log n log log n) time (paragraph 3).
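The last two points can be sketched together: merging by ranking places every element at its own index plus its rank in the other array, and a bottom-up merge sort applies that merge level by level. This is a sequential illustration only. It ranks with the standard library's binary search rather than the parallel search, so it shows the structure of the approach and does not achieve the O(log log n) merging time. The names `merge_by_ranking` and `merge_sort` are hypothetical.

```python
from bisect import bisect_left, bisect_right


def merge_by_ranking(a, b):
    """Merge sorted lists a and b by computing each element's final position.

    The position of an element is its index in its own list plus its rank
    in the other list. Every position is independent of the others, which
    is what makes this formulation suitable for parallel execution.
    """
    out = [None] * (len(a) + len(b))
    for i, x in enumerate(a):
        # Count elements of b strictly smaller than x (ties: a goes first).
        out[i + bisect_left(b, x)] = x
    for j, y in enumerate(b):
        # Count elements of a smaller than or equal to y.
        out[j + bisect_right(a, y)] = y
    return out


def merge_sort(xs):
    """Bottom-up merge sort: O(log n) levels of pairwise merges."""
    runs = [[x] for x in xs]
    while len(runs) > 1:
        # All merges within one level are independent of each other.
        merged = [
            merge_by_ranking(runs[k], runs[k + 1])
            for k in range(0, len(runs) - 1, 2)
        ]
        if len(runs) % 2:
            merged.append(runs[-1])  # odd run out carries over to the next level
        runs = merged
    return runs[0] if runs else []


if __name__ == "__main__":
    print(merge_by_ranking([1, 3, 5], [2, 3, 6]))
    print(merge_sort([5, 2, 9, 1, 5, 6]))
```

Using `bisect_left` for one array and `bisect_right` for the other breaks ties consistently, so equal elements never compete for the same output position.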