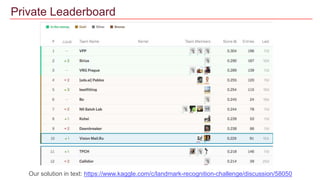

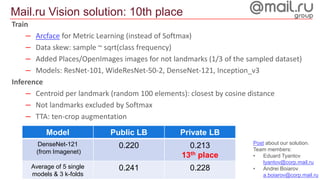

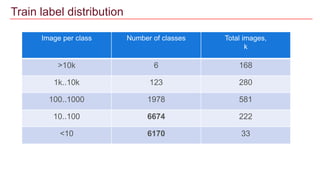

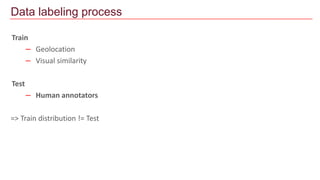

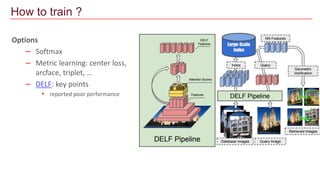

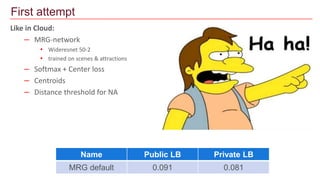

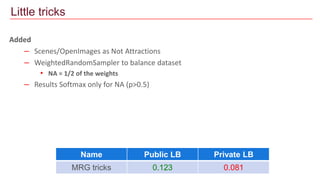

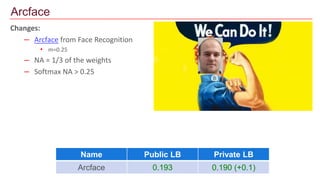

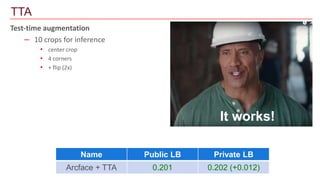

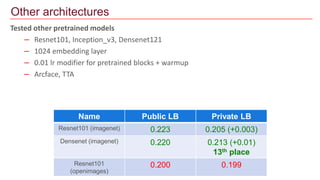

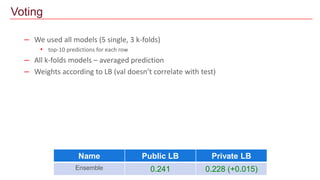

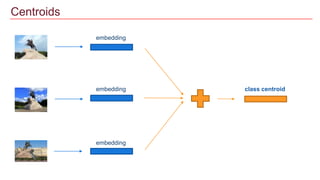

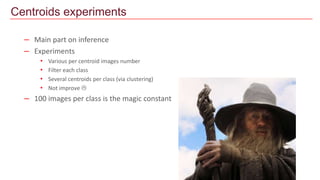

This document summarizes a Kaggle competition on landmark recognition, covering the dataset, the evaluation metric, and the leading approaches. The dataset contains over 1 million training images across nearly 15,000 landmark classes. Models such as ResNet and DenseNet were trained with metric learning and class-balanced sampling. At inference time, each query image was assigned to the landmark class with the closest centroid. Ensembling multiple models and test-time augmentation improved results. The solution described here combined ArcFace metric learning, balanced sampling, and kNN, and placed 10th on the private leaderboard.
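The closest-centroid inference step mentioned above can be sketched as follows. This is a minimal illustration, not the competitors' code. It assumes embeddings are compared by cosine similarity and that a class centroid is the renormalized mean of that class's unit-length embeddings. The function names `l2_normalize`, `build_centroids`, and `nearest_centroid` are hypothetical.

```python
import math
from collections import defaultdict


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def build_centroids(embeddings, labels):
    """Assumed centroid definition: mean of unit embeddings per class, renormalized."""
    sums = {}
    counts = defaultdict(int)
    for vec, label in zip(embeddings, labels):
        unit = l2_normalize(vec)
        if label not in sums:
            sums[label] = [0.0] * len(unit)
        sums[label] = [s + u for s, u in zip(sums[label], unit)]
        counts[label] += 1
    return {
        label: l2_normalize([s / counts[label] for s in total])
        for label, total in sums.items()
    }


def nearest_centroid(query, centroids):
    """Return (label, cosine similarity) of the centroid closest to the query."""
    q = l2_normalize(query)
    scored = {
        label: sum(a * b for a, b in zip(q, c)) for label, c in centroids.items()
    }
    best = max(scored, key=scored.get)
    return best, scored[best]


# Toy 2-D embeddings standing in for CNN descriptors.
centroids = build_centroids(
    [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0], [0.1, 0.9]],
    ["tower", "tower", "bridge", "bridge"],
)
label, similarity = nearest_centroid([0.9, 0.1], centroids)
```

With one centroid per class, a query costs one comparison per class rather than one per training image, which matters with roughly 15,000 classes and over a million images.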

![4th place

– ResNet-50 for not attractions recognition (OpenImages)

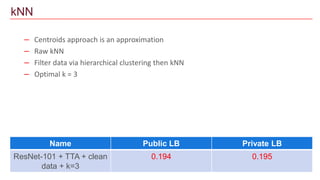

– kNN:

• k = 5

• scores[class_id] = sum(cos(query_image, index) for index in K_closest_images)

• scores[class_id] /= min(K, number of samples in train dataset with class=class_id)

• label = argmax(scores), confidence = scores[label]

– 100 augmented local crops from each image + kNN

– Simple voting

– Pure single model, overfitting

Other competitors

Name Public LB Private LB

ods 0.323 0.255](https://image.slidesharecdn.com/2018-06-13-kaggle-attractions-cvpr-181124122049/85/Kaggle-Google-Landmark-recognition-25-320.jpg)
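The kNN scoring rule listed for the 4th-place solution can be sketched in plain Python as below. This is an illustrative reading of the slide, not the team's implementation. It assumes that only neighbours labelled with a given class contribute to that class's sum, and that the index is small enough for a brute-force search. The helper names `cosine` and `knn_predict` are hypothetical.

```python
import math
from collections import Counter, defaultdict


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def knn_predict(query, index_vectors, index_labels, k=5):
    """Return (label, confidence) following the scoring rule on the slide."""
    class_counts = Counter(index_labels)

    # Brute-force search: rank all indexed images by similarity, keep the top k.
    neighbours = sorted(
        ((cosine(query, vec), label)
         for vec, label in zip(index_vectors, index_labels)),
        key=lambda pair: pair[0],
        reverse=True,
    )[:k]

    # Sum the similarities of the retrieved neighbours per class (assumption:
    # a neighbour only votes for its own class).
    scores = defaultdict(float)
    for similarity, label in neighbours:
        scores[label] += similarity

    # Divide by min(k, class size) so that a class with fewer than k training
    # images can still reach a high score.
    for label in scores:
        scores[label] /= min(k, class_counts[label])

    best = max(scores, key=scores.get)
    return best, scores[best]


# Toy example: one exact match from a rare class vs. a more common class.
vectors = [[1.0, 0.0]] + [[1.0, 1.0]] * 5
labels = ["rare"] + ["common"] * 5
label, confidence = knn_predict([1.0, 0.0], vectors, labels, k=3)
```

In the toy example the rare class wins only because of the `min(k, class size)` normalisation. With raw sums, the two weaker neighbours from the common class would outvote the single exact match.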