This document provides an overview of the evolution of the java.util.concurrent.ConcurrentHashMap class. It discusses the motivations and improvements between different versions (v5, v6, v8) of the implementation, moving from using segments with reentrant locks in v5, to using unsafe operations and spin locks in v6, and removing segments and using nodes as locks in v8. It also describes new bulk operations introduced in v8 like search, forEach, and reduce.

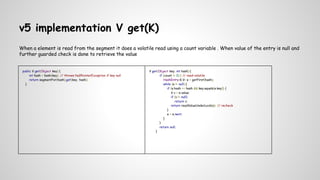

![v5 implementation V put(K,V)

● The segment gives to volatile reads and guarded writes by locks

● the segment has volatile count which is incremented in the put method guarded by locks

public V put(K key, V value) {

if (value == null)

throw new NullPointerException();

int hash = hash(key);

return segmentFor(hash).put(key, hash, value, false);

}

V put(K key, int hash, V value, boolean onlyIfAbsent) {

lock();

try {

int c = count;

if (c++ > threshold) // ensure capacity

rehash();

HashEntry[] tab = table;

int index = hash & (tab.length - 1);

HashEntry<K,V> first = (HashEntry<K,V>) tab[index];

HashEntry<K,V> e = first;

while (e != null && (e.hash != hash || !key.equals(e.key)))

e = e.next;

V oldValue;

if (e != null) {

oldValue = e.value;

if (!onlyIfAbsent)

e.value = value;

}

else {

oldValue = null;

tab[index] = new HashEntry<K,V>(key, hash, first, value);

count = c; // write-volatile

}

return oldValue;

} finally {

unlock(); }

}](https://image.slidesharecdn.com/java-150113085256-conversion-gate02/85/Java-util-concurrent-concurrent-hashmap-5-320.jpg)