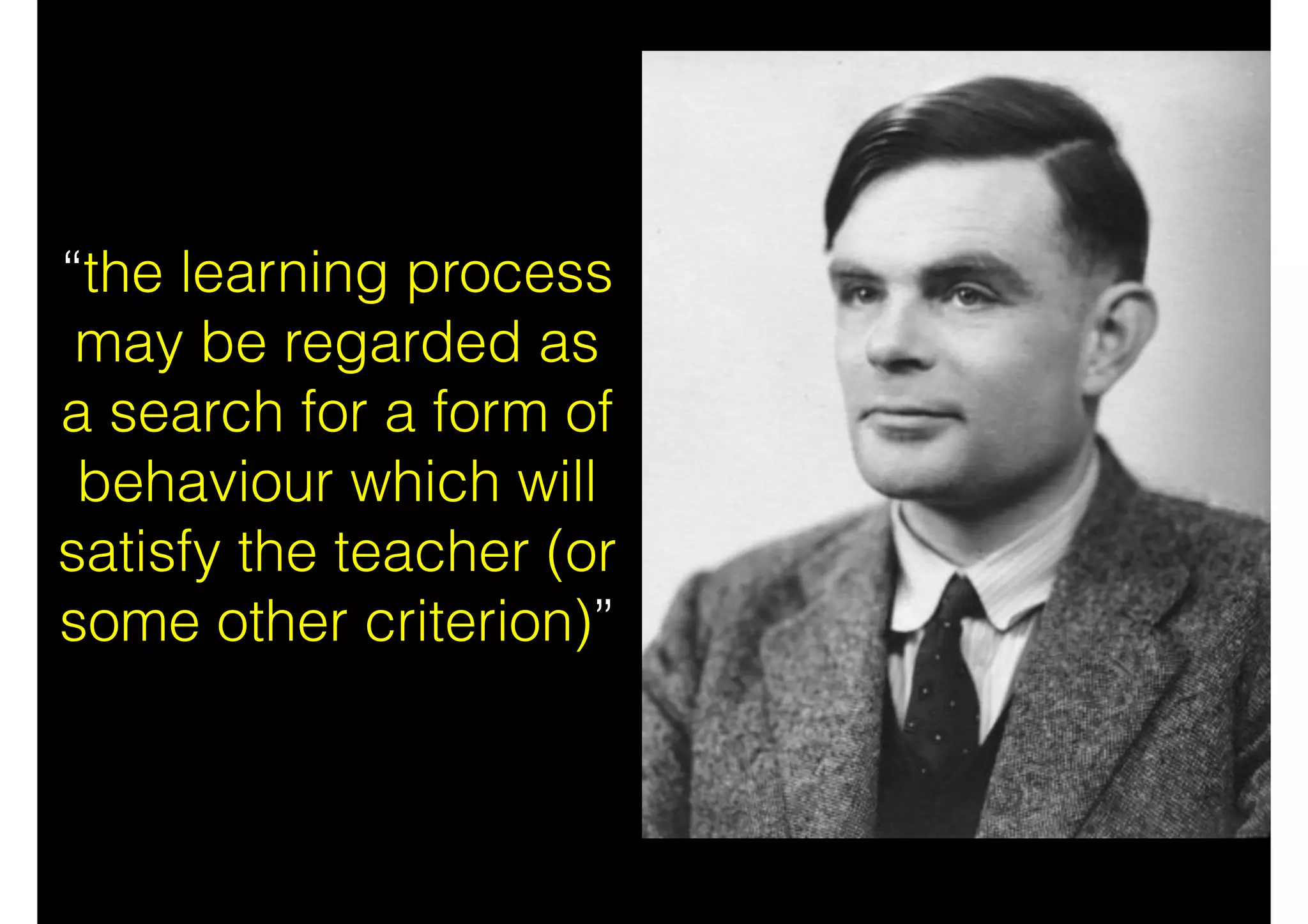

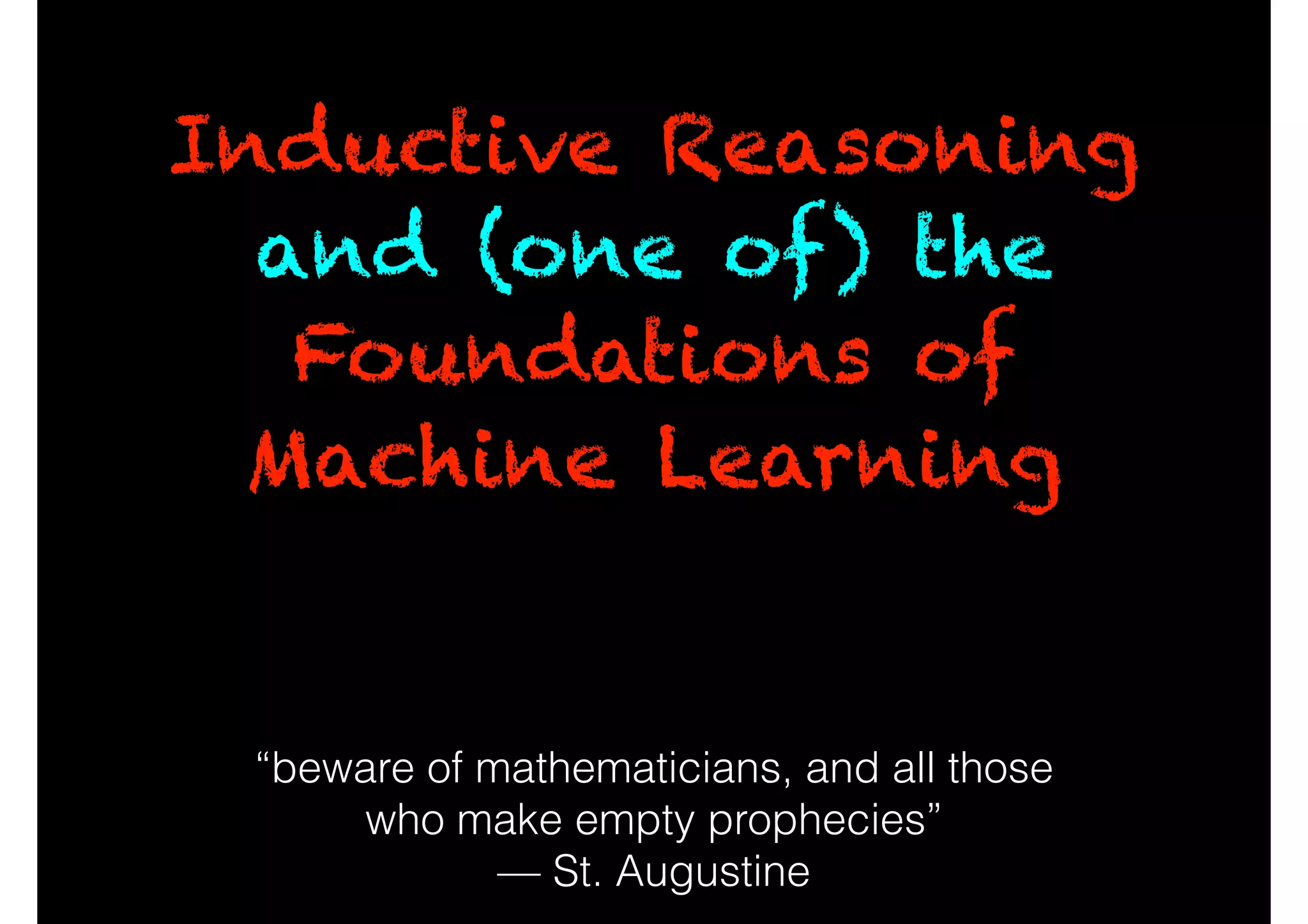

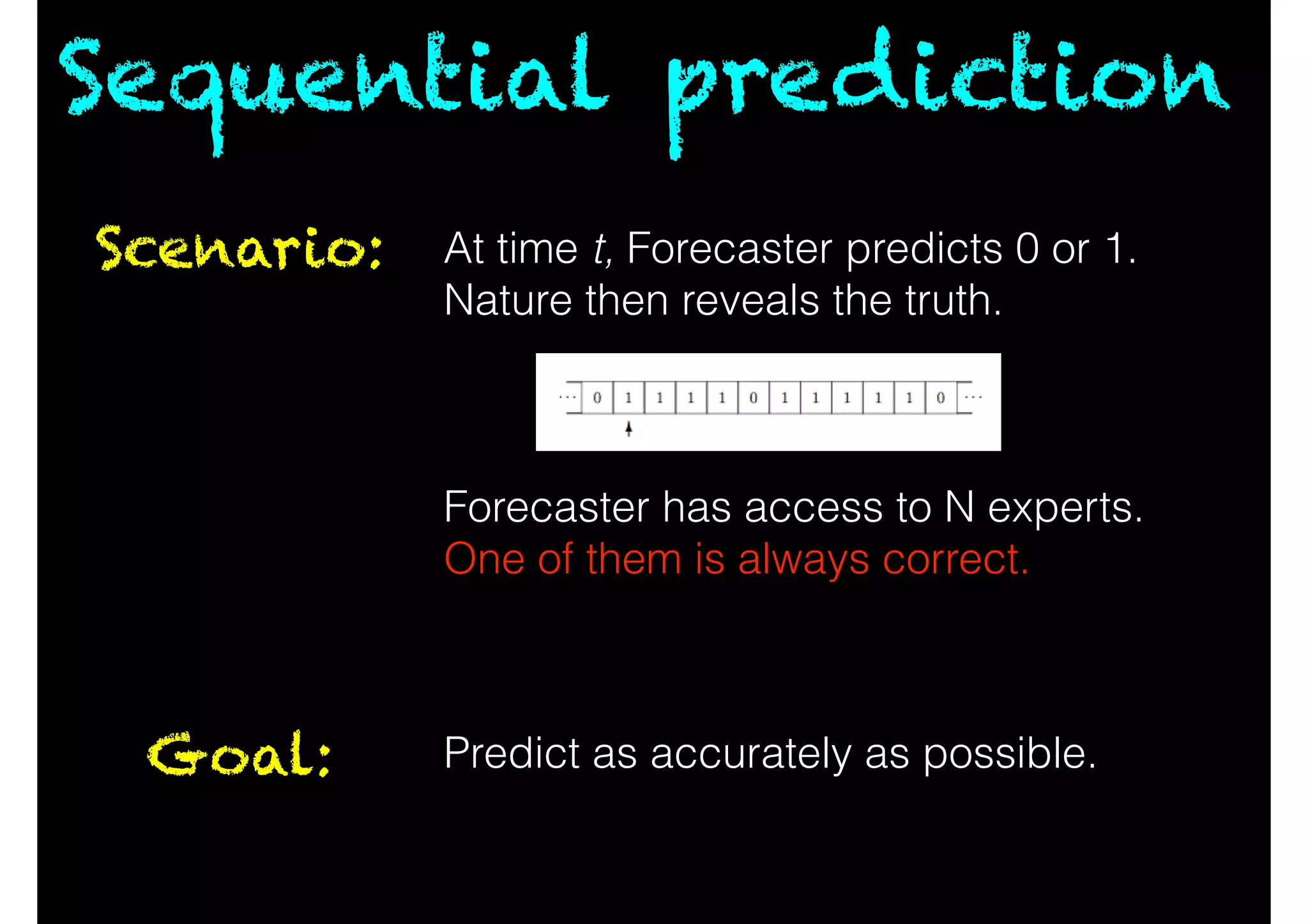

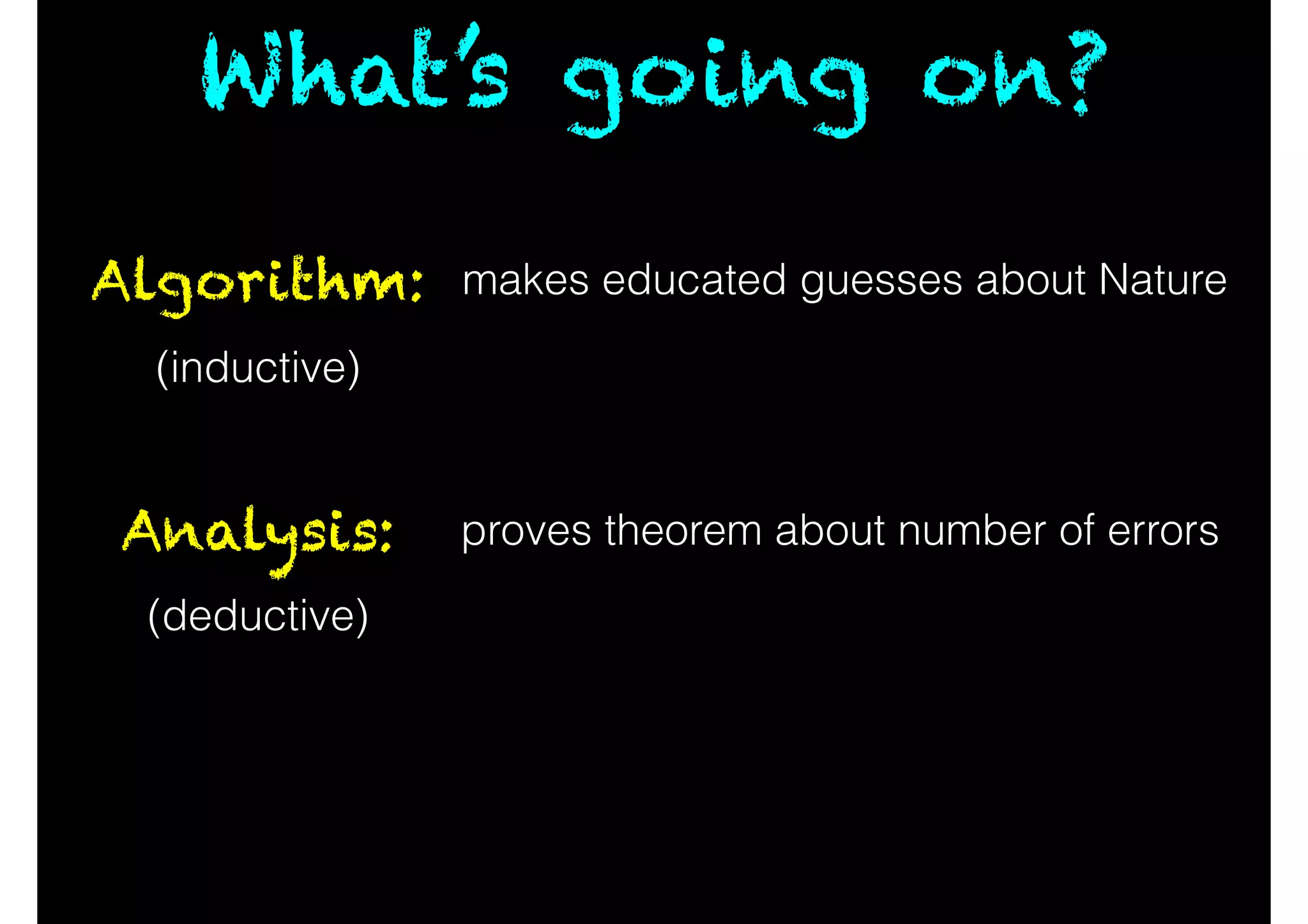

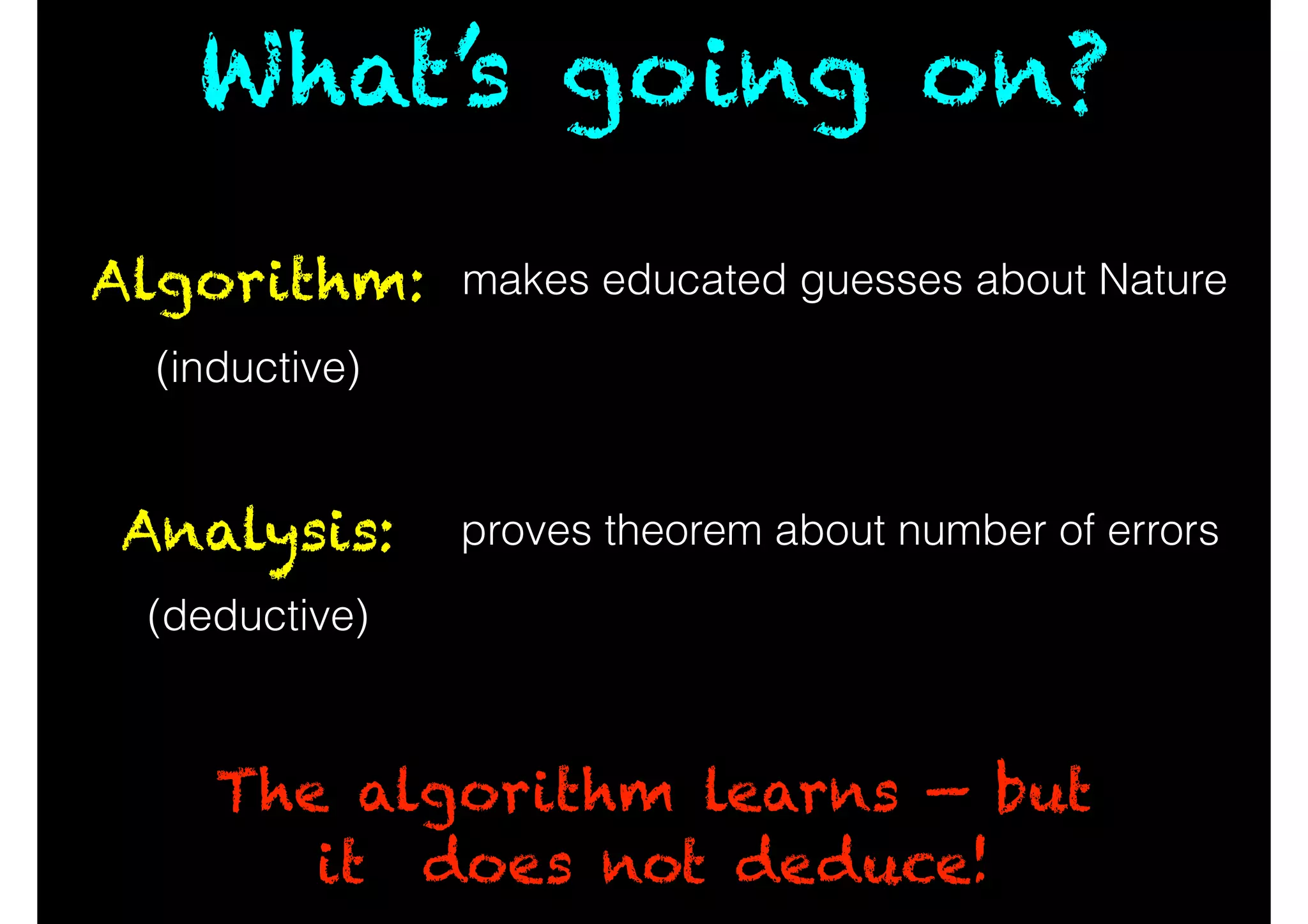

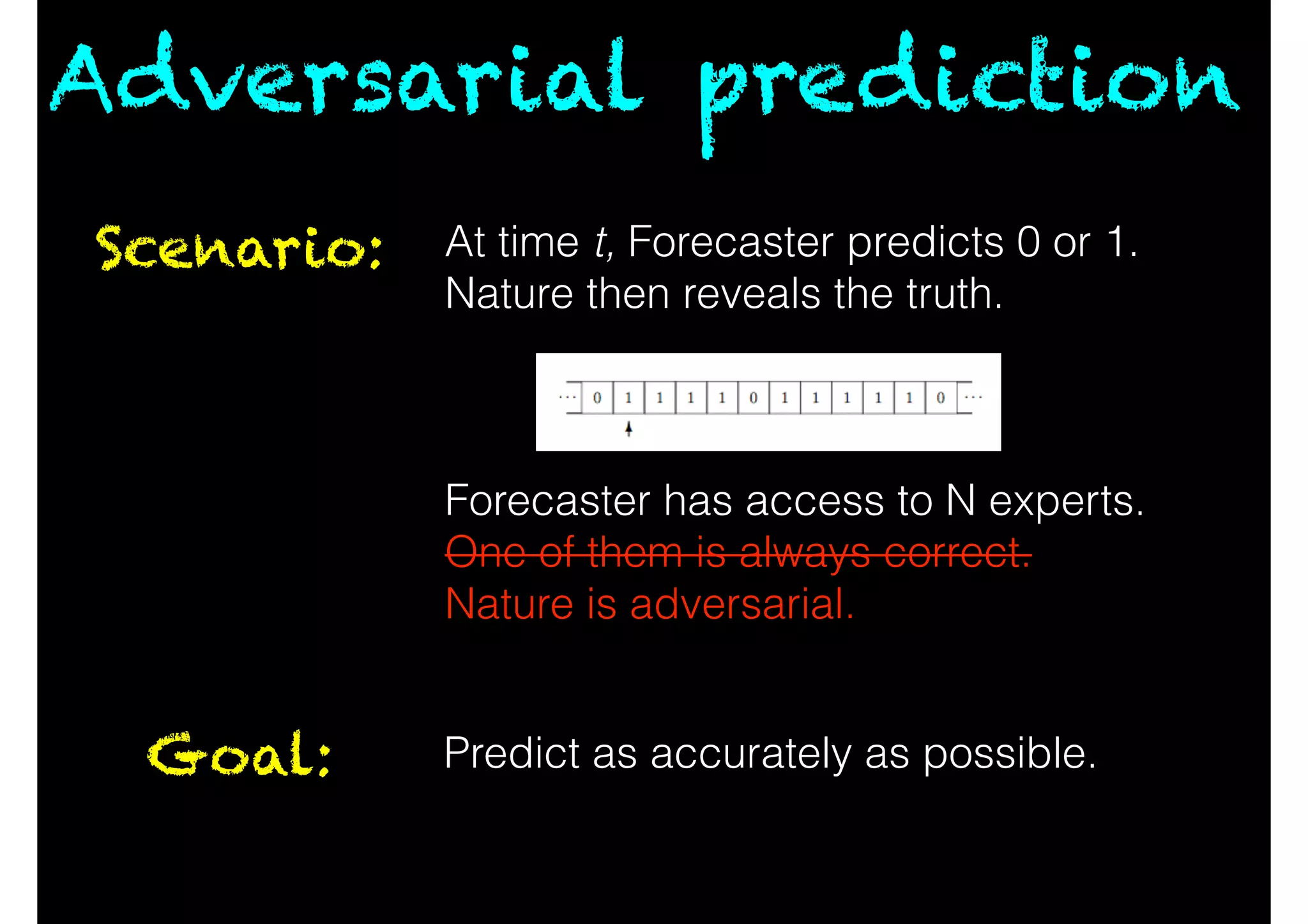

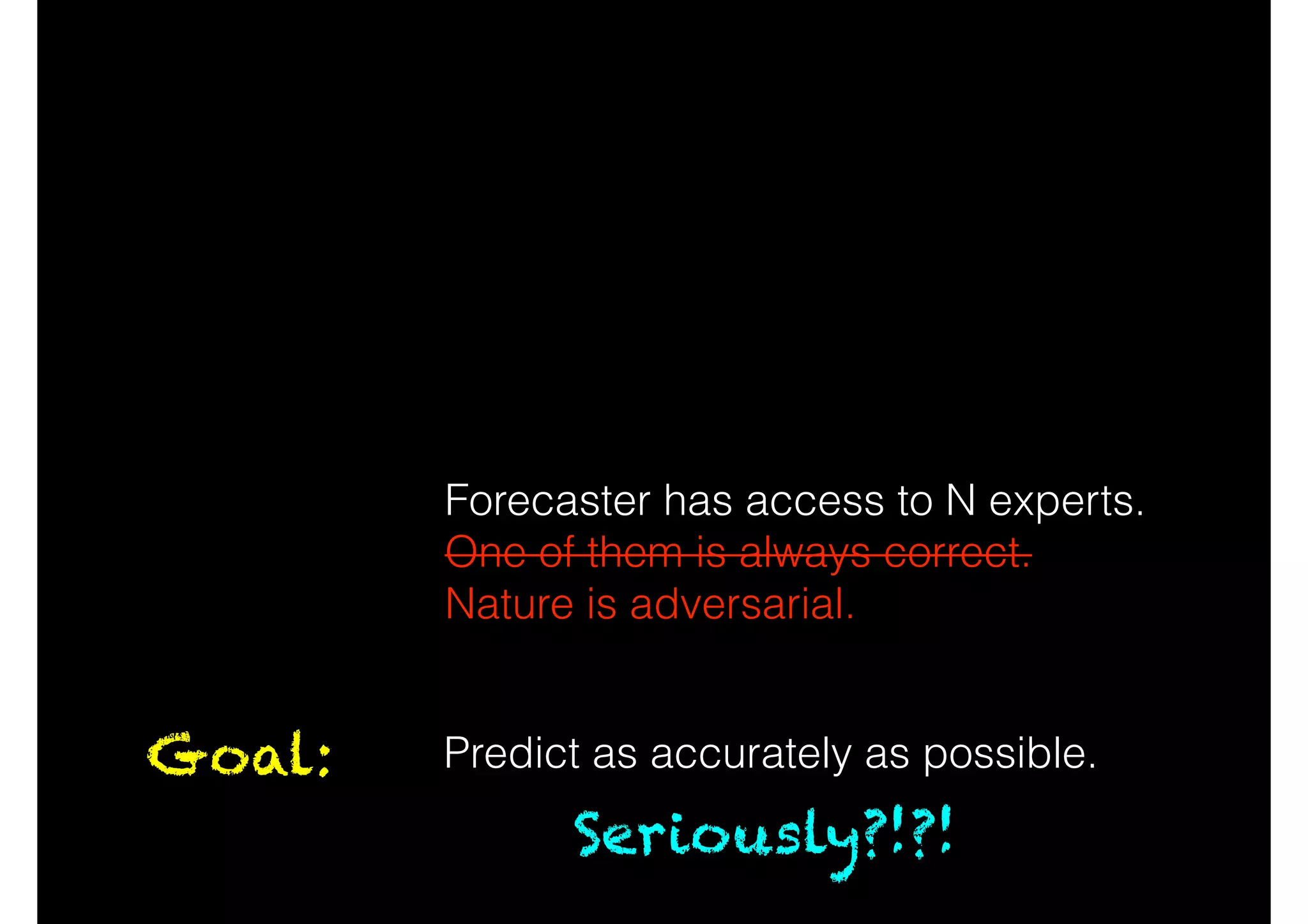

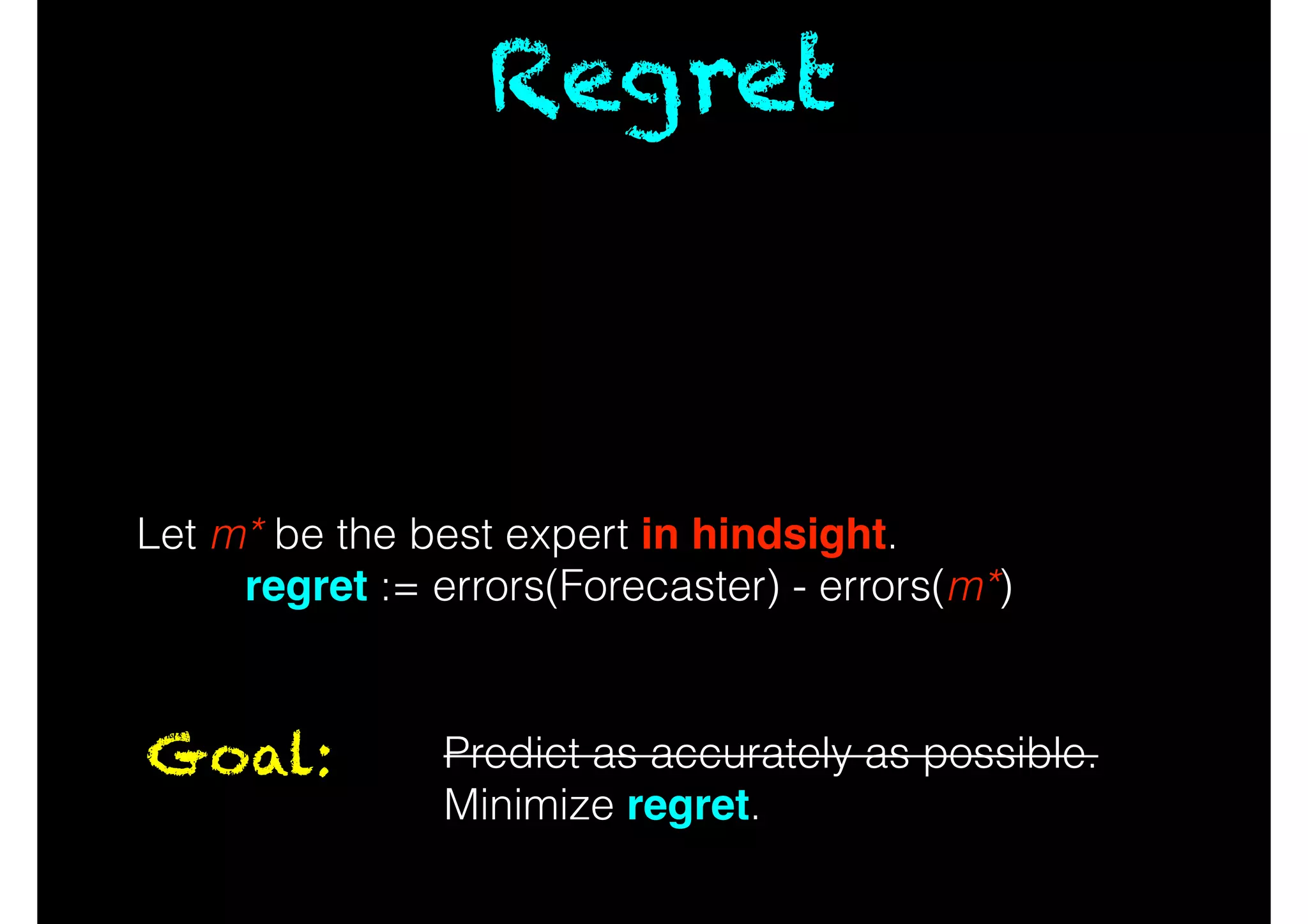

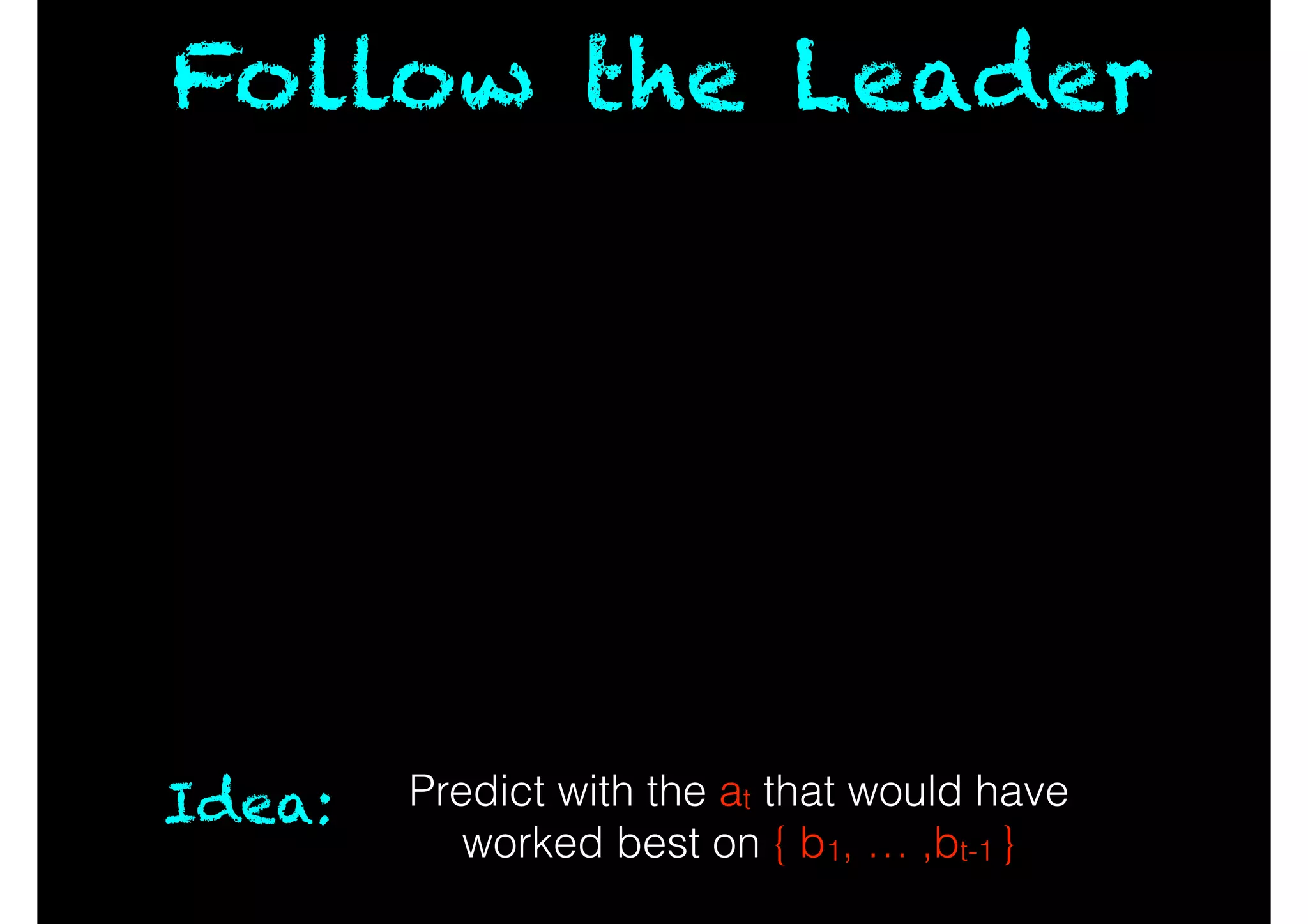

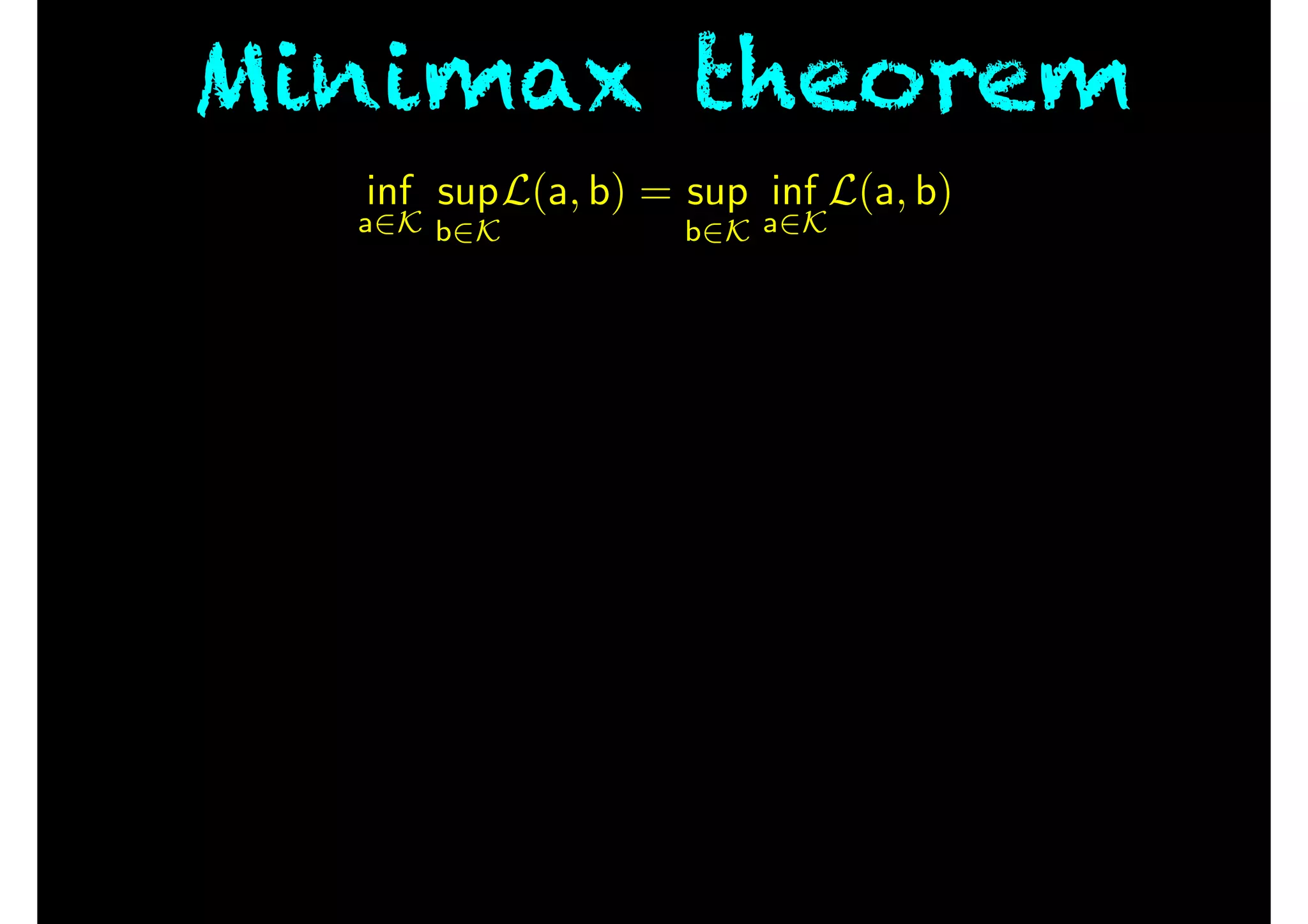

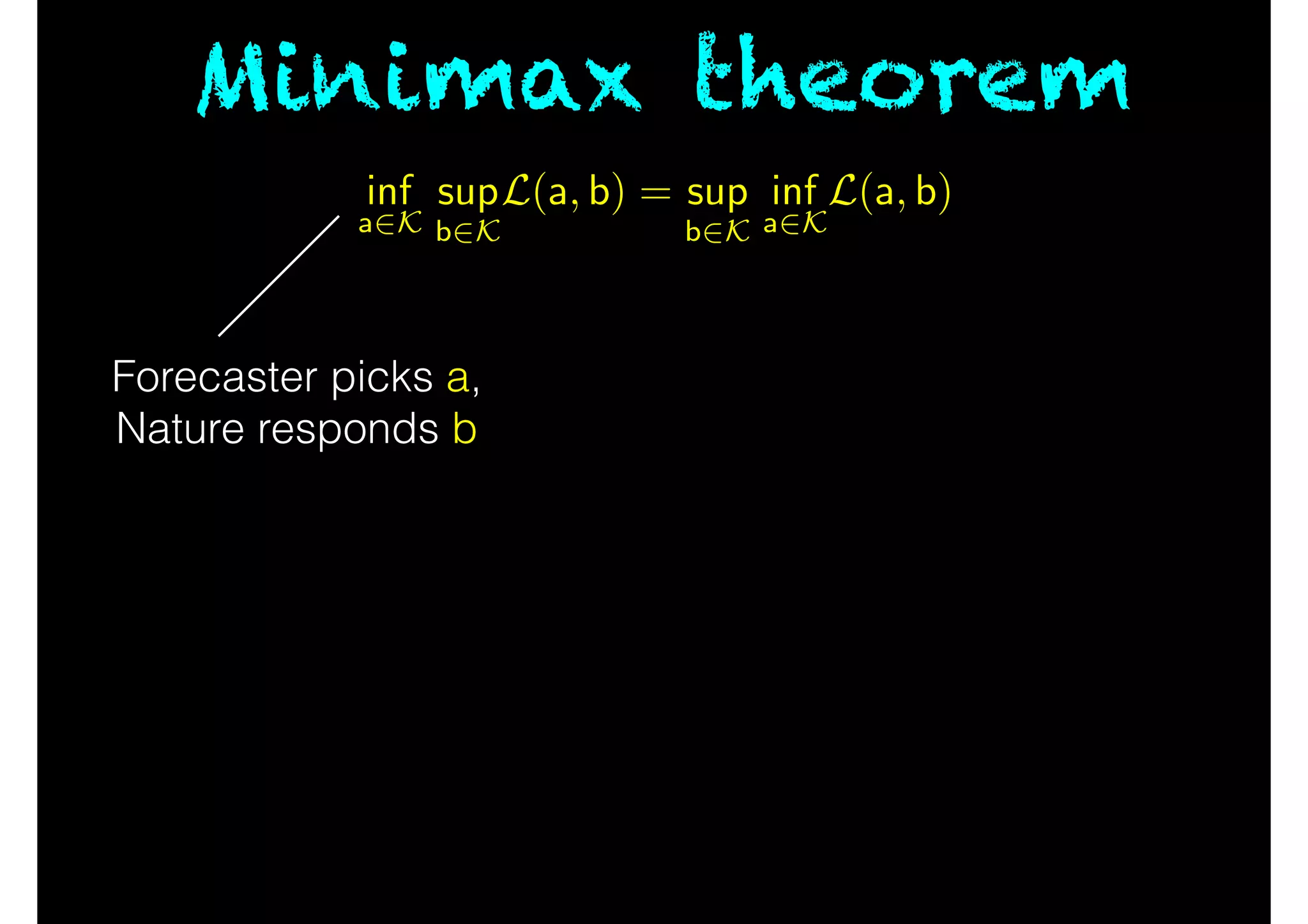

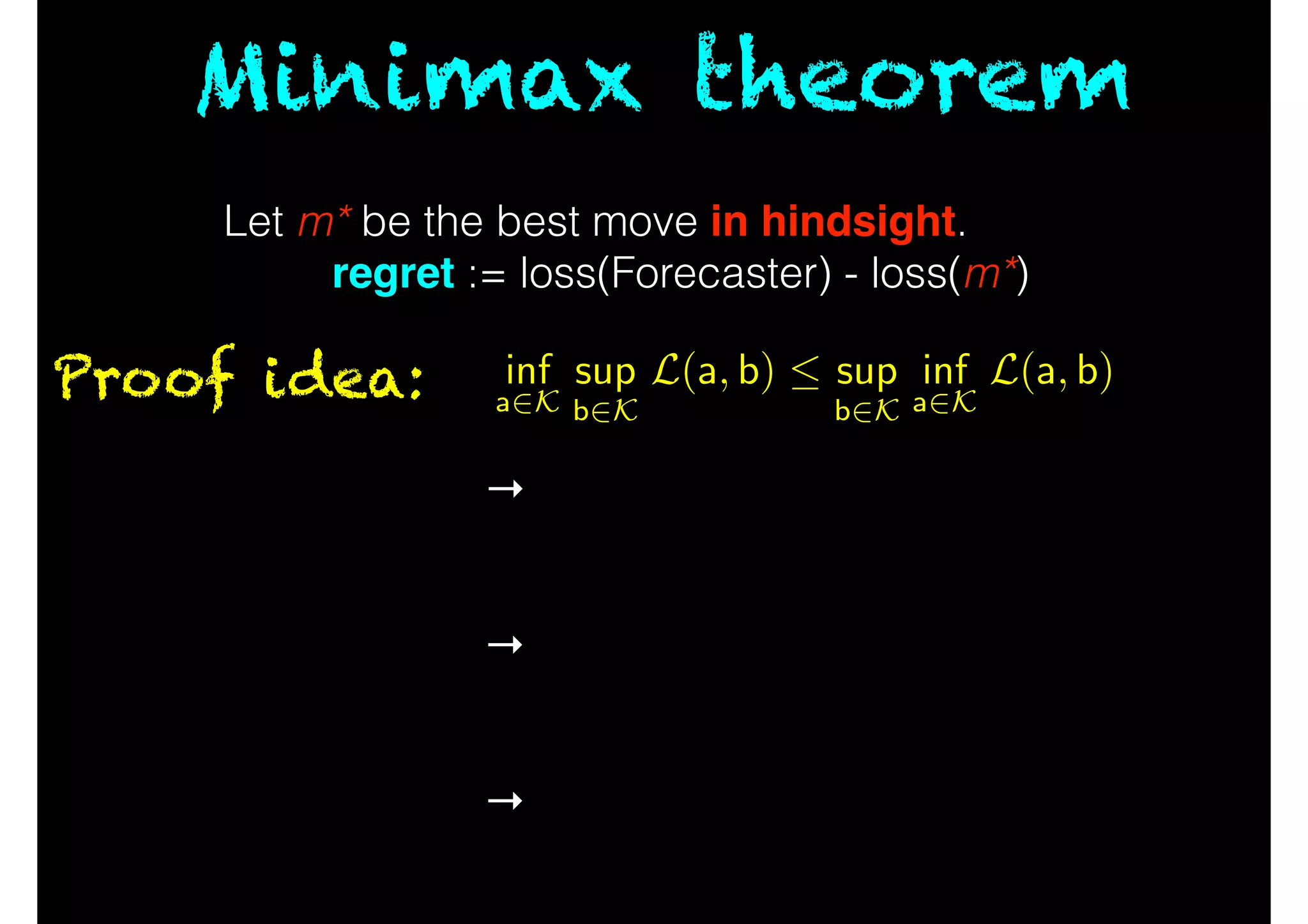

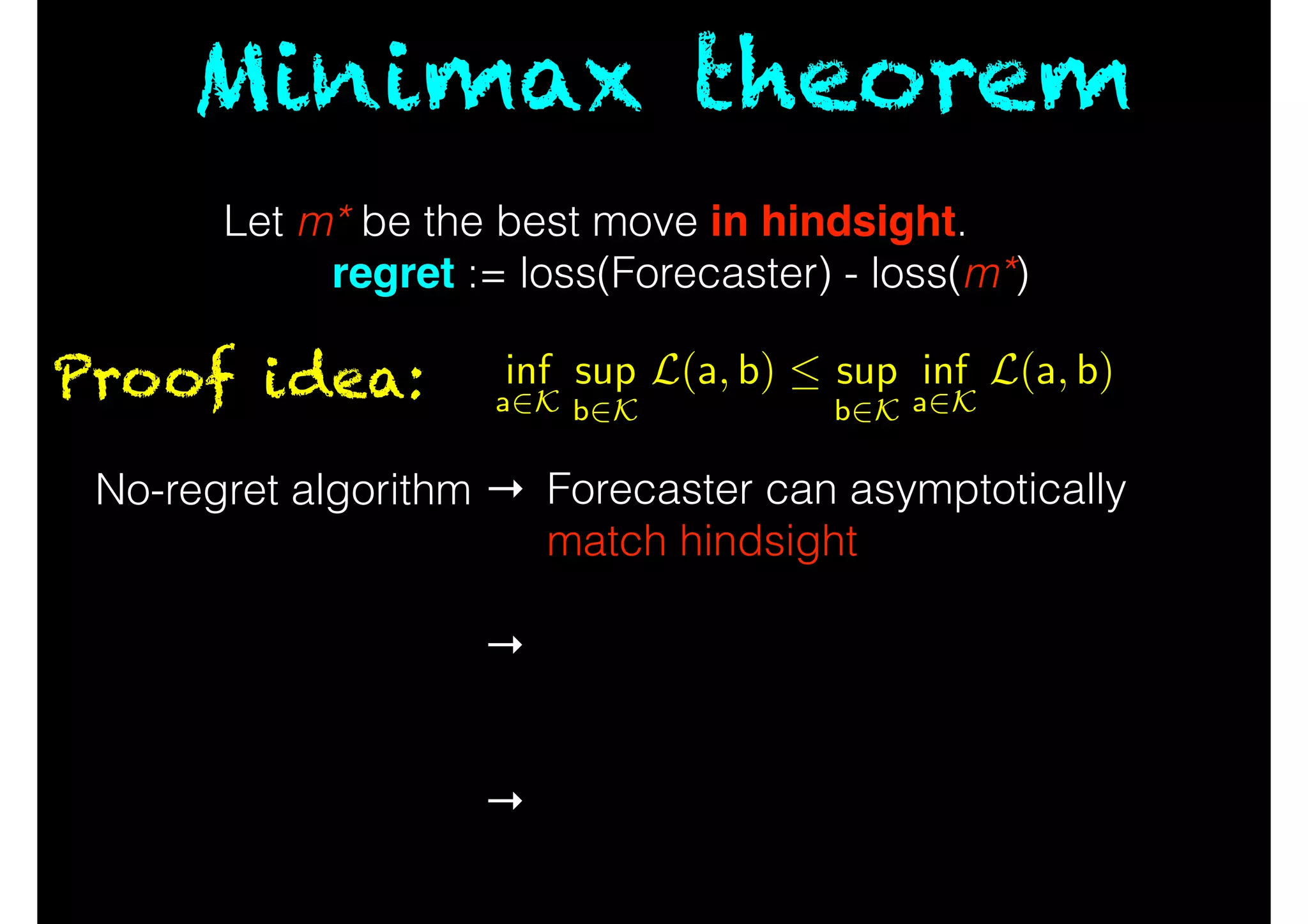

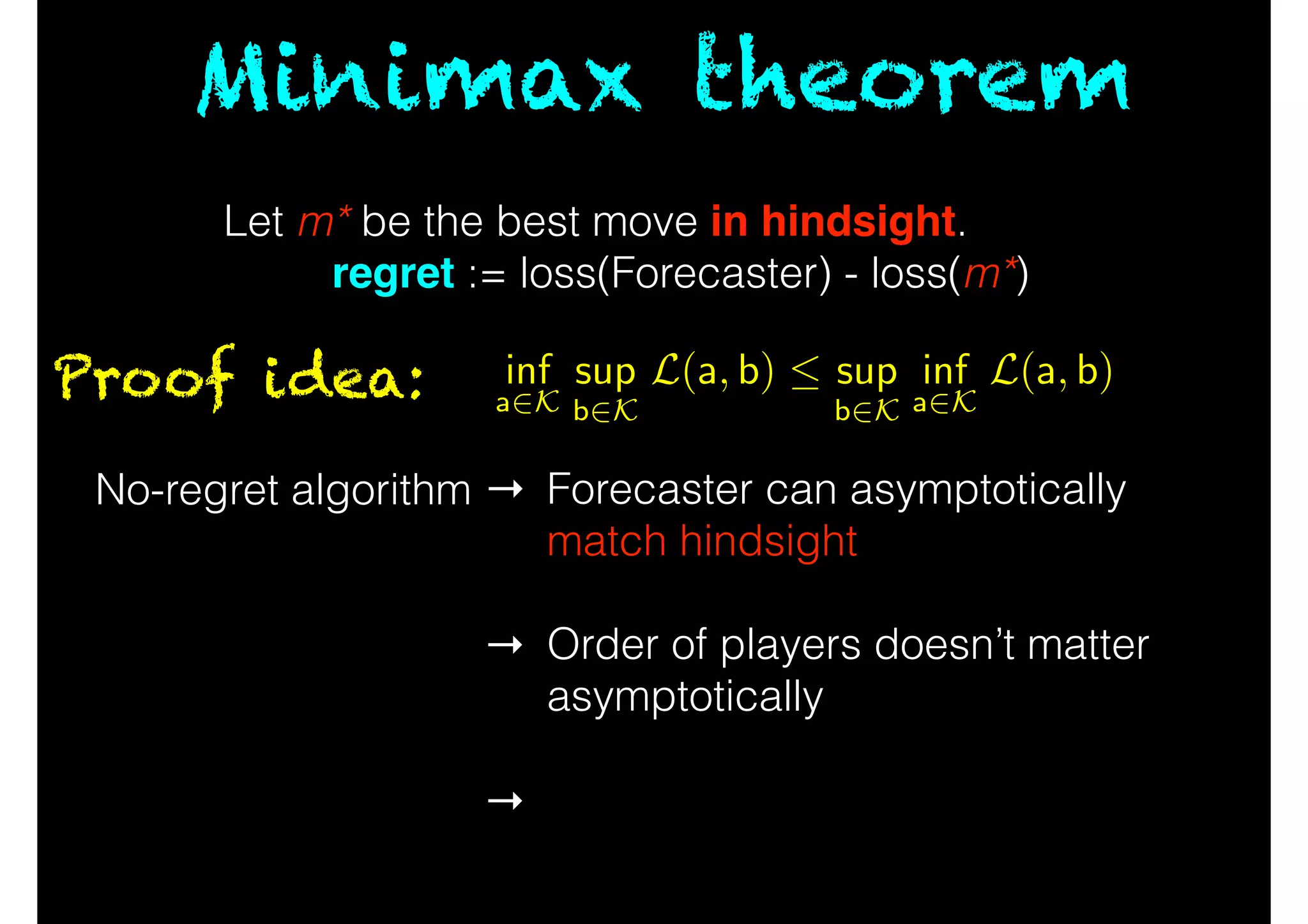

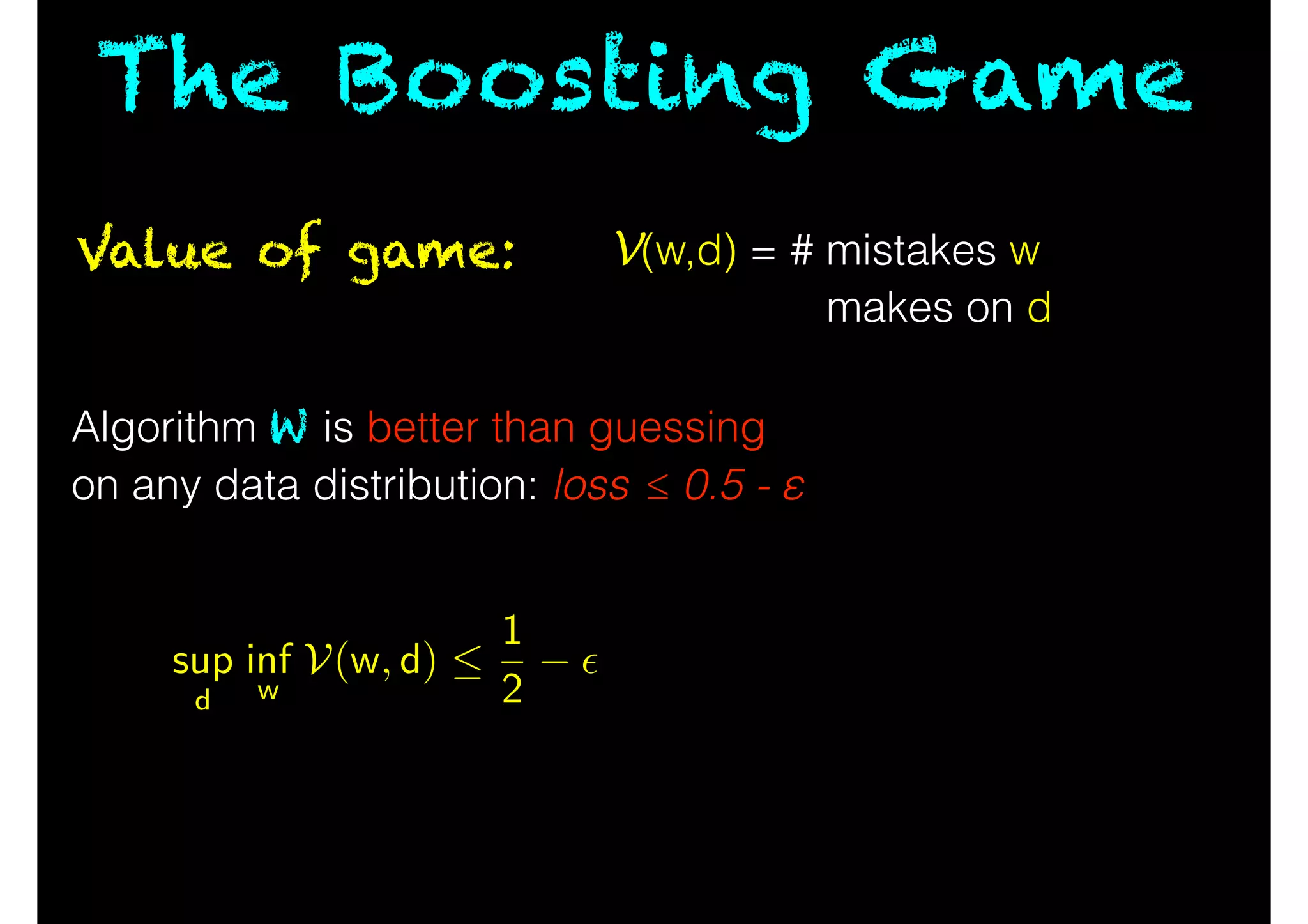

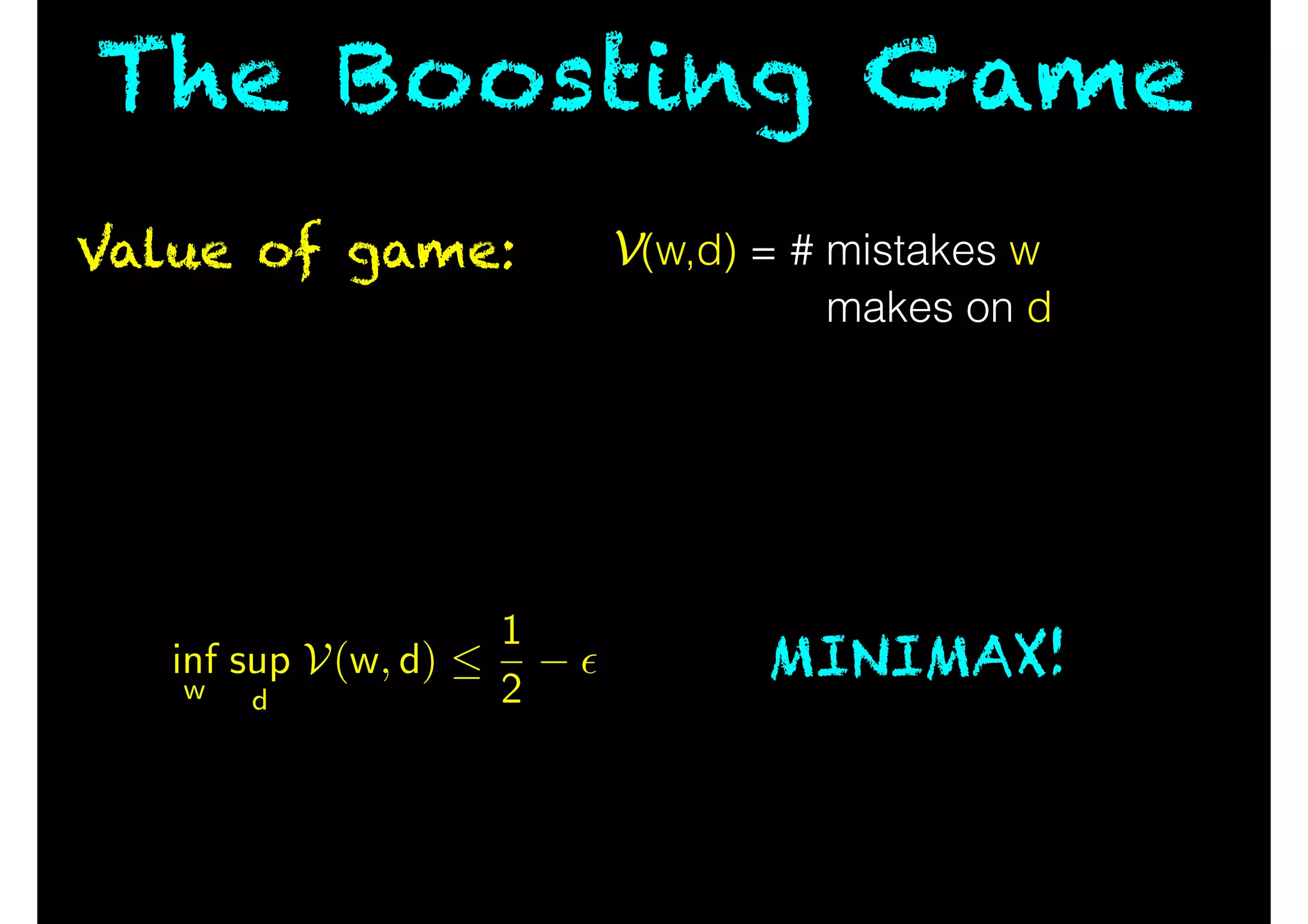

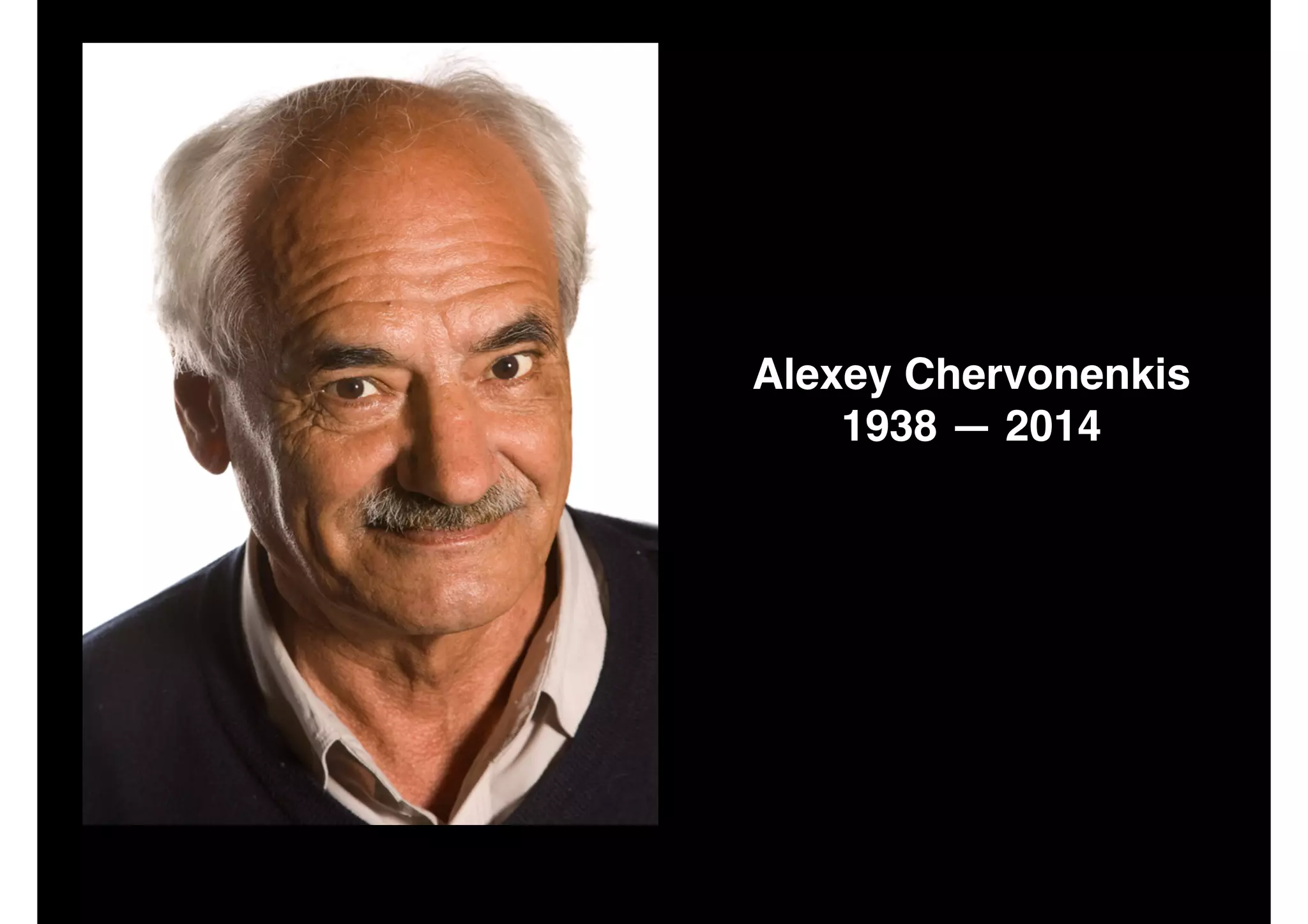

The document discusses the principles of inductive reasoning and various machine learning algorithms, exploring how algorithms learn from mistakes rather than relying solely on deductive reasoning. It outlines scenarios for sequential and adversarial prediction, emphasizing the importance of minimizing regret and the challenges posed by non-convex optimization in deep learning. The document concludes by highlighting the advancements in deep learning and mentions influential figures in the field.

![Next: Boy ponders the errors of his ways

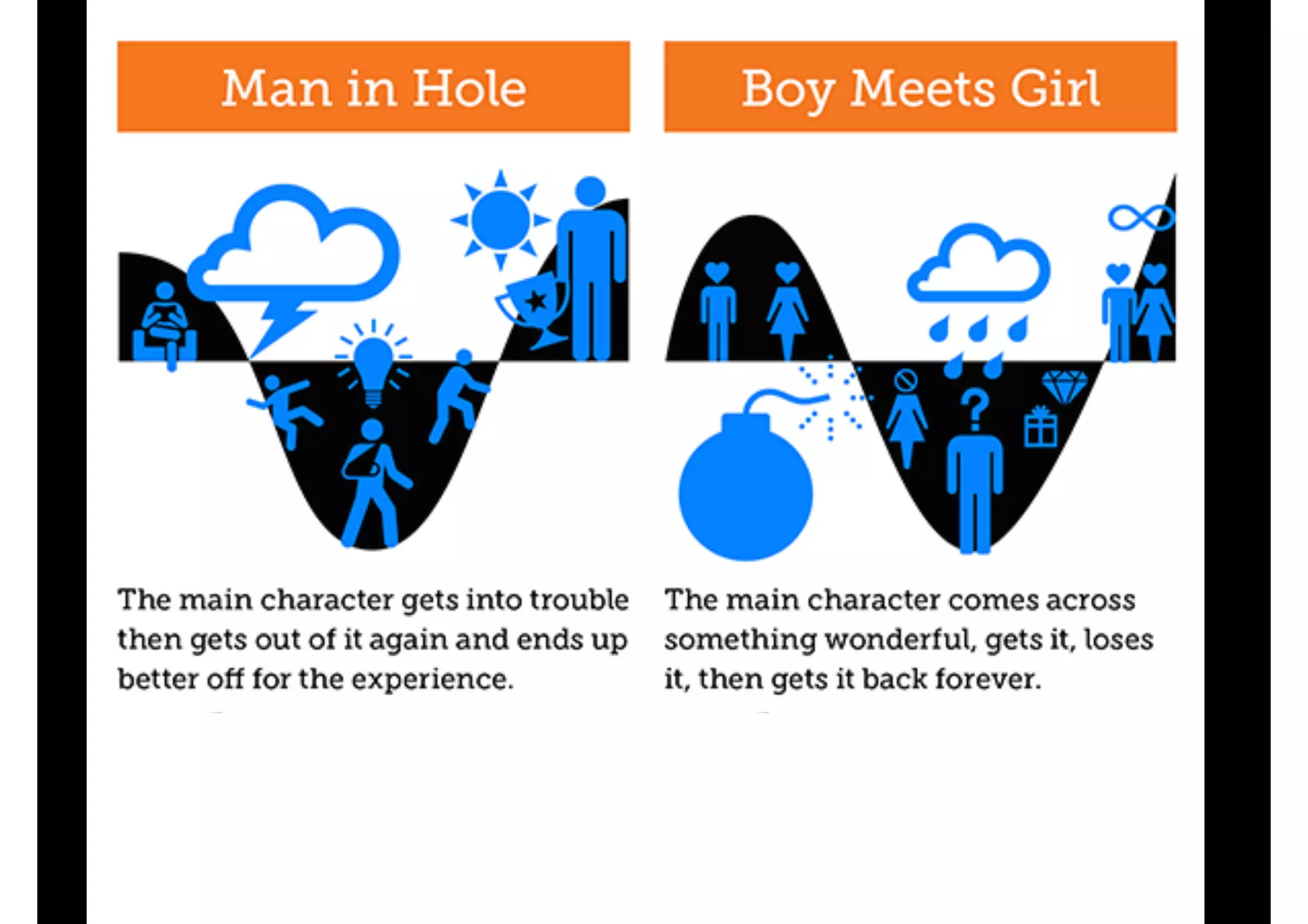

“this book is composed […] upon one very simple theme […] that we can learn from our mistakes”

Karl Popper, Conjectures and Refutations](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-17-2048.jpg)

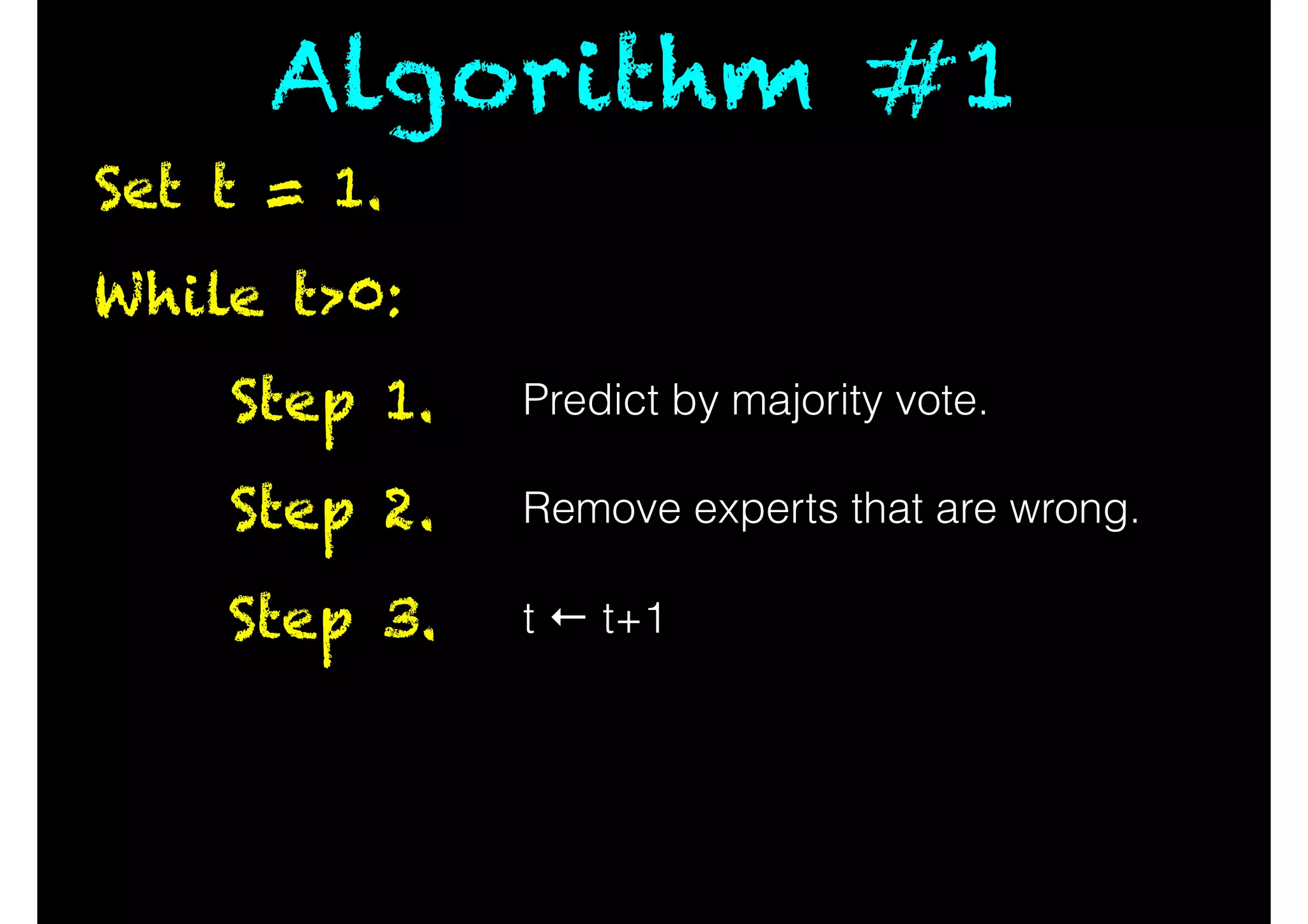

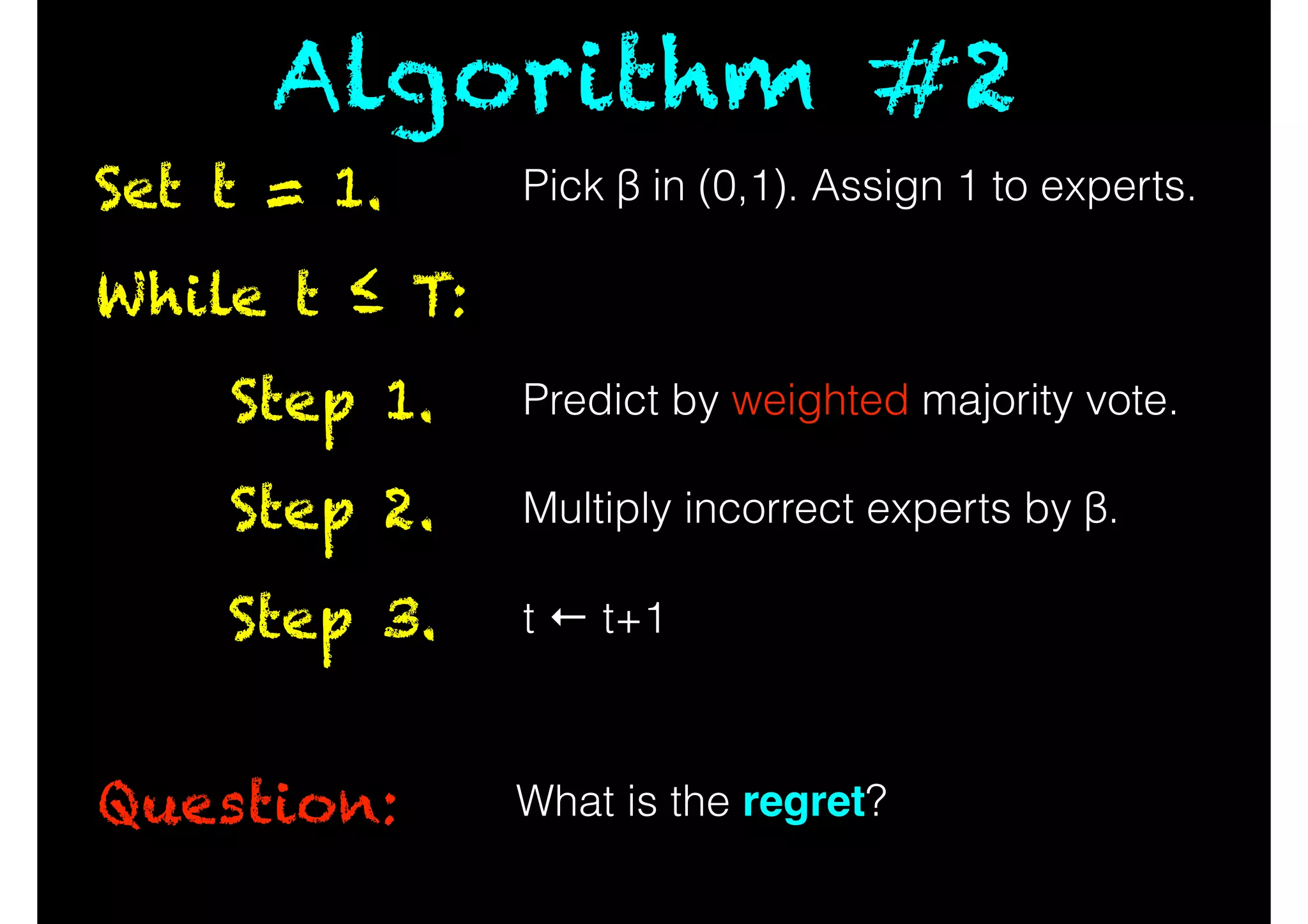

![Algorithm #2

Pick β in (0,1). Assign 1 to experts.

Set t = 1.

While t ≤ T:

Step 1. Predict by weighted majority vote.

Step 2. Multiply incorrect experts by β.

Step 3. t ← t+1

What is the regret? [ choose β carefully ]

$\sqrt{\frac{T \cdot \log N}{2}}$](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-36-2048.jpg)
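
The steps of Algorithm #2 can be sketched in a few lines of Python. This is a minimal illustration of the deterministic weighted majority vote, assuming binary 0/1 predictions; the function name `weighted_majority`, the tie-breaking rule, and the toy data are assumptions made for the example, not taken from the slides.

```python
def weighted_majority(expert_predictions, outcomes, beta=0.5):
    """Weighted majority over binary (0/1) predictions.

    expert_predictions[t][i] is expert i's prediction in round t.
    Returns (number of forecaster mistakes, final expert weights).
    """
    n_experts = len(expert_predictions[0])
    weights = [1.0] * n_experts  # assign 1 to every expert
    mistakes = 0
    for preds, y in zip(expert_predictions, outcomes):
        # Step 1: predict by weighted majority vote (ties go to 1, an assumption).
        vote_one = sum(w for w, p in zip(weights, preds) if p == 1)
        vote_zero = sum(w for w, p in zip(weights, preds) if p == 0)
        guess = 1 if vote_one >= vote_zero else 0
        if guess != y:
            mistakes += 1
        # Step 2: multiply the weight of every incorrect expert by beta.
        weights = [w * beta if p != y else w for w, p in zip(weights, preds)]
        # Step 3 (t <- t+1) is implicit in the loop.
    return mistakes, weights


# Hypothetical toy data: 3 experts, 4 rounds; expert 0 is always right.
preds = [[1, 0, 0], [0, 1, 1], [1, 1, 0], [0, 0, 1]]
truth = [1, 0, 1, 0]
mistakes, weights = weighted_majority(preds, truth, beta=0.5)
print(mistakes, weights)
```

On this toy input the forecaster errs only in the first two rounds, after which the weight of the reliable expert dominates the vote.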

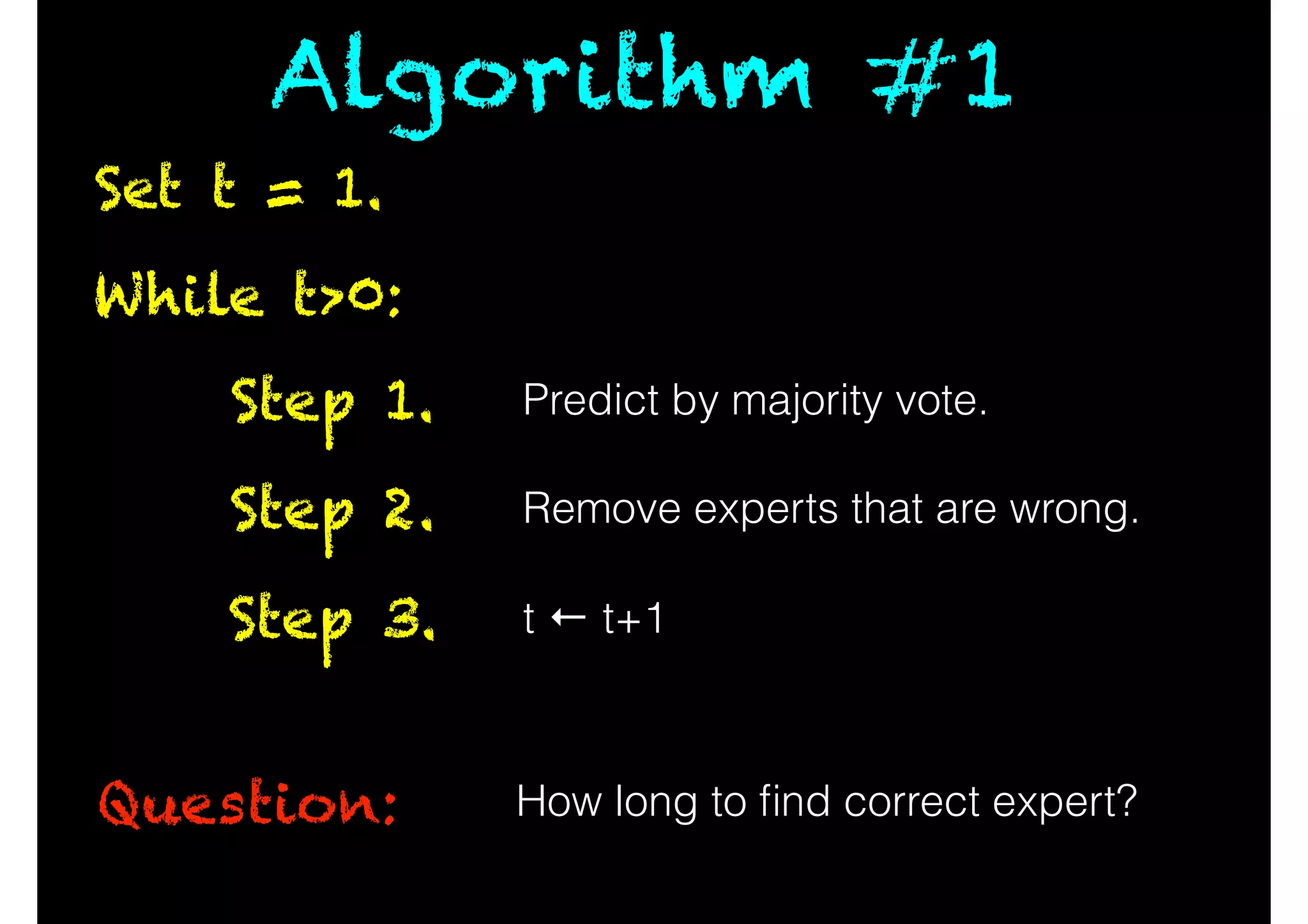

![Online Convex Opt.

Scenario: Convex set K; convex loss L(a,b)

[ in both arguments, separately ]

At time t,

Forecaster picks $a_t$ in K

Nature responds with $b_t$ in K

[ Nature is adversarial ]

Forecaster’s loss is L(a,b)

Goal: Minimize regret.](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-38-2048.jpg)
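
The slide names regret as the goal without spelling it out. Under the standard definition, regret is the forecaster's cumulative loss minus the cumulative loss of the best single action chosen in hindsight. The sketch below computes that quantity; the helper name `regret`, the absolute-value loss, and the finite grid standing in for the convex set K are assumptions for illustration.

```python
def regret(plays, nature_moves, loss, candidates):
    """Cumulative loss of the forecaster minus that of the best fixed action.

    `candidates` is a finite stand-in for the convex set K (an assumption).
    """
    incurred = sum(loss(a, b) for a, b in zip(plays, nature_moves))
    best_fixed = min(
        sum(loss(a, b) for b in nature_moves) for a in candidates
    )
    return incurred - best_fixed


def abs_loss(a, b):
    # A loss that is convex in each argument separately.
    return abs(a - b)


grid = [i / 10 for i in range(-10, 11)]  # K approximated by [-1, 1] in steps of 0.1
r = regret([0.0, 0.0, 1.0], [1.0, 0.0, 0.0], abs_loss, grid)
print(r)
```

Here the forecaster pays a total of 2, while always playing 0 would have paid 1, so the regret is 1.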

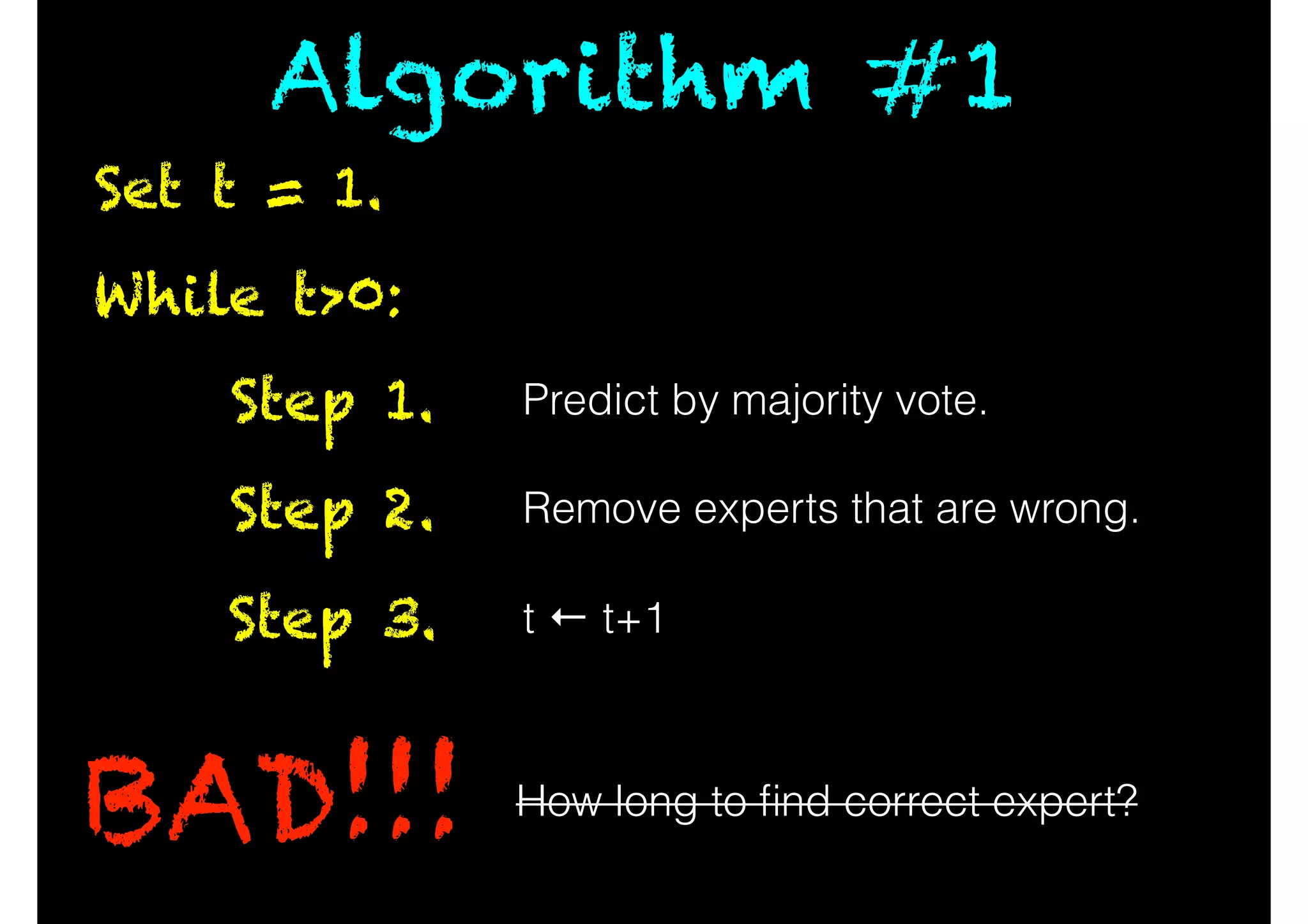

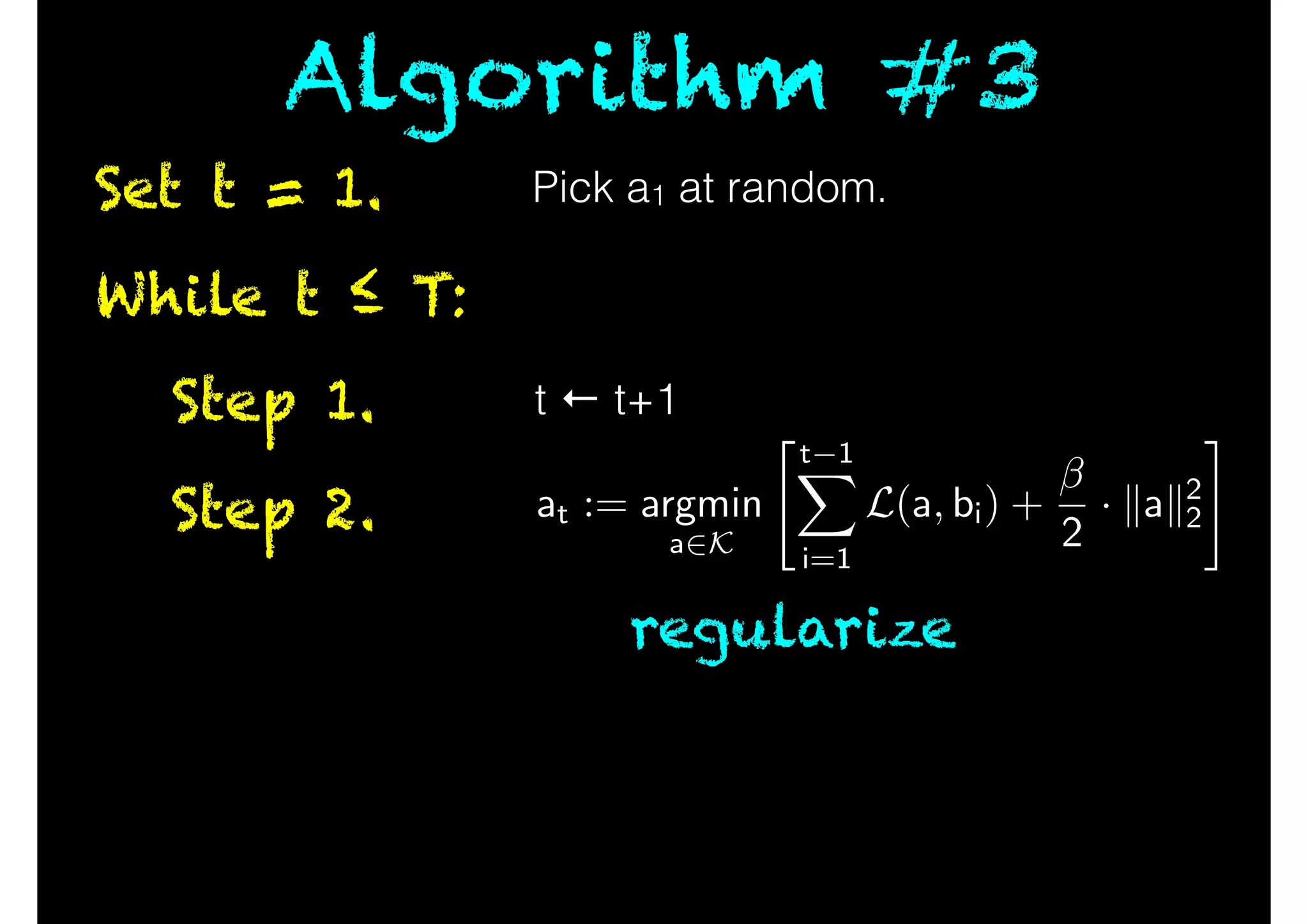

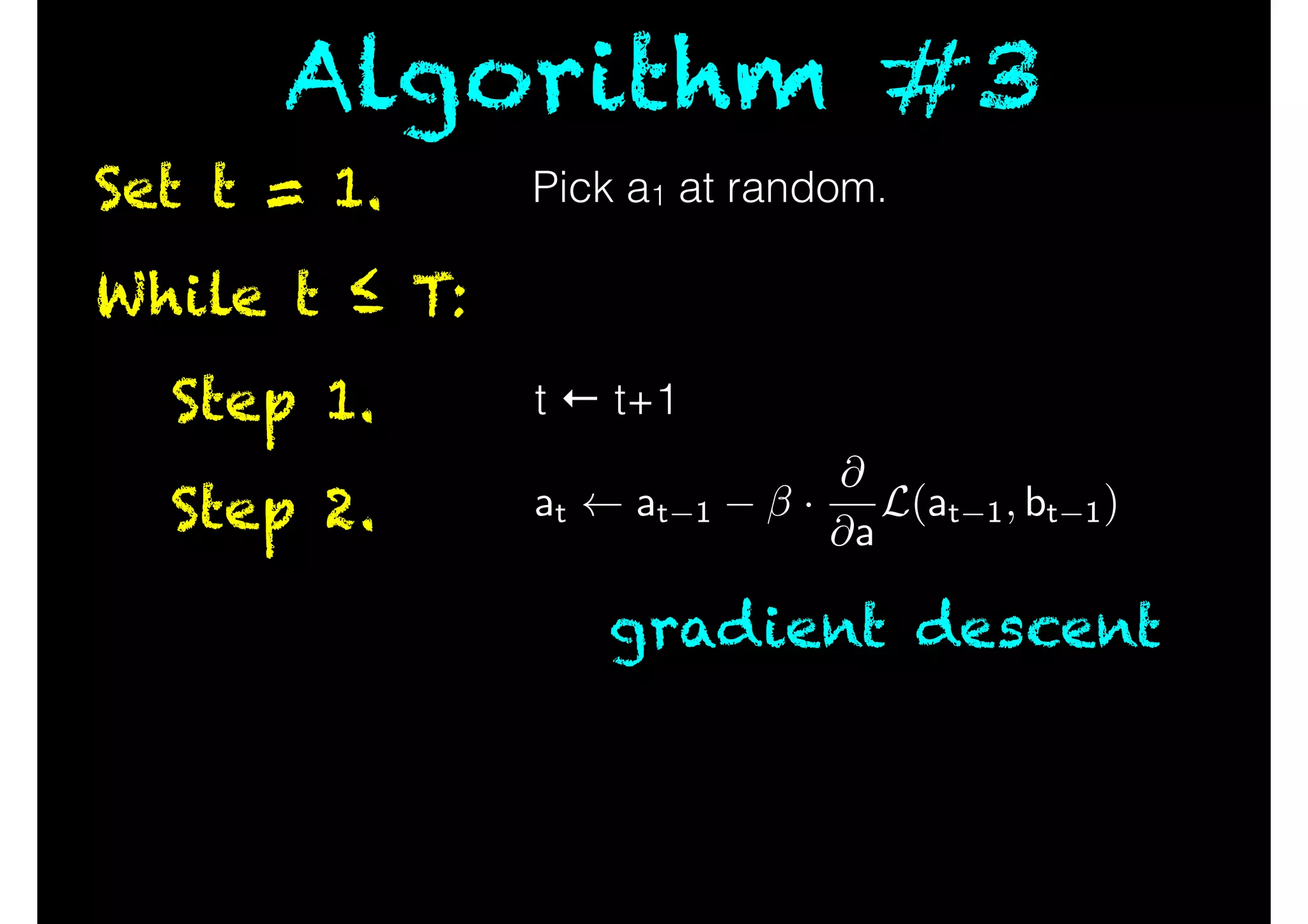

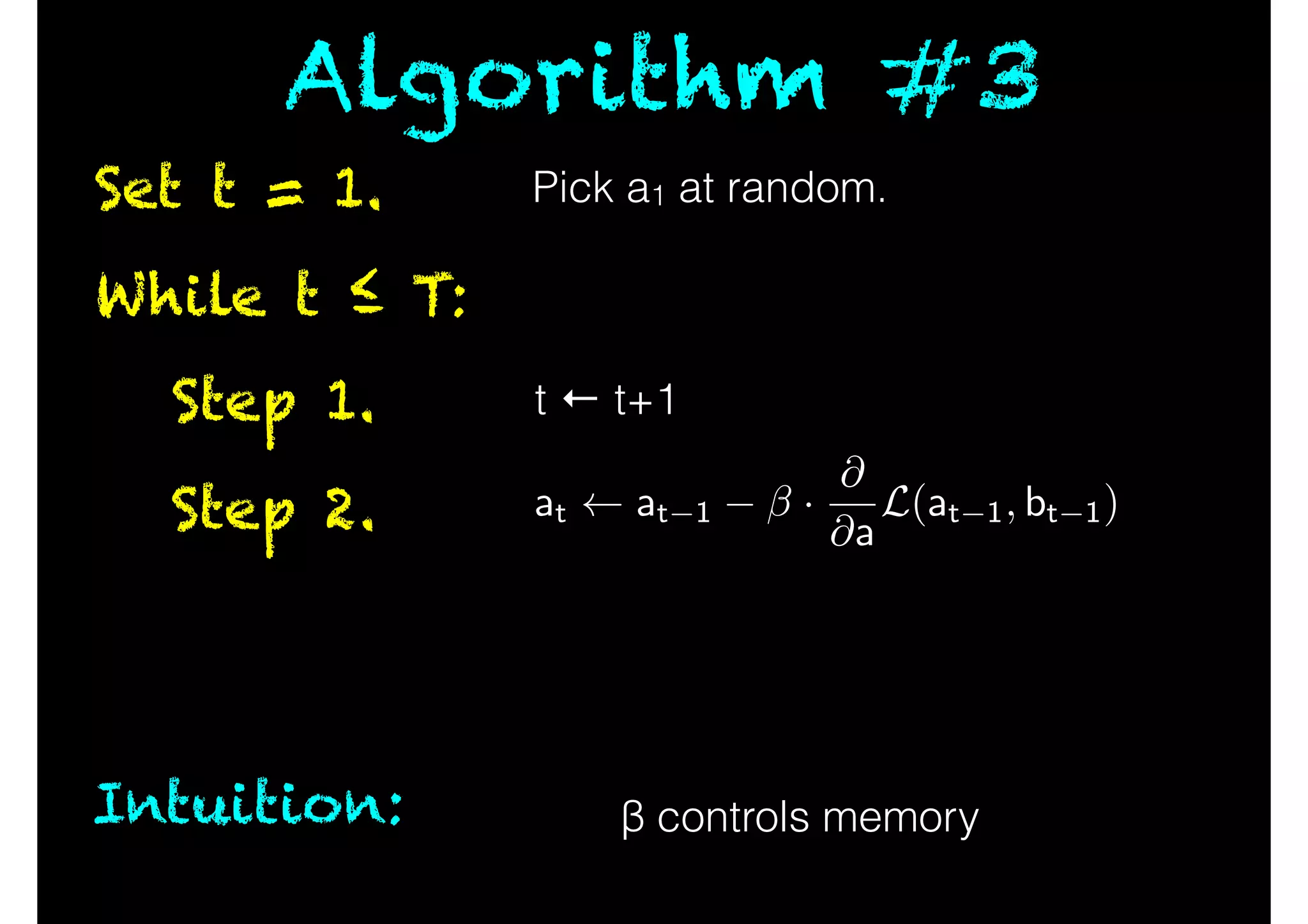

![Algorithm #3

Pick $a_1$ at random.

Set t = 1.

While t ≤ T:

Step 1. $a_t \leftarrow a_{t-1} - \beta \cdot \frac{\partial}{\partial a} L(a_{t-1}, b_{t-1})$

Step 2. t ← t+1

What is the regret? [ choose β carefully ]

$\mathrm{diam}(K) \cdot \mathrm{Lipschitz}(L) \cdot \sqrt{T}$](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-45-2048.jpg)
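
Algorithm #3 reads as online gradient descent: after each round, move the current action a small step against the gradient of the loss just incurred. The sketch below is one possible instance under stated assumptions: K is the interval [-1, 1], the loss is the squared error (a - b)^2, the step size plays the role of β, and the iterate is clipped back into K after each step. The clipping and the fixed starting point are additions for the example; the slide picks the first action at random and does not show a projection.

```python
def online_gradient_descent(nature_moves, a1=0.0, beta=0.1, lo=-1.0, hi=1.0):
    """Online gradient descent on K = [lo, hi] with squared loss (a - b)^2.

    Returns the list of actions played and the total loss incurred.
    """
    a = a1
    plays, total_loss = [], 0.0
    for b in nature_moves:
        plays.append(a)                 # forecaster commits to a_t
        total_loss += (a - b) ** 2      # nature reveals b_t, loss is incurred
        grad = 2.0 * (a - b)            # derivative of the loss with respect to a
        a = a - beta * grad             # gradient step with step size beta
        a = min(hi, max(lo, a))         # clip back into K (assumed projection)
    return plays, total_loss


# Hypothetical run: nature always answers 1.0, forecaster starts at 0.0.
plays, total_loss = online_gradient_descent([1.0, 1.0, 1.0], a1=0.0, beta=0.25)
print(plays, total_loss)
```

With a constant opponent the actions halve their distance to 1.0 each round, so the per-round loss shrinks quickly.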

![Deep thought #3

Those who cannot remember [their] past are condemned to repeat it

George Santayana](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-46-2048.jpg)

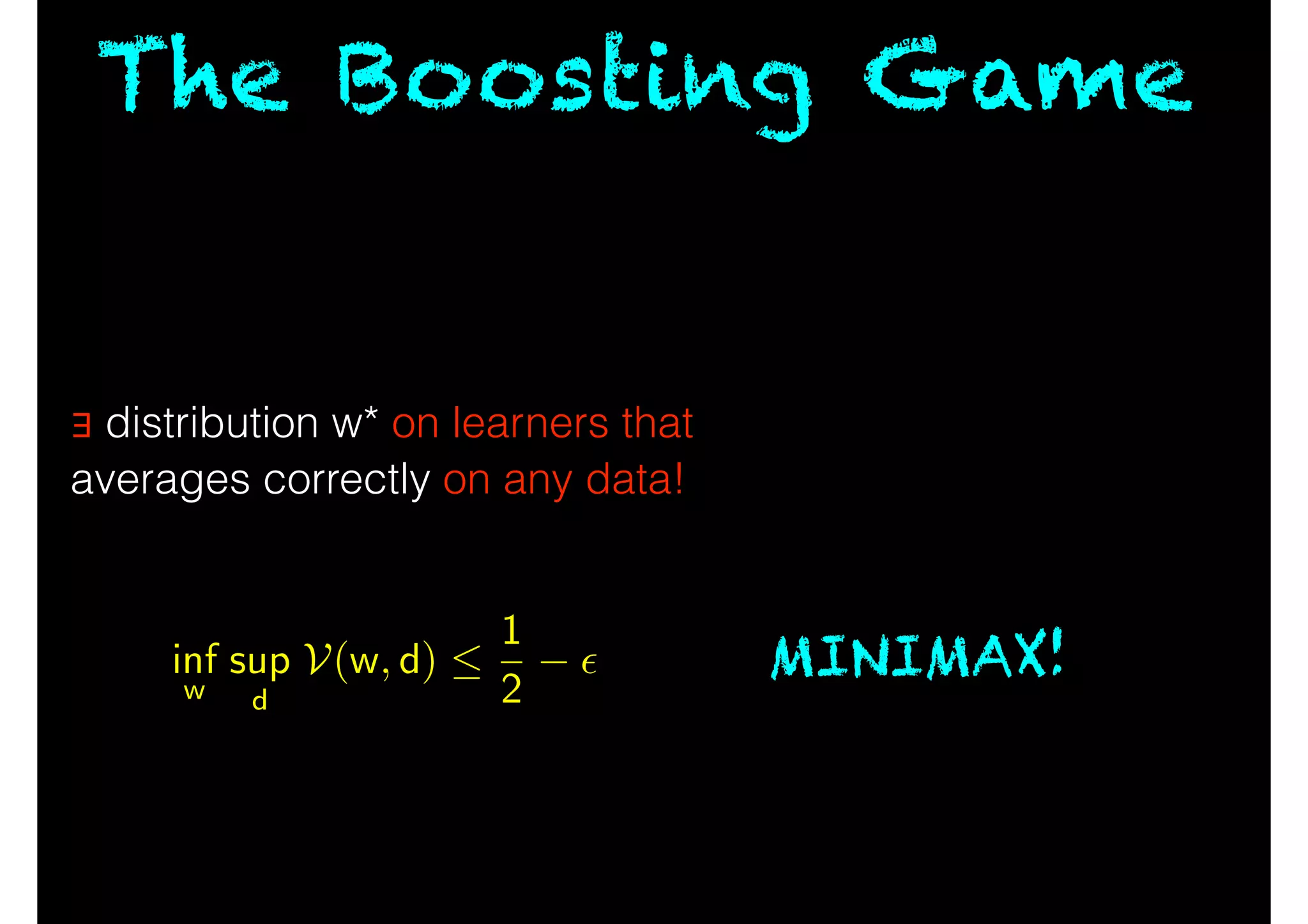

![Meta-Algorithm #4

Play Algorithm #2 against Algorithm W

[ #2 maximizes W’s mistakes ]](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-59-2048.jpg)

![Meta-Algorithm #4

Play Algorithm #2 against Algorithm W

[ #2 maximizes W’s mistakes ]

$\inf_w \sup_d V(w, d) \le \tfrac{1}{2} - \epsilon$

Algorithm #2

Algorithm W](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-60-2048.jpg)

![Meta-Algorithm #4

• Freund and Schapire 1995

• Best learning algorithm in late 1990s and early 2000s

• Authors won Gödel prize

Play Algorithm #2 against Algorithm W

[ #2 maximizes W’s mistakes ]

$\inf_w \sup_d V(w, d) \le \tfrac{1}{2} - \epsilon$

Algorithm #2

Algorithm W](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-61-2048.jpg)
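
The attribution to Freund and Schapire suggests that Meta-Algorithm #4 is boosting: a multiplicative-weights player keeps a distribution over training examples and shifts weight toward the examples the weak learner W gets wrong. The sketch below uses the textbook AdaBoost update rather than the exact formulation on the slide. The threshold-stump weak learner `best_stump`, the ±1 label convention, and the toy data are all assumptions for illustration.

```python
import math


def stump_predict(x, thr, sign):
    # A threshold stump: predicts `sign` at or above the threshold, `-sign` below.
    return sign if x >= thr else -sign


def best_stump(xs, ys, dist):
    """Hypothetical weak learner W: the stump with the least weighted error."""
    best = None
    for thr in sorted(set(xs)):
        for sign in (1, -1):
            err = sum(
                d for d, x, y in zip(dist, xs, ys)
                if stump_predict(x, thr, sign) != y
            )
            if best is None or err < best[0]:
                best = (err, thr, sign)
    return best


def adaboost(xs, ys, rounds=3):
    n = len(xs)
    dist = [1.0 / n] * n  # uniform weights over examples
    ensemble = []
    for _ in range(rounds):
        err, thr, sign = best_stump(xs, ys, dist)
        err = min(max(err, 1e-12), 1 - 1e-12)  # guard against log(0)
        alpha = 0.5 * math.log((1 - err) / err)
        ensemble.append((alpha, thr, sign))
        # Multiplicative update: misclassified examples gain weight.
        dist = [
            d * math.exp(-alpha * y * stump_predict(x, thr, sign))
            for d, x, y in zip(dist, xs, ys)
        ]
        total = sum(dist)
        dist = [d / total for d in dist]
    return ensemble


def predict(ensemble, x):
    score = sum(a * stump_predict(x, thr, sign) for a, thr, sign in ensemble)
    return 1 if score >= 0 else -1


# Hypothetical 1-D data that no single stump classifies perfectly.
xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]
ensemble = adaboost(xs, ys, rounds=3)
print([predict(ensemble, x) for x in xs])
```

No single stump gets more than four of the six points right, yet the weighted vote of three stumps fits all of them, which is the effect the minimax statement on the slide points at.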

![“[A] theory of induction is superfluous. It has no function in a logic of science. The best we can say of a hypothesis is that up to now it has been able to show its worth, and that it has been more successful than other hypotheses although, in principle, it can never be justified, verified, or even shown to be probable. This appraisal of the hypothesis relies solely upon deductive consequences (predictions) which may be drawn from the hypothesis: There is no need to even mention induction.”](https://image.slidesharecdn.com/e99c6b9c-8e5f-4fc1-90d1-fdfb1fe283f3-150702055920-lva1-app6891/75/Inductive-Reasoning-and-one-of-the-Foundations-of-Machine-Learning-71-2048.jpg)