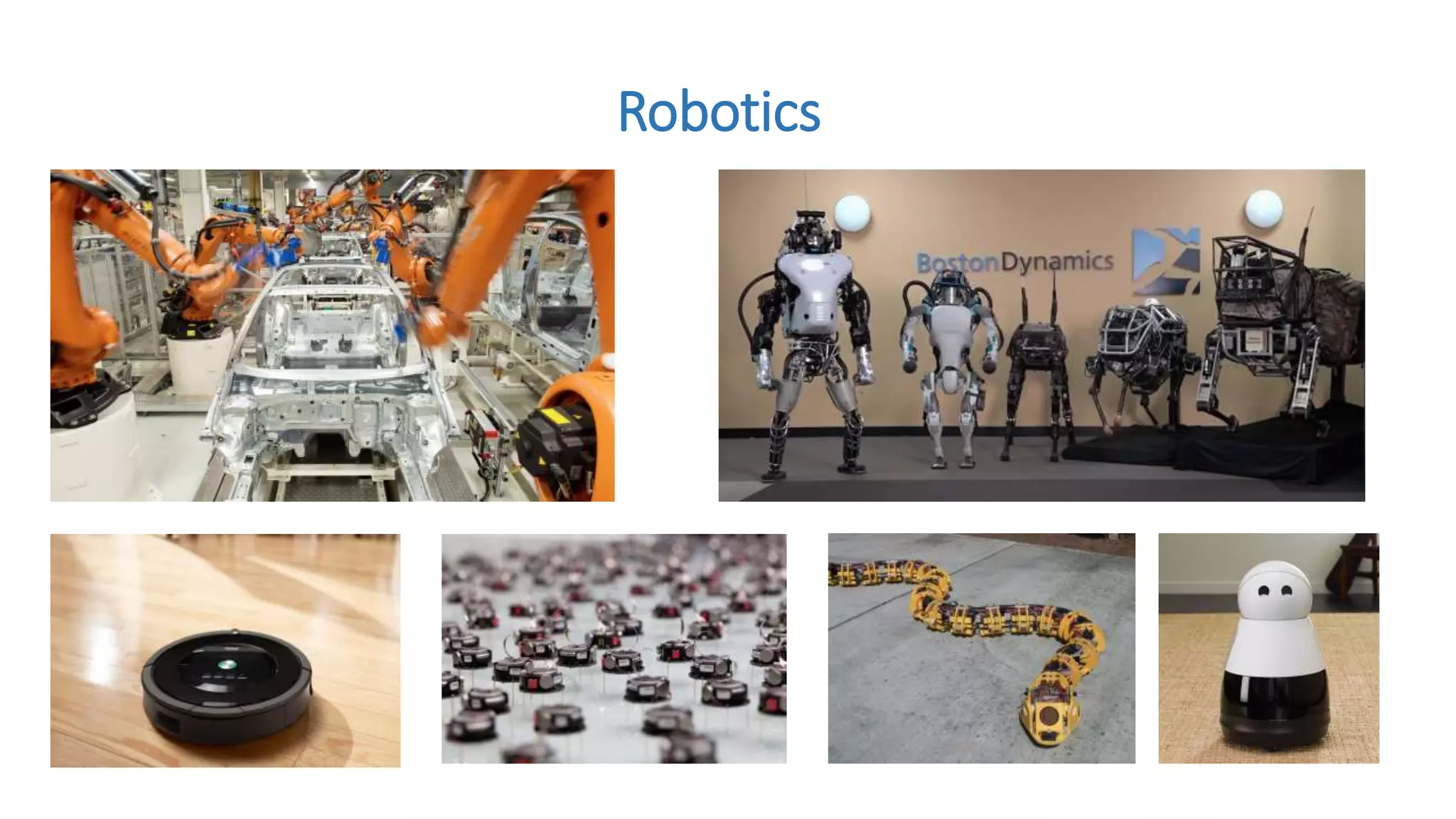

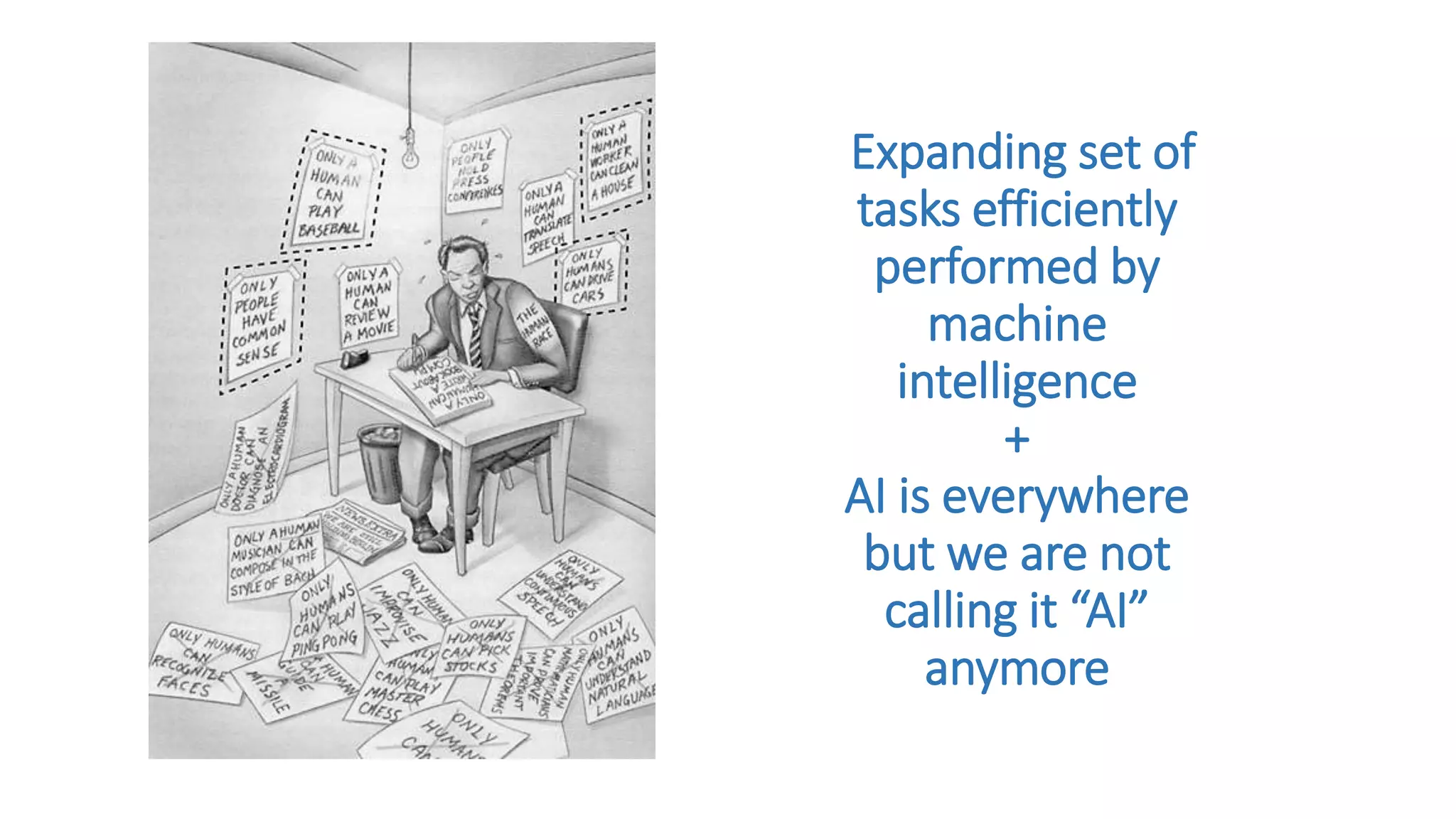

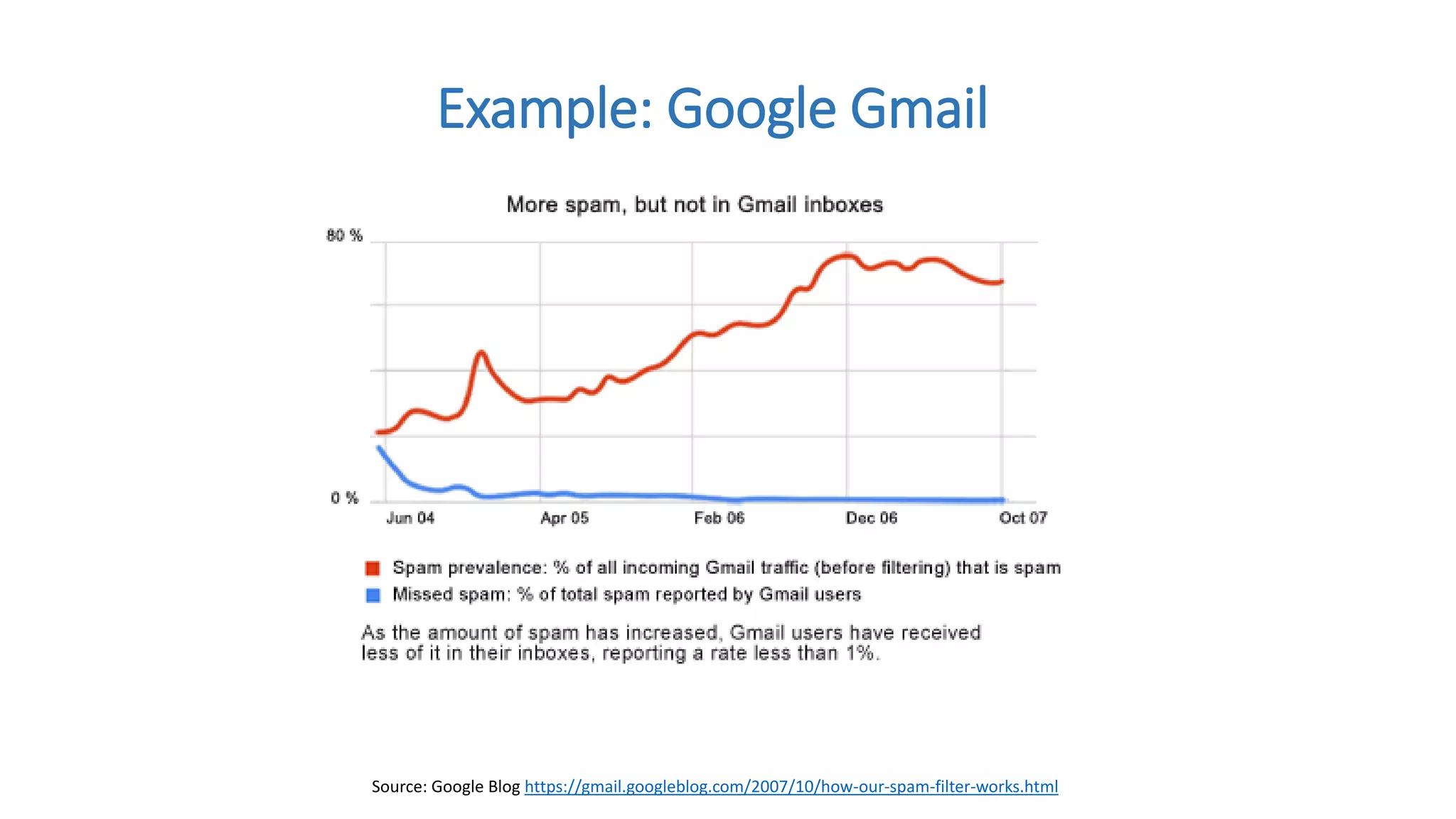

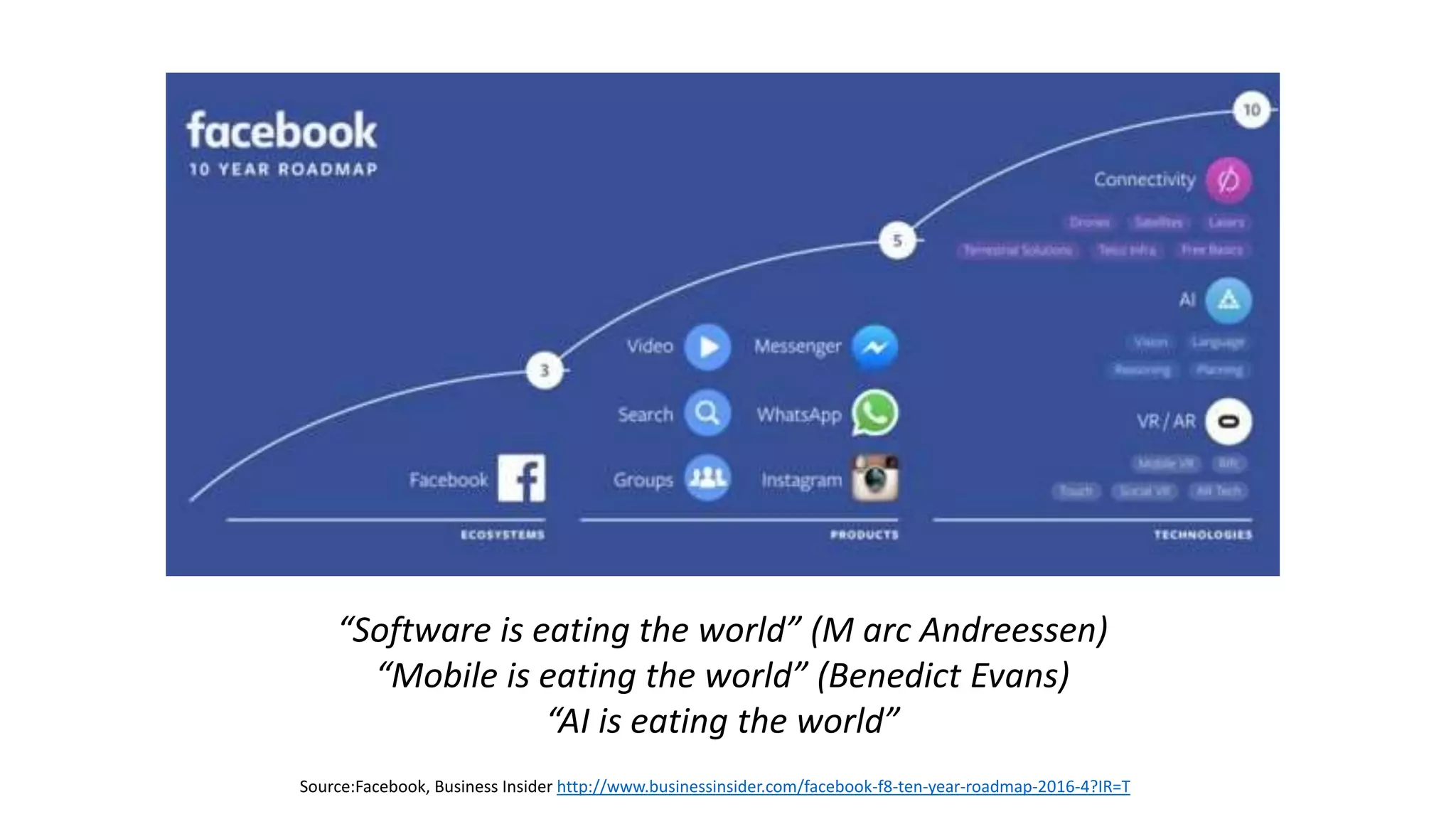

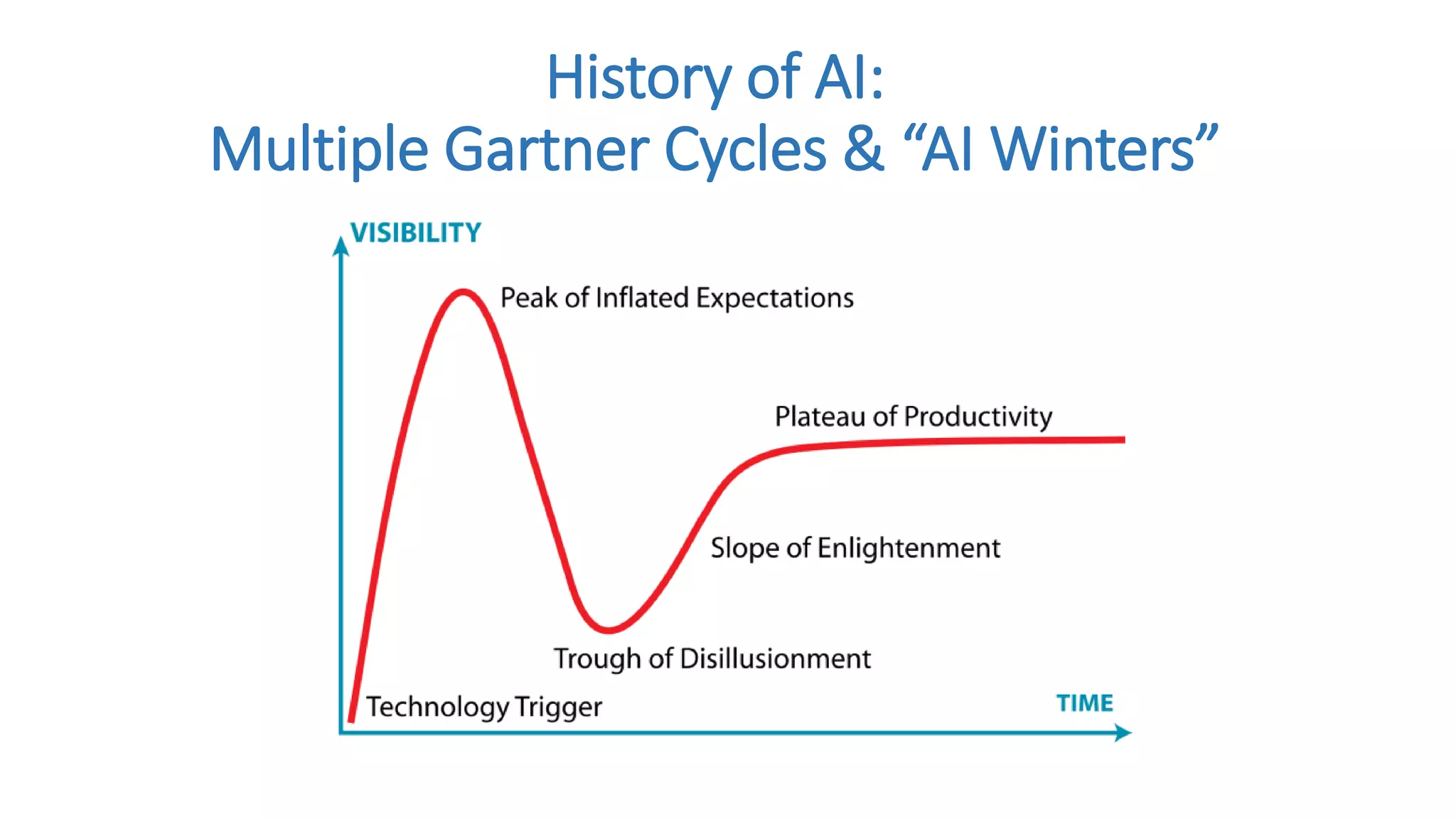

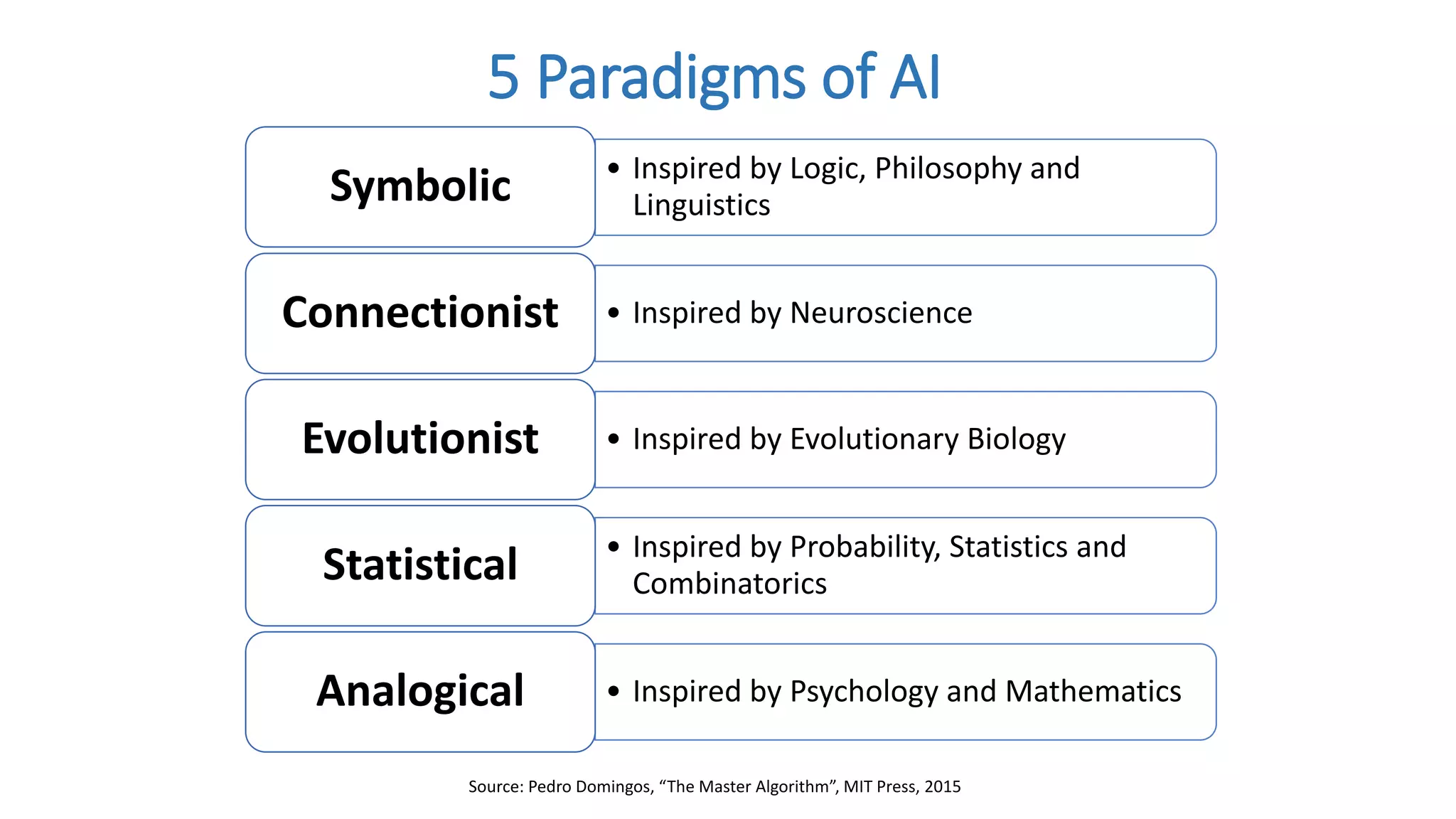

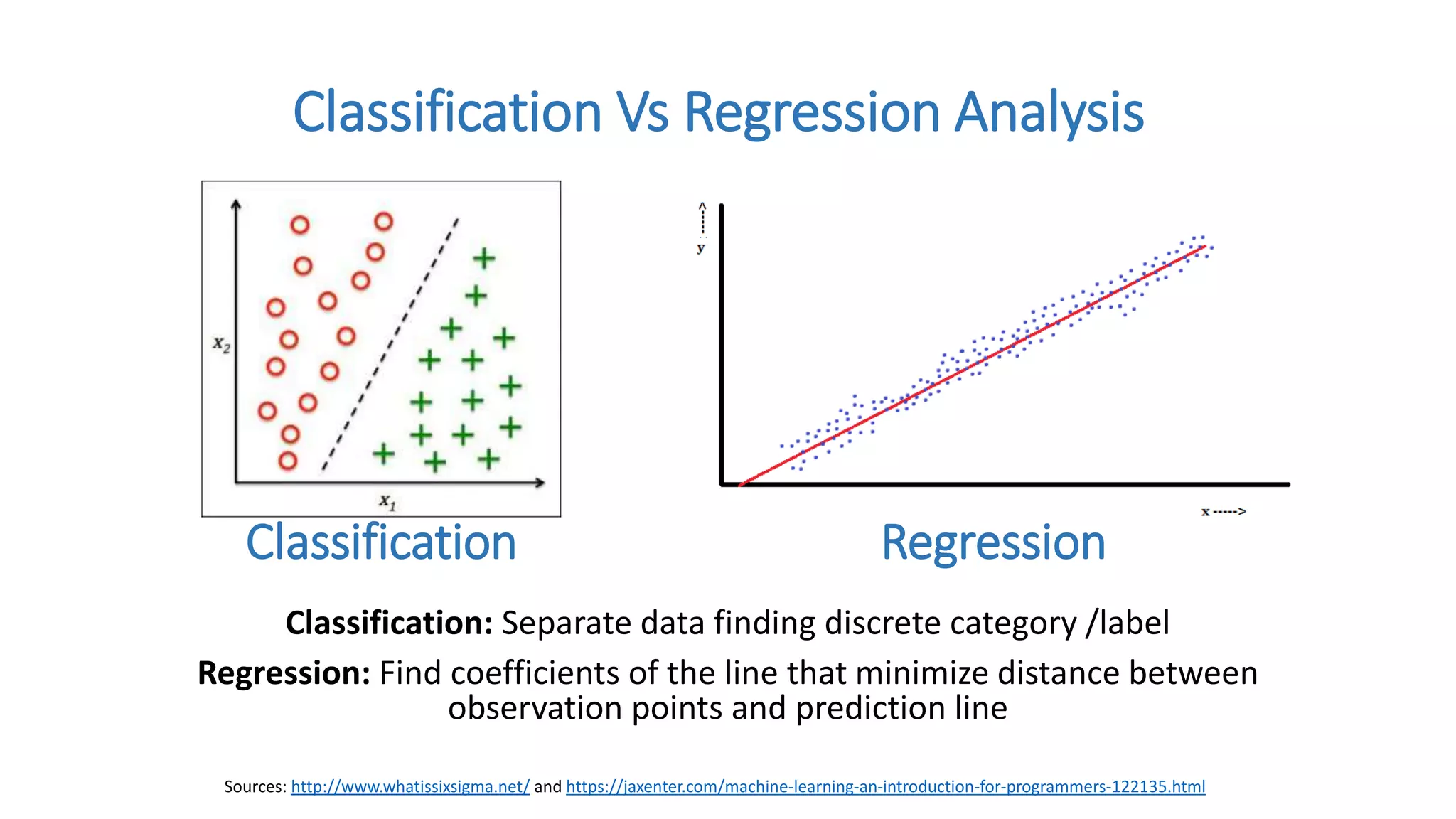

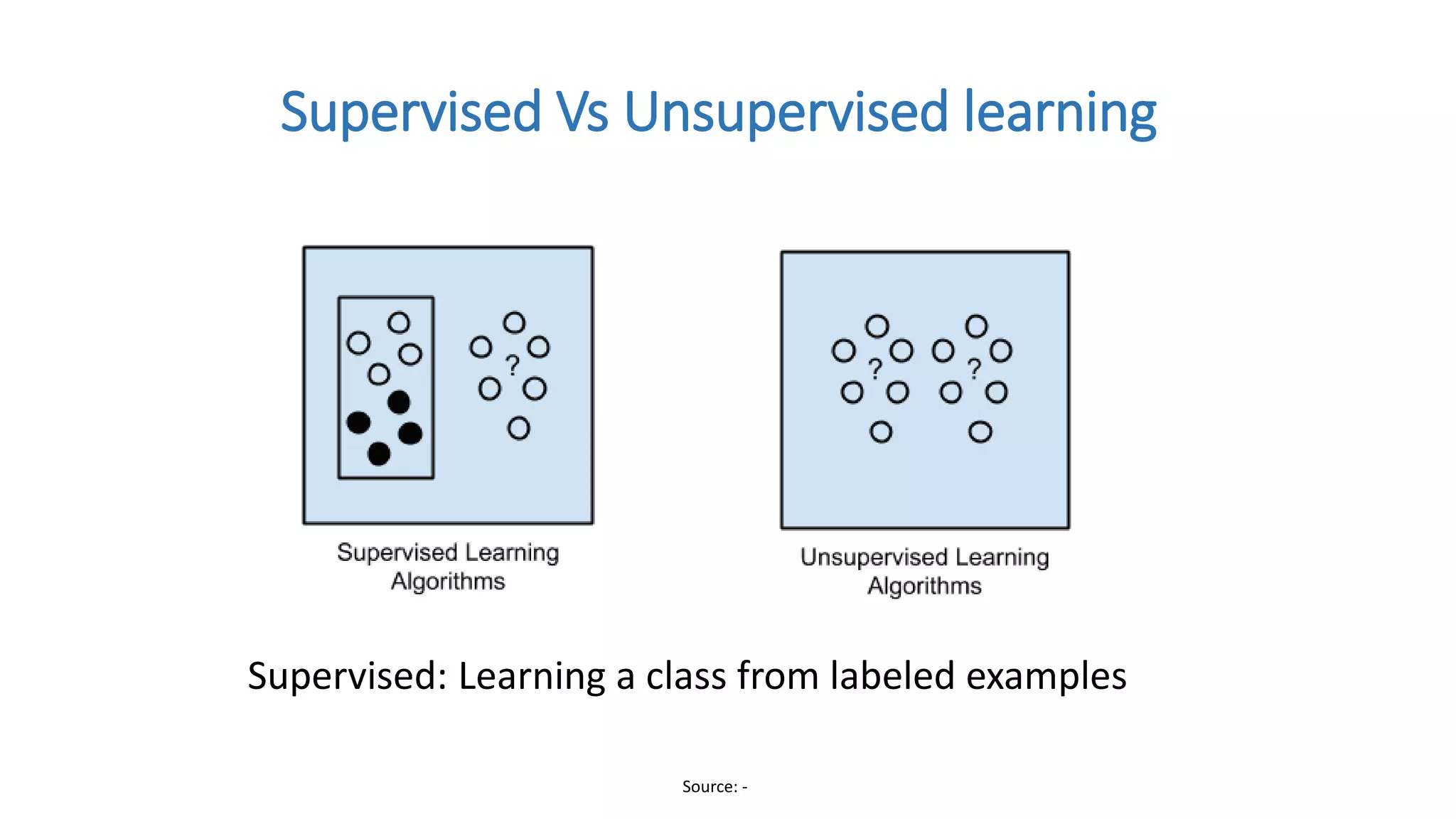

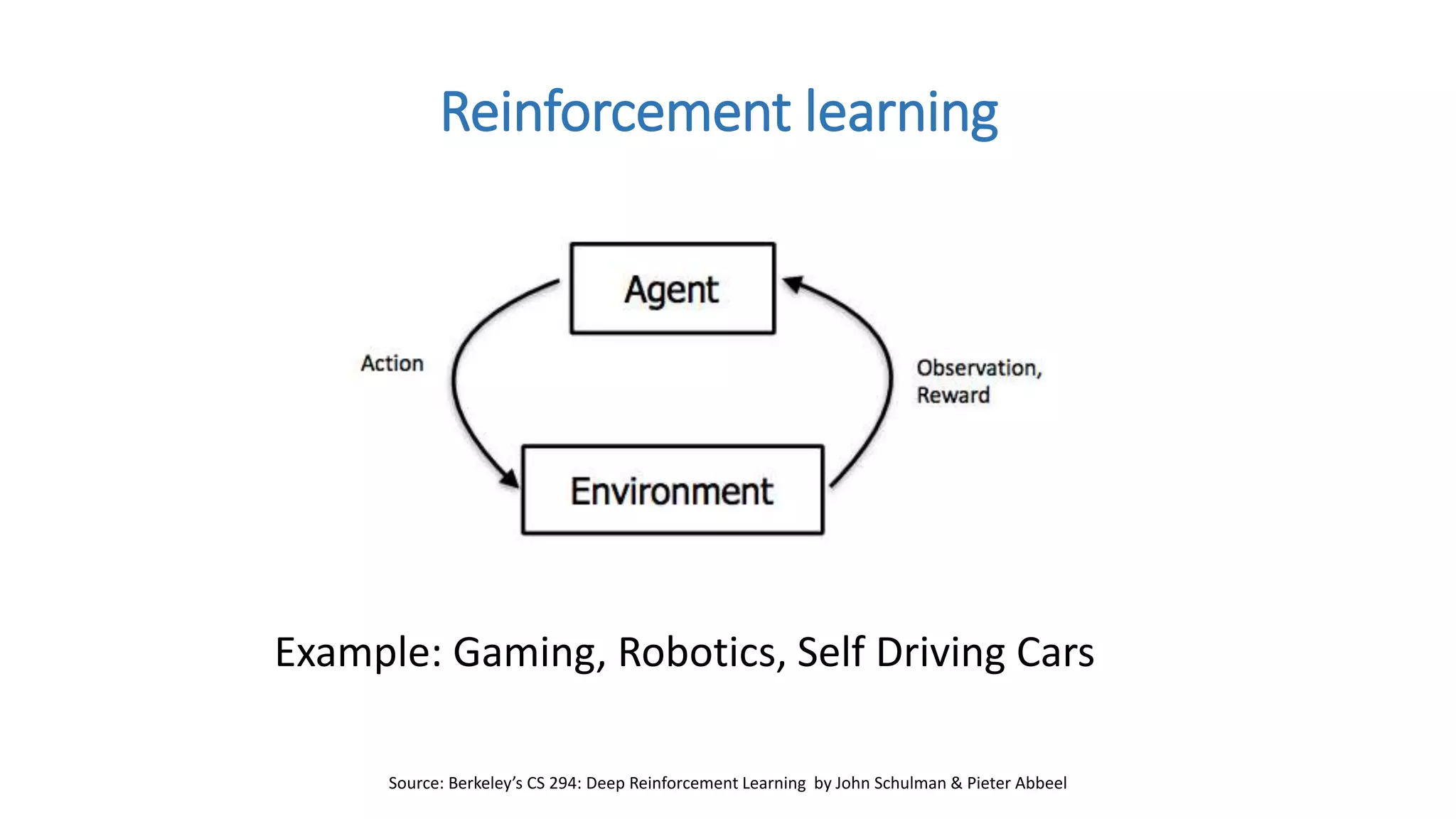

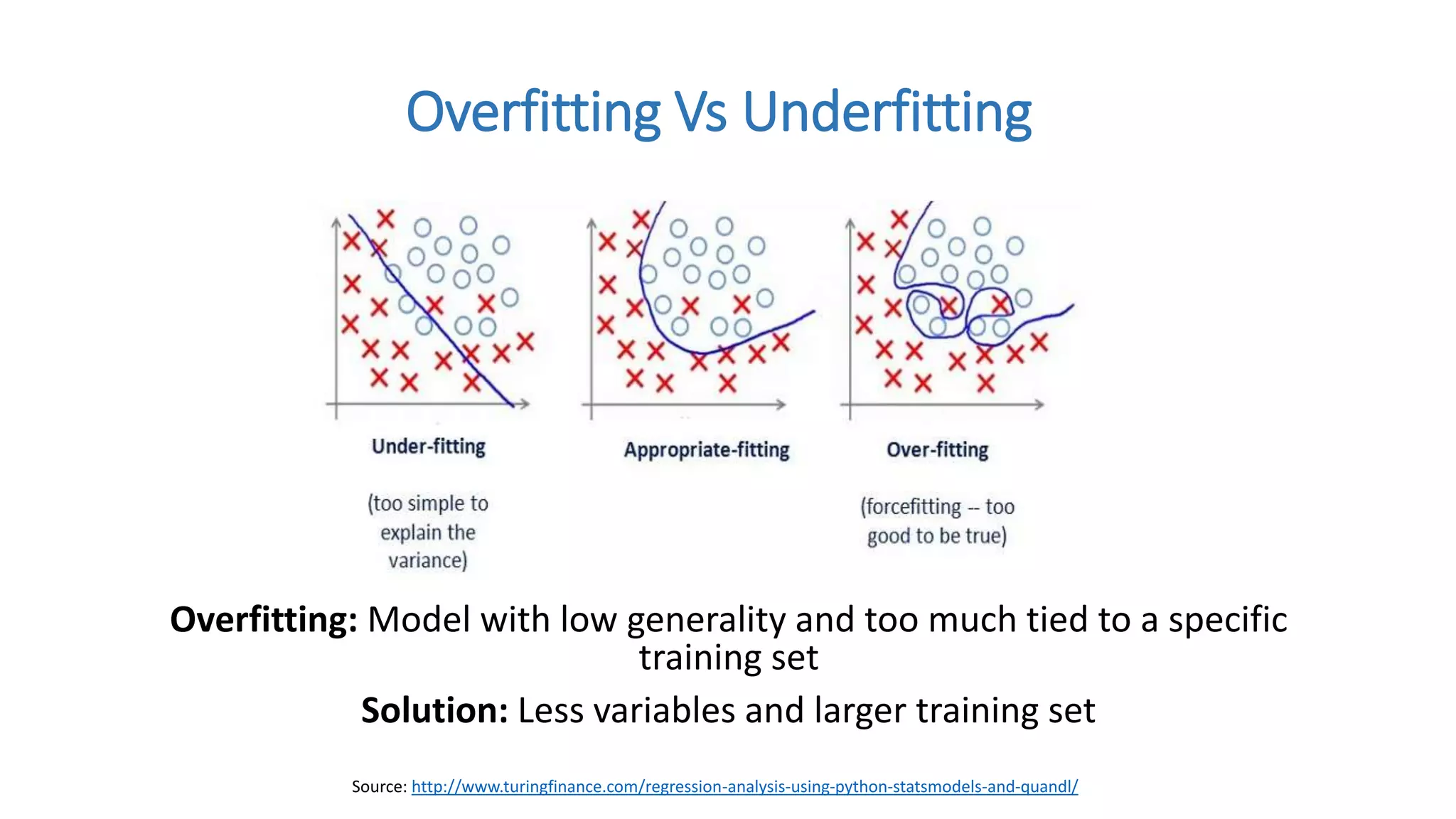

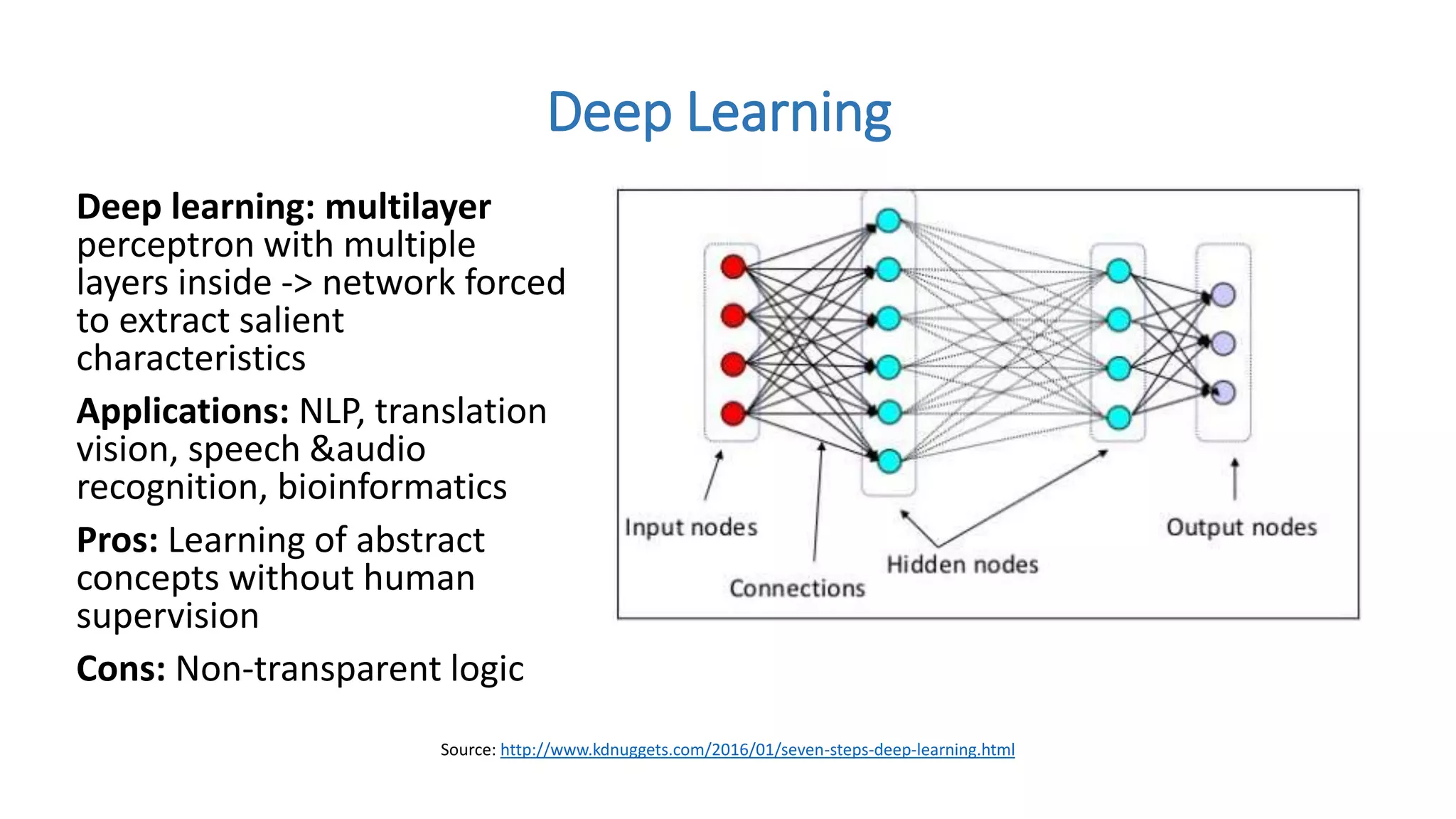

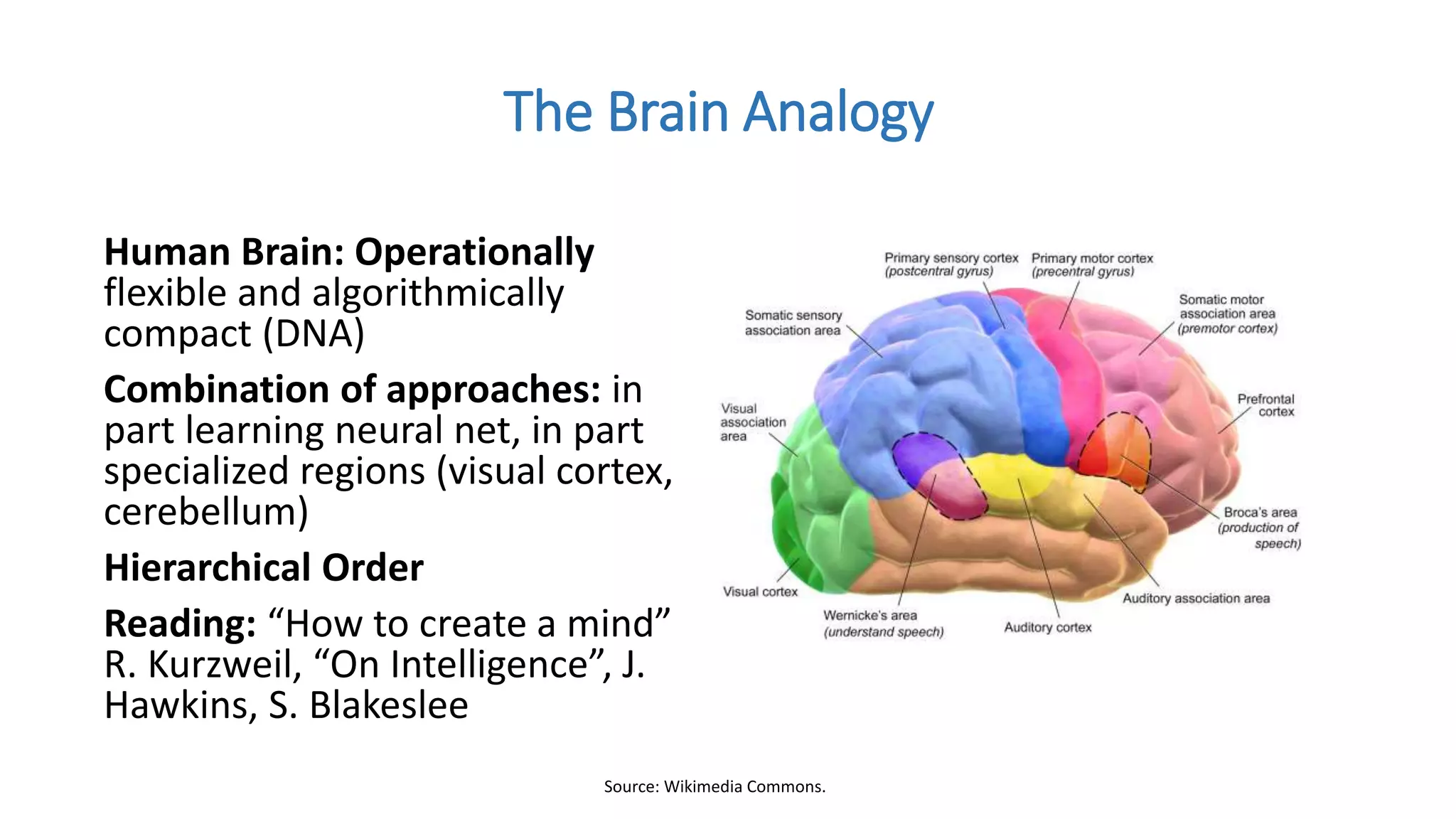

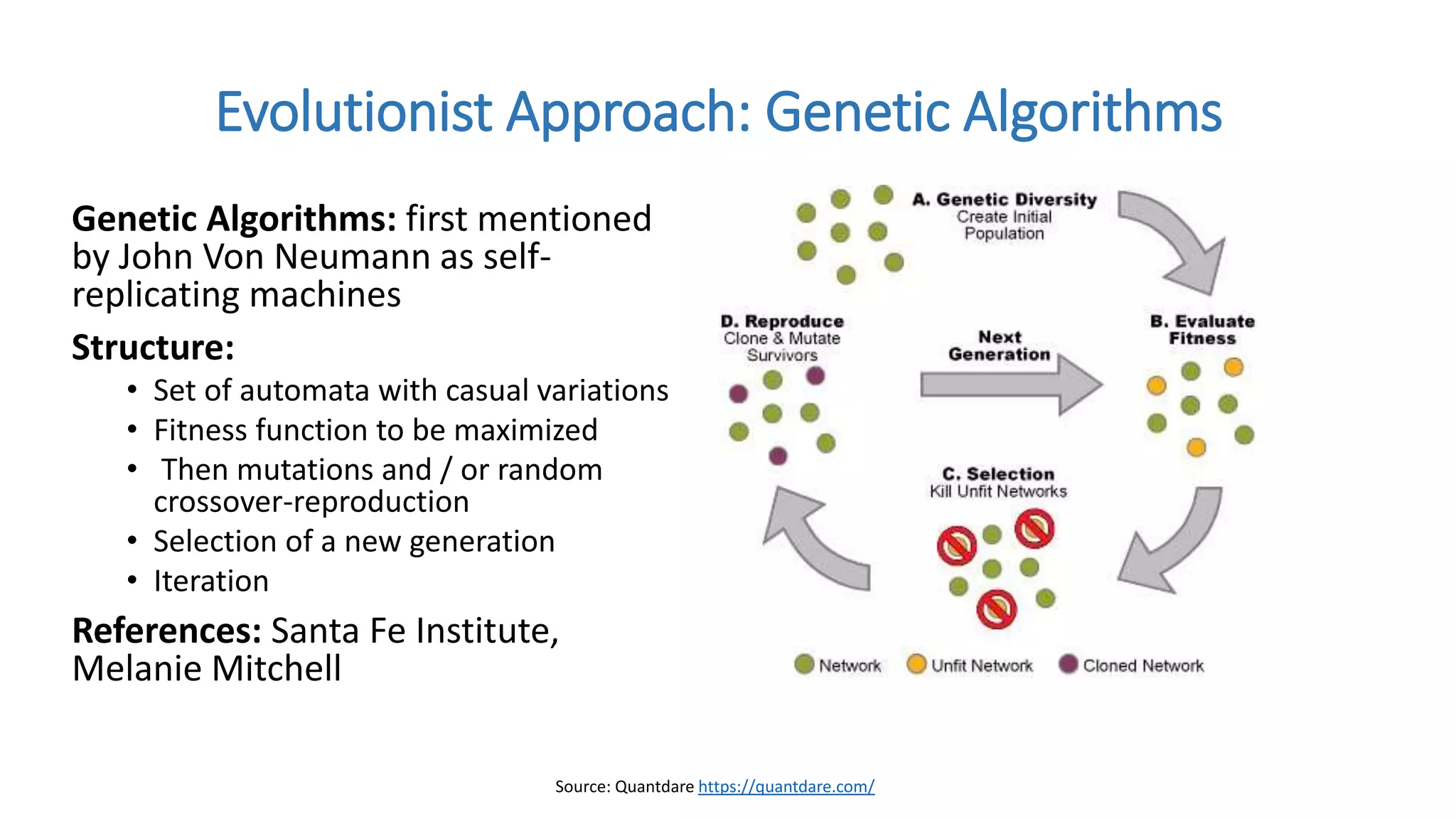

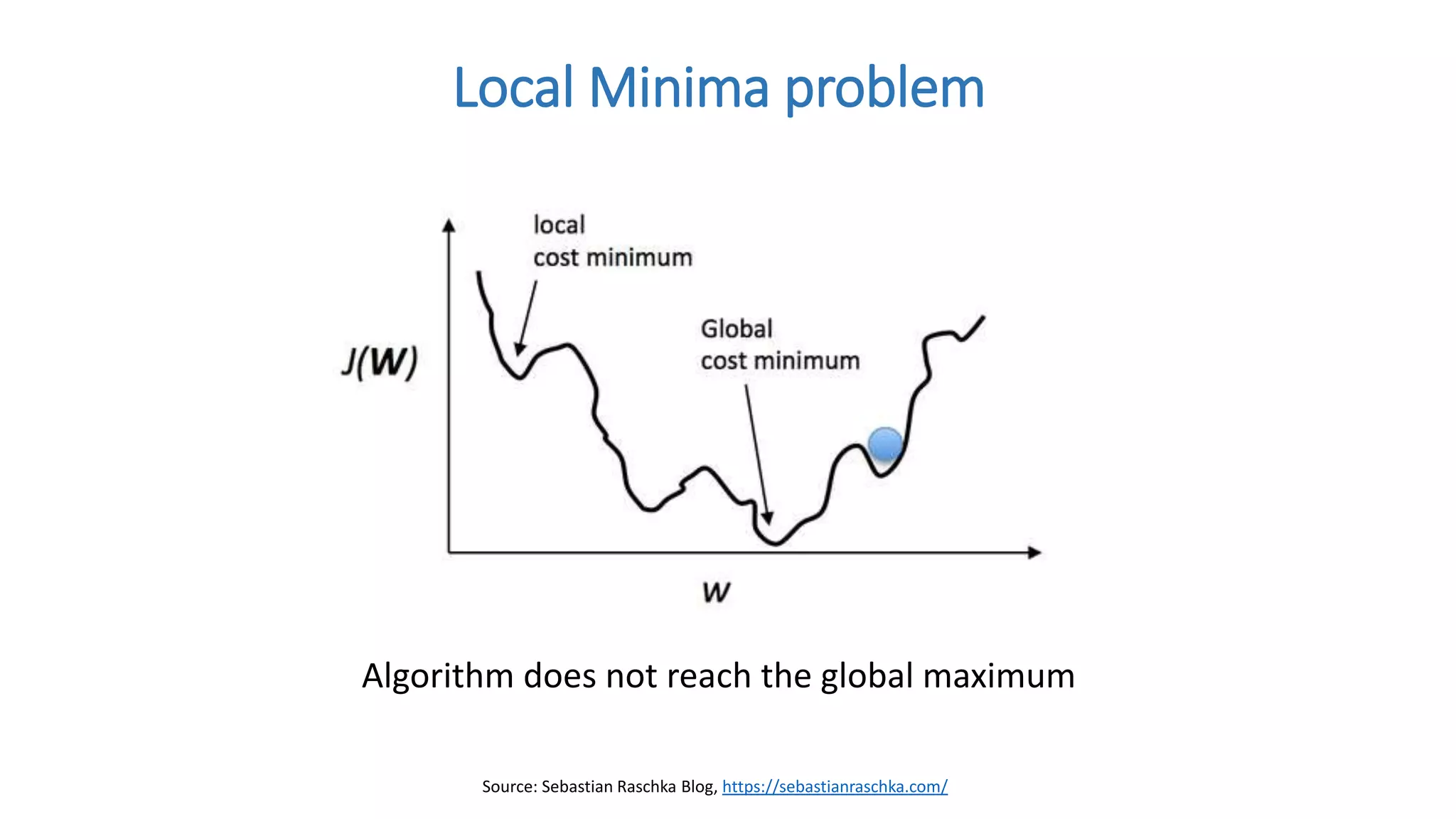

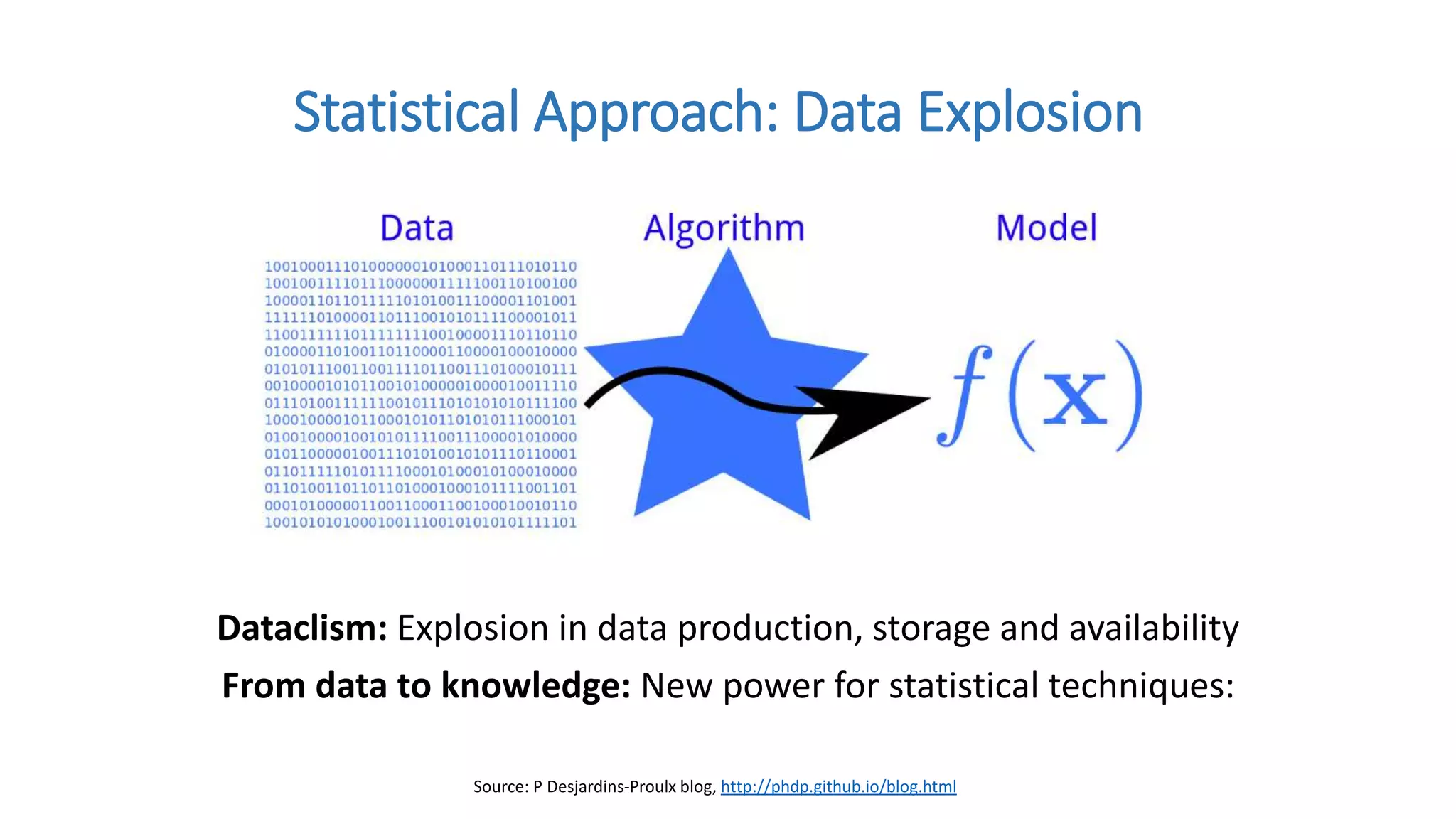

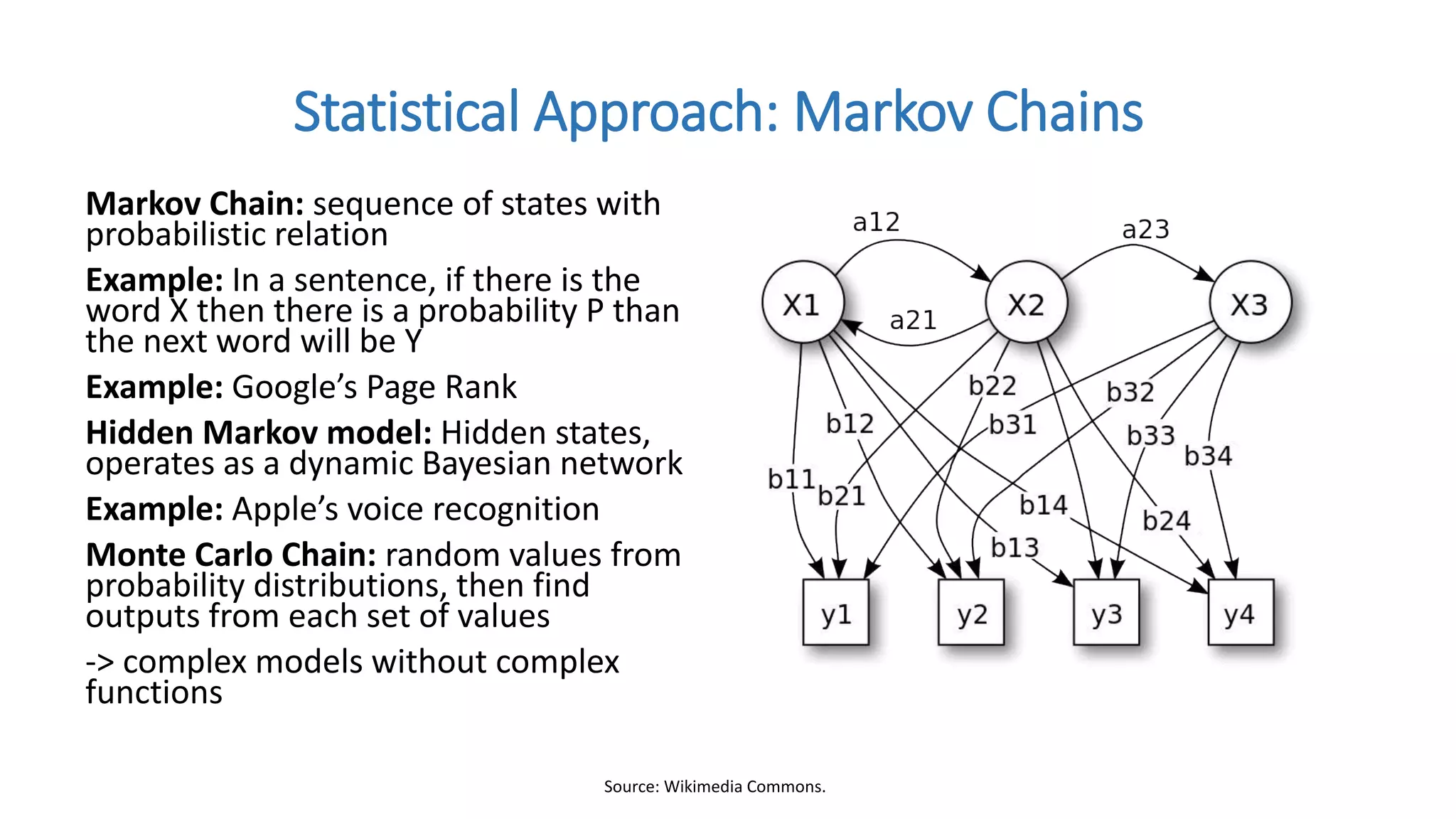

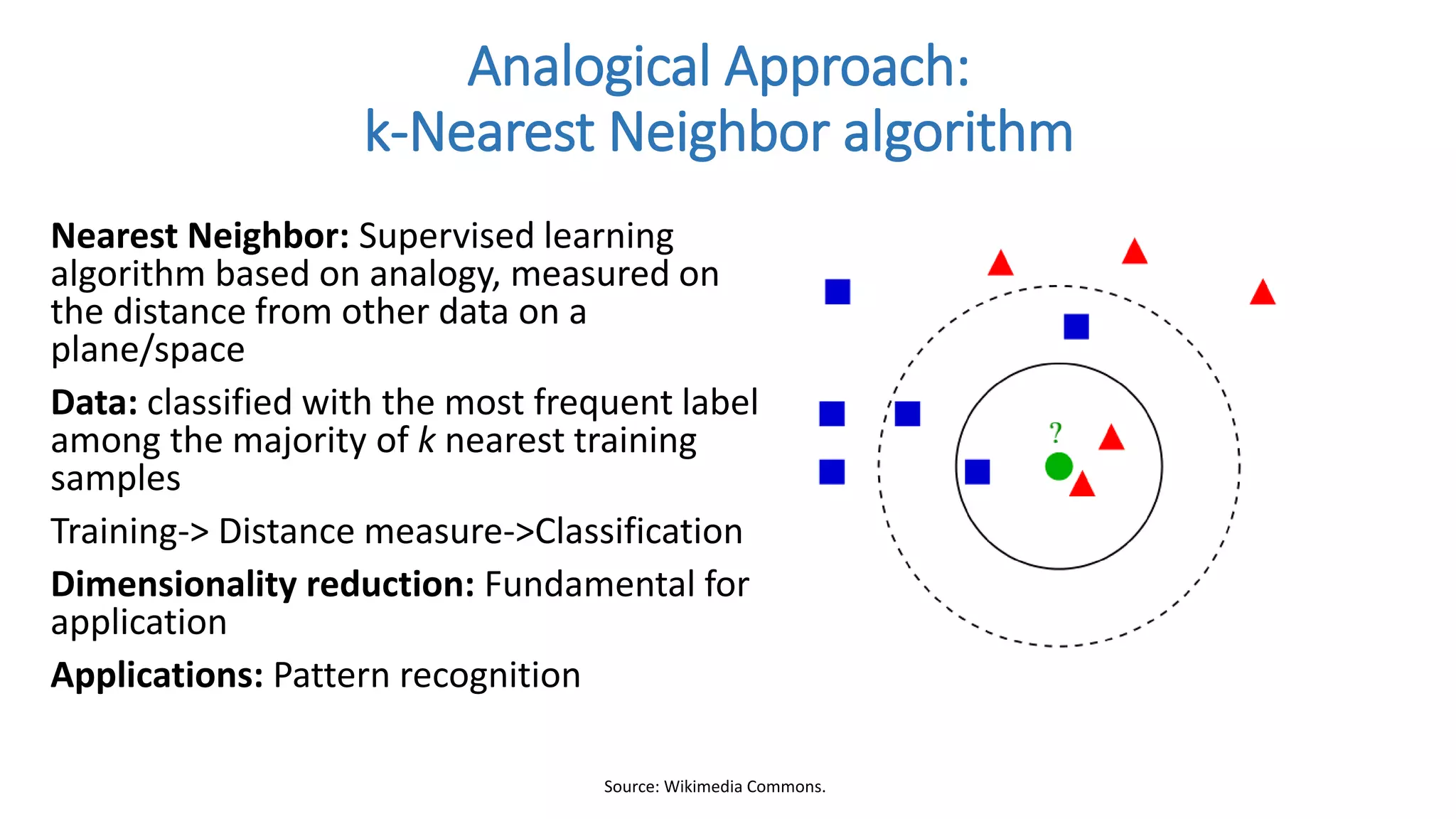

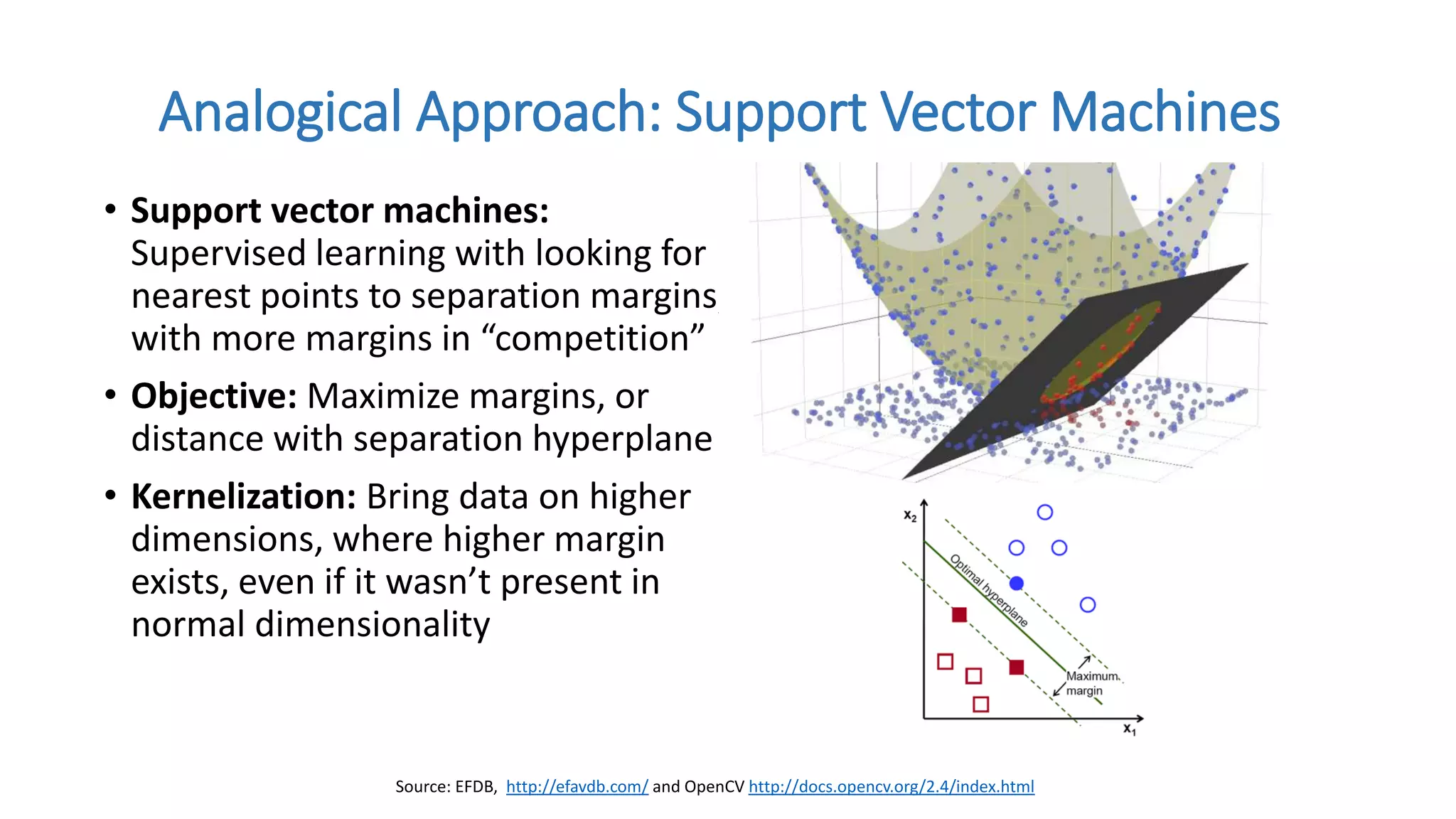

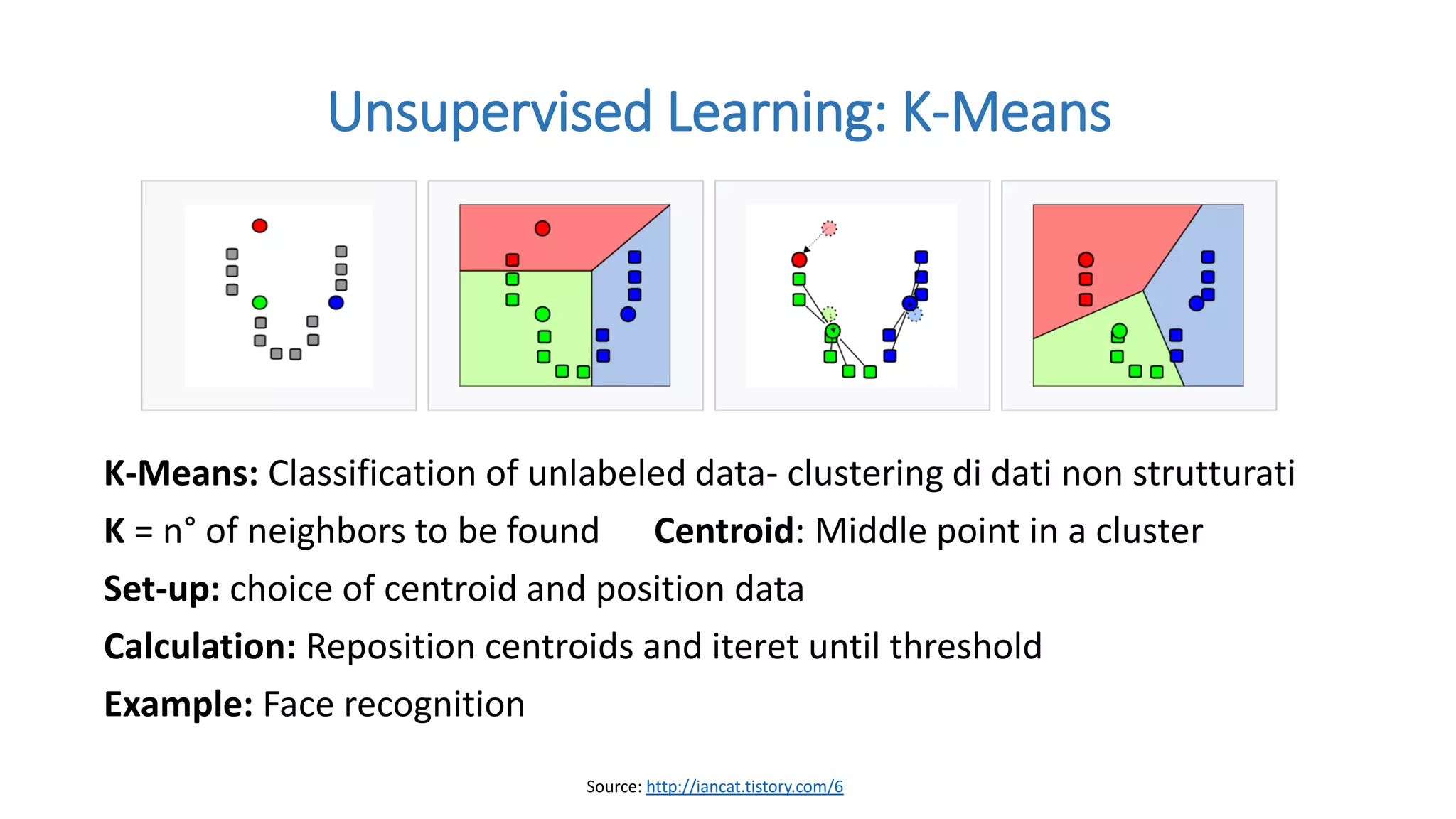

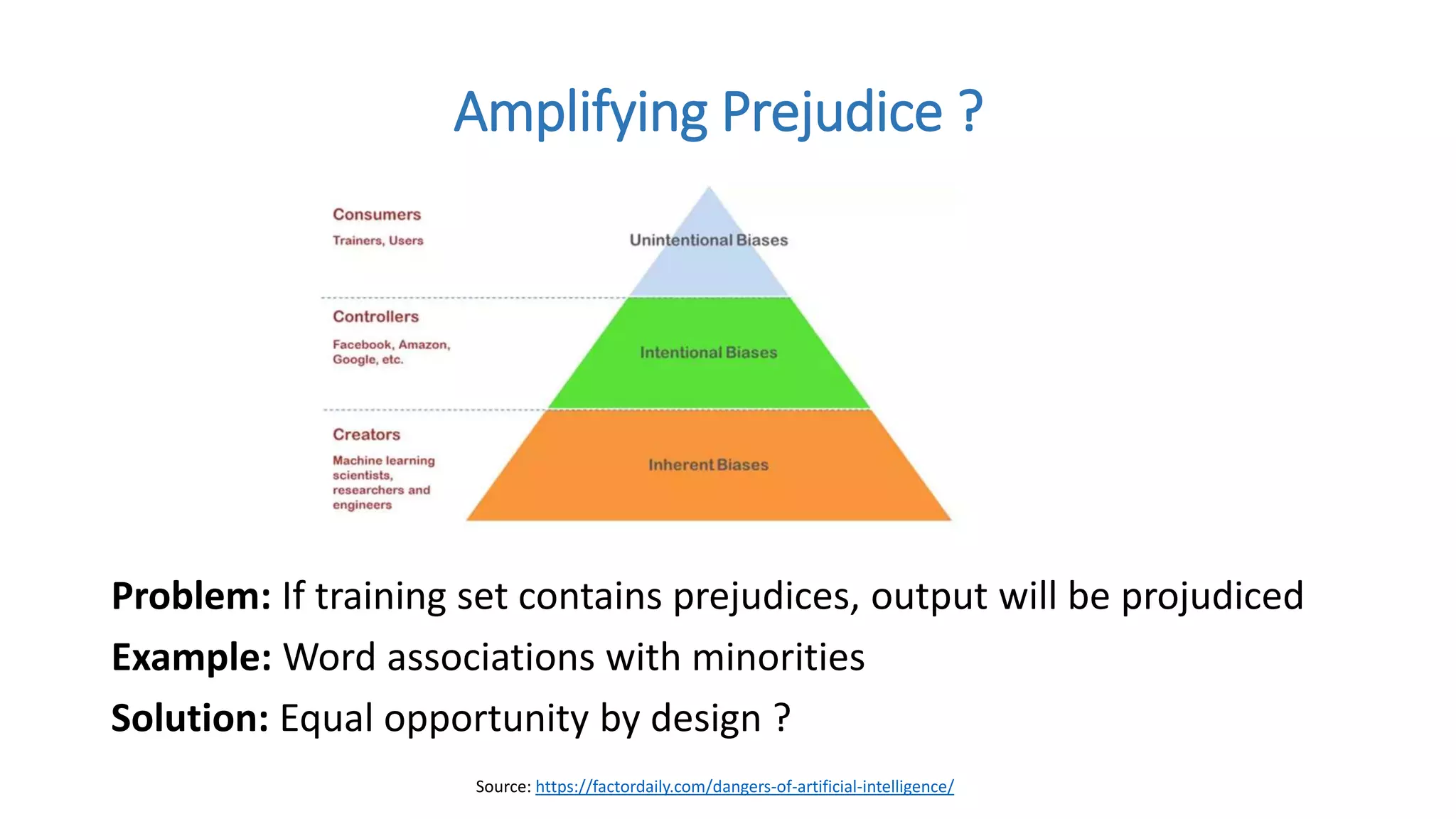

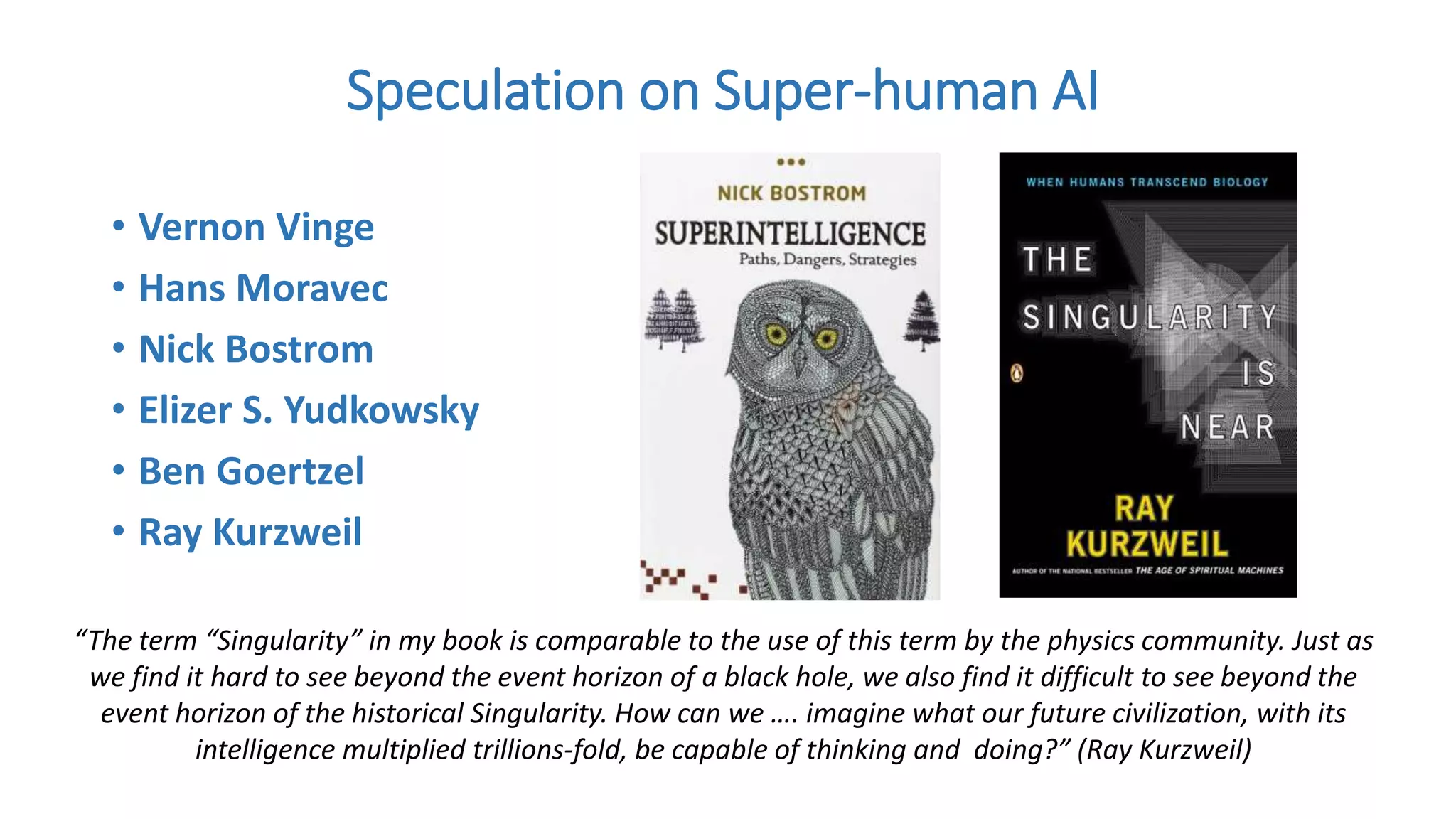

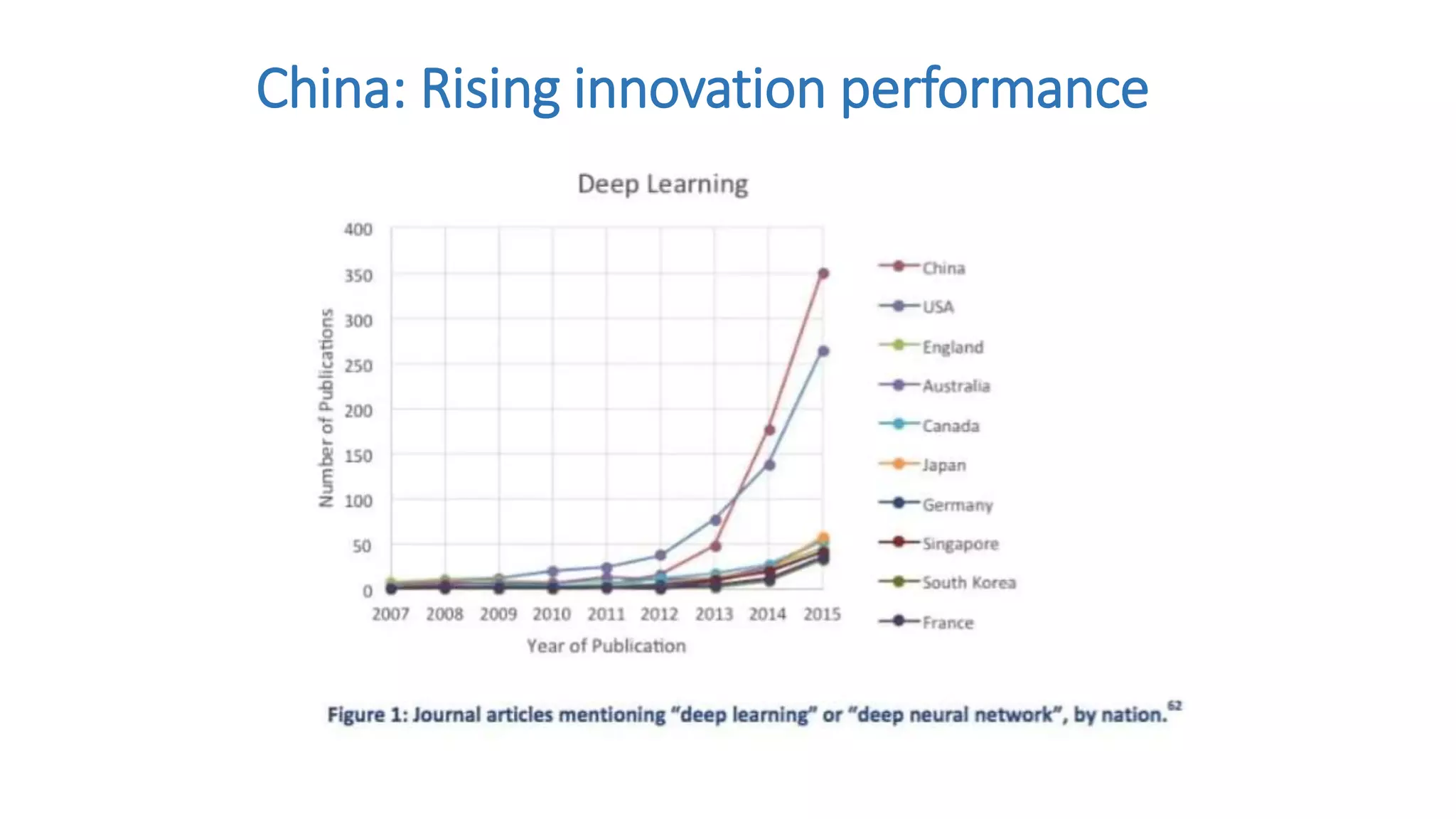

The document provides an extensive overview of artificial intelligence (AI) and machine learning (ML), covering their evolution, methodologies, and applications in fields such as finance, healthcare, and robotics. It highlights key concepts such as supervised and unsupervised learning, neural networks, and the challenge of bias in AI systems. It also touches on the philosophical implications of AI development, the ethical questions it raises, and the potential risks of superhuman AI.