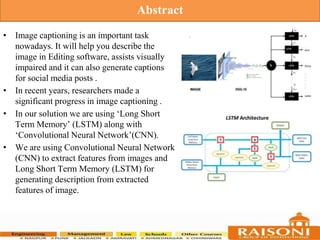

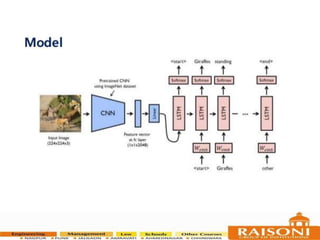

This document presents a project on image caption generation using deep learning and natural language processing. A convolutional neural network (CNN) extracts features from an image, and a long short-term memory (LSTM) network generates the caption by predicting one word at a time from those features. The project's objectives are to describe the contents of an image, demonstrate the effectiveness of LSTMs for this task, and build a working model. The proposed approach combines CNN, RNN, and LSTM models and uses the Flickr datasets. A survey of existing approaches and a list of references are also provided.
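The CNN-to-LSTM pipeline described above can be illustrated with a small, untrained sketch. It is not the project's model: it is written in pure Python so it runs without a deep learning framework, its weights are random, and a hand-written list stands in for the CNN feature vector. The names `TinyCaptioner` and `VOCAB`, the toy vocabulary, and the choice to inject the image features through the LSTM's initial hidden state (one common variant; the slides do not say which is used) are all hypothetical. Biases are omitted for brevity, and decoding is greedy.

```python
import math
import random

# Hypothetical toy vocabulary; index 0 is the start token, index 1 the end token.
VOCAB = ["<start>", "<end>", "a", "dog", "runs", "on", "grass"]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class TinyCaptioner:
    """Hypothetical, untrained image-features -> LSTM -> word decoder."""

    def __init__(self, feat_dim, embed_dim, hidden_dim, vocab, seed=0):
        rng = random.Random(seed)  # fixed seed so results are reproducible
        self.vocab = vocab
        self.hidden_dim = hidden_dim
        self.embed = rand_matrix(len(vocab), embed_dim, rng)  # word embeddings
        self.W_init = rand_matrix(hidden_dim, feat_dim, rng)  # CNN features -> h0
        # One weight matrix per gate, applied to [word embedding ; previous h].
        in_dim = embed_dim + hidden_dim
        self.W = {gate: rand_matrix(hidden_dim, in_dim, rng) for gate in "ifog"}
        self.W_out = rand_matrix(len(vocab), hidden_dim, rng)  # h -> word scores

    def lstm_step(self, x, h, c):
        z = x + h  # list concatenation of input embedding and previous hidden state
        i = [sigmoid(v) for v in matvec(self.W["i"], z)]  # input gate
        f = [sigmoid(v) for v in matvec(self.W["f"], z)]  # forget gate
        o = [sigmoid(v) for v in matvec(self.W["o"], z)]  # output gate
        g = [math.tanh(v) for v in matvec(self.W["g"], z)]  # candidate cell state
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h, c

    def generate(self, features, max_len=10):
        # Condition the LSTM on the image by deriving its initial hidden state
        # from the feature vector (assumed "init-inject" variant).
        h = [math.tanh(v) for v in matvec(self.W_init, features)]
        c = [0.0] * self.hidden_dim
        word = 0  # begin with the <start> token
        caption = []
        for _ in range(max_len):
            h, c = self.lstm_step(self.embed[word], h, c)
            scores = matvec(self.W_out, h)
            # Greedy choice: argmax of the raw scores equals argmax of their
            # softmax. Index 0 (<start>) is skipped as it is never a valid output.
            word = max(range(1, len(scores)), key=scores.__getitem__)
            if self.vocab[word] == "<end>":
                break
            caption.append(self.vocab[word])
        return caption


if __name__ == "__main__":
    features = [0.2, -0.1, 0.7, 0.05]  # stand-in for a CNN feature vector
    model = TinyCaptioner(feat_dim=4, embed_dim=3, hidden_dim=5, vocab=VOCAB)
    print(model.generate(features, max_len=8))
```

Because the weights are random, the printed "caption" is meaningless; in the actual project the CNN would supply real image features and the embedding, gate, and output weights would be learned from image-caption pairs such as those in the Flickr datasets.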

References

1. A. Chetan and J. Vaishli. Image caption generation using deep learning technique. Fourth International Conference on Computing Communication Control and Automation (ICCUBEA), 2018.
2. Huda A. Al-muzaini, Tasniem N., and Hafida B. Automatic Arabic image captioning using RNN-LSTM-based language model and CNN. International Journal of Advanced Computer Science and Applications (IJACSA), Vol. 9, No. 6, 2018.
3. J. Liu, G. Wang, P. Hu, L.-Y. Duan, and A. C. Kot. Global context-aware attention LSTM networks for 3D action recognition. CVPR, 2017.
4. J. Lu, C. Xiong, D. Parikh, and R. Socher. Knowing when to look: Adaptive attention via a visual sentinel for image captioning. CVPR, 2017.
5. S. J. Rennie, E. Marcheret, Y. Mroueh, J. Ross, and V. Goel. Self-critical sequence training for image captioning. CVPR, 2017.
6. I. Loshchilov and F. Hutter. SGDR: Stochastic gradient descent with warm restarts. ICLR, 2017.
7. J. Johnson, A. Karpathy, and L. Fei-Fei. DenseCap: Fully convolutional localization networks for dense captioning. CVPR, 2016.

![References](https://image.slidesharecdn.com/imagecaptioningusingdlandnlp-230406105742-6a39279a/85/Image-captioning-using-DL-and-NLP-pptx-12-320.jpg)